This Hybrid RAID NAS Server was an interesting project. It ties together several pieces of hardware that might be useful in other projects. The central piece is the CFI A7879 case (Fig. 1) from E-ITX. It is designed for a Mini-ITX motherboard and has room for a full-size expansion board. There are four hot-swap SATA drive bays (Fig. 2).

The system has actually wound up being a general-purpose server that is now central to the lab. It acts as an Internet gateway and file server, and it runs a number of virtual machines. But more about that later.

The CFI A7879 case is stylish, and it has a large system cooling fan that moves air across the four removable drive bays. It has space for a full-size expansion board. A two-drive version of the case is available.

The motherboard's expansion slot allowed me to take advantage of Adaptec's new 6805 SAS controller (Fig. 3). The 6805 handles up to 8 SAS or SATA drives directly and up to 256 drives using SAS expanders. It has a x8 PCI Express Gen 2 interface. There is a four-port version, the 6405E, but it has a x1 PCI Express interface that provides lower throughput. Both provide hybrid RAID 1 support, where a solid-state drive (SSD) and a hard drive are combined into a single RAID configuration. The Adaptec controller also supports RAID 5, 5EE, 50, 6, 60 and JBOD.

The motherboard for this project is Super Micro Computer's (Supermicro) X9SCV-QV4 Mini-ITX motherboard (Fig. 4). It targets a range of embedded applications from digital signage to 12V automotive. The motherboard can handle a range of Intel Core processors as well as the low-cost Celeron B800 series, but we equipped it with a top-end Core i7. This allows the NAS box to do a lot more.

The other main components in the project include Corsair's DDR3 SODIMMs, Micron's P300 RealSSD enterprise SSDs, and Seagate's Barracuda XT SATA hard-disk drives. The Corsair SODIMMs are available in sizes up to 8 Gbytes; we used a pair of 8-Gbyte modules, providing the operating system with 16 Gbytes of memory. The 2.5-in Micron P300 RealSSD drives are SLC flash with a SATA interface. The 3-Tbyte Seagate Barracuda XT drives are 3.5-in SATA hard disks. Each SSD was paired with a hard drive in a hybrid RAID 1 configuration.


System Configuration

The Supermicro motherboard uses the Intel QM67 chipset and arrived with an Intel Core i7 processor. The compact Mini-ITX motherboard has a pair of DDR3 SODIMM sockets that I filled with Corsair memory. It is easier to install the memory before installing the board in the case. Likewise, the cabling to the motherboard for the status LEDs, switches, etc. is easier to do before installing the expansion card. The motherboard has two SATA 3 and four SATA 2 interfaces that were not used. If hybrid RAID support is not needed, then these interfaces could be used instead, freeing the PCI Express slot for another card such as a video tuner, video capture card or data acquisition card.

The Adaptec controller was installed after the motherboard. A single hydra cable connects the controller to the four SATA connections for the removable drive bays (Fig. 5). Adaptec also provided its Zero-Maintenance Cache Protection option. It consists of an external supercap with a cable and a card that plug into the controller. I have already had plenty of chances to check out this feature, since we have lost power a number of times due to the weather in the Philadelphia area.

Two of the removable drive slots were used for the 2.5-in Micron P300 RealSSD drives. The other pair housed the 3.5-in Seagate Barracuda XT drives. These days the drives tend to be bolted into the case since the hot-swap connector position is now standard. The drive trays have mounting holes for 2.5- and 3.5-in drives. There is no lock on the drives or main door, but this type of system is not designed for physical security.

Actual construction time for the system was very short. Configuring the hard drives using the Adaptec controller BIOS actually took longer. The BIOS is relatively easy to use and provides defaults for faster configuration.

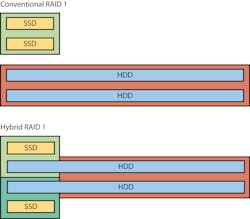

Two basic configurations are reasonable with the drives used in the project. One is to mirror the two SSDs and mirror the two HDDs (Fig. 6), although this does not take advantage of the hybrid RAID support. For the hybrid RAID configuration, each of the smaller SSDs is paired with one of the larger hard drives. The hybrid RAID configuration provides two RAID 1 partitions that are the same size as the SSDs, 100 Gbytes in this case. These can be designated as two separate virtual drives or combined into one 200-Gbyte virtual drive using a RAID 10 configuration. The remaining hard-drive space can then be used in a RAID 1 mirror configuration or as RAID 0, which does not provide any redundancy.
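The capacity arithmetic behind this layout can be sketched in a few lines of Python. This is a minimal sketch assuming decimal units (1 Tbyte = 1000 Gbytes) and ignoring any space the controller reserves for RAID metadata; the function and key names are hypothetical.

```python
# Capacity sketch for the hybrid RAID layout described above.
# Assumptions: decimal units (1 Tbyte = 1000 Gbytes) and no allowance for
# RAID metadata overhead. Names are illustrative, not controller settings.

SSD_GB = 100    # Micron P300 RealSSD capacity used in this project
HDD_GB = 3000   # Seagate Barracuda XT capacity (3 Tbytes)


def hybrid_layout(ssd_gb: int, hdd_gb: int, leftover_level: str = "RAID 1") -> dict:
    """Usable capacity (Gbytes) of two SSD+HDD hybrid RAID 1 pairs plus the
    hard-drive space left over after each pair is carved out."""
    # A RAID 1 mirror can be no larger than its smaller member, the SSD here.
    pair_gb = min(ssd_gb, hdd_gb)
    leftover_per_hdd = hdd_gb - pair_gb

    if leftover_level == "RAID 1":
        leftover_gb = leftover_per_hdd        # mirrored: one copy is usable
    elif leftover_level == "RAID 0":
        leftover_gb = 2 * leftover_per_hdd    # striped: all space usable, no redundancy
    else:
        raise ValueError("leftover_level must be 'RAID 1' or 'RAID 0'")

    return {
        "two_separate_drives": [pair_gb, pair_gb],  # two hybrid virtual drives
        "raid10_drive": 2 * pair_gb,                # or one striped RAID 10 drive
        "leftover": leftover_gb,
    }


if __name__ == "__main__":
    print(hybrid_layout(SSD_GB, HDD_GB))
    # {'two_separate_drives': [100, 100], 'raid10_drive': 200, 'leftover': 2900}
    print(hybrid_layout(SSD_GB, HDD_GB, "RAID 0")["leftover"])  # 5800
```

With the project's drives, the leftover hard-drive space is 2,900 Gbytes when mirrored, or 5,800 Gbytes when striped with no redundancy.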

The desired software can be installed once the virtual disk drives are defined. I tested the system using Microsoft's Windows Server and then replaced it with CentOS 6 (a free version of Red Hat Enterprise Linux).

System Software

Adaptec's controllers are so new that their drivers are not included on the installation disks for either of the selected operating systems. I had to add the latest Adaptec drivers during the installation process. There was no issue with Microsoft Windows, but I did run into a configuration issue with CentOS because the drivers for Red Hat Enterprise Linux (RHEL) were not automatically recognized by the CentOS installation process. A little manual work fixed the issue, and I have been running it ever since.

I installed the Adaptec Storage Manager (ASM) after the operating systems were installed. ASM provides real-time control of the local controller and of any Adaptec controllers reachable over the network. I now use ASM from a workstation that has no Adaptec controller to manage the two servers that do. The most useful aspect of the tool is the status display while a RAID partition is being rebuilt after a drive has been replaced. This was easily tested by pulling one of the drives and popping it back in. The system continued to run as long as the other drives continued to work properly. Surviving the removal of two drives at once requires RAID 6 with a sufficient number of drives.
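The drive-pull test above follows from how many simultaneous failures each RAID level can absorb. The sketch below encodes the generic textbook rules as a lookup table; it is an illustration under those assumptions, not data read from ASM or the Adaptec firmware, and the names are hypothetical.

```python
# Guaranteed drive-failure tolerance for common RAID levels.
# Generic textbook rules, not values reported by Adaptec Storage Manager.

RAID_RULES = {
    # level: (minimum drives, drives that can fail with guaranteed no data loss)
    "RAID 0": (2, 0),
    "RAID 1": (2, 1),   # two-way mirror
    "RAID 5": (3, 1),
    "RAID 6": (4, 2),   # dual parity
    "RAID 10": (4, 1),  # more may survive if failures hit different mirrors
}


def survives_pull(level: str, drives: int, pulled: int) -> bool:
    """Return True if the array is guaranteed to keep running after
    `pulled` drives are removed at the same time."""
    min_drives, tolerance = RAID_RULES[level]
    if drives < min_drives:
        raise ValueError(f"{level} needs at least {min_drives} drives")
    return pulled <= tolerance


if __name__ == "__main__":
    print(survives_pull("RAID 1", 2, 1))  # True: the mirror keeps running
    print(survives_pull("RAID 1", 2, 2))  # False: both copies are gone
    print(survives_pull("RAID 6", 4, 2))  # True: dual parity covers two drives
```

Note that RAID 10 is listed with its guaranteed tolerance of one drive; it can sometimes survive two failures, but only when they land in different mirror pairs.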

The final configuration used CentOS 6 with its KVM virtualization support. I took advantage of the dual Gigabit Ethernet interfaces to run a NAT gateway in a virtual machine (VM). The VM had access to both Ethernet bridge interfaces. This approach had the advantage of letting me check out half a dozen gateway packages. Testing one was simply a matter of downloading an ISO image or a KVM image.
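The article does not show the host network setup. As a hedged sketch, assuming the CentOS/RHEL 6 network-scripts approach with NICs named eth0/eth1 and bridges named br0/br1 (all hypothetical names), this Python helper renders one ifcfg file pair per NIC, so each physical port becomes a bridge that a gateway VM can attach to.

```python
# Hypothetical helper that renders CentOS/RHEL 6 style network-scripts files,
# binding each physical NIC to its own bridge so a gateway VM can use both.
# Interface names (eth0/eth1, br0/br1) are assumptions; adjust to your hardware.


def bridge_ifcfg(nic: str, bridge: str, bootproto: str = "none") -> dict:
    """Return {filename: contents} for one NIC enslaved to one bridge.

    bootproto="none" leaves the host without an address on this bridge
    (e.g. the WAN side); "dhcp" gives the host an address on it.
    """
    bridge_cfg = "\n".join([
        f"DEVICE={bridge}",
        "TYPE=Bridge",            # value is written exactly as "Bridge"
        "ONBOOT=yes",
        f"BOOTPROTO={bootproto}",
        "DELAY=0",
        "NM_CONTROLLED=no",
    ])
    nic_cfg = "\n".join([
        f"DEVICE={nic}",
        "ONBOOT=yes",
        f"BRIDGE={bridge}",       # attach the physical port to the bridge
        "NM_CONTROLLED=no",
    ])
    return {f"ifcfg-{bridge}": bridge_cfg + "\n", f"ifcfg-{nic}": nic_cfg + "\n"}


if __name__ == "__main__":
    # WAN side: host takes no address; LAN side: host gets one via DHCP.
    for nic, br, proto in (("eth0", "br0", "none"), ("eth1", "br1", "dhcp")):
        for name, text in bridge_ifcfg(nic, br, proto).items():
            print(f"# /etc/sysconfig/network-scripts/{name}")
            print(text)
```

Each VM's libvirt definition would then reference a bridge by name, for example an `<interface type='bridge'>` element containing `<source bridge='br0'/>`, which is how a VM is placed on one side of the gateway or the other.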

Of course, only one gateway VM should run at a time, since one side of the gateway had a fixed IP address. I finally wound up using Untangle for the gateway. I may get around to writing about the other gateway software I tried, which included the likes of pfSense and IPFire.
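The one-gateway-at-a-time rule can be expressed as a simple pre-start check. This is a minimal sketch assuming every candidate gateway VM is configured with the same fixed WAN address; the address (from the 192.0.2.0/24 documentation range) and the `GatewayVM`/`start` names are hypothetical, and nothing here calls the real KVM or libvirt tools.

```python
# Sketch of the "one gateway at a time" rule: each candidate gateway VM uses
# the same fixed WAN address, so starting a second one would cause an address
# conflict. VM names come from the article; the address is hypothetical.

from dataclasses import dataclass


@dataclass
class GatewayVM:
    name: str
    wan_ip: str
    running: bool = False


def start(vm: GatewayVM, fleet: list) -> None:
    """Start `vm` only if no other running VM already holds its fixed WAN address."""
    for other in fleet:
        if other is not vm and other.running and other.wan_ip == vm.wan_ip:
            raise RuntimeError(f"{other.name} already owns {vm.wan_ip}; stop it first")
    vm.running = True


if __name__ == "__main__":
    fleet = [GatewayVM(n, "192.0.2.10") for n in ("untangle", "pfsense", "ipfire")]
    start(fleet[0], fleet)
    try:
        start(fleet[1], fleet)
    except RuntimeError as err:
        print(err)  # untangle already owns 192.0.2.10; stop it first
```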

I am using a Samba server, also running in a VM, to provide access to the rest of the disk space. This let me minimize the host configuration. I used Linux's LVM (Logical Volume Manager) to manage the disk storage. In the case of the Samba server, its main disk partition uses the hybrid RAID storage, while the network shares are on the hard-drive RAID 1 partition.

I do have a couple of other virtual machines running some LAMP servers. The nice thing about the configuration is that it is easy to put them on either side of the gateway since it is simply a matter of having them use the appropriate Ethernet bridge device.

The final system has been a joy to use and it has been very reliable. I run the system as a headless server running multiple VMs as already noted. Performance is excellent and the hybrid RAID system provides the desired redundancy.

Mini-ITX RAID Case Sports Full Size Slot

The CFI (Chyang Fun Industry) A7879 Mini-ITX Server Case (Fig. 1), available from E-ITX, supports four hot-swap drives and a Mini-ITX motherboard with a full-size expansion board without resorting to a riser card. The platform is ideal for a compact NAS (network attached storage) device or data acquisition system. I used the CFI A7879 with Super Micro Computer's X9SCV-QV4 Mini-ITX motherboard.

I have done a number of projects, including one based on VIA Technologies' ArTiGO 2000 (see Nice NAS). The ArTiGO 2000 was a smaller system, but its dual hard drives were not removable.

I really like the CFI A7879 because it has space for a full size expansion board. This is a x16 PCI Express slot with the Supermicro X9SCV-QV4. It might be a PCI slot if you use an older Mini-ITX board.

The primary reason for choosing the CFI A7879 is obviously the four removable storage slots (Fig. 2). The storage bays support 3.5- and 2.5-in hard drives (Fig. 3). Still, the ability to include a full-size board is significant. It allows the system to be used as a powerful desktop system with a heftier video card. Another alternative is a data acquisition board.

For my project I filled the PCI Express slot with an Adaptec 6805 RAID controller (Fig. 4). The Supermicro X9SCV-QV4 motherboard supports some RAID configurations but the Adaptec board is significantly more powerful and flexible. Its hybrid mode is ideal if there is a mix of flash and hard drives being used. The Adaptec 6805 controller also has a supercap-based backup system that the motherboard lacks.

The case comes with a 200W FlexATX power supply that is sufficient to handle an Intel Core i7 processor, so a significant amount of compute power can be paired with the storage. The power supply has a tiny fan, but it is augmented by a large 120mm system fan. That fan sits directly behind the drives, and the drive backplane is split to allow better airflow. Its large size lets the fan run more slowly and quietly.

The front panel has two USB ports along with a pair of drive status LEDs. There is a power LED, reset and power switch blended into the front panel as well.

The standard CFI A7879 system has a SATA backplane. There is a 2 drive version that is shorter. There is also a SAS backplane version but the minimum order quantity (MOQ) is 5000 units.

If you are developing a system that needs removable storage then definitely check out the CFI A7879.

Hands-On A Hybrid SAS RAID Adapter

I have been using the Adaptec RAID 6805 controller (see Low Cost SAS RAID Controller Handles SDD/HDD Mix), part of PMC-Sierra's low-cost, entry-level Series 6, for a couple of months now and have been very impressed with its price/performance and flexibility. The eight-port 6805 (Fig. 1) has a x8 PCI Express 2.0 interface with a bandwidth of 1600 Mbytes/s. The 6405E is the four-port version, with a x1 PCI Express 2.0 interface and a bandwidth of 400 Mbytes/s. The Series 6 uses PMC-Sierra's PM8013 SRC 6Gbit/s RAID-on-Chip.

The Series 6 brings a number of features to the entry-level environment, including a RAM cache that can be backed up using a supercap and the hybrid RAID support we will be looking at here. The 128 Mbytes of DDR2-800 DRAM cache provides a significant performance boost. This approach is quite common on higher-end SAS RAID controllers.

The boards support SAS and SATA solid-state disks (SSDs) and hard-disk drives (HDDs). They support RAID 0, 1, 1E, 10 and JBOD. They also provide hybrid RAID support that combines the best of SSDs and HDDs.

The Disk Drives

I used the system with a pair of 100-Gbyte, 2.5-in Micron P300 RealSSD SATA SLC SSDs and a pair of 3-Tbyte, 3.5-in Seagate Barracuda XT SATA HDDs. Both provide 6 Gbit/s transfer rates. The Barracuda XT has a 64-Mbyte cache. The 7200-rpm drive provides a good mix of capacity and performance. It targets consumer applications. I have some Seagate Constellation ES enterprise drives that would provide a more robust solution, but they were already in use.

The Micron P300 RealSSD drive is designed for enterprise applications. It has steady-state sequential read/write performance of 360/255 Mbytes/s. Its random read/write performance is 44,000/16,000 IOPS. The drive supports secure erase and has static and dynamic wear leveling. The endurance of the 100-Gbyte drive is 1.75 Pbytes. The drive weighs only 100 g.
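The 1.75-Pbyte endurance figure is easier to judge when expressed as full-drive writes. The worked arithmetic below uses decimal units, and the 5-year service period is an assumption introduced only to convert the rating into drive writes per day (DWPD); it is not a Micron specification.

```python
# Worked arithmetic for the quoted endurance of the 100-Gbyte P300.
# Decimal units throughout; the 5-year service period is an assumption.

CAPACITY_GB = 100
ENDURANCE_PB = 1.75
SERVICE_YEARS = 5  # assumption used only to express the rating as DWPD


def full_drive_writes(capacity_gb: float, endurance_pb: float) -> float:
    """Number of complete overwrites of the drive the endurance rating allows."""
    return endurance_pb * 1_000_000 / capacity_gb  # 1 Pbyte = 1,000,000 Gbytes


def drive_writes_per_day(capacity_gb: float, endurance_pb: float, years: float) -> float:
    """Average full-drive writes per day that would use up the rating in `years`."""
    return full_drive_writes(capacity_gb, endurance_pb) / (years * 365)


if __name__ == "__main__":
    print(full_drive_writes(CAPACITY_GB, ENDURANCE_PB))  # 17500.0
    print(round(drive_writes_per_day(CAPACITY_GB, ENDURANCE_PB, SERVICE_YEARS), 1))  # 9.6
```

In other words, the drive could be completely rewritten roughly nine to ten times a day for five years before reaching its rated endurance.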

I checked out the system using the drives in a number of combinations (Fig. 2). Virtual drives are configured using the RAID controller's BIOS interface. The standard configuration mirrors the two SSDs and the two HDDs as separate RAID 1 pairs. Any SAS RAID controller could handle this configuration. The performance of the Micron P300s (Fig. 3) is great, but I was able to get the same kind of performance using the hybrid RAID configuration.

For the hybrid configuration, I paired an SSD with an HDD to create a 100-Gbyte virtual drive. Doing this with both pairs resulted in two 100-Gbyte virtual drives. The remaining space on the HDDs (3 Tbytes less 100 Gbytes) became a third virtual drive. Two of the three virtual drives were essentially as fast as the RealSSD drive, and the third was slower but much larger. In hybrid mode, data is written to both drives, but it is read from the faster flash drive. RAID 10 could have been used to provide a fast 200-Gbyte RAID configuration.
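The hybrid RAID 1 behavior described above (write to both members, read from flash) can be illustrated with a toy model. This is a conceptual sketch only, not Adaptec's firmware logic: real controllers also deal with caching, error handling and rebuilds, and the `HybridMirror` class and its attributes are hypothetical.

```python
# Conceptual model of hybrid RAID 1: writes go to both members, reads are
# served from the SSD, with the HDD as the fallback. Not Adaptec's firmware.


class HybridMirror:
    def __init__(self) -> None:
        self.ssd: dict = {}          # logical block address -> data
        self.hdd: dict = {}
        self.ssd_failed = False
        self.reads_from_ssd = 0
        self.reads_from_hdd = 0

    def write(self, lba: int, data: bytes) -> None:
        # Mirroring: every write lands on both drives, so each holds a full copy.
        if not self.ssd_failed:
            self.ssd[lba] = data
        self.hdd[lba] = data

    def read(self, lba: int) -> bytes:
        # Reads prefer the faster flash member; fall back to the HDD if it is gone.
        if not self.ssd_failed:
            self.reads_from_ssd += 1
            return self.ssd[lba]
        self.reads_from_hdd += 1
        return self.hdd[lba]


if __name__ == "__main__":
    vd = HybridMirror()
    vd.write(0, b"boot")
    print(vd.read(0), vd.reads_from_ssd)   # b'boot' 1
    vd.ssd_failed = True                   # simulate pulling the SSD
    print(vd.read(0), vd.reads_from_hdd)   # b'boot' 1
```

In this simple model reads run at flash speed, while every write must also reach the hard drive; a controller's write-back cache can hide much of that write cost.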

Configuring the drives was the easy part. The Series 6 is so new that most operating systems do not bundle its drivers, which means installing the drivers from CD. I tested the system using CentOS 6, a free version of Red Hat Enterprise Linux 6 (RHEL6). I did run into an issue because the standard configuration was not recognized, so I had to run the pre- and post-install scripts manually. The system worked just fine once the drivers were installed.

I also installed the multiplatform, Java-based, Adaptec Storage Manager (ASM). This management tool works with the device driver and a monitor daemon to handle the controller when the operating system is up and running. I have three Adaptec controllers spread across various servers in the lab and was able to manage them all from a single console. It is very handy when a drive fails and the alarm on one of the servers goes off.

One of the reasons the Series 6 gets such good performance is the on-board RAM. It is definitely worth the money to invest in the Zero Maintenance Cache Protection supercap. It protects the contents of the RAM cache when power fails, and it charges quickly, unlike a backup battery.
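Why cache protection matters can be shown with a toy write-back cache. This is a conceptual sketch under simplifying assumptions (writes are acknowledged once they reach controller RAM, and a protected cache is written out after power returns); the `WriteBackCache` class is hypothetical and does not model how Adaptec's supercap module works internally.

```python
# Conceptual sketch of a write-back cache with and without power-loss protection.
# Hypothetical names; not a model of Adaptec's cache-protection hardware.


class WriteBackCache:
    def __init__(self, protected: bool) -> None:
        self.protected = protected  # e.g. a supercap-backed cache
        self.dirty: dict = {}       # writes acknowledged but not yet on disk
        self.disk: dict = {}

    def write(self, lba: int, data: bytes) -> None:
        self.dirty[lba] = data      # the host sees the write complete immediately

    def flush(self) -> None:
        self.disk.update(self.dirty)
        self.dirty.clear()

    def power_loss(self) -> None:
        if self.protected:
            self.flush()            # protected contents survive and reach the disk
        else:
            self.dirty.clear()      # unflushed, already-acknowledged writes are lost


if __name__ == "__main__":
    for protected in (False, True):
        cache = WriteBackCache(protected)
        cache.write(1, b"invoice")
        cache.power_loss()
        print(protected, 1 in cache.disk)  # False False, then True True
```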

Adaptec's 6805 definitely ups the ante for low- to mid-range servers. It was easy to set up and delivered the kind of performance I expected. Check it out if you need performance on a budget.

Mini-ITX Motherboard Handles 12V Environments

Super Micro Computer is well known for high-performance servers and high-end embedded systems like the SuperServer 6046T-TUF (see 4U Super Server For Embedded Systems). Super Micro Computer's X9SCV-QV4 Mini-ITX motherboard (Fig. 1) targets smaller systems and is available in a 12V version that is ideal for mobile or transportation applications. The motherboard's Socket G2 handles Core i7/i5/i3 Mobile processors. It uses Intel's QM67 chipset, which includes vPro technology (see Corporate Processors Great For Embedded Chores) that supports remote management. It also handles dual displays, including HDMI devices up to 1080p. The DisplayPort interfaces can handle resolutions up to 2560 by 1600 pixels.

I used the X9SCV-QV4 in a hands-on project in conjunction with the CFI (Chyang Fun Industry) A7879 Mini-ITX Server Case (see Mini-ITX RAID Case Sports Full Size Slot), available from E-ITX. The case supports four hot-swap drives and, not surprisingly, Mini-ITX motherboards. The key is a width and orientation that allow a full-size expansion board to be plugged into the X9SCV-QV4's x16 PCI Express expansion slot.

Overall, the X9SCV-QV4 is a very nice motherboard. I tried it with an Intel Core i7 processor, which provides plenty of performance for an application server while needing only a small cooling fan. The motherboard works equally well with a cooler-running Core i3 processor, which would suit some of the more mobile applications running off a 12V power source. Memory-wise, the motherboard supports up to 16 Gbytes of non-ECC unbuffered DDR3 1333/1066MHz memory using a pair of SO-DIMM sockets. It also has a UEFI BIOS, allowing it to handle larger hard drives, such as boot drives larger than 2 Tbytes.

There are plenty of on-board interfaces. The dual HDMI interfaces have already been mentioned. The dual-display support can also take advantage of the VGA and LVDS interfaces.

For storage, there are four SATA2 3Gbit/s ports that support RAID 0, 1, 5 and 10. Two additional SATA3 6Gbit/s ports support RAID 0 and 1. A number of USB headers can also be used for internal flash storage, and there is a Disk-on-Module (DOM) power connector.

The communication support includes two COM ports, one on the rear panel and one via a header. Ethernet support includes a pair of Gigabit Ethernet ports based on Intel 82579LM and 82574L chips. There are 11 USB 2.0 ports, with 6 on the rear panel. Internally, there are four additional ports plus an internal Type-A connector. There is also TPM 1.2 support.

The X9SCV-QV4 has proven to be quite flexible. It provides plenty of storage options as well as nice built-in display and networking capabilities. Just the thing for your next Mini-ITX project.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send it to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.