Take Chip Package Co-Design Modeling From Concept To System Qualification

The term chip-package co-design implies that many activities must happen simultaneously to ensure the first-pass success of a packaged device with minimum trial-and-error testing. This goal is easier to achieve when all pertinent care-abouts are considered during the earliest phases of product definition.

For example, if a high-complexity logic device is under evaluation for a portable application, several considerations must be understood alongside the definition of the device functionality. These considerations include form factor, allowable cost, power dissipation, electrical signal integrity, reliability goals, minimum printed-circuit board (PCB) pitch requirements, fan-out design rules, warpage that might impact surface-mount yields, shielding needs, and rework.

The list of interactions is daunting, with multiple variables and tradeoffs. Software tools are necessary to accurately estimate the impact of the variables, and reliable material properties are required as input. The engineering team must then judge the tools’ outputs to strike the best balance of performance, assigning appropriate weighting factors to requirements that sometimes conflict.

Assessing Design Decisions And Possible Issues

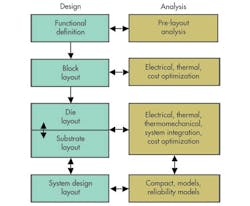

In a typical co-design flow, pre-layout tools are applied to assess such factors as overall system power budget, I/O placement optimization, and basic reliability during functional definition. Electrical and thermal optimization is applied to the block definition of the chip. Iterations are performed for the full chip and package layout, with compact models produced for use by the system designer.

A typical chip-package co-design flow includes different levels of interaction at various phases of the design.

Consider the design of an IC die for a portable application where physical size is normally an overriding consideration. To meet the need for smaller, thinner portable devices, semiconductor and packaging developers must reduce the thickness of substrates, die, and mold caps. But these steps can sometimes present difficult challenges.

One issue is that thinner packages can be more susceptible to warpage, which can negatively impact surface-mount yields if it isn’t controlled. Stress analysis can be used to optimize the materials selection to ensure minimum warpage.

The material properties needed for this type of analysis include properties of the substrate core, build up, underfill or die attach, and any mold compound. Simple coefficients of expansion and modulus values are not adequate. Instead, full viscoelastic and viscoplastic properties are needed, with cure shrinkage and moisture swelling factors as well.
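For intuition only, the link between thinning and warpage can be sketched with the classical Timoshenko bimaterial-strip formula. This is a first-order estimate that uses exactly the simple expansion coefficients and moduli described above as inadequate for real analysis, so it is not a substitute for a viscoelastic stress simulation. The function names and all material values below are illustrative placeholders, not data from any specific package.

```python
def bimaterial_curvature(alpha1, alpha2, e1, e2, t1, t2, delta_t):
    """Curvature (1/m) of a two-layer strip after a temperature change.

    Timoshenko bimaterial-strip formula, linear-elastic layers only.
    alpha: CTE (1/K), e: Young's modulus (Pa), t: thickness (m), delta_t: K.
    """
    m = t1 / t2          # thickness ratio
    n = e1 / e2          # modulus ratio
    h = t1 + t2          # total thickness
    numerator = 6.0 * (alpha2 - alpha1) * delta_t * (1.0 + m) ** 2
    denominator = h * (3.0 * (1.0 + m) ** 2
                       + (1.0 + m * n) * (m ** 2 + 1.0 / (m * n)))
    return numerator / denominator


def bow_over_span(curvature, span):
    """Small-deflection bow (m) of an arc with the given curvature over a chord."""
    return curvature * span ** 2 / 8.0


# Placeholder values (assumptions): a silicon-like layer on a mold-compound-like
# layer, cooled by 150 K, measured across a 10 mm span.
ALPHA_DIE, ALPHA_MOLD = 2.6e-6, 10e-6   # 1/K
E_DIE, E_MOLD = 130e9, 20e9             # Pa
for scale in (1.0, 0.5, 0.25):          # progressively thinner stack
    t_die, t_mold = 300e-6 * scale, 400e-6 * scale
    kappa = bimaterial_curvature(ALPHA_DIE, ALPHA_MOLD, E_DIE, E_MOLD,
                                 t_die, t_mold, -150.0)
    bow_um = abs(bow_over_span(kappa, 10e-3)) * 1e6
    print(f"stack {1e6 * (t_die + t_mold):5.0f} um thick: bow ~ {bow_um:6.1f} um")
```

In this simplified model, scaling both layer thicknesses down by the same factor raises the curvature by that factor, which is one reason thinner stacks tend to warp more for the same material mismatch.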

Thinner die can also increase thermal management challenges. Silicon is a good thermal conductor, normally spreading heat laterally away from circuit hot spots. But when silicon is thinned, lateral thermal resistance increases, which in turn reduces lateral conduction, drives up the temperatures of power-dense regions, and creates hot spots.

There is a solution, however. Thermal modeling, used in conjunction with a system thermal understanding, can identify cooling options such as thermal via placement and help direct the locations and distributions of circuit blocks on the die.
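The effect of die thinning on lateral heat spreading can be illustrated with a one-dimensional conduction estimate, R = L / (k · W · t), for a strip of silicon of length L, width W, and thickness t. This is a rough sketch rather than a thermal model: the function name, the strip dimensions, and the conductivity of roughly 150 W/(m·K) for silicon near room temperature are assumptions chosen for illustration, and real die see three-dimensional spreading and temperature-dependent conductivity.

```python
def lateral_thermal_resistance(length_m, width_m, thickness_m, k_w_per_mk=150.0):
    """1D conduction resistance (K/W) along a slab: R = L / (k * W * t).

    k_w_per_mk defaults to an approximate room-temperature value for silicon
    (an assumption; conductivity falls as temperature rises).
    """
    if min(length_m, width_m, thickness_m, k_w_per_mk) <= 0:
        raise ValueError("all dimensions and conductivity must be positive")
    return length_m / (k_w_per_mk * width_m * thickness_m)


# Hypothetical heat path: 2 mm long, 1 mm wide, at several die thicknesses.
for thickness_um in (775, 300, 100, 50):
    r = lateral_thermal_resistance(2e-3, 1e-3, thickness_um * 1e-6)
    print(f"{thickness_um:4d} um die: lateral resistance ~ {r:7.1f} K/W")
```

Because the resistance is inversely proportional to thickness in this estimate, halving the die thickness doubles the lateral resistance of the same heat path.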

After initial decisions are made for a design, some changes will be required. I/O placement schemes can change, circuit blocks might be rearranged for timing reasons, and die partitioning may occur where some functions are moved to companion die or companion die are merged together.

Each of these steps requires feedback from electrical, thermal, or thermomechanical design tools. Ease of geometrical data transfer at these stages is an important consideration for tool selection. If the tools need too much human intervention for geometry transfer, fewer iterative design cycles can be completed, which limits how far the design can be optimized.

The Validation Process

At the final stage of the design, before tooling is started, a final-pass simulation ensures all concerns have been adequately addressed. Here, to an even greater extent than in earlier phases, it is essential that modeling tools and methodologies have been validated, especially if the tools have been used to establish specification limits for the design. Lack of validation can result in costly design respins months after tooling has started.

These delays can prove catastrophic to products in the highly competitive electronics market. In fact, a recent iNEMI task force estimated that missing a design cycle on a high-volume product can easily cost $500 million in lost opportunity, as well as lost engineering resources. As such, any team relying on co-design should adequately validate the tools.

The validation process includes running benchmarks, comparing tool outcomes to analytical results, matching tool calculations to bench experiments, validating the reliability conclusions of the tool, and performing mesh sensitivity studies to ensure the physics are adequately captured by the discretization.
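Two of these checks, comparison against an analytical result and a mesh sensitivity study, can be sketched on a toy problem. The example below is a minimal illustration, not a stand-in for any commercial tool: it solves steady 1D conduction, -k T'' = q0 sin(πx/L) with T = 0 at both ends, by finite differences and compares the peak temperature rise with the closed-form value q0 L² / (π² k). The function names and parameter values are hypothetical.

```python
import math


def peak_temperature_exact(length=1.0e-3, k=150.0, q0=1.0e9):
    """Closed-form peak rise (K) for -k T'' = q0 sin(pi x / L), T(0) = T(L) = 0."""
    return q0 * length ** 2 / (math.pi ** 2 * k)


def peak_temperature_fd(n_cells, length=1.0e-3, k=150.0, q0=1.0e9):
    """Peak rise (K) from a central-difference solve on n_cells uniform cells."""
    if n_cells < 2:
        raise ValueError("need at least 2 cells")
    h = length / n_cells
    n = n_cells - 1  # number of interior nodes
    # Discrete equations: -T[i-1] + 2 T[i] - T[i+1] = h^2 q_i / k
    rhs = [h * h * q0 * math.sin(math.pi * (i + 1) * h / length) / k
           for i in range(n)]
    # Thomas algorithm for the tridiagonal system (sub = -1, diag = 2, super = -1)
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = -1.0 / 2.0
    d_prime[0] = rhs[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c_prime[i - 1]
        c_prime[i] = -1.0 / denom
        d_prime[i] = (rhs[i] + d_prime[i - 1]) / denom
    temps = [0.0] * n
    temps[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        temps[i] = d_prime[i] - c_prime[i] * temps[i + 1]
    return max(temps)


# Mesh sensitivity study: refine until the answer stops changing meaningfully.
exact = peak_temperature_exact()
for n_cells in (8, 16, 32, 64):
    approx = peak_temperature_fd(n_cells)
    rel_err = abs(approx - exact) / exact
    print(f"{n_cells:3d} cells: peak = {approx:.6f} K, relative error = {rel_err:.2e}")
```

For this second-order scheme the error should fall by roughly a factor of four each time the cell count doubles. A result that fails to converge at the expected rate, or that converges to something other than the analytical value, is the kind of discrepancy such a benchmark is meant to expose.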

The suite of benchmark studies should be run after each software release, since bugs that escape the tool vendor’s notice sometimes creep into the code. Often, the software vendor can quickly correct bugs found through such benchmarking. But in some cases, bugs aren’t corrected for many releases. Responsiveness of tool vendors in correcting errors should be a top criterion in the selection of tools, especially when the success of the enterprise depends on it.

Don’t Overestimate Software Analysis

The need for knowledgeable experts throughout this process can’t be overemphasized. State-of-the-art software currently supplies numbers, which is extremely important. But there is a strong need for knowledgeable experts to evaluate the data and draw conclusions related to the design optimization. In other words, the modeling engineer should supply solutions, not numbers.

Co-design engineers should be open to innovative ways to overcome problems, suggest alternatives, make engineering judgments, question whether all issues are addressed, and be as knowledgeable as possible about other fields so they can assess how suggestions will impact others throughout the design chain.

When all the ingredients for effective co-design are in place, design cycles are shortened, respins are eliminated, end performance is improved, and better products result. The advantages are so compelling that most design teams use some form of co-design. With additional strengthening of the methodology, further product and flow improvements are possible.

About the Author

Darvin Edwards

Darvin Edwards, TI Fellow, manages the SC Packaging modeling team at Texas Instruments. His team is responsible for electrical, thermal, and thermomechanical analysis of new products and package developments. He received his BS in physics from Arizona State University and holds 20 patents. He also has authored or co-authored over 45 papers, articles, and book chapters and has lectured on thermal challenges, modeling, reliability, electrostatic discharge (ESD), and 3D packaging.