Remember the relatively simplistic days of networking life? PCs functioned with hard disks and network cards, and those network cards were connected to network hubs or switches. Another box usually featured a firewall or gateway.

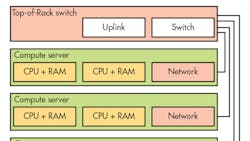

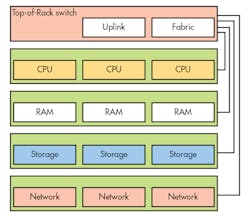

Many data centers have been built around this approach, often with 1U rack-mount systems (Fig. 1). Each 1U node incorporates a processor, memory, storage, and a network interface. The nodes are linked together and to the outside world using a top-of-rack (ToR) switch. Ethernet typically serves as the network interface, although some systems employ other technologies like InfiniBand.

Of course, this approach comes in many packaging variations, including blade servers. Larger form factors also provide more room for more robust nodes, with more storage, memory, and processing power as well as space for other computational units like GPUs.

One other common component is the power supply, which typically provides power for all components within the system. These requirements differ depending on the technology and devices.

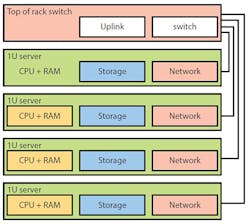

Splitting out storage offers a way to create a more modular environment (Fig. 2). In this case, a storage area network (SAN) or network attached storage (NAS) provides storage support for compute nodes that usually have only a processor and memory plus a network interface that ties everything together. Additional security and throughput can be attained with additional network interfaces for storage, isolating the network links to the outside world. Protocols like iSCSI provide remote block storage access to the compute nodes.

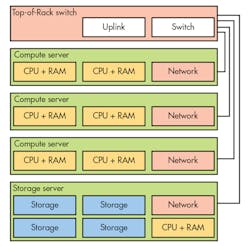

Rack scale architecture (RSA), or rack disaggregation (RD), takes the next step, splitting out all of the components and linking them via a high-speed fabric (Fig. 3). Fiber-optic connections are often considered because of higher bandwidth and extended distance compared to the copper alternative. The biggest challenge involves latency. One technology addressing this space is Intel’s Silicon Photonics.

Density of RSA compute nodes is designed to be higher than other compute systems. Most of the RAM gets moved to a separate area, where it can be allocated as needed to the compute side. Of course, latency is an issue, which usually means larger caches. Fabric-oriented memory controllers remain on the drawing board, while another option still considers keeping processors and RAM together.

Cloud service providers (CSPs) such as Amazon Web Services (AWS) are interested in these platforms because of their massive investment in hardware. RSA provides a way to improve scalability as well as migration to new hardware. It offers the advantage of changing different components when deemed necessary, which is valuable considering technologies like processors, memory, storage, and networking change at different rates and times.

Hardware options aren’t the only things changing in the data center. Network virtualization can be implemented using any of these platforms.

Virtualizing the Network

“Software defined” is the latest trend in the virtualization arena. For instance, the software-defined data center (SDDC) built on software-defined infrastructure (SDI) can include software-defined networking (SDN) and software-defined storage (SDS). Of course, network function virtualization (NFV) is also in the mix (see “10 Things You Should Know About NFV”).

SDDC targets RSA with fast, efficient hardware that can run just about everything in software. Hardware acceleration handles elements like Ethernet protocols, but the idea is to use stock hardware to deal with networking and storage chores, including challenging applications such as deep packet inspection (DPI) and network routing functions.

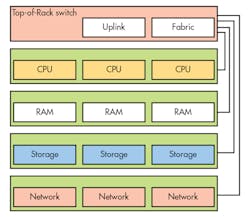

SDI is built out of virtual machines (VMs) that run on pools of compute, storage, and network nodes (Fig. 4). It targets RSA, but the approach works even with 1U server stacks. Running applications in VMs is already the norm. With SDI, however, everything is a VM, including network routing. The latest processor hardware can handle the control and data planes.

Many use Intel’s Data Plane Development Kit (DPDK) to implement SDN and build NFV services. DPDK includes libraries built on a multicore framework for Intel x86-based processors. Moreover, the latest processors and Intel Ethernet controllers have been optimized for DPDK support. Though DPDK isn’t the only solution addressing the SDI, it’s one of the more notable options.

This file type includes high resolution graphics and schematics when applicable.

SDN is still built on switching hardware, but it can handle L2 through L4 and offers configurability. Since it doesn’t deal with the higher-level protocols, it tends to be faster and more economical. Two major switch platforms are Broadcom’s StrataXGS Tomahawk series and Cavium’s XPliant.

The StrataXGS Tomahawk series can deliver 3.2 Tb/s with 32 ports of 100G Ethernet, or 64 ports of 40G/50G Ethernet, or 128 ports of 25G Ethernet. It supports OpenFlow 1.3+ using Broadcom’s OF-DPA (OpenFlow-Data Plane Abstraction). Overlay and tunneling support tackle VXLAN, NVGRE (network virtualization using generic routing encapsulation), MPLS (multiprotocol label switching), and SPB (shortest path bridging).
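The port configurations quoted above all work out to the same aggregate bandwidth. The following short sketch checks the arithmetic; the function and constant names are illustrative only and are not part of any Broadcom tooling.

```python
# Aggregate bandwidth for each port configuration quoted in the text.
PORT_CONFIGS = [
    (32, 100),   # 32 ports of 100G Ethernet
    (64, 50),    # 64 ports of 50G Ethernet
    (128, 25),   # 128 ports of 25G Ethernet
]


def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Return total switch bandwidth in Tb/s for a port configuration."""
    return ports * gbps_per_port / 1000


for ports, speed in PORT_CONFIGS:
    print(f"{ports:>3} ports x {speed:>3}G = {aggregate_tbps(ports, speed)} Tb/s")
```

Each configuration totals 3.2 Tb/s. Running the 64-port configuration at 40G instead of 50G would give 2.56 Tb/s, which is below the chip's maximum.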

The 28-nm XPliant CNX88091 Ethernet switch chip also offers 3.2 Tb/s with 128 ports of 25G Ethernet. Other port combinations include 32 ports of 100G Ethernet. XPliant supports overlay and tunneling standards such as OpenStack and OpenFlow, and will support future protocols and standards like Geneve.

NVGRE uses Generic Routing Encapsulation (GRE) to tunnel layer 2 packets through layer 3 networks. MPLS adds short path labels to packets so that they can be routed without using longer network addresses. Geneve addresses how traffic is tunneled in an SDN environment, and is supported by major VM players such as Microsoft, Red Hat, and VMware. An SDN environment will typically contain a mix of protocols.
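To make the “short path label” idea concrete, here is a minimal sketch of packing and unpacking a single 32-bit MPLS label stack entry, assuming the layout defined in RFC 3032 (20-bit label, 3-bit traffic class, 1-bit bottom-of-stack flag, 8-bit TTL). The function names are hypothetical, and a real switch does this in hardware rather than in software.

```python
import struct


def encode_mpls(label: int, tc: int = 0, bottom: bool = True, ttl: int = 64) -> bytes:
    """Pack one MPLS label stack entry into 4 bytes (network byte order)."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8:
        raise ValueError("traffic class must fit in 3 bits")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)


def decode_mpls(entry: bytes) -> dict:
    """Unpack a 4-byte MPLS label stack entry into its fields."""
    (word,) = struct.unpack("!I", entry)
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom": bool((word >> 8) & 0x1),
        "ttl": word & 0xFF,
    }


entry = encode_mpls(label=100)
print(entry.hex(), decode_mpls(entry))
```

A router forwards on the 20-bit label alone, which is much shorter than a full destination address and can be looked up with a simple table index.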

These two switch platforms handle the various protocols using five major components: parsing, lookup, modify, queue, and count. Parsing examines incoming packets. Lookup determines where and what should be done with a packet. Packet modification depends on the protocol involved. Queue handles buffering of packets. Finally, count provides the statistics about the system. Typical switches only count packets, bytes and errors, whereas these new platforms provide insight into more traffic and system details.
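The five stages can be sketched as a toy software pipeline. This is an illustration of the parse, lookup, modify, queue, and count flow only; every class, field, and counter name here is hypothetical and does not reflect the internals of the Tomahawk or XPliant chips.

```python
from collections import Counter, deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Packet:
    dst_mac: str
    vlan: int
    payload: bytes
    out_port: Optional[int] = None


class ToySwitch:
    def __init__(self, forwarding_table):
        self.table = forwarding_table  # dst MAC -> (out_port, new_vlan)
        self.queues = {}               # out_port -> deque of packets
        self.stats = Counter()         # named counters

    def parse(self, frame: dict) -> Packet:
        """Parse: extract the header fields the later stages need."""
        return Packet(frame["dst_mac"], frame.get("vlan", 1), frame["payload"])

    def lookup(self, pkt: Packet):
        """Lookup: decide where the packet goes (None means no match)."""
        return self.table.get(pkt.dst_mac)

    def modify(self, pkt: Packet, action) -> Packet:
        """Modify: rewrite fields according to the matched action."""
        pkt.out_port, pkt.vlan = action
        return pkt

    def enqueue(self, pkt: Packet) -> None:
        """Queue: buffer the packet on its output port."""
        self.queues.setdefault(pkt.out_port, deque()).append(pkt)

    def process(self, frame: dict) -> Optional[Packet]:
        pkt = self.parse(frame)
        # Count: statistics are updated as the packet moves through.
        self.stats["rx_packets"] += 1
        self.stats["rx_bytes"] += len(pkt.payload)
        action = self.lookup(pkt)
        if action is None:
            self.stats["dropped_no_route"] += 1
            return None
        pkt = self.modify(pkt, action)
        self.enqueue(pkt)
        self.stats[f"tx_port_{pkt.out_port}"] += 1
        return pkt


switch = ToySwitch({"aa:bb:cc:dd:ee:ff": (3, 100)})
switch.process({"dst_mac": "aa:bb:cc:dd:ee:ff", "payload": b"hello"})
switch.process({"dst_mac": "00:00:00:00:00:01", "payload": b"x"})
print(dict(switch.stats))
```

Keeping named counters per event, rather than only packets, bytes, and errors, is a small-scale version of the richer statistics the text attributes to these platforms.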

Both platforms are more generic than the current crop of routers. The switches can be used for leaf/ToR and spine portions of a network, although these often differ in terms of port configurations.

Virtualized Network Functions

Network function virtualization, or NFV, places functions that were often found in network appliances (services like firewalls and VPN gateways) into virtual machines. The advantages of NFV are scalability and easier management. The challenge, however, was to make the VMs match the reliability of dedicated hardware (see “Achieving Carrier-Grade Reliability with NFV”). Luckily, VMs can easily handle high availability.

The change away from old-style switches and network appliances gives vendors and users more flexibility. It’s very easy to scale functions such as firewalls and to link one NFV VM to another, a task that was previously done with cables. As the load increases, additional VMs can be started. Load balancing is another well-understood function. NFV VMs address Layer 4 to Layer 7 services.
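The scale-out and load-balancing behavior described above can be sketched in a few lines. This is a simplified model under stated assumptions: each VM instance handles a fixed number of requests per second, and work is spread round-robin. The function names, instance names, and capacity figures are hypothetical; a production NFV orchestrator would use measured load and health checks.

```python
import math
from itertools import cycle


def instances_needed(load_rps: float, capacity_rps: float, minimum: int = 1) -> int:
    """Return how many VM instances are needed to carry the offered load."""
    return max(minimum, math.ceil(load_rps / capacity_rps))


def assign_round_robin(requests, instances):
    """Distribute requests across instances in round-robin order."""
    assignment = {name: [] for name in instances}
    for request, name in zip(requests, cycle(instances)):
        assignment[name].append(request)
    return assignment


# Load grows from 800 to 2,500 requests/s; each firewall VM handles 1,000.
for load in (800, 2500):
    count = instances_needed(load, capacity_rps=1000)
    names = [f"fw-{i + 1}" for i in range(count)]
    print(load, names)

print(assign_round_robin(range(5), ["fw-1", "fw-2"]))
```

Starting another instance replaces what used to require racking and cabling another appliance.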

VMs offer additional security, but they alone don’t make a good security policy. That said, companies like HyTrust provide policy-based security at the VM level. These systems deliver the kind of virtual security that matches the type of physical security used in the past. Physical access to servers and switches tends to be very limited, and for good reason. Security of virtual assets is even more important due to the ease with which these files can be copied and modified.

In general, a VM is a VM, and these will be connected to network and storage resources. A VM can be given access to these resources while being isolated from other VMs and resources within the cloud.

Virtual Storage

In the beginning, there was block storage, on top of which file systems were built. Hard disks and now flash memory constituted the primary base. Virtualization mirrored block-device functionality, providing virtual versions of the disk controllers. Behind the scenes, VMs in an SDI environment use local files or block storage, as well as network protocols like iSCSI, to add storage. An iSCSI target, often a VM with access to physical devices, services any number of iSCSI initiators (or clients).

Designers also must contend with the plethora of new storage technologies. For example, Diablo Technologies’ Memory Channel Storage (MCS) puts flash memory onto the processor’s memory channel (see “Memory Channel Flash Storage Provides Fast RAM Mirroring” on electronicdesign.com). The technology is found in products like SanDisk’s ULLtraDIMM. Diablo’s NanoCommit technology is designed to mirror DRAM in flash.

Another non-volatile dual in-line memory module (NV DIMM) solution comes in the form of NV-DRAM. Products like Viking Technology’s ArxCis-NV blend DRAM and flash on a single DIMM. A supercapacitor provides power to an on-module processor to copy DRAM to flash if power fails. Flash contents are restored to DRAM after powering on a system. In addition, other non-volatile technologies have begun to emerge in the DRAM socket space. Until now, technologies such as MRAM have found a home mainly in embedded memories.

New approaches to storage management include persistent memory file systems (PMFS). This method is designed to take advantage of non-block, non-volatile storage.

Another technology found in virtualized solutions is Seagate’s Ethernet-based Kinetic disk drive (see “Object Oriented Disk Drive Goes Direct To Ethernet” on electronicdesign.com). This drive has a pair of 1-Gigabit-Ethernet interfaces. Instead of conventional block storage, it uses a key/value model, which has been integrated into many “big data” technologies (e.g., Hadoop).
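The difference between the two storage models can be shown with a pair of toy in-memory classes. This is a conceptual sketch only: the class and method names are hypothetical and are not the Kinetic drive's actual API or wire protocol.

```python
class BlockDevice:
    """Conventional model: fixed-size blocks addressed by number."""

    def __init__(self, num_blocks: int, block_size: int = 512):
        self.block_size = block_size
        self.blocks = [bytes(block_size)] * num_blocks

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block long")
        self.blocks[lba] = bytes(data)

    def read_block(self, lba: int) -> bytes:
        return self.blocks[lba]


class KeyValueDrive:
    """Key/value model: variable-size values addressed by an arbitrary key."""

    def __init__(self):
        self.objects = {}

    def put(self, key: bytes, value: bytes) -> None:
        self.objects[key] = bytes(value)

    def get(self, key: bytes) -> bytes:
        return self.objects[key]

    def delete(self, key: bytes) -> None:
        del self.objects[key]


# Block storage: the caller pads data and tracks which block holds what.
disk = BlockDevice(num_blocks=8)
disk.write_block(0, b"sensor-42".ljust(512, b"\x00"))

# Key/value storage: the drive itself maps the key to the data.
drive = KeyValueDrive()
drive.put(b"sensor/42/latest", b"23.5C")
print(drive.get(b"sensor/42/latest"))
```

With the key/value model, the mapping from name to data moves into the drive, so the host does not need a file system layered on top to find an object.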

Maxta’s MaxDeploy architecture provides a VM-centric storage system that even works with existing 1U server configurations. It presents a single virtual interface to storage that’s typically spread across nodes containing compute and storage.

No one configuration addresses all installations, but a migration toward SDI and SDDC is underway. Service providers and enterprise IT utilize large systems that can take advantage of SDN and NFV. Adoption is on the rise as the technologies continue to retool themselves.


About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.