If we attempt to build a system that will never fail, not only are we demonstrating that we do not understand safe systems, but we are also likely to over-engineer and produce a system that either does nothing useful (for instance, a train that never crashes, but also never moves) or is more complex, and hence fault-ridden and prone to failure, than a system that is just sufficiently dependable.

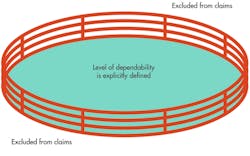

Of course, it is necessary to decide what level of dependability is “sufficient.” In some cases the answer is clear. If the customer requires the device to be certified to Safety Integrity Level 2 as defined in IEC 61508 for continuous operation, then the device is allowed, on average, no more than one dangerous failure every million hours of operation. In many cases, however, we must set the numerical value ourselves.

We will need to choose from the different methods for assessing acceptable risk levels used in different countries and in different industries. These methods include:

- ALARP (as low as reasonably practicable): The potential hazards and associated risks are identified and classified as clearly unacceptable, tolerable if the cost of removing them would be prohibitive, or acceptable. All unacceptable risks must be removed, but the tolerable risks are removed only if the cost and time can be justified.

- GAMAB (globalement au moins aussi bon) or GAME (globalement au moins équivalent): The total risk in the new system must not exceed the total risk in comparable existing systems. Translated, GAMAB and GAME mean “globally at least as good.”

- MEM (minimum endogenous mortality): The risk from the new system must not exceed one tenth of the natural expected human mortality in the area where the device is to be deployed. For example, for people in their mid-twenties in western countries, this value is about 0.0002 deaths per person per year.

All these techniques then must be adjusted depending on the number of people that could be simultaneously affected by a dangerous failure of the equipment.

To decide which risks are unacceptable, tolerable, and acceptable with ALARP, we will need to determine numerical values for the maximum allowed probability of dangerous failure for each risk. With GAMAB and MEM, we will need to determine this numerical value globally.
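The classification described above can be sketched in a few lines of C. This is only an illustration: the two ALARP bounds are hypothetical placeholders that a real project would derive from its own risk analysis, and the names `alarp_classify` and `mem_risk_limit` are invented for this example.

```c
/* ALARP regions for a single identified risk. */
enum alarp_region {
    ALARP_ACCEPTABLE,   /* below the lower bound: no further reduction required */
    ALARP_TOLERABLE,    /* between the bounds: reduce if cost and time can be justified */
    ALARP_UNACCEPTABLE  /* above the upper bound: the risk must be removed */
};

/* Hypothetical bounds, expressed as dangerous failures per hour.
 * Real values come from the project's risk analysis, not from this sketch. */
struct alarp_bounds {
    double acceptable_below;
    double unacceptable_above;
};

/* Place one risk into its ALARP region. */
enum alarp_region alarp_classify(const struct alarp_bounds *b,
                                 double failures_per_hour)
{
    if (failures_per_hour > b->unacceptable_above)
        return ALARP_UNACCEPTABLE;
    if (failures_per_hour < b->acceptable_below)
        return ALARP_ACCEPTABLE;
    return ALARP_TOLERABLE;
}

/* MEM as described in the text: the new system may add at most one tenth
 * of the natural mortality for the exposed population. */
double mem_risk_limit(double natural_mortality)
{
    return natural_mortality / 10.0;
}
```

With the mortality figure quoted above, `mem_risk_limit(0.0002)` gives a global limit of 0.00002, whereas `alarp_classify` has to be called once per identified risk.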

An aircraft flight-control system demonstrates the importance of clearly and explicitly defining requirements for sufficient dependability. Failure of such a system while in use can have tragic consequences. But in an airplane that can stay in the air no more than, say, 10 hours, the flight controller design can count on a refresh every 10 hours plus a safety margin.

In fact, it may well be better to allow the system such a refresh and focus time and effort on increasing dependability during those 10 hours plus a safety margin when it is in continuous use, rather than on attempting to build a theoretically “perfect” system that never requires a refresh.

A change of context invalidates all dependability claims, though. Our dependability claims for the flight-control system are valid within a context and under specific conditions, and we must note these limitations in the system safety manual. This manual must accompany the system and explicitly state that the system’s dependability claims are valid only if the system is completely refreshed after 10 hours plus a safety margin.
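To see why a bounded operating period helps, consider a rough calculation. The sketch below assumes a constant dangerous failure rate, which is a simplification introduced here and not a claim about any real flight controller. The function names and the 2-hour margin in the usage note are likewise hypothetical.

```c
/* Upper bound on the probability of at least one dangerous failure during
 * one operating period, assuming a constant failure rate (an assumption of
 * this sketch). It uses the inequality 1 - exp(-r*t) <= r*t, so the result
 * is conservative. Inputs are expected to be non-negative. */
double mission_failure_bound(double failures_per_hour, double hours)
{
    double bound = failures_per_hour * hours;
    return bound > 1.0 ? 1.0 : bound;
}

/* The safety manual's condition: the dependability claim holds only if the
 * system has been refreshed within the allowed period plus its margin. */
int claim_is_valid(double hours_since_refresh, double max_hours,
                   double margin_hours)
{
    return hours_since_refresh <= max_hours + margin_hours;
}
```

For a rate of one dangerous failure per million hours and a 10-hour flight with a 2-hour margin, `mission_failure_bound(1e-6, 12.0)` is about 0.000012. Once the system runs past that period without a refresh, `claim_is_valid` returns 0 and the figure no longer applies.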

Preparing The Development Environment

Clear and explicit dependability requirements are a sine qua non condition for building a safe system. Without them we can never know if we have built the system we need because we do not have standards against which we can measure the system’s dependability.

Expertise and a good development process are not essential for building a safe system. It may just be possible for engineers without training or experience in safe systems working in a chaotic environment to set requirements for a safe system and build that system. It is improbable, though, that they would be able to produce adequate evidence to justify their claims about the system’s safety.

Expertise and a good development process are not guarantees that a system will meet the required level of functional safety. They do not even guarantee that the system will be a good one. They do, however, vastly improve the chances that this will be the case.

Experts And Good Processes

Extensive knowledge of both context and problem is needed to formulate requirements for a safe system. Further, no two software designs are alike, and great expertise is required to produce a design of the simplicity required for a safety-critical system.

Finally, a comprehensive understanding of software validation methods, the software system being evaluated, and the context in which it is evaluated (including validations of similar systems) will be required to demonstrate that the software system in question meets its defined functional safety requirements.

A good process provides a well-defined context, not just for development, but also for interpreting the results of testing and other validation techniques. Without the process definition, this interpretation would be impossible (Fig. 2).

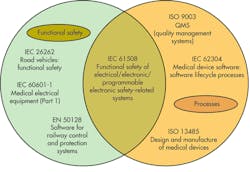

Perhaps nothing underlines the importance of good processes for the development of safe systems more than IEC 62304. This standard, which is becoming the de facto global standard for medical device software lifecycle processes, does not define common numerical values for acceptable failure rates, as does, for example, IEC 61508 for SIL 3.

Rather, affirming the fundamental role of good processes, not just in the development, but also in the maintenance of safety-critical software in medical systems, IEC 62304 sets out the processes (including a risk management process), activities, and tasks required throughout the software lifecycle, which ends only when the software is no longer in use.

Designing The Safe System

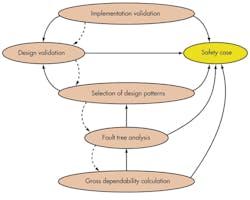

Once we have determined the level of dependability our system requires, we may use a structured approach to create the system architecture and design. In fact, we can apply a set of steps throughout this process (Fig. 3).

Note that this process is not linear. There is feedback and iteration between each pair of steps.

- Gross dependability calculation: Apply simple techniques to see whether the proposed design could possibly meet its dependability target. Although the Markov assumptions do not generally apply to software, Markovian analysis can be helpful here. The failure distribution assumed in Markov models is certainly one of the distributions under which the system must meet its target. Markov analyses are quick and easy to prepare, and passing one is necessary but not sufficient to show that the system could meet its requirements.

- Fault tree analysis: This step refines the gross dependability calculation to account for consequential failures, more realistic failure distributions, and other factors. The output is a dependability budget for each of the system’s components.

- Selection of design patterns: This is perhaps the most difficult step. As Bev Littlewood noted in a 1980 paper, designers and programmers often respond to being given a dependability budget for their component with, “I am paid to write reliable programs. I use the best programming methodologies to achieve this. Software reliability estimation techniques would not help me.” [1] Today, 30 years later, a more professional approach is required. Different design and implementation patterns are appropriate to different levels of required dependability. [2]

- Design validation: Once we have completed the design and assessed its dependability, we can create formal verifications of key algorithms and protocols.

- Implementation validation: Once the implementation is complete it must, of course, be verified. Testing forms a significant part of this, but regeneration and validation of the implemented design can also play a part.
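As an illustration of the gross dependability calculation in the first step above, here is a minimal discrete-time Markov sketch. The system it models is hypothetical: two independent channels, no repair, and failure of the system only when both channels have failed. The per-hour failure probability `p` and the function name are assumptions made for this example.

```c
#define N_STATES 3  /* 0: both channels working, 1: one failed, 2: system failed */

/* Probability that the hypothetical two-channel system is in the failed
 * state after `hours` one-hour steps. `p` is each channel's probability of
 * failing during any one hour. */
double markov_failed_probability(double p, unsigned hours)
{
    /* Row = current state, column = next state; each row sums to 1. */
    const double t[N_STATES][N_STATES] = {
        { (1.0 - p) * (1.0 - p), 2.0 * p * (1.0 - p), p * p },
        { 0.0,                   1.0 - p,             p     },
        { 0.0,                   0.0,                 1.0   }  /* absorbing */
    };
    double state[N_STATES] = { 1.0, 0.0, 0.0 };  /* start with both working */

    for (unsigned h = 0; h < hours; h++) {
        double next[N_STATES] = { 0.0, 0.0, 0.0 };
        for (int from = 0; from < N_STATES; from++)
            for (int to = 0; to < N_STATES; to++)
                next[to] += state[from] * t[from][to];
        for (int s = 0; s < N_STATES; s++)
            state[s] = next[s];
    }
    return state[N_STATES - 1];
}
```

If the result for the intended operating period already exceeds the target, the design cannot meet it. A result within the target shows only that the design is not ruled out, in keeping with the "necessary but not sufficient" caveat.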

The results and artifacts from all of these steps will form part of the safety case, which will present the evidence and the arguments for the dependability we claim for the system. Although a safety case can be prepared retrospectively, it is much easier to construct it during the architectural, design, and implementation phases.
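The fault tree analysis step listed earlier can also be sketched numerically. The example below combines basic-event probabilities through AND and OR gates under the simplifying assumption that the events are independent. A real analysis would also have to account for the consequential failures mentioned in that step. The tree itself (a sensor, a primary processor, and a backup) is hypothetical.

```c
#include <stddef.h>

/* OR gate: the output event occurs if any input event occurs.
 * Assumes the input events are independent. */
double ft_or(const double *p, size_t n)
{
    double none = 1.0;
    for (size_t i = 0; i < n; i++)
        none *= 1.0 - p[i];
    return 1.0 - none;
}

/* AND gate: the output event occurs only if every input event occurs.
 * Assumes the input events are independent. */
double ft_and(const double *p, size_t n)
{
    double all = 1.0;
    for (size_t i = 0; i < n; i++)
        all *= p[i];
    return all;
}

/* Hypothetical tree: the system fails if the sensor fails, OR if both the
 * primary AND the backup processor fail. */
double top_event(double sensor, double primary, double backup)
{
    const double pair[] = { primary, backup };
    const double top[]  = { sensor, ft_and(pair, 2) };
    return ft_or(top, 2);
}
```

This sketch works forward from component probabilities to the top event. Producing a dependability budget runs the other way: the top-event target is fixed, and the component values are adjusted until the tree meets it.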

Building The Safe System

Safety should be built into a system from the start, and all work should follow from the premise that, as we must never tire of reminding ourselves, all software contains faults and these faults may lead to failures.

A senior manager may decide that software should be written in a language (such as C) that doesn’t provide much protection against programmer errors. Line management may organize work around teams in ways that do not foster good coding practices.

Also, programmers may work under conditions that lead them to make mistakes. An inadequate tool set, overly aggressive deadlines, a lack of sleep, and other factors all can contribute to errors.

Designers and programmers are human and therefore produce flawed designs and code. Testing and design verification miss some of these flaws, so they are not corrected. Post-shipment defenses, such as code written to recover from errors, may contain their own faults and fail as well.

Faults are introduced at every stage of design and development, and inevitably some are missed. Some faults are benign. Others are caught and corrected, or at least prevented from causing errors. Others cause errors, and unfortunately, some of these errors cause failures.

For example, a tired or clumsy programmer may want to allocate 10 bytes of memory, but may type:

    char fred[100];
    int x;

or:

    char fred[1];
    int x;

Neither declaration allocates the intended 10 bytes. In the first case, unless the system is subject to severe memory restrictions, the fault is unlikely to produce an error, much less a failure. But the second case may well produce an error: a programmer who believes the buffer holds 10 bytes may write code that overruns it by nine bytes, overwriting x and, assuming a 4-byte int stored immediately after fred, the next five bytes. That error could cause a failure.
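One way to keep a fault like the second declaration from turning into an error is to tie every write to the buffer's actual size, not to the size the programmer has in mind. The helper below is a hypothetical sketch of that idea (the name `checked_copy` is invented here), not a prescribed technique.

```c
#include <stddef.h>
#include <string.h>

/* Copy src_len bytes into dst only if they fit.
 * Returns 0 on success, or -1 if an argument is invalid or the data would
 * not fit. On failure dst is left untouched, so the mistyped declaration
 * remains a fault but does not become a memory-corrupting error. */
int checked_copy(char *dst, size_t dst_size, const char *src, size_t src_len)
{
    if (dst == NULL || src == NULL || src_len > dst_size)
        return -1;
    memcpy(dst, src, src_len);
    return 0;
}
```

Called as `checked_copy(fred, sizeof fred, data, 10)`, the helper rejects the write when fred was mistakenly declared with one element, because `sizeof fred` reflects the declaration as typed. The caller still has to handle the -1 return.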

Working from the premise that all faults may eventually lead to failures, we must include multiple lines of defense when we design and build a functionally safe system. As we build our system we must reduce the number of faults we include in our design and implementation, prevent faults from becoming errors, prevent errors from becoming failures, and handle failures when they do occur.
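The later lines of defense can be illustrated with a small monitor that checks each input for plausibility, tolerates a few bad readings by holding the last good value, and then moves to a latched safe state. Everything in this sketch is hypothetical: the speed limit, the number of tolerated bad readings, and the choice of zero as the safe output are placeholders for values a real hazard analysis would supply.

```c
#include <stdbool.h>

#define SPEED_MAX_PLAUSIBLE 300  /* hypothetical plausibility limit */
#define MAX_CONSECUTIVE_BAD 3    /* hypothetical limit on consecutive bad readings */
#define SAFE_OUTPUT         0    /* hypothetical safe value, e.g. "stop" */

enum monitor_action {
    MONITOR_USE_READING,     /* reading is plausible and is passed through */
    MONITOR_HOLD_LAST_GOOD,  /* error contained: last good value is reused */
    MONITOR_SAFE_STATE       /* failure handled: safe output is forced */
};

struct monitor {
    int  last_good;        /* most recent plausible reading */
    int  consecutive_bad;  /* implausible readings in a row */
    bool safe_state;       /* latched once set; cleared only by a refresh */
};

/* Process one reading and write the value to act on into *output. */
enum monitor_action monitor_step(struct monitor *m, int reading, int *output)
{
    if (m->safe_state) {
        *output = SAFE_OUTPUT;
        return MONITOR_SAFE_STATE;
    }
    if (reading >= 0 && reading <= SPEED_MAX_PLAUSIBLE) {
        m->last_good = reading;
        m->consecutive_bad = 0;
        *output = reading;
        return MONITOR_USE_READING;
    }
    m->consecutive_bad++;
    if (m->consecutive_bad < MAX_CONSECUTIVE_BAD) {
        *output = m->last_good;
        return MONITOR_HOLD_LAST_GOOD;
    }
    m->safe_state = true;
    *output = SAFE_OUTPUT;
    return MONITOR_SAFE_STATE;
}
```

Holding the last good value keeps an isolated error from becoming a failure. The latched safe state handles the case where errors persist, and it is not left again until the system is refreshed.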

References

1. Bev Littlewood, “What makes a reliable program: few bugs, or a small failure rate?” in American Federation of Information Processing Societies: 1980 National Computer Conference, 19-22 May 1980, Anaheim, Calif., Vol. 49 of AFIPS Conference Proceedings (AFIPS Press, 1980): pp. 707-713.

2. There is not room to discuss design patterns, design validation, and further implementation validation here, so we will address them in a future article.