This article appeared in Evaluation Engineering and has been published here with permission.

What you’ll learn:

- The collaboration between Semtech and Intel.

- Functionality of the RealSense LiDAR Camera L515.

- Simon McCaul’s views on the market and industry.

Commercializing advanced technologies is a rising tide that lifts all boats, because any technology is only as good as our ability to apply it to a purpose. Every advanced technology, from the laser to the digital signal processor (DSP), started out as a standalone product before convergence brought it into more sophisticated integrated systems. Only with high levels of integration can an advanced solution, in any application, deliver the performance and functionality the market now expects.

One area going through a wave of evolutionary change is sensing systems. Not only are multiple sensors now integrated into a single product, but in the most advanced solutions, such as autonomous vehicles and systems, their data is integrated as well. Combining that data to build a more intelligent interpretation of the environment around an observing system is called sensor fusion, because it involves more than just putting a bunch of sensors in a package.

Sensor subsystems still undergoing evolutionary development include optical solutions such as vision and, especially, Light Detection and Ranging (LiDAR), which offers a high level of object-recognition capability. However, legacy LiDAR solutions can be complex and expensive, since the core technologies involved are still advancing rapidly.

One example of commercialization advancing capability is Semtech's collaboration with Intel to develop optical semiconductor platforms for LiDAR solutions. Semtech’s laser drivers and programmable transimpedance amplifiers (TIAs) are integrated into Intel’s RealSense LiDAR Camera L515, enabling power-efficient, high-resolution consumer LiDAR solutions.

The work between Intel’s RealSense team and Semtech’s Signal Integrity Products Group targeted the L515 camera’s ability to operate in very low signal-to-noise ratio (SNR) environments. The TIAs have low input-referred noise (IRN), which helps the L515 LiDAR camera deliver up to 23 million depth points per second.
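To put the 23-million-points figure in context, the throughput of a depth camera is simply pixels per frame times frames per second. The sketch below assumes a depth mode of 1024 × 768 pixels at 30 frames per second, which is our assumption based on Intel's published maximum L515 depth mode, not a figure stated in this interview; the helper name is hypothetical.

```python
def depth_points_per_second(width_px: int, height_px: int, fps: int) -> int:
    """Depth samples per second: one sample per pixel, per frame."""
    return width_px * height_px * fps


# Assumed depth mode (not from the interview): 1024 x 768 pixels at 30 fps.
rate = depth_points_per_second(1024, 768, 30)
print(f"{rate:,} depth points/s")  # 23,592,960 depth points/s
```

Under that assumption the result rounds to the "up to 23 million" quoted above; a lower resolution or frame rate scales the figure down proportionally.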

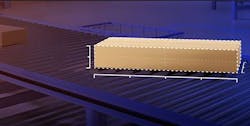

The camera offers high-precision depth sensing, with an error of less than 16 mm at its maximum operating range. Along with high-frequency laser modulation, this lets it sense objects as small as 7 mm in diameter from up to 1 meter away (Fig. 1). To better understand what the collaboration and its results mean for the industry, we spoke with Simon McCaul, Senior Market Manager for Semtech’s Signal Integrity Products Group.
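One way to read the "7 mm at 1 meter" figure is as an angle: an object of diameter d at distance r subtends 2·atan(d / 2r). The short sketch below (the function name is hypothetical) shows that this works out to roughly 0.4 degrees. That is only the geometry; how finely the L515 actually resolves angles depends on its optics and scanning, which the interview does not detail.

```python
import math


def angular_size_deg(diameter_m: float, distance_m: float) -> float:
    """Full angle (degrees) subtended by an object of given diameter at a given distance."""
    return math.degrees(2 * math.atan(diameter_m / (2 * distance_m)))


# 7 mm object viewed from 1 m away
print(round(angular_size_deg(0.007, 1.0), 2))  # 0.4
```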

On Device Integration

EE: Let's start with the announcement, back up to the big picture, and then zoom in again a little. The big thing is that this Intel LiDAR camera has an incredibly high level of integrated technology.

Simon McCaul: I think it's a good piece of technology. It puts together a lot of different electronic, optical, and mechanical technologies in ways that are unique, and in some ways this is the first time they've been combined like this. These technologies are mature in other areas, but this is a new way of putting them together. It's a product that could really change the way we do things in the future. It's still early days, but we're seeing increasing interest in applications for this kind of mix of technologies.

EE: You could almost pick a buzzword, and this technology would slot into it because it's among those leading-edge, bleeding-edge technologies that are going to be used in a lot of things.

McCaul: Yes, and there are numerous applications that it can go into. It really does open up a wide variety of possibilities. One of the challenges is that it raises high expectations for what can be done, and meeting those expectations with the technology being developed is quite challenging. Still, I think we have a good platform to start meeting them and enabling some of those new areas of technology on a macro scale. It could really change the way people do things.

EE: Think about application spaces that could benefit from LiDAR technology but didn't have it before because of cost, solution complexity, or lack of knowledge of the multiple core technologies required to create it. This integration is new in its space, but in one sense it's also a manifestation of the continuing convergence of technology and the multiplication of functionality, wouldn't you say?

McCaul: Putting these technologies together does create something new and different, and enables a different way, literally, to view the world. These are depth cameras using LiDAR technology, and they can affect a very wide variety of market areas. It's a vision system that doesn't just change how the world is perceived; it changes what users can do with that new perception of the world. That’s because it perceives the world in three dimensions autonomously, and it knows what to do with that information.

On Leveraging Edge Computing

EE: Would you say this is directly part and parcel of the growth in edge computing, because you have to increase the functionality at the edge to match the processing power, and vice versa?

McCaul: Yes. What we see is that applications like this will drive the next generations of video, for example, and potentially augmented reality. That isn't necessarily happening today with the camera we have now, but it's part of the world of depth cameras now and part of their potential in the future, including in communication systems. And for edge computing, once you start building augmented-reality systems, you increase the computing power and the communication power needed throughout that system.

So you get into a kind of virtuous circle of creating more powerful vision systems that generate richer, more valuable data: a video stream that doesn't just contain two dimensions, but carries a whole extra level of information and capability. Then you need to be able to process that information. So, as you build up your communications and processing power, you can enable more powerful cameras. That creates the virtuous circle, which will take us somewhere new if we can get the technology to deliver.

EE: Capabilities drive applications, and vice versa: applications ask for solutions, and solutions are provided. But sometimes a solution is provided that someone then realizes is good for other applications as well.

McCaul: Bringing together technology and the real needs and the real aims of users is a challenge. That's where we are today, introducing this new technology and connecting it with the desires of users. Sometimes users don't even realize they need something until you present them with the opportunity and the right tools. So, I'd say that's a good observation of where we're going.

We’re trying to match this potential in these new technologies with what can work in the market, and it's happening in multiple different areas at the same time, using multiple different types of LiDAR technology. They have different ranges, different powers, different resolutions, and different cost points. But I think in general, it's getting consumers and users used to these kinds of depth cameras and the potential for them.

On Augmented Reality

EE: Augmented reality could become a big application space, in some ways bigger than virtual reality, because augmented reality can be shared, which increases market potential and application capability. One could argue that you could use these existing cameras if you were setting up, for example, a static environment for augmented reality. Then you could start from there and get to the point where users are wearing LiDAR-equipped headsets or glasses.

McCaul: That's one example of what's possible. Once there is a realistic augmented-reality technology, and once users enjoy using it, it generates all kinds of possibilities for sharing that information. As we said, it creates accompanying momentum in the rest of the market, bringing along all kinds of systems and the computing power they need. Some companies aim to do exactly that, and that's specifically what they want from our LiDAR system.

We are at an earlier, more basic stage than that. You can see the start of something: the start of bringing depth-camera technology into the real world. It's good to have that long-term vision of powerful augmented-reality systems, for example. Another example is autonomous driving, which again is very challenging, but the market sees it as a worthwhile goal, and the technology has that long-term aim.

EE: Even vision systems for autonomous robots: the camera could address something like that very readily.

McCaul: Right now, with the Intel system, one of the early applications is measurement for logistics. If you just place a box in front of the camera, it uses its depth capability to give you the complete dimensions of the box in about half a second (Fig. 2). This helps increase productivity in logistics systems, and it also helps bring the technology into the real world. A lot of the challenge at the moment is marrying up the capability of depth cameras with real-world needs.
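How can a depth camera dimension a box that quickly? The sketch below is a simplified illustration under stated assumptions, not Intel's method. It assumes the camera looks straight down at a box resting on a flat surface, that the box's top face has already been segmented in the image and is aligned with the image axes, and that the focal lengths are known. The `Intrinsics` values and the `box_dimensions` helper are hypothetical. Height is the floor distance minus the top-face distance, and the pinhole model converts pixel extents into meters.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels along x (hypothetical value)
    fy: float  # focal length in pixels along y (hypothetical value)


def box_dimensions(floor_depth_m, top_depth_m, top_width_px, top_length_px, k):
    """Estimate (width, length, height) in meters from a top-down depth view.

    Assumes: camera axis perpendicular to the floor, top face already
    segmented, and the box aligned with the image axes.
    """
    if not 0 < top_depth_m < floor_depth_m:
        raise ValueError("top face must be closer to the camera than the floor")
    height = floor_depth_m - top_depth_m
    # Pinhole model: size_m = size_px * depth_m / focal_length_px
    width = top_width_px * top_depth_m / k.fx
    length = top_length_px * top_depth_m / k.fy
    return width, length, height


k = Intrinsics(fx=600.0, fy=600.0)  # hypothetical intrinsics
w, l, h = box_dimensions(1.0, 0.7, 300, 420, k)
print(f"{w:.2f} x {l:.2f} x {h:.2f} m")  # 0.35 x 0.49 x 0.30 m
```

A production system would also have to fit the floor plane, handle tilted or rotated boxes, and average over noisy depth samples, none of which this sketch attempts.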

EE: Do you have any final thoughts for our readers before we let you go?

McCaul: It's good to keep perspective. It's kind of early days with some of this technology, and there are a variety of LiDAR technologies out there. It's actually quite an exciting time for the industry, because we are seeing the early adoption of these systems. We're seeing a growth in a new way of looking at the world that could have a big influence. It's not there yet, but that's the exciting thing about early days. It's very interesting to watch this space.