A machine-learning acceleration chip developed by startup Groq is set to take on a range of high-performance applications, from autonomous vehicles to the cloud and the enterprise. Its Tensor Streaming Processor (TSP) is a single-threaded machine that handles both integer (INT8) and floating-point models. Among its features is a global shared memory bandwidth of over 60 TB/s, delivered by on-chip memory. The three-quarter-length PCI Express (PCIe) card has an x16 PCIe Gen 4 interface.
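To put the two bandwidth figures in perspective, the arithmetic below compares the quoted on-chip bandwidth with the raw capacity of an x16 PCIe Gen 4 host link. It's a back-of-the-envelope sketch that assumes the standard PCIe 4.0 rate of 16 GT/s per lane with 128b/130b encoding and ignores protocol overhead, so real-world host throughput will be somewhat lower. The function name is illustrative only.

```python
def pcie_gen4_bandwidth_gbps(lanes: int = 16) -> float:
    """Raw per-direction PCIe 4.0 throughput in GB/s, ignoring protocol overhead."""
    transfers_per_s = 16e9   # PCIe 4.0 signals at 16 GT/s per lane
    encoding = 128 / 130     # 128b/130b line encoding: 128 payload bits per 130 sent
    return lanes * transfers_per_s * encoding / 8 / 1e9  # bits -> bytes -> GB

host_link = pcie_gen4_bandwidth_gbps(16)  # roughly 31.5 GB/s each way
on_chip = 60_000                          # "over 60 TB/s" quoted for the TSP, in GB/s

print(f"x16 Gen 4 link: {host_link:.1f} GB/s per direction")
print(f"on-chip / host link: {on_chip / host_link:.0f}x")
```

The gap of more than three orders of magnitude is why keeping model data in on-chip memory, rather than streaming it across the host interface, matters for throughput.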

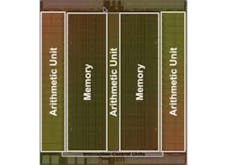

The TSP uses an unconventional compute layout (see figure) designed to deliver high performance. The architecture is built for compiler-orchestrated execution, with the compiler rather than the hardware deciding when each operation runs. Because there's no caching system involved, operation is deterministic, and runtime performance and power requirements are known at compile time. This approach also avoids exploits like Spectre and Meltdown, which take advantage of hardware speculation features found in conventional processors but absent from the TSP.

The Tensor Streaming Processor (TSP) provides deterministic performance and eliminates tail latency.
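The idea behind compile-time determinism can be illustrated with a toy static scheduler. This is a hypothetical sketch, not Groq's compiler or instruction set: the operation names, functional units, and cycle counts are invented. The key assumption is that every operation has a fixed latency (no cache misses, no speculation), so the schedule and the total cycle count the compiler computes are exactly what the hardware would see on every run.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Op:
    name: str
    unit: str          # hypothetical functional unit, e.g. "mem", "matmul", "vector"
    cycles: int        # fixed latency, known ahead of time
    deps: tuple = ()   # names of ops whose results this op consumes

def schedule(ops):
    """Assign each op a start cycle and return (start_times, total_cycles).

    Assumes ops are listed in a valid dependency order, each unit runs one op
    at a time, and every latency is fixed, so the result never varies.
    """
    start, finish, unit_free = {}, {}, {}
    for op in ops:
        ready = max((finish[d] for d in op.deps), default=0)  # inputs available
        t = max(ready, unit_free.get(op.unit, 0))             # unit available
        start[op.name] = t
        finish[op.name] = t + op.cycles
        unit_free[op.unit] = finish[op.name]
    return start, max(finish.values(), default=0)

program = [
    Op("load_weights", "mem", 4),
    Op("load_input", "mem", 2),
    Op("matmul", "matmul", 8, ("load_weights", "load_input")),
    Op("relu", "vector", 1, ("matmul",)),
    Op("store", "mem", 2, ("relu",)),
]

starts, total = schedule(program)
print(starts)
print("total cycles:", total)
```

A conventional processor can't make this promise, because a cache miss or a mispredicted branch changes the timing from one run to the next; in this model, the cycle count the compiler reports is the runtime.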

The TSP is optimized for a batch size of one, which suits latency-sensitive applications such as self-driving cars and financial systems. The architecture also eliminates the tail latencies that can hurt enterprise workloads running on large servers.
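A simple model shows why batch size and tail latency are linked. The sketch below is illustrative only, and all numbers are made up rather than Groq measurements: requests arrive every 2 ms, a batch of one takes 1 ms to process, and a batch of eight takes 5 ms. With batching, early requests must wait for the batch to fill, so latency spreads out and the 99th percentile climbs well above the median. With a batch size of one on fixed-latency hardware, every request sees the same latency.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile, with p in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def batched_latencies(n_requests, interval_ms, batch_size, compute_ms):
    """Per-request latency when the accelerator waits for a full batch.

    Assumes evenly spaced arrivals, a fixed compute time per batch, and that
    each batch finishes before the next one fills (no queueing).
    """
    if n_requests % batch_size:
        raise ValueError("n_requests must be a multiple of batch_size")
    latencies = []
    for i in range(n_requests):
        arrival = i * interval_ms
        last_in_batch = (i // batch_size + 1) * batch_size - 1
        dispatch = last_in_batch * interval_ms  # batch starts when it is full
        latencies.append(dispatch - arrival + compute_ms)
    return latencies

one = batched_latencies(96, interval_ms=2, batch_size=1, compute_ms=1)
eight = batched_latencies(96, interval_ms=2, batch_size=8, compute_ms=5)

for label, lat in (("batch=1", one), ("batch=8", eight)):
    print(label, "p50:", percentile(lat, 50), "ms  p99:", percentile(lat, 99), "ms")
```

Batching improves throughput per unit of compute, but in this model the worst-case request pays for it; that trade-off is what a batch-size-of-one design avoids.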

The chip is single-threaded, but it can switch quickly between models and layers. As a result, it can handle multiple models without the heavy data movement that would otherwise reduce performance.

Groq isn’t announcing performance numbers yet, but the company’s collection of talent bodes well for a high-end machine. Jonathan Ross, Groq’s technical founder and CEO, worked on Google’s TPU effort as a 20% project; he designed and implemented the core elements of the original chip. Dinesh Maheshwari, the CTO, worked on multithreaded multiprocessor systems in the mid-1980s and served as CVP and CTO of the Memory Division at Cypress Semiconductor. And Michelle Tomasko, Vice President of Engineering, worked at Nvidia, Google Consumer HW, and Transmeta.