Remove The Processor Dilemma From Constrained-Random Verification

Block-level verification has become a fairly mature technology over the past 10 years. All of the major EDA players support constrained-random stimulus generation in the simulation testbench. As part of this evolution, languages such as SystemVerilog and methodologies such as the Universal Verification Methodology (UVM) have been defined to ensure portability of models and testbenches.


Portability is essential to make a verification intellectual property (VIP) business possible and to enable reuse across projects, business units, and even companies. This strategy works flawlessly until the integration phase of a system-on-chip (SoC). Almost as soon as subsystems are created, a processor comes into the picture and creates a dilemma: should the processor be included in the verification of that subsystem, or should it be removed?

The UVM answer is to remove it, and the justification for this decision is worth examining.


Removing The Processor

These days, almost every SoC is constructed using one or more third-party processors. Economics no longer favor a company having a processor that it designed itself and tuned for a particular application. It is cheaper to get the processor from an IP company such as ARM.

Because many designs and companies use these processors, they have been subjected to far more verification than would have been possible had they been designed in house. When performing verification, there is no reason to waste precious simulation cycles verifying the processor. The best way to avoid this waste is to remove it from the design while simulating.

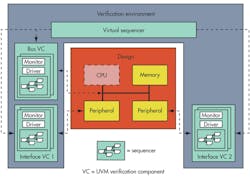

Removal also elevates the processor bus to a primary input of the design, under the direct control of the testbench. Any sequence of bus cycles can be injected onto the bus, including some that would be difficult to orchestrate by writing actual code to run on the processor. This makes the design more controllable and makes tests easier to write. The UVM has no notion of an embedded processor in a design, but the methodology works unchanged if the processor bus is treated as just another interface.

A third reason to remove the processor concerns what software should run on it if it remains in the design. Production software probably isn't available at this point in the development flow. Even if it were, an operating system takes too long to boot before an actual verification application could run. And who would write that application? If production software and applications are not available, test software has to be written, putting an extra burden on the verification team (Fig. 1).

The decision to remove the processor was reasonable at the time, but systems have changed. What was once a block has become a sub-block of a larger block, and most of these larger blocks include one or more processors. In addition, many chips now consist of several such subsystems integrated with only a little unique content.

The complete system and aspects beyond traditional functionality have to be verified. Does the chip run within its power budget? Does it operate with the expected latency and throughput? Are resources shared efficiently? Answering these types of questions requires a verification strategy that intimately involves the processor. It may be time to rethink the processor dilemma.

Verification From The Inside Out

Processors are at the heart of most subsystems today, and complete SoCs often contain multiple, heterogeneous processors. By definition, these processors have access to every corner of the chip and frequently compete for the same scarce resources including memory, bus access, and peripherals.

In many cases, these conflicts create chip-level problems with performance and power. In the worst cases, they can result in missing functionality. To verify these aspects of the SoC, the processors are an important part of the verification and must remain in the design. This does not mean that verification has to focus on the processors themselves. In many cases, high-performance behavioral models that execute the same code can replace detailed processor models.

Removing the processor can create problems when a design is moved from simulation to in-circuit emulation or a rapid prototyping system. Neither the testbench nor the UVM is available at this point, making it hard to treat the processor bus as an interface. In actual silicon, the problem is even more severe, since processors must be included and there’s rarely a way to isolate and access the processor bus from outside the SoC.

For a continuous verification strategy across all stages of the development process, the processors must be included. This ensures that verification is performed in ways that would be possible in the final system. For example, bus sequences that could never occur are avoided, and realistic interplay between the processors can be created by actual code rather than by fictitious worst-case conditions.

Given the decision to keep processor models in the system, what code is going to run on them? The earlier discussion about production software still applies: it probably is not available, and it would not be efficient for functional verification anyway.

Most SoC verification teams accept that the processors must remain and are forced to create custom code to run on them, much like the early days of verification for the rest of the hardware. This custom code amounts to directed tests, each designed to verify a particular aspect of the system. Directed tests are tedious to write and maintain, so the market rightly moved away from them as soon as a better solution was found.

The question is how a verification team can recreate the benefits of a constrained-random stimulus generation environment in a way that inherently includes the processor.

Scenario Models

The best way to capture what a system is meant to do is with use-case or scenario models that define the workloads of the system and its functionality. For example, one use case for a smartphone may require it to browse for a picture and send it to the person the user is talking with over the radio interface. The phone has to do this without degrading call quality while remaining within a certain power budget. A second scenario may involve simultaneously using the Wi-Fi connection to assist with the picture browsing.

In a recent verification panel, Jim Hogan, a well-known venture capitalist in the EDA and semiconductor field, said that Apple uses about 40,000 of these scenarios to define the functionality of its phones. The creation of 40,000 directed test cases that coordinate all parts of the system is a Herculean effort that most companies could not afford. Even working out how to orchestrate some of those scenarios may be difficult when multiple processors are involved.

It is now possible to create an SoC scenario model, in the form of a graph, that captures all intended behavior without explicitly enumerating every possible scenario. This graph can be used to automatically generate the test software that runs on the processors, covering the equivalent of the 40,000 directed tests as well as many other scenarios that the software team might never write manually. The scenario model can include constraints that influence test-case generation, much as the UVM constrained-random method influences testbench stimulus.
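To make the idea concrete, here is a minimal Python sketch of a scenario graph for the smartphone use case described earlier, assuming a simple representation in which each action lists the actions allowed to follow it. The action names, the `exclude` constraint, and the C driver calls emitted by `emit_c_test` are all hypothetical, and full enumeration is shown only because the graph is tiny. This is an illustration of the general idea, not how any particular commercial tool represents or walks its models.

```python
import random

# Hypothetical scenario graph: each key is an action, and each value lists
# the actions that may legally follow it. "done" marks the end of a scenario.
SCENARIO_GRAPH = {
    "start": ["call_active"],
    "call_active": ["browse_local", "browse_wifi"],
    "browse_local": ["select_picture"],
    "browse_wifi": ["select_picture"],
    "select_picture": ["send_radio", "send_wifi"],
    "send_radio": ["done"],
    "send_wifi": ["done"],
    "done": [],
}


def all_scenarios(graph, node="start", path=None):
    """Enumerate every start-to-done path (practical only for small acyclic graphs)."""
    path = (path or []) + [node]
    if node == "done":
        return [path]
    paths = []
    for nxt in graph[node]:
        paths.extend(all_scenarios(graph, nxt, path))
    return paths


def random_scenario(graph, rng, exclude=()):
    """Walk the graph, choosing randomly among successors a constraint allows."""
    node, path = "start", ["start"]
    while node != "done":
        choices = [n for n in graph[node] if n not in exclude]
        if not choices:
            raise ValueError(f"constraints leave no legal successor after {node!r}")
        node = rng.choice(choices)
        path.append(node)
    return path


def emit_c_test(path, name):
    """Render a path as C test code; the called driver functions are hypothetical."""
    body = "\n".join(f"    {a}();" for a in path if a not in ("start", "done"))
    return f"void {name}(void) {{\n{body}\n}}\n"


if __name__ == "__main__":
    # Generate one C test per legal scenario, plus one constrained random one.
    for i, p in enumerate(all_scenarios(SCENARIO_GRAPH)):
        print(emit_c_test(p, f"scenario_{i}"))
    rng = random.Random(7)
    no_wifi = random_scenario(SCENARIO_GRAPH, rng, exclude={"browse_wifi", "send_wifi"})
    print(emit_c_test(no_wifi, "scenario_no_wifi"))
```

The graph here yields four scenarios, but the same walk scales to models where enumerating every path by hand would be impractical; a constraint such as excluding the Wi-Fi actions narrows generation without rewriting the model.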

The New Constrained-Random Approach

Constrained-random technology has many strengths, such as its ability to exercise unexpected conditions for which no test was explicitly defined. Any verification environment that spans all stages of the development flow should offer the same capabilities, with a few improvements.

Randomness should be constrained to sequences that produce a useful outcome, avoiding the many wasteful tests produced by tools that do not understand the basic operations of the device. It has been estimated that a class of tools sometimes called intelligent testbenches can achieve the same amount of verification with one tenth to one hundredth of the patterns (Fig. 2).
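A small Python sketch can illustrate why constraining randomness to useful sequences matters. It assumes a hypothetical device whose legal operations are given by a state-transition table, counts how few blindly random sequences actually complete a transfer, and then generates sequences by choosing only operations that are legal now and can still reach the goal state in the remaining steps. The state names, operations, and reachability check are illustrative assumptions, not the algorithm of any particular intelligent-testbench tool.

```python
import itertools
import random

# Hypothetical device model: (state, operation) -> next state.
# Any (state, operation) pair not listed is illegal in that state.
LEGAL = {
    ("idle", "config"): "configured",
    ("configured", "config"): "configured",  # reconfiguring is allowed
    ("configured", "start"): "running",
    ("running", "poll"): "running",
    ("running", "check"): "checked",
}
OPS = ["config", "start", "poll", "check"]
GOAL = "checked"  # a "useful" sequence must finish a checked transfer


def is_useful(seq, state="idle"):
    """True if every op is legal in turn and the sequence ends in GOAL."""
    for op in seq:
        state = LEGAL.get((state, op))
        if state is None:
            return False
    return state == GOAL


def can_reach_goal(state, steps):
    """True if GOAL is reachable from `state` in exactly `steps` legal ops."""
    if steps == 0:
        return state == GOAL
    return any(can_reach_goal(LEGAL[(state, op)], steps - 1)
               for op in OPS if (state, op) in LEGAL)


def constrained_sequence(length, rng, state="idle"):
    """Randomly pick only ops that are legal now and keep GOAL reachable."""
    seq = []
    for remaining in range(length, 0, -1):
        choices = [op for op in OPS
                   if (state, op) in LEGAL
                   and can_reach_goal(LEGAL[(state, op)], remaining - 1)]
        if not choices:
            raise ValueError("no useful sequence of this length exists")
        op = rng.choice(choices)
        seq.append(op)
        state = LEGAL[(state, op)]
    return seq


if __name__ == "__main__":
    all_seqs = list(itertools.product(OPS, repeat=4))
    useful = [s for s in all_seqs if is_useful(s)]
    # prints: 2 of 256 unconstrained sequences are useful
    print(f"{len(useful)} of {len(all_seqs)} unconstrained sequences are useful")
    print(constrained_sequence(4, random.Random(1)))
```

In this toy model, only 2 of the 256 unconstrained length-4 sequences do anything useful, while every constrained sequence does. Real devices have far larger state spaces, which is where the gap between blind and informed generation grows.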

Test cases should be pre-generated rather than generated incrementally, for several reasons. The first is efficiency. Many users report that testbench execution dominates simulation time: they spend more time in the testbench code than in running the tests on the model. Once a test case is pre-generated, it can be executed any number of times with almost no testbench overhead. In addition, pre-generation lets the verification team see what a test will cover before it is run, rather than collecting this information during simulation and slowing it down. A test case created from a scenario model has known functionality, is self-checking, and leverages the coverage model inherent in the scenario model.
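The efficiency and visibility arguments for pre-generation can be sketched in Python, assuming a hypothetical test case made of register writes followed by read-backs with known expected values. The whole test, including its expected results, is built before any execution, so its coverage can be measured statically, and `run` simply replays it against any target that supports indexing. The `Step` type and the `pregenerate`, `static_coverage`, and `run` functions are illustrative names, not part of any tool's API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    op: str     # "write" or "read"
    addr: int   # register or memory address
    value: int  # data to write, or the expected data for a read


def pregenerate(num_pairs, addr_space, seed):
    """Build a complete, self-checking test case before execution starts."""
    rng = random.Random(seed)
    steps = []
    for _ in range(num_pairs):
        addr = rng.randrange(addr_space)
        value = rng.randrange(256)
        steps.append(Step("write", addr, value))
        steps.append(Step("read", addr, value))  # expected result known up front
    return steps


def static_coverage(steps, addr_space):
    """Fraction of addresses exercised, computed from the test itself."""
    return len({s.addr for s in steps}) / addr_space


def run(steps, target):
    """Replay the fixed test; no stimulus decisions are made at run time."""
    for s in steps:
        if s.op == "write":
            target[s.addr] = s.value
        elif target[s.addr] != s.value:
            return False  # self-check failed
    return True


if __name__ == "__main__":
    test = pregenerate(num_pairs=20, addr_space=16, seed=3)
    print(f"coverage before running: {static_coverage(test, 16):.0%}")
    print("pass" if run(test, [0] * 16) else "fail")
```

Because `run` makes no decisions of its own, the same list of steps can be replayed repeatedly, or translated into code for a processor, without regenerating anything.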

Pre-generation also allows test cases to run on an emulator, a rapid prototyping system, or actual silicon. In a real chip, there is no way to stop the clock and wait for the testbench to decide on the next set of vectors. Stopping the clock of a real chip causes its state to be lost, and it is not possible to keep the processor clocks running while the other clocks are turned off.

Pre-generation means that all necessary coordination has been built into the test case and it can run in real time until completion. Test cases can be optimized for different target platforms—running longer sequences of scenarios in silicon than those generated for simulation, for example. However, the same scenario model can be used to generate all test cases for all platforms.
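One way to picture a single scenario model feeding several platforms is a generator whose only platform-specific input is the test length. In this hypothetical Python sketch, the `SCENARIOS` list stands in for paths derived from a scenario graph, and the per-platform lengths in `TARGET_LENGTH` are made-up placeholders rather than recommended values.

```python
import random

# Hypothetical scenario model: legal end-to-end scenarios, standing in for
# paths that would normally be derived from a scenario graph.
SCENARIOS = [
    ("call_active", "browse_local", "send_radio"),
    ("call_active", "browse_wifi", "send_radio"),
    ("call_active", "browse_wifi", "send_wifi"),
]

# Hypothetical per-platform budgets: how many scenarios to chain in one test.
TARGET_LENGTH = {"simulation": 5, "emulation": 500, "silicon": 50_000}


def generate_test(target, seed):
    """Same model for every platform; only the length of the test changes."""
    rng = random.Random(seed)
    return [rng.choice(SCENARIOS) for _ in range(TARGET_LENGTH[target])]


if __name__ == "__main__":
    for target in TARGET_LENGTH:
        print(target, len(generate_test(target, seed=42)))
```

Because the same seed drives the same sequence of random choices, the short simulation test is a prefix of the longer silicon test, which can help when reproducing a silicon failure in simulation.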

Conclusions

Sometimes a decision that was right when it was made later becomes an obstacle to advancement. Such is the case with the dilemma of including the processor in the verification environment. The makeup of systems has changed, and verification demands have moved beyond what software simulation alone can serve to solutions that must encompass the complete development flow.

Embedded processors have become a central aspect of the modern SoC, and including them is necessary to perform aspects of verification that older methodologies do not cover. Power and performance cannot be verified without the processors. Moreover, the processors cannot be extracted from real silicon, which is what would be required to extend the traditional verification methodology beyond the simulator.

The dilemma no longer exists. UVM testbenches are adequate for blocks, up to the point where a subsystem contains one or more processors. From there, the new generation of constrained-random test cases based on scenario models can take over.

Adnan Hamid is cofounder and CEO of Breker Verification Systems. Prior to starting Breker in 2003, he worked at AMD as department manager of the System Logic Division. Previously, he served as a member of the consulting staff at AMD and Cadence Design Systems. He graduated from Princeton University with bachelor of science degrees in electrical engineering and computer science and holds an MBA from the McCombs School of Business at the University of Texas.

About the Author

Adnan Hamid

CEO

Adnan Hamid is the founder and chief executive officer of Breker Verification Systems, and inventor of its core technology. He has more than 20 years of experience in functional verification automation and is a pioneer in bringing to market the first commercially available solution for Portable Stimulus. Prior to Breker, Hamid managed AMD’s System Logic Division and led its verification group to create the first test case generator providing 100% coverage for an x86-class microprocessor. Hamid holds 12 patents in test case generation and synthesis. He received Bachelor of Science degrees in Electrical Engineering and Computer Science from Princeton University, and an MBA from the University of Texas at Austin.