
The transportation space has seen a burst of technology, not in one particular area but across the board, from improvements in electrical power systems to extremely sophisticated telematics to self-driving cars. Cars today have more electronics than ever before. Much more is coming, though, as features such as advanced driver-assistance systems (ADAS) become standard equipment instead of expensive options.

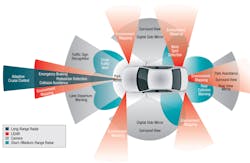

These changes are being made possible by improvements in sensors, processors and memory, software, and even human interfaces that need to be integrated in real time (Fig. 1). Here are some of the latest technologies and how their relationship with other technologies makes them even more important in automotive environments.

1. Multiple, overlapping sensors are needed to provide information for systems to build situational awareness in order to implement safety-critical ADAS support.

Sensor Advances

Smartphones have turned tiny digital cameras into commodity items in a way that other applications—digital cameras, for instance—could not. Automotive applications continue to benefit from the availability of cameras that can stream 4K video. High-definition cameras are being used for obstacle and object recognition for forward-looking ADAS applications in conjunction with artificial-intelligence (AI) machine-learning (ML) software. Here the higher resolution is important, and it’s useful for backup cameras, too.

Multiple cameras are also being used to provide a bird’s-eye view around the car. Renesas’ R-Car development kit knits together video streams from four cameras into a 360-degree view (Fig. 2). This is very useful when parking or navigating in tight quarters. More advanced ADAS systems highlight areas of potential oncoming collisions.

2. Renesas’ R-Car SoC is able to generate a 360-degree, bird’s-eye view around a vehicle by knitting together video streams from four cameras.

Two other range sensors that have shown significant improvements lately are LiDAR and phased-array radar. The general technology is not new, but major advances in miniaturization and cost reductions will affect when and where these systems are being utilized.

For example, Innoviz (Fig. 3), LeddarTech, Quanergy, and Velodyne are just a few companies delivering 3D, solid-state LiDAR systems. These systems, which are applicable in other areas like robotics (see “Bumping into Cobots”), are getting so small that multiple units will be hidden around a car.

3. Innoviz is just one of many vendors delivering 3D LiDAR technology. The InnovizOne has a 200-m range with better than 2-cm depth accuracy. It maintains a 100- by 25-degree field of view with 0.1- by 0.1-degree spatial resolution. The device delivers 25 frames/s with a 3D resolution rate of over 6 Mpixels/s.
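The caption's figures can be cross-checked with a little arithmetic. The sketch below assumes the field of view is sampled on a uniform angular grid, which the source does not state, and the function names are illustrative only.

```python
# Figures taken from the InnovizOne caption above.
H_FOV_DEG, V_FOV_DEG = 100.0, 25.0   # field of view
H_RES_DEG, V_RES_DEG = 0.1, 0.1      # angular (spatial) resolution
FRAME_RATE_HZ = 25                   # frames per second


def points_per_frame(h_fov, v_fov, h_res, v_res):
    """Angular samples in one frame, assuming a uniform grid (an assumption)."""
    return round(h_fov / h_res) * round(v_fov / v_res)


def points_per_second(per_frame, frame_rate):
    """Total samples delivered each second."""
    return per_frame * frame_rate


per_frame = points_per_frame(H_FOV_DEG, V_FOV_DEG, H_RES_DEG, V_RES_DEG)
rate = points_per_second(per_frame, FRAME_RATE_HZ)
print(f"{per_frame:,} points/frame, {rate / 1e6:.2f} Mpoints/s")
# prints "250,000 points/frame, 6.25 Mpoints/s"
```

Under that assumption, the result of 6.25 million points per second agrees with the "over 6 Mpixels/s" figure quoted in the caption.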

Phased-array radar overcomes many of the limitations of LiDAR, allowing it to operate in rain and snow that can otherwise fool optical systems. Radar can be used to complement LiDAR and image systems. A number of companies are working to deliver technology in this area. For example, Texas Instruments’ (TI) single-chip millimeter-wave sensor, mmWave, handles 76- to 81-GHz sensor arrays for sensor and ADAS applications (see “Low-Cost Single Chip Drives Radar Array”).
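To put the 76- to 81-GHz band in perspective, the following sketch computes the free-space wavelength at the band edges and the textbook range resolution of a frequency-swept radar, c/(2B). Sweeping the full 5-GHz span in a single chirp is an assumption made purely for illustration; it is not a claim about what TI's device actually does.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def wavelength_mm(freq_hz):
    """Free-space wavelength in millimeters."""
    return C_M_PER_S / freq_hz * 1e3


def range_resolution_cm(bandwidth_hz):
    """Textbook range resolution c / (2B) for a swept-frequency radar, in cm."""
    return C_M_PER_S / (2.0 * bandwidth_hz) * 1e2


BAND_LOW_HZ, BAND_HIGH_HZ = 76e9, 81e9
# Hypothetical: assume one chirp sweeps the whole band.
ASSUMED_SWEEP_HZ = BAND_HIGH_HZ - BAND_LOW_HZ

print(f"wavelength: {wavelength_mm(BAND_HIGH_HZ):.2f} to "
      f"{wavelength_mm(BAND_LOW_HZ):.2f} mm")
print(f"range resolution at {ASSUMED_SWEEP_HZ / 1e9:.0f} GHz sweep: "
      f"{range_resolution_cm(ASSUMED_SWEEP_HZ):.1f} cm")
```

Wavelengths just under 4 mm are what put the band in the millimeter-wave category, and they are short enough for a multi-element antenna array to fit in a small package.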

All of these technologies have applications in other areas from manufacturing to security and even 3D scanning and printing.

Software Advances

AI and ML are garnering the limelight these days because they bring efficient image recognition to ADAS that’s critical for safe self-driving or augmented driving experiences. The underlying technology is based on deep neural networks (DNNs) and convolutional neural networks (CNNs) (see “What’s the Difference Between Machine Learning Techniques?”).

Neural networks will not replace conventional software applications, even in automotive environments, but they solve hard problems. Combined with new hardware, they can also do it in real time, which is needed in safety-critical applications such as self-driving cars. Multicore processors help in this case, but GPUs work even better (Fig. 4). Custom hardware bests them all (see “CPUs, GPUs, and Now AI Chips”), and even specialized digital-signal processors (DSPs) can handle machine-learning chores (see “DSP Takes on Deep Neural Networks”).

4. The Drive PX2 from Nvidia is just the latest of a series of multicore CPU/GPU solutions targeted at automotive applications.

The parallel-processing nature of these solutions plays well to the multicore and transistor count growth in designs, even as upper-level clock frequencies have peaked. The more tailored solutions also have lower power requirements compared to more conventional processor solutions.

Advances in in-vehicle infotainment (IVI) systems are changing what drivers and passengers are able to visualize, as well as how they can link their smart devices and cloud-based applications to their car. Cellular-based Wi-Fi hot spots in a car are available from all vehicle manufacturers. The plethora of options requires a more robust and open approach. On that front, the GENIVI Alliance (see “Automotive Technology Platform Developed for Linux-Based Systems”) fosters open standards that are operating-system agnostic.

The Linux Foundation’s Automotive Grade Linux (AGL) is one example of an IVI system that has received wide vendor support. AGL will be used in Toyota’s 2018 Camry (Fig. 5) as well as future Toyota vehicles (see “Toyota Including Automotive Grade Linux Platform in 2018 Camry”).

5. Toyota’s 2018 Camry will be running Automotive Grade Linux (AGL) for its in-vehicle infotainment (IVI) system.

The number of applications and tasks running on automotive systems can be staggering when one considers the amount of information being produced from the large collection of sensors, to the data processed and generated by AI systems, to streaming video moving over in-vehicle networks. Managing data distribution in safety-critical areas can benefit from standards like the Object Management Group’s (OMG) Data Distribution Service (DDS) that can provide secure, real-time, publish-subscribe managed data exchange throughout the system (see “Should DDS be the Base Communication Framework for Self-Driving Cars?”). This approach scales better than many point-to-point solutions typically found in designs that require fewer connections between applications.
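The scaling argument can be made concrete with a toy example. The sketch below is an in-process publish-subscribe bus written for illustration only; it is not the DDS API, and `TopicBus`, the topic name, and the sample contents are all hypothetical. The link count assumes the worst case, in which every application talks directly to every other one.

```python
from collections import defaultdict


class TopicBus:
    """Toy in-process publish-subscribe bus (hypothetical; not the DDS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback to receive every sample published on topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, sample):
        """Deliver sample to each subscriber of topic; return the delivery count."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(sample)
        return len(callbacks)


def point_to_point_links(n):
    """Links needed if each of n applications connects directly to every other."""
    return n * (n - 1) // 2


bus = TopicBus()
received = []
bus.subscribe("lidar/obstacles", lambda s: received.append(("planner", s)))
bus.subscribe("lidar/obstacles", lambda s: received.append(("hud", s)))

# The publisher does not need to know who, or how many, are listening.
delivered = bus.publish("lidar/obstacles", {"range_m": 42.0})
print(delivered, "subscribers received the sample")
print(point_to_point_links(20), "direct links vs. 20 bus attachments")
```

With 20 applications, a full mesh needs 190 direct links, while a shared data bus needs one attachment per application. A real DDS implementation adds discovery, quality-of-service policies, and security on top of this basic pattern.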

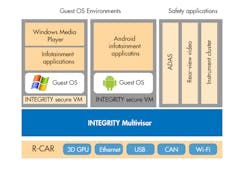

The hypervisor is another tool that has been around for a long time, but not in vehicle-control settings (see “What’s the Difference between Separation Kernel Hypervisor and Microkernel?”). However, that’s changing, and in a big way, due to the number and complexity of multicore solutions as well as the need to mix safety- and security-critical components with IVI and non-critical systems. Hypervisors targeting the automotive space are available from a number of vendors, including BlackBerry’s QNX Hypervisor, Wind River’s VxWorks, Green Hills Software’s INTEGRITY Multivisor (Fig. 6), and Mentor Graphics’ Embedded Hypervisor.

6. Green Hills Software’s Multivisor provides the virtual-machine isolation of a Type 1 hypervisor, which is becoming commonplace in automotive environments that host safety-critical subsystems on the same hardware as IVI subsystems.

Hypervisors allow partitioning of virtual machines (VMs) such that safety and security certifications can be similarly divided. This means that non-critical components don’t require the same level of certification, which takes time and is very costly. Likewise, safety- and security-related components normally see limited third-party additions over time, whereas such additions are becoming more common in IVI.

Type 1 hypervisors like BlackBerry’s QNX Hypervisor 2.0 are designed to be small, with low memory and performance overhead, but their features can be critical to performance and security (Fig. 7). QNX provides priority-based virtual CPUs (vCPUs) with a configurable scheduling policy. The hypervisor is based on the QNX SDP 7.0 RTOS, and thus provides fine-grain management and security. The QNX Neutrino RTOS is a likely candidate for a VM that will need safety certification. The QNX OS for Safety is certified for ISO 26262 at ASIL D and IEC 61508 SIL3, and has been used in systems certified to EN 50128 at SIL 4.

7. Security permeates all aspects of the automotive environment, from manufacturing through secure over-the-air updates. Coordinating and supporting this infrastructure can be challenging, although companies like BlackBerry are providing a complete solution.
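The general idea behind priority-based vCPU scheduling can be sketched in a few lines. This is a toy model, not QNX's implementation or API: the class, the vCPU names, and the convention that a larger number means higher priority are all assumptions, and the policy shown (strict priority, first-in first-out among equals) is just one of the policies such a scheduler might be configured to use.

```python
import heapq
from itertools import count


class VcpuScheduler:
    """Toy strict-priority scheduler; equal priorities run in FIFO order."""

    def __init__(self):
        self._ready = []      # heap of (-priority, arrival order, vCPU name)
        self._seq = count()   # tie-breaker that preserves arrival order

    def make_ready(self, name, priority):
        """Mark a vCPU as runnable at the given priority."""
        # heapq is a min-heap, so negate the priority to pop the highest first.
        heapq.heappush(self._ready, (-priority, next(self._seq), name))

    def pick_next(self):
        """Return the highest-priority runnable vCPU, or None if none is ready."""
        if not self._ready:
            return None
        _, _, name = heapq.heappop(self._ready)
        return name


sched = VcpuScheduler()
sched.make_ready("ivi-vcpu0", priority=10)       # infotainment guest
sched.make_ready("cluster-vcpu0", priority=50)   # safety-related guest
sched.make_ready("ivi-vcpu1", priority=10)

order = [sched.pick_next() for _ in range(3)]
print(order)  # ['cluster-vcpu0', 'ivi-vcpu0', 'ivi-vcpu1']
```

The point of the example is that the safety-related guest runs first no matter when it became ready, which is the property that lets critical and non-critical VMs share the same cores.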

Communication Advances

Even the wiring running through the car is changing, along with what it carries. CAN and LIN are still mainstays for control, but CAN-FD, FlexRay, MOST, and Ethernet are in the mix as well. Some of these are part of the IVI system, but ADAS sensor connections are being added, too.

RJ-45 jacks and CAT5 and CAT6 cables are unlikely to be found under the hood, but the OPEN (One-Pair Ether-Net) Alliance SIG’s 100BASE-T1 and 1000BASE-T1 standards trade distance for lower power and simpler wiring (see “Automotive Ethernet Was the Hidden Trend at CES 2016”). The IEEE 802.3 standard incorporated 100BASE-T1 in IEEE 802.3bw-2015 Clause 96. This allows conventional micros with Ethernet connections to handle this wiring.

Even Time-Sensitive Networking (TSN) is being used in automotive applications, at least on the IVI side of things for audio and multimedia synchronization (see “Time-Sensitive Networking for Real-Time Applications”). TSN actually started out as Audio Video Bridging (AVB) support for automotive applications.

Not all automotive communication will be wired. Wireless communication for Wi-Fi access point support and cellular links to the outside world are already here, but much more will be coming: vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) are part of the V2X mix designed to improve ADAS as well as provide self-driving cars with more information about their environment (see “Car Technology Drives CES 2017”).

The V2X discussion is ongoing, but test deployments are in progress in select cities around the world. This testing is often part of a smart-city approach that offers information ranging from cross-traffic detection and smart stoplights to finding parking spaces or providing vehicle tracking.

User-Interface Advances

Advanced IVI and ADAS work better with improved displays. Many technologies that have proven popular elsewhere, like OLEDs, are creeping into concept cars, providing features from improved viewing to curved displays.

Heads-up displays (HUDs) are becoming more common and more complex, delivering more information in a larger space. Even portable and aftermarket HUDs are now available.

8. Texas Instruments' DLP3000-Q1 digital micromirror device (DMD) and chipset are automotive-qualified and provide a 12-degree field of view.

TI applied its DLP technology to HUD use (Fig. 8). Its DLP3000-Q1 digital micromirror device (DMD) and chipset are automotive-qualified. The platform, which provides a 12-degree field of view, is light-source agnostic and doesn’t require a polarized source. The latter means the system will not conflict with glasses that have polarized lenses.

We’ve been talking about all of the high tech going into a car. Occasionally, a low-tech approach combined with some high-tech support can provide users with an interesting solution, such as the Mpow Universal HUD (Fig. 9). It uses your smartphone to display information, using the same approach as other HUD implementations. An app can display speed based on sensors in the smartphone. This isn’t the same as a HUD built into the car, but it’s hard to beat the $30 price.
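As a rough illustration of how an app might derive speed from a phone's sensors, the sketch below estimates it from two timestamped GPS fixes using the haversine great-circle distance. That the app works this way is an assumption (the source doesn't say which sensors are used), and the function names and sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def speed_kmh(fix_a, fix_b):
    """Average speed between two fixes, each (lat_deg, lon_deg, time_s)."""
    dt = fix_b[2] - fix_a[2]
    if dt <= 0:
        raise ValueError("fixes must be in increasing time order")
    dist_m = haversine_m(fix_a[0], fix_a[1], fix_b[0], fix_b[1])
    return dist_m / dt * 3.6  # m/s to km/h


# Two hypothetical fixes taken 4 s apart, 0.001 degrees of latitude apart.
earlier = (40.000, -74.0, 0.0)
later = (40.001, -74.0, 4.0)
print(f"{speed_kmh(earlier, later):.0f} km/h")
```

A production app would more likely smooth several fixes, or use the speed value that the phone's location service already reports, rather than differencing two raw positions.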

9. The Mpow Universal HUD uses a smartphone to provide a driver with a heads-up display.

This overview should convince you that cars are going to be among the most sophisticated devices around. Slapping a steering wheel on a frame that has four wheels and an engine is by no means easy, but that isn’t the extent of what will be rolling off the assembly line. Not to mention, some of those cars will likely be missing that steering wheel in the future.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF
