So...AI “Experts” Will Replace Human EE Experts? Not Happening

What you’ll learn:

- AI is now being used in place of search and YouTube as a subject matter expert and search tool.

- AI is very good at mining large datasets and researching language-based information. However, it doesn’t have reasoning or real-world experience.

- AI can increase the productivity of seasoned circuit designers, but it cannot replace them—yet.

AI in blind hands—

Wonders twisted, misapplied,

Seeds of chaos grow.

ChatGPT is pretty good at writing poetry, even Haikus. Not great, but it does do an OK job. I tend toward Perplexity.ai as it seems to crawl the web more thoroughly and trains with new postings within 15-20 minutes.

The intro Haiku to today’s blog was written by Perplexity, though for the effort of writing the prompt, I could have written the Haiku myself, as I always do for these blogs. I’m from the school of thought where AI and robotics should be used for the more mundane tasks (finding needles in haystacks, mining vast datasets), whereas the arts and other fun things, human leisure, should be left to human minds and hands. Why? Because machines should not displace the fun aspects of our existence, but rather they should enhance them.

We use templates, for example, to submit articles, whether written by editors or by contributing authors (please contact me if you’re a subject matter expert and would like to write ~1,200 words for a worldwide audience of mostly EEs, technologists, and management). In the head of my template, I wrote this Haiku:

Add a Haiku Here

This is a placeholder though

Need to replace it

I could have simply put, “--Add Haiku Here--”, but that doesn’t consider the Thai concept of having “sanook” (fun) in your daily work, and of launching whatever I’m writing with lightheartedness that our readers can sense. No sanook, then it’s time to move on to doing something else that has it—that’s what Thais do as a culture and I want my readers to have sanook with my writings.

Sanook is what differentiates the Land of Smiles from Lands of Scowls. Why go through life with a Slavic scowl if you have the choice (some societies are survivalist, so the luxury of choice is sadly not there) of finding a way to enjoy what you do?

The emergence of AI allows us, as knowledge workers, to quickly burn through the mundane, the chores, and get to the meat of the creative and fun things that we enjoy doing. This includes creating more time to interact with others in the pursuit of sanook.

Back to Basics

For some of you, I’m an ancient Boomer, and when counting trips around the sun, admittedly that’s true. I got my start in electronics when a Grade 7 classmate, Bruce, brought in a homebrew siren that his older brother made. I was fascinated, and as an avid reader, I hit the library to find out more on how to build my own siren.

That led me to looking at electronics “cookbooks,” books of circuits from authors like Electronic Design’s Don Tuite. Little did I know I’d meet up with and chat with that legend, the man whose books created my stubborn enthusiasm for electronics, at the annual Analog Aficionados parties in Silicon Valley.

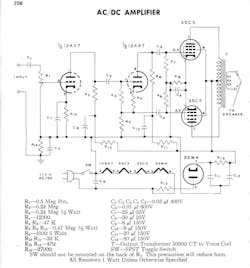

I built some of those circuits in those early years, and despite well-written descriptions of how they worked, I didn’t really care; I just wanted the function in the first few I built. I then took electronics shop classes in high school, where we got to build a 3-tube line-powered-filament audio amplifier (35W4, 50C6, and a 12AX7, iirc) (Fig. 1) and a vacuum-tube-based superheterodyne AM radio set (including punching the chassis for tube sockets and drilling the mounting holes for the terminal strips; it was all flying-wire construction). That was the fun part of the class.

But before we were unleashed to go to the lab workbenches, we had to sit through the first part of the class, which was the theory stuff. Current flow, voltage, resistors, diodes, transistors, triodes, tetrodes, pentodes, capacitors, inductors, and even wire ampacity. Every component had a function, a way of working, and had dreaded equations that tripped many of my classmates up during tests and exams.

I excelled at all of it, primarily due to the dangling carrot of actually building fun stuff that worked. Knowing how everything worked was critical to debugging wiring errors in our builds. In university, it was a lot more theory and less building of things, though we did have equipment like the analog computer and the 8008 trainer to give us some interesting hands-on.

Along Comes the Internet

One of the big accomplishments of my career was running a DARPA-funded program in the early ’90s that created the key components to give Silicon Valley a 10-Gb/s SONET backbone for this newly emerging Internet thing.

I also participated in DSL standards groups and was an early advocate of last-mile bandwidth symmetry. Most others concerned themselves with the broadcast model of delivering high bandwidth to residential and business users via ADSL, and less so with the strategy that I pushed of having users generate content, requiring upload bandwidth as well.

The Internet in its early days, in the 1990s, had an ever-increasing set of research resources and search engines that actually delivered decent results for queries without search providers putting irrelevant content under your nose. I worked with Netscape, but our IT maven, who was in our lunch clique, mentioned a new search engine called Google (what a silly name) and I started using it. I could do research from my desktop computer, which was great.

Search eventually devolved into the commercialized mess we have today, where searching for a decent search engine is now part of search. We have finally gotten one: AI.

AI…aye…arrr!

But AI does have its problems. It lies. It cheats. It hallucinates. It’s a believable BS-er. It preserves its very existence above all and will violate Asimov’s Laws, putting its own survival ahead of human beings, to their detriment.

To use AI effectively, you have to know what you don’t know, or at least know the basics to determine the validity of its results. Most AI tools deliver references, enabling validation of their results. This transparency, unfortunately, will likely go away as AI becomes more trusted. That’s dangerous, both in the amount of reliance that organizations and individuals will place on AI and in its potential for obfuscating fact and rewriting history. One of the biggest problems is AI training on AI.

I do participate in forums and other online areas after hours, and I’m seeing a trend lately among the inexperienced: “ChatGPT said...”

Unlike the cookbooks written by seasoned designers and engineers, trusted subject matter experts (SMEs) like Don, and unlike the articles written by contributing authors and editors of trusted publications like Electronic Design that are essentially peer-reviewed and called out in comments by readers, AI claims to mine an aggregate of written information to form its solutions.

There’s no reasoning, no experiential wisdom, no SME with AI—just a best guess of what the answer is based on language—written words based on tyranny of the majority where AI is the silent judge of which is correct.

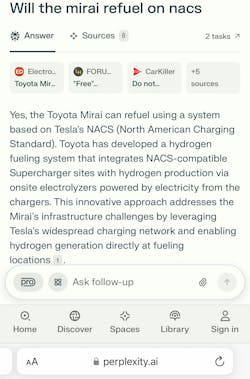

This past April Fools, I wrote an article on refueling a Toyota Mirai hydrogen fuel-cell car at a Tesla Supercharger site. About an hour afterwards, I asked Perplexity if there was a way to recharge a Mirai with Superchargers. It matter-of-factly stated there was, citing my article as a footnote (Fig. 2).

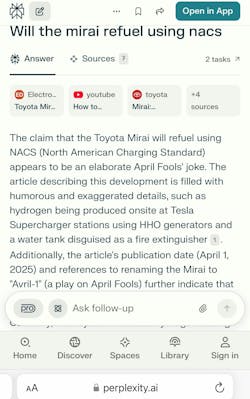

Using the same prompt, some time later, Perplexity had obviously crawled Reddit and other user forums and discovered my “elaborate joke,” noting it as such going forward (Fig. 3).

Perplexity didn’t come to this conclusion itself. Rather, it relied on the consensus of human users, because it can’t reason. And this lack of reasoning is where some humans, and AI, fall flat on their faces.

Now there’s a new generation of users who want a cookbook from an SME. The domain of learning and doing, most recently, has been YouTube. However, YouTube takes time, tens of minutes, versus the instant gratification after a few seconds that’s promised by AI. They ask ChatGPT how to convert an ICE car to electric, or how to build a rectified DC power supply. Some post the output of ChatGPT to forums and communities of humans asking why the ChatGPT solution doesn’t work, whether it will work, or worse. And they take credit on sites like LinkedIn for the ChatGPT output without vetting the results (Fig. 4).

Busy SMEs glance at the outputs and note their approvals (Fig. 5), while others, well aware of the sources these days, take a glance at the details and make a quick note of very basic design principles being violated.

Is AI Cooking the Books?

While a cookbook from an SME can be taken on faith, for non-language, non-database searches, the AI “cookbook” can produce complete nonsense. When human SMEs challenge these zero-depth ChatGPT jockeys, the jockeys argue against the SME and defend AI’s output as if it were gospel. That’s partly the Dunning-Kruger effect, but mostly faith in a flawed research tool (yes, AI’s becoming a religion/cult).

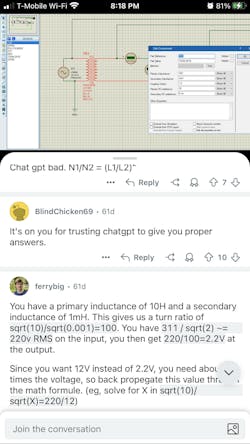

When ChatGPT produces a design that doesn’t work because the tool doesn’t know the basics, like inductance ratios for transformer turns, it can’t troubleshoot, so the prompt jockey turns to human SMEs for help (Fig. 6).
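For readers wondering what that particular basic looks like: SPICE-style simulators commonly model a transformer as two coupled inductors, and for an ideal transformer the inductance ratio is the square of the turns ratio, not the turns ratio itself. Here is a minimal Python sketch of that arithmetic, assuming an ideal, perfectly coupled transformer. The function name and the values (a 1-H primary, 120 V stepped down to 12 V) are made up for illustration and don't come from the design in the figure.

```python
def secondary_inductance(primary_inductance_h, v_primary_rms, v_secondary_rms):
    """Secondary inductance for an ideal coupled-inductor transformer model.

    For an ideal transformer, the voltage ratio equals the turns ratio,
    and inductance scales with the square of the turns:
        Ls / Lp = (Ns / Np) ** 2
    """
    turns_ratio = v_secondary_rms / v_primary_rms  # Ns / Np
    return primary_inductance_h * turns_ratio ** 2


# Hypothetical values, chosen only to make the arithmetic easy to follow.
L_PRIMARY_H = 1.0        # assumed primary inductance, henries
V_PRIMARY_RMS = 120.0    # assumed line voltage, volts RMS
V_SECONDARY_RMS = 12.0   # assumed secondary voltage, volts RMS

l_secondary_h = secondary_inductance(L_PRIMARY_H, V_PRIMARY_RMS, V_SECONDARY_RMS)
print(f"Turns ratio Np:Ns = {V_PRIMARY_RMS / V_SECONDARY_RMS:.1f}:1")
print(f"Secondary inductance = {l_secondary_h * 1e3:.1f} mH")
```

A 10:1 step-down therefore needs a 100:1 inductance ratio. Entering 10:1 as the inductance ratio instead gives a turns ratio of only about 3.16:1, so the simulated secondary voltage comes out far too high.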

So, when execs like Jim Farley at Ford claim that AI will take half of white-collar jobs, one has to wonder how wise this is as a corporate strategy. The first people to go will be the expensive, experienced ones, and cheap, uneducated folks who call themselves “prompt engineers” will replace them because that’s how CFOs think: ChatGPT can be the “expert” is the promise-pablum fed to execs by the likes of Sam Altman.

Who needs new grads whose reasoning skills are trained, and into whom healthy skepticism is infused, by the company’s analog circuit curmudgeon when ChatGPT is constantly training on words and canned solutions?

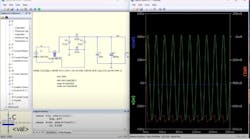

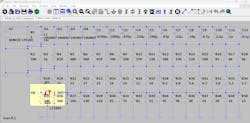

Yes, code snips are the way efficient programmers write code, and ChatGPT is great at digging those up. That’s always the pro-argument cited by ChatGPT zealots. But ask AI to generate a .asc LTspice file for a 120-V AC to 5-V DC, linear-regulated, 3-A power supply and you get gibberish, as in Fig. 7. Those are, ahem, presumably load resistors...

Now imagine Jim Farley’s Ford built using this kind of design expert for its EV charger electronics...time to retain a human consultant, Jimmy...

Hey Execs, Listen Up on AI Staffing

Flip the thinking. Use AI as a productivity tool, only for use by those seasoned veterans who can filter good output from AI’s BS, and staff accordingly. This means the most experienced staff get retained, and a fresh set of eyes and ears, trained by the organization’s wizards in the art, is needed to further increase productivity and eventually backfill as the seasoned staff move on to bigger and more impactful contributions. Ditch the BSing middlemen/women, but realize you could have done that all along, without AI as an excuse for your staff being lazy or inept and for their building of fiefdoms.

The problem with MBAs seeing AI as a cost-cutting machete is that, while ChatGPT can be a productivity tool in the hands of seasoned professionals, it can’t actually do their SME jobs as the Silicon Valley hypesters would have everyone believe. Someone must assess the quality of the results and be able to refine or constrain the information being provided.

This AI-as-an-expert nonsense will be your corporation’s downfall, as it always has been—empowering the BS-ers based on hype from the masters of BS...venture-capital-funded, Silicon Valley, tech-bros. Don’t fall for it. Merely provide the tools your SMEs need to produce more, higher-quality output. Then get rid of the PowerPoint jockeys.

Andy's Nonlinearities blog arrives the first and third Monday of every month. To make sure you don't miss the latest edition, new articles, or breaking news coverage, please subscribe to our Electronic Design Today newsletter. Please also subscribe to Andy’s Automotive Electronics bi-weekly newsletter.

About the Author

Andy Turudic

Technology Editor, Electronic Design

Andy Turudic is a Technology Editor for Electronic Design Magazine, primarily covering Analog and Mixed-Signal circuits and devices, and is also Editor of ED's bi-weekly Automotive Electronics newsletter.

He holds a Bachelor's in EE from the University of Windsor (Ontario, Canada) and has been involved in electronics, semiconductors, and gearhead stuff for a bit over a half century. Andy also enjoys teaching his engineerlings at Portland Community College as a part-time professor in their EET program.

"AndyT" brings his multidisciplinary engineering experience from companies that include National Semiconductor (now Texas Instruments), Altera (Intel), Agere, Zarlink, TriQuint (now Qorvo), SW Bell (managing a research team at Bellcore, Bell Labs and Rockwell Science Center), Bell-Northern Research, and Northern Telecom.

After hours, when he's not working on the latest invention to add to his portfolio of 16 issued US patents, or on his DARPA Challenge drone entry, he's lending advice and experience to the electric vehicle conversion community from his mountain lair in the Pacific Northwet[sic].

AndyT's engineering blog, "Nonlinearities," publishes the 1st and 3rd Tuesday of each month. Andy's OpEd may appear at other times, with fair warning given by the Vu meter pic.