Data acquisition costs too much to be undertaken without a purpose in mind. There may be a legal requirement to archive test data as a safeguard against future litigation. This is a common activity for companies developing new drugs, for example. More often, data acquisition feeds an analysis program that extracts information relevant to a new design or provides feedback to a production process.

To find out how some companies accomplish this sometimes difficult and complex task, we asked Ford, General Electric, Caterpillar, and Kohlman Systems Research to share their test and design interface procedures with us. Testing and data analysis are fundamental parts of product design within Ford, GE, and Caterpillar and the sole reason that Kohlman Systems Research was founded.

Aircraft Flight Testing

Aircraft flight testing is a major business at Kohlman Systems Research. During a test flight, measurements from hundreds or even thousands of points are recorded in binary format on magnetic tape for detailed analysis. A computer running several software modules evaluates the flight, and the system goes through many steps to deliver analyzed data to the aircraft designers within 24 hours.

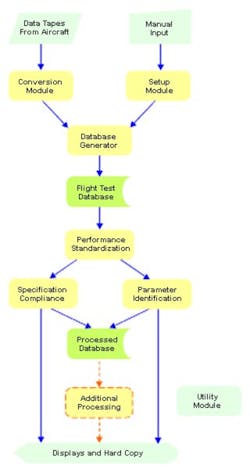

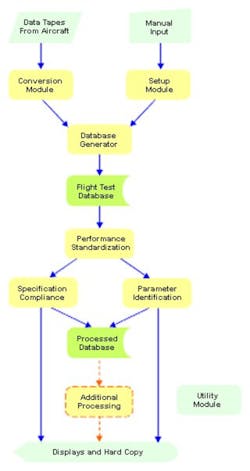

Stewart Platz, director of flight safety analysis at Kohlman, outlined the processing steps the company uses in flight data analysis. These steps, shown in Figure 1, are specific to aircraft testing, yet the overall approach has much in common with data processing on any large-scale program.

Before analysis can begin, a software conversion module performs data reduction. Using the binary data and the transducer calibrations, it scales the data into basic engineering units such as degrees, grams, and meters. The software also compensates for any drift caused by instabilities in the data acquisition process.
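The conversion step can be sketched in a few lines. This is a minimal illustration, not Kohlman's software: it assumes a linear gain/offset calibration per channel and treats drift as a constant zero shift estimated from a reference channel whose true reading is known. `Calibration`, `estimate_drift`, and `counts_to_eu` are hypothetical names, and the calibration values are made up.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    """Hypothetical linear transducer calibration: value = gain * counts + offset."""
    gain: float    # engineering units per count
    offset: float  # engineering units at zero counts
    units: str     # e.g. "deg", "g", "m"


def estimate_drift(reference_counts, expected_counts):
    """Assumed drift model: mean shift of a reference channel from its known value."""
    return sum(reference_counts) / len(reference_counts) - expected_counts


def counts_to_eu(counts, cal, drift_counts=0.0):
    """Remove the drift offset from raw counts, then scale to engineering units."""
    return [cal.gain * (c - drift_counts) + cal.offset for c in counts]


# Usage: a pitch-angle channel with made-up calibration values.
pitch_cal = Calibration(gain=0.01, offset=-20.0, units="deg")
drift = estimate_drift([2003, 2001, 2002], expected_counts=2000)
pitch_deg = counts_to_eu([2000, 2100, 2200], pitch_cal, drift)
```

Real calibrations are often polynomial or table-driven; only `counts_to_eu` would need to change.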

The setup module stores manually entered aircraft-specific data and data relationships that are constant throughout the test flight. These include position correction, weight, center of gravity and inertia, propulsion details, and calibrations for angle of attack and sideslip. The module factors in fuel burn schedules and any payload changes such as a drop of supplies. Also, engine definitions are loaded into this module.
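A setup module of this kind must turn the fuel burn schedule and payload changes into gross weight at any instant of the flight. A minimal sketch, assuming the schedule is a list of (time, fuel remaining) points interpolated linearly and that payload changes are discrete (time, change) events. The function names and numbers are hypothetical, and weights are expressed in kilograms.

```python
from bisect import bisect_right


def fuel_remaining(schedule, t):
    """Fuel remaining at time t from a sorted (time_s, fuel_kg) schedule.

    Linear interpolation between points; held constant outside the schedule.
    """
    times = [pt[0] for pt in schedule]
    if t <= times[0]:
        return schedule[0][1]
    if t >= times[-1]:
        return schedule[-1][1]
    i = bisect_right(times, t)
    (t0, f0), (t1, f1) = schedule[i - 1], schedule[i]
    return f0 + (f1 - f0) * (t - t0) / (t1 - t0)


def gross_weight(t, empty_kg, schedule, payload_events):
    """Hypothetical gross-weight model: empty weight + fuel + payload.

    payload_events is a list of (time_s, change_kg) entries; a supply drop
    is a negative change.
    """
    payload = sum(change for te, change in payload_events if te <= t)
    return empty_kg + fuel_remaining(schedule, t) + payload


# Usage: made-up schedule and events.
schedule = [(0.0, 2000.0), (3600.0, 1200.0)]   # fuel remaining, kg
events = [(0.0, 500.0), (1800.0, -300.0)]      # payload loaded, then a supply drop
w_early = gross_weight(900.0, 8000.0, schedule, events)
w_late = gross_weight(2700.0, 8000.0, schedule, events)
```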

Information from the conversion and setup modules is used in the database generation module to calculate a derived-engineering-units time history of the flight. This includes measurements that are not necessarily available directly from transducers, such as air speed, altitude, Mach number, lift, thrust, pitch, roll, yaw, acceleration, and weight. The output of this section of the program, along with unmodified digital counts, is placed in the flight test database for use by the three succeeding modules.
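Mach number is a typical derived quantity: it is not measured directly but computed from pitot (total) and static pressure. The sketch below uses the standard isentropic relation for subsonic flow of air, with γ = 1.4, and then converts Mach number to true airspeed using static air temperature. It is an illustration only, since the database generation module is not documented at this level. Supersonic data would need the Rayleigh pitot formula, which is omitted here.

```python
import math

GAMMA = 1.4      # ratio of specific heats for air
R_AIR = 287.05   # specific gas constant for dry air, J/(kg*K)


def mach_from_pressures(p_total, p_static):
    """Subsonic Mach number from pitot total and static pressure (same units)."""
    ratio = p_total / p_static
    m = math.sqrt(2.0 / (GAMMA - 1.0) * (ratio ** ((GAMMA - 1.0) / GAMMA) - 1.0))
    if m > 1.0:
        raise ValueError("supersonic: the subsonic isentropic relation does not apply")
    return m


def true_airspeed(mach, static_temp_k):
    """True airspeed in m/s from Mach number and static air temperature."""
    return mach * math.sqrt(GAMMA * R_AIR * static_temp_k)


# Usage: build a consistent pressure pair for M = 0.6 and recover it.
p_static = 50_000.0                                   # Pa
p_total = p_static * (1 + 0.2 * 0.6 ** 2) ** 3.5      # pitot pressure for M = 0.6
mach = mach_from_pressures(p_total, p_static)
tas = true_airspeed(mach, 255.7)                      # static temperature, K
```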

Some test flights are made on a subzero day in January and others on a scorching day in August. Conditions such as temperature make a big difference in aircraft performance, so the performance standardization module converts measurements from the database (stabilized flight, climbs, descents, acceleration/deceleration, turns, takeoffs, and landings) into what they would have been with zero wind, standard atmospheric conditions, and standard weight. These corrections are essential for comparing any given day’s performance with tests of the same type of aircraft under different ambient conditions.
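The article does not give Kohlman's standardization equations. As a hedged illustration of the idea, two common textbook corrections are sketched below. The first is the test-day density ratio computed from measured static pressure and temperature, which captures the difference between a subzero day and a scorching one. The second scales equivalent airspeed to a standard weight, assuming the comparison is made at the same lift coefficient. The function names are hypothetical.

```python
import math

P0 = 101_325.0   # ISA sea-level pressure, Pa
T0 = 288.15      # ISA sea-level temperature, K


def density_ratio(p_static, temp_k):
    """Test-day sigma = rho / rho0 from measured static pressure and temperature (ideal gas)."""
    return (p_static / P0) * (T0 / temp_k)


def equivalent_airspeed(tas, sigma):
    """EAS = TAS * sqrt(sigma)."""
    return tas * math.sqrt(sigma)


def eas_at_standard_weight(eas_test, w_test, w_std):
    """Scale EAS to standard weight at constant lift coefficient.

    Lift = weight = 0.5 * rho0 * EAS**2 * S * CL, so at fixed CL, EAS varies as sqrt(W).
    """
    return eas_test * math.sqrt(w_std / w_test)


# Usage: a cold day and a hot day at the same static pressure give different sigma.
sigma_cold = density_ratio(70_000.0, 253.15)
sigma_hot = density_ratio(70_000.0, 303.15)
eas = equivalent_airspeed(120.0, sigma_cold)                      # m/s
eas_std = eas_at_standard_weight(eas, w_test=9_000.0, w_std=10_000.0)
```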

The parameter identification module computes longitudinal and lateral stability and control derivatives, using a simplified version of NASA’s Modified Maximum Likelihood Estimation (MMLE) analysis techniques.
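MMLE is an output-error method that iteratively matches a dynamic model's response to the measured response. The sketch below swaps in a much simpler technique, equation-error least squares, only to show the basic idea of extracting derivatives from flight data. It assumes the pitching-moment coefficient has already been computed and that it is linear in angle of attack and elevator deflection with no bias term. The function name and the synthetic data are hypothetical.

```python
def fit_pitch_derivatives(alpha, delta_e, cm):
    """Equation-error least-squares fit of Cm = Cm_alpha * alpha + Cm_de * delta_e.

    Solves the 2x2 normal equations by Cramer's rule. This is NOT MMLE; it is
    a simpler stand-in used for illustration.
    """
    s_aa = sum(a * a for a in alpha)
    s_dd = sum(d * d for d in delta_e)
    s_ad = sum(a * d for a, d in zip(alpha, delta_e))
    s_ac = sum(a * c for a, c in zip(alpha, cm))
    s_dc = sum(d * c for d, c in zip(delta_e, cm))
    det = s_aa * s_dd - s_ad * s_ad
    if abs(det) < 1e-12:
        # alpha and delta_e move together: the maneuver did not excite them separately
        raise ValueError("inputs are (nearly) collinear")
    cm_alpha = (s_ac * s_dd - s_ad * s_dc) / det
    cm_de = (s_aa * s_dc - s_ad * s_ac) / det
    return cm_alpha, cm_de


# Usage: synthetic, noise-free data generated from known derivatives.
alpha = [0.00, 0.02, 0.04, 0.06, 0.03]        # rad
delta_e = [0.01, -0.02, 0.00, 0.03, -0.01]    # rad
cm = [-0.8 * a - 1.2 * d for a, d in zip(alpha, delta_e)]
cm_alpha, cm_de = fit_pitch_derivatives(alpha, delta_e, cm)
```

With noisy flight data, equation-error estimates are biased, which is one reason output-error methods such as MMLE are preferred in practice.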

The specification compliance module interacts with the operator to compute handling qualities as defined by MIL-F-8785C and compares the results with recommended limits for 21 types of maneuvers. It takes into account longitudinal and lateral stability and handling, primary flight control characteristics, and secondary control systems. Results are displayed and can be output as a hard copy.
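The comparison step reduces to checking each computed handling-quality parameter against a lower and upper limit for the maneuver type. A minimal sketch follows. The maneuver name, parameter names, and limit values are placeholders. Real limits must be taken from the specification for the aircraft class, flight phase, and level in question.

```python
from dataclasses import dataclass


@dataclass
class Limit:
    parameter: str
    low: float
    high: float


def check_compliance(maneuver, results, limits):
    """Compare computed results with the limits for one maneuver type.

    Returns (parameter, value, low, high, passed) rows suitable for a
    display or hard-copy report.
    """
    rows = []
    for lim in limits[maneuver]:
        value = results[lim.parameter]
        rows.append((lim.parameter, value, lim.low, lim.high,
                     lim.low <= value <= lim.high))
    return rows


# Placeholder limits for illustration only, not quoted from any specification.
limits = {
    "short_period": [
        Limit("damping_ratio", 0.35, 1.30),
        Limit("natural_frequency_rad_s", 1.0, 10.0),
    ],
}
results = {"damping_ratio": 0.28, "natural_frequency_rad_s": 3.1}

for name, value, low, high, passed in check_compliance("short_period", results, limits):
    print(f"{name:26s} {value:6.2f}  [{low}, {high}]  {'PASS' if passed else 'FAIL'}")
```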

The utility module is a general-purpose interactive or batch tool set that can be used on any of the data sets in the processing sequence. The primary utilities in the set are plotting, listing tabular values in the appropriate units, and conditioning to edit wild points, filter, reduce the sample rate, or make manual deletions. Other utilities include curve fitting and cross-plotting of data at fixed intervals.
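Two of the conditioning utilities, wild-point editing and sample-rate reduction, can be sketched as follows. The wild-point editor is a Hampel-style filter that replaces any sample lying far from its local median. The decimator averages non-overlapping blocks, which amounts to a crude low-pass filter followed by downsampling. Both are assumptions about how such utilities might work, not a description of Kohlman's tools, and the thresholds are illustrative.

```python
from statistics import median


def edit_wild_points(x, half_window=2, threshold=3.0, min_scale=1e-9):
    """Replace samples more than `threshold` robust deviations from the local median.

    The robust deviation is 1.4826 * MAD of the window; min_scale keeps the
    test meaningful when the window is nearly constant (MAD close to zero).
    """
    out = list(x)
    n = len(x)
    for i in range(n):
        window = x[max(0, i - half_window):min(n, i + half_window + 1)]
        med = median(window)
        mad = median(abs(v - med) for v in window)
        scale = max(1.4826 * mad, min_scale)
        if abs(x[i] - med) > threshold * scale:
            out[i] = med          # wild point: substitute the local median
    return out


def decimate(x, factor):
    """Reduce the sample rate by averaging non-overlapping blocks of `factor` samples."""
    return [sum(x[i:i + factor]) / factor for i in range(0, len(x) - factor + 1, factor)]


# Usage: one spike in otherwise well-behaved data, then halve the sample rate.
raw = [1.0, 1.1, 0.9, 50.0, 1.0, 1.05, 0.95, 1.02]
clean = edit_wild_points(raw)
half_rate = decimate(clean, 2)
```

A proper anti-aliasing low-pass filter would be a better choice than block averaging when the signal has significant content near the new Nyquist frequency.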

Both databases are available to the aircraft designer for further processing. The propulsion system, for example, can be the subject of a very comprehensive analysis by its designers. Structural loads and stresses can be evaluated in greater detail. Structural dynamics is another subject for the aircraft designer to study.

Rotating Machinery

How you intend to analyze your data can strongly influence how it should be acquired in the first place. Consider the large number of products that can be classified as rotating machinery, such as machine tools, farm equipment, factory equipment including conveyor belts, rotating kilns, and even fans.

As consumers, we encounter many products with electric motors as well as cars and mowers with internal-combustion engines. Because none of these products is perfectly balanced, they all vibrate as they operate. The noise spectrum that is generated can be very complex if multiple elements such as gears, pulleys and belts, and shafts are rotating at different speeds.

Analysis of rotating machinery can be greatly simplified if data is acquired relative to rotational (angular) position rather than time. There are three common ways to provide such a rotational time base:

- A shaft encoder is fitted to the machine to produce a fixed number of pulses per revolution, regardless of speed.
- The function of a shaft encoder is simulated by an electronic circuit synchronized to the rotating element of interest.
- Data, including shaft position, is captured against a very high-speed time base and resampled after acquisition.

Each approach has its merits. A shaft encoder provides accurately positioned pulses. Against this, an encoder that can produce 1,000 pulses per revolution at 5,000 rpm or faster is expensive. Before tests can begin, the encoder has to be coupled to the rotating shaft without introducing resonances or additional loading on the machine being tested.
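The encoder requirement quoted above translates directly into a demanding pulse rate. With $N_{\text{ppr}}$ pulses per revolution and shaft speed $n_{\text{rpm}}$:

$$
f_{\text{pulse}} = N_{\text{ppr}} \cdot \frac{n_{\text{rpm}}}{60} = 1000 \cdot \frac{5000}{60} \approx 83{,}300\ \text{pulses/s},
\qquad
T_{\text{pulse}} = \frac{1}{f_{\text{pulse}}} \approx 12\ \mu\text{s}
$$

so the encoder and its electronics must resolve pulses roughly 12 µs apart, and the pulse rate rises further at higher speeds.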

The difficult part of shaft encoder emulation is maintaining synchronization between the electronic circuit and the shaft rotation. At least one pulse per revolution is required, but more are desirable. The number of times per revolution the electronic time base is resynchronized to the instantaneous shaft speed determines short-term sample placement errors. Resynchronizing many times per revolution—perhaps as often as there are teeth on an engine’s flywheel ring-gear—minimizes these errors.
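The resynchronization trade-off can be illustrated with a simple model of an encoder emulator. A real-time circuit cannot know how long the coming tooth interval will last, so this sketch predicts the pulse spacing from the interval just completed and resynchronizes at every tooth. When the shaft accelerates, the emulated pulses drift away from their true angular positions until the next tooth arrives. More teeth per revolution means shorter intervals and smaller accumulated error. The function and its behavior at each tooth are hypothetical, not a description of any particular circuit.

```python
def emulate_encoder(tooth_times, pulses_per_tooth):
    """Hypothetical encoder emulator driven by ring-gear tooth crossings.

    At each tooth the time base is resynchronized and the spacing for the
    coming interval is predicted from the interval just completed. Pulses
    that would fall at or after the next tooth are dropped. No pulses are
    produced before the second tooth (no interval measured yet), and the
    sketch stops at the last recorded tooth.
    """
    pulses = []
    for k in range(1, len(tooth_times) - 1):
        spacing = (tooth_times[k] - tooth_times[k - 1]) / pulses_per_tooth
        for j in range(pulses_per_tooth):
            t = tooth_times[k] + j * spacing
            if t >= tooth_times[k + 1]:
                break        # next tooth already arrived: resynchronize early
            pulses.append(t)
    return pulses


# Usage: constant speed, then an accelerating shaft (second interval shorter).
steady = emulate_encoder([0.0, 1.0, 2.0, 3.0], pulses_per_tooth=4)
speeding_up = emulate_encoder([0.0, 1.0, 1.8], pulses_per_tooth=4)
# In speeding_up, the last pulse lands at t = 1.75 s, although at constant speed
# over the real interval it belonged at 1.6 s: a short-term placement error.
```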

Sampling at a high rate and then resampling the captured data at uniform angular increments is functionally equivalent, and it can perform better. Because the actual speeds at successive resynchronization points are known after the fact, the resampling algorithm can provide a smooth transition between them. This is more accurate than the step change at resynchronization that may occur in a real-time system. In both cases, shaft position information must be obtained often to ensure accurate sample timing.
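Resampling after acquisition is the basis of what is often called computed order tracking. A minimal sketch follows, assuming one tachometer pulse per revolution and constant speed within each revolution, with angle interpolated linearly in time. A higher-order fit of angle against time across several pulses would give the smoother speed transition described above. The function names are hypothetical.

```python
import math
from bisect import bisect_right


def interp(x, xp, fp):
    """Linear interpolation of fp(xp) at x; xp must be strictly increasing.
    Values outside xp are clamped to the end points."""
    if x <= xp[0]:
        return fp[0]
    if x >= xp[-1]:
        return fp[-1]
    i = bisect_right(xp, x)
    x0, x1 = xp[i - 1], xp[i]
    return fp[i - 1] + (fp[i] - fp[i - 1]) * (x - x0) / (x1 - x0)


def resample_to_angle(t, signal, tach_times, samples_per_rev):
    """Resample a time-sampled signal at uniform shaft-angle increments.

    tach_times holds one pulse per revolution. Because both ends of each
    revolution are known after acquisition, the time at which each target
    angle was reached is interpolated between pulses instead of predicted.
    """
    pulse_angles = list(range(len(tach_times)))          # revolutions at each pulse
    n_out = (len(tach_times) - 1) * samples_per_rev
    resampled = []
    for k in range(n_out):
        angle = k / samples_per_rev                      # target angle, revolutions
        t_k = interp(angle, pulse_angles, tach_times)    # when the shaft got there
        resampled.append(interp(t_k, t, signal))         # signal value at that time
    return resampled


# Usage: constant 1 rev/s, 1 kHz sampling, signal containing only the 3rd order.
t = [i * 0.001 for i in range(2001)]
sig = [math.sin(2 * math.pi * 3 * ti) for ti in t]
angle_data = resample_to_angle(t, sig, [0.0, 1.0, 2.0], samples_per_rev=8)
```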

Relating samples to shaft position instead of time lets you acquire operational data that is independent of engine speed. When the data is viewed in real time, it’s easy to see the changes in peak pressure and ignition timing that occur as rpm increases. In contrast, a display of samples taken on a fixed time base shows a variable number of ignition cycles as engine speed changes. Sure, the peak pressures and timing are changing, but so too is the X-axis of the display.

Ford’s Powertrain and Fuel Subsystems Lab uses a hybrid approach to valve-train analysis. The valve train is mounted on an unpowered test engine driven by an electric motor. A shaft encoder connected to the crankshaft provides a pulse every degree and a separate indication of top dead center (TDC).

Allen Jackson, the engineering technologist in charge of valve-train testing, uses an in-house-designed instrument to generate triggers for an oscilloscope. The scope time base still runs in real-time, but the triggers result from counting encoder pulses and correspond to a number of degrees from TDC. Because he has more than one independent trigger available, Mr. Jackson can observe both the valve opening and closing on the scope display and determine the angular distance between them from the settings on the test instrument. See Figure 2.
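The triggering scheme, counting encoder pulses from TDC while the scope itself runs in real time, can be modeled in a few lines. This sketch assumes the TDC mark coincides with an encoder pulse and that the encoder gives one pulse per degree, as in Ford's setup. `angle_triggers` and the timing values are hypothetical, and the sketch says nothing about how the in-house instrument is actually built.

```python
from bisect import bisect_left


def angle_triggers(encoder_times, tdc_time, trigger_degrees, pulses_per_degree=1):
    """Return {angle_deg: trigger_time} by counting encoder pulses after TDC.

    Assumes the TDC mark coincides with an encoder pulse; angles beyond the
    recorded pulses are skipped.
    """
    start = bisect_left(encoder_times, tdc_time)   # first pulse at or after TDC
    triggers = {}
    for deg in trigger_degrees:
        idx = start + round(deg * pulses_per_degree)
        if idx < len(encoder_times):
            triggers[deg] = encoder_times[idx]
    return triggers


# Usage: one pulse per degree, one degree every 100 microseconds (made-up speed).
encoder = [i * 1e-4 for i in range(720)]
trig = angle_triggers(encoder, tdc_time=0.0, trigger_degrees=[100, 250])
opening, closing = trig[100], trig[250]   # hypothetical valve opening/closing triggers
```

Because the triggers are tied to angle, their separation stays at 150° whatever the speed; only the time between them changes.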

Load-testing a valve-train assembly is not a trivial exercise. “We have designed a proprietary transducer that can record the force that a valve exerts on its seat when it closes,” Mr. Jackson said. “At low speeds, you’re only going to see the valve-spring load value. Of course, as speed increases and resonances occur in the valve train, the loads increase dramatically.

“We can observe when valve bounce takes place and how long it lasts with a resolution of 0.1° of the crankshaft rotation and the corresponding valve-seat load,” he explained. “If the valve-seat loading and the duration of the valve bounce get too large, you’ll start breaking valve stems, rocker arms, or pushrods.”

Order Analysis

Before trying to determine what part of an engine may be faulty, it can be useful to have an overview of the vibration being produced. Order analysis is the rotational-domain counterpart of spectrum analysis in the time domain. In complex mechanisms, the relationships among the moving parts are much more easily discernible in an order analysis display than in a conventional spectrum analyzer output. See Figure 3 in print in the March 2000 issue.

A spectrum analyzer displays harmonics that are integer multiples of a fundamental frequency. In contrast, order analysis displays constant multiples or submultiples of the rotation rate of a reference component. Because samples correspond to angular position, orders are independent of rotational speed; they are determined instead by the physical relationships among components.

For example, “…many interesting orders are noninteger multiples of the first order. A speed reducer has an output shaft order vibration at less than the first order. An automobile engine has order components that are higher ordered noninteger multiples. These may be gear-mesh rates, timing-chain engagement, or valve action, for instance.”1
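Once data has been sampled at uniform angular increments, an ordinary discrete Fourier transform yields an order spectrum instead of a frequency spectrum. Over R whole revolutions, DFT bin k corresponds to order k/R, so noninteger orders such as 2.5 appear as their own lines regardless of shaft speed. A minimal, dependency-free sketch follows. A direct DFT is used for clarity where a real analyzer would use an FFT, and the test signal is synthetic.

```python
import cmath
import math


def order_spectrum(angle_samples, samples_per_rev):
    """Return (order, amplitude) pairs for angle-domain data.

    With R = len(angle_samples) / samples_per_rev revolutions, bin k is
    order k / R; resolution is 1/R orders, and the highest order is
    samples_per_rev / 2.
    """
    n = len(angle_samples)
    revs = n / samples_per_rev
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(angle_samples))
        scale = 2.0 / n if 0 < k < n / 2 else 1.0 / n   # single-sided amplitude
        spectrum.append((k / revs, abs(acc) * scale))
    return spectrum


# Usage: 4 revolutions at 32 samples/rev containing orders 2.5 and 7.
spr, revs = 32, 4
theta = [i / spr for i in range(spr * revs)]            # shaft angle, revolutions
data = [math.sin(2 * math.pi * 2.5 * a) + 0.5 * math.cos(2 * math.pi * 7 * a)
        for a in theta]
spectrum = order_spectrum(data, spr)
peaks = [(order, amp) for order, amp in spectrum if amp > 0.1]
```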

Practical Data Transfer and Display

Statisticians work with tables of numbers, but engineers prefer to see the big picture that a graph presents. Harley Hayden, a research scientist at GE Research Center, said that test results generally are viewed graphically first so that aberrations can be highlighted. Then a point-by-point analysis of the digital details can be performed. If the equipment engineer is at another GE plant, the data analyst often takes a photo with a digital camera and posts it on the Internet. This way, both parties can look at the photo as they discuss it on the phone.

Specific analysis and display methods are negotiated with the equipment designer so that test results can be viewed in the most meaningful format. The analysts start with the data in Excel for common access, then process and display it for each application. Test results are transferred in bulk on media such as PCMCIA cards so that the designer can process and display them at another facility.

Ford also uses a variety of technologies to transfer data. John Jachman, a research engineer at Ford’s Powertrain and Fuel Subsystems Lab, said, “We started off by dumping data to a CD, then we added a high-speed network capability. We actually use both, and we also store data on Jaz drives. We picked a CD because the media is fairly inexpensive, and it lets the designer have almost an archive copy of the data.

“The network allows us to transfer large amounts of data quickly,” he added. “Either with the network or CD method, the design engineer can have the data at his facility and analyze it further with his own computer.”

Like any automated or semi-automated process, data acquisition can appear to be working properly while producing only rubbish. According to Owen Jury, a research engineer at Caterpillar, basic data quality is what matters during real-time analysis. Is the data there? Are the filters correct? Does noise pose a problem? And are the intended test objectives being accomplished, or should the team back up and start over?
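Some of these questions can be partly automated. A minimal sketch of per-channel sanity checks follows, assuming samples arrive as floats with missing values marked as None or NaN. The checks (missing fraction, samples near full scale, a stuck channel, and the RMS of first differences as a rough noise indicator) and the clipping threshold are illustrative choices, not Caterpillar's procedure. `quality_report` is a hypothetical name.

```python
import math


def quality_report(samples, full_scale, clip_fraction=0.99):
    """Quick sanity checks on one channel; thresholds are illustrative.

    Missing samples are marked None or NaN. Returns the fraction missing,
    the number of samples within clip_fraction of full scale, whether the
    channel appears stuck, and the RMS of first differences (a rough
    indicator of high-frequency noise).
    """
    valid = [s for s in samples if s is not None and not math.isnan(s)]
    missing = 1.0 - len(valid) / len(samples) if samples else 1.0
    clipped = sum(1 for s in valid if abs(s) >= clip_fraction * full_scale)
    stuck = len(valid) > 1 and max(valid) == min(valid)
    diffs = [b - a for a, b in zip(valid, valid[1:])]
    diff_rms = math.sqrt(sum(d * d for d in diffs) / len(diffs)) if diffs else 0.0
    return {"missing_fraction": missing, "clipped_samples": clipped,
            "stuck": stuck, "diff_rms": diff_rms}


# Usage: one dropout, one NaN, and one sample near the 10 V full scale.
report = quality_report([0.1, None, 9.95, float("nan"), 0.2], full_scale=10.0)
```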

Playback analysis takes much, much longer than real-time data collection. Caterpillar uses a flexible in-house analysis software package, first developed more than 20 years ago and revised frequently to meet user needs. Analysis starts with a data-vs-time plot for a cursory examination; in some cases, this is all that is needed. It can be followed by a fast Fourier transform (FFT) or power spectral density (PSD) analysis of data vs frequency, and possibly by a comparison of the data with mathematical predictions.
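A power spectral density estimate of the kind mentioned above can be sketched as a single Hann-windowed periodogram. This is an illustration, not Caterpillar's package. It uses a direct DFT for readability and a single segment, whereas practical tools use an FFT and average several segments (Welch's method) to reduce variance. The scaling is chosen so that the PSD integrates to approximately the signal variance. `psd_periodogram` is a hypothetical name.

```python
import cmath
import math


def psd_periodogram(x, fs):
    """One-sided PSD (units**2/Hz) from a single Hann-windowed periodogram.

    Scaled so that sum(psd) * (fs / n) approximates the variance of x.
    """
    n = len(x)
    mean = sum(x) / n
    w = [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]   # periodic Hann
    u = sum(wi * wi for wi in w)                                       # window power
    psd = []
    for k in range(n // 2 + 1):
        acc = sum((xi - mean) * wi * cmath.exp(-2j * math.pi * k * i / n)
                  for i, (xi, wi) in enumerate(zip(x, w)))
        p = abs(acc) ** 2 / (fs * u)
        if 0 < k < n / 2:
            p *= 2.0                                   # fold in negative frequencies
        psd.append((k * fs / n, p))
    return psd


# Usage: 64 samples at 64 Hz of a 2-unit sine at 8 Hz (variance 2 units**2).
fs = 64.0
x = [2.0 * math.sin(2 * math.pi * 8 * i / 64) for i in range(64)]
psd = psd_periodogram(x, fs)
peak_hz = max(psd, key=lambda fp: fp[1])[0]
total_power = sum(p for _, p in psd) * fs / len(x)
```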

Data is displayed on various types of video monitors or strip charts. Some of the display modes are extremely complex to meet the needs of users in analysis of large earth-moving equipment.

The company has designers all over the world, plus several test sites. Data is transferred on tape or other storage medium from a test site to a central analysis lab in Peoria, IL. Results of the analysis are sent to designers on disk as well as on strip charts. The processor of choice for data handling is the PC. A supercomputer is used for math modeling, but its resolution, accuracy, and speed are not required for data observation.

Conclusion

Well-developed data acquisition and analysis procedures are key to successful product development, as evidenced by Ford, GE, Caterpillar, and Kohlman. These companies routinely deal with test scheduling, data collection, and communications between test requesters and data analysts. Try this approach in your own work: You may be able to eliminate many post-acquisition problems by taking a similar broad view that includes all stages of testing, analysis, and reporting.

Reference

1. “Order Analysis,” Hewlett-Packard, Fall 1996–Winter 1997.


Published by EE-Evaluation Engineering

All contents © 2000 Nelson Publishing Inc.

No reprint, distribution, or reuse in any medium is permitted without the express written consent of the publisher.

March 2000