A 20-year veteran of product development shares some thoughts on when to refine or abandon an existing technology for something new.

With advances in technology, it seems obvious that companies should always launch new products. In fact, the entire start-up industry is based on the notion of launching the next disruptive innovation. But is this always the case? When is it better to continue to refine an existing technology, and when is it better to abandon the current solution and look for something new?

Innovation

Disruptive innovation, first championed by Clayton Christensen, basically spells out a set of requirements necessary for a new technology to displace existing products. In his work, he looked at two main areas for disruption: new technology/new market and low-end entry.

In the first case, competition is against nonconsumption. A simple example is the now ubiquitous cell phone where, before its introduction, alternatives such as CB radios were vastly inferior.

In the second case, competition comes from a new value proposition that delivers a product at an entirely different cost structure. An often-cited example is the introduction of an inkjet printer as a lower cost substitute for a laser printer.

These introduction models stand in contrast to sustaining technology advancements. For instance, eking out more capacity from a hard drive or adding a color screen to a mobile phone simply adds functionality to an existing product. Generally speaking, disruptive innovations favor new entrants while sustaining technologies benefit incumbents.

Within these broad categories, additional refinement is needed to help assess the likely success of a technology development and introduction effort. For instance, the adoption of fiber-optic communications links provides an interesting example of the balance between disruptive and sustaining technologies.

Telecommunications Links

Until the late 1970s, the primary methods for long-distance communications consisted of coaxial cable, microwave radio, or occasionally satellite. These transmission methods provided links between a series of switches that processed and routed signals to their appropriate destinations. The problem with these links centered on their limited capacity and high cost of ownership.

With the development of optical cable and transmission electronics, fiber-optic links quickly overtook other media for long-distance communications needs. Although initially higher in installed cost, this disruptive innovation offered vastly superior reliability and the capability to transmit significantly more information over longer distances. The replacement of copper by fiber optics proceeded unabated until the year 2000, when optical links accounted for roughly 98% of all connections longer than a few kilometers.

The intriguing part is the failure of optics to completely penetrate the communications link. By the mid 1990s, it was assumed that optical links would continue to replace copper under the banners of optical backplanes and fiber to the home (FTTH). Obviously, this hasn't happened. So what went wrong?

Optical Backplanes

The large switches and routers that comprise the brains of the communications links include a set of circuit boards that processes and redirects information. As the need for more link capacity grows, so does the need for greater speed and capacity of the circuit boards and their interconnection technology.

Until the mid 1990s, information was passed from board to board via wires or other forms of copper-based interconnects. When the distance between equipment spanned a few dozen meters or more, the electrical signals were translated to optical signals to accommodate the more efficient method of fiber-optic transmission.

As people looked at the growth rate of optical transmission technology vs. that of copper, it seemed inevitable that copper eventually would fail to keep pace with market demands. In essence, the same disruptive technology that replaced copper over long distances would do the same over short distances.

As it turns out, the main flaw in this argument was the wildly optimistic underlying assumption of bandwidth requirements growing at 200% compounded per year. Looking back, actual bandwidth requirements grew at a slower rate, perhaps on the order of 50% per year.

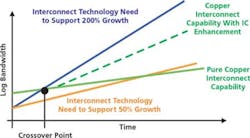

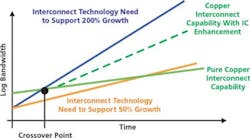

This single change in the growth-rate assumption had a dramatic effect on the need, or lack thereof, for adoption of new technologies. Referring to Figure 1, growth in transmission bandwidth has a direct correlation to the bandwidth needs of interconnect hardware, such as servers and routers.

Figure 1. Growth in Transmission Bandwidth

When the growth rate was predicted to be 200% per year, the need for bandwidth at the backplane and for short reaches outstripped the rate of innovation of copper interconnects. This is depicted in Figure 1 by comparing the expected need (blue line) vs. the expected capability (green line).

Meanwhile, two interesting things happened to keep pushing out the adoption of optics at the backplane. First, with a slower growth rate in the need for transmission bandwidth, the crossover point for copper's demise moved out several years. Second, this delay bought time for signal-conditioning chips, an entirely different type of technology, to augment the capability of copper interconnects. These new chips, in conjunction with incremental improvements in copper interconnects, completely altered the pace of innovation (dashed green line in Figure 1).
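The sensitivity of the crossover point to the growth-rate assumption can be illustrated with a little compound-growth arithmetic. The sketch below is only a rough model, not the analysis behind Figure 1: the 200% and 50% demand growth rates come from the discussion above, while the copper improvement rates (20% and 40% per year) and the tenfold starting headroom are hypothetical values chosen purely for illustration. It also assumes that "200% per year" means demand triples annually.

```python
import math

def crossover_years(demand_growth, capability_growth, headroom):
    """Years until bandwidth demand overtakes interconnect capability.

    demand_growth, capability_growth: fractional annual growth
        (2.0 means 200% per year, i.e. a 3x multiplier each year).
    headroom: today's capability divided by today's demand
        (greater than 1 means copper currently suffices).
    Returns math.inf if demand never catches up.
    """
    if demand_growth <= capability_growth:
        return math.inf
    yearly_ratio = (1 + demand_growth) / (1 + capability_growth)
    return math.log(headroom) / math.log(yearly_ratio)

# Hypothetical inputs (assumptions, not figures from the article).
HEADROOM = 10.0           # copper starts with 10x the needed bandwidth
COPPER_BASELINE = 0.20    # copper improving 20% per year on its own
COPPER_WITH_CHIPS = 0.40  # copper plus signal-conditioning chips

predicted = crossover_years(2.0, COPPER_BASELINE, HEADROOM)   # 200% forecast
actual = crossover_years(0.5, COPPER_BASELINE, HEADROOM)      # ~50% observed
with_chips = crossover_years(0.5, COPPER_WITH_CHIPS, HEADROOM)

print(f"Forecast demand growth:  crossover in {predicted:.1f} years")
print(f"Observed demand growth:  crossover in {actual:.1f} years")
print(f"Observed + faster copper: crossover in {with_chips:.1f} years")
```

Under these illustrative numbers, lowering the demand growth rate moves the crossover from a few years out to roughly a decade, and a modest increase in the rate of copper improvement pushes it out much further still. The exact values depend entirely on the assumed inputs; the point is how strongly the result depends on the ratio of the two growth rates.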

Fiber to the Home

On the FTTH front, the introduction of optics by the incumbent regional Bell companies (RBOCs) was stymied, but for different reasons. As early as the late 1970s, the first trials were held to bring optical links to the home. The promise was higher bandwidth and better quality of service. While technology issues make the component price of optics slightly higher than comparable copper-wire alternatives, this difference doesn't explain the vacuum of FTTH applications.

A bigger issue, and as it turns out a largely insurmountable one, is the financial incentive on the part of the RBOCs. They view the problematic mix of the installed base, service tiers, localized monopolies, regulations, and the potential for stranded assets as a rationale for indefinitely delaying FTTH adoption.

For instance, a commercial T1 line yielding 1.5 Mb/s can run from a few hundred dollars to more than $1,000 per month in some circumstances. Obviously, this is well beyond the reach of the average homeowner. As a result, the price tiers don't support offering wideband service to the low-end segment without the capability to segregate them from the commercial users.

In addition, even if the RBOCs do support the expense of building out their network for new homes, there remains the significant possibility that they will be forced to lease the lines to competitors. In other words, the incumbents bear the risk but may not benefit from the reward.

From an installed-base perspective, it makes even less sense to rip up old wires to replace them with new technology. The process of removing old wires would result in an immediate write-off of the remaining asset value of the installed base.

At the same time, and particularly where cable TV modems pose a threat, digital subscriber line (DSL) service adds incremental life to the old copper network. DSL service pushes the costs to the consumer, who must buy new interface hardware, while the RBOC incurs only minor upgrade costs.

So, a technology that started out as disruptive to the incumbent (fiber optics displacing copper) wound up blocked from further market entry through a combination of advancements in supporting technologies and issues with the overall market structure.

Product Introductions

As we look at what's needed for successful product introduction, either disruptive or sustaining, it's important to include market dynamics and the effects of related technologies. In general, the more networked the technology, such as technologies that derive benefit from multiple users with the same standard, the greater the tendency to suffer from significant delays in technology adoption. At the same time, a networked technology provides a powerful incentive to adopt sustaining technologies at the expense of disruptive ones.

For instance, witness the difficulty in getting an alternative operating system launched into the computer environment. Even products that literally are given away, such as Linux, suffer from slow adoption rates.

It also is important to understand how the product fits into the overall value chain. Looking at the migration from VCRs to DVDs, the product is disruptive, sustaining, or neutral depending on your perspective.

For the magnetic head manufacturers, it was disruptive as the VCR recording mechanism migrated to laser for DVDs. For the movie industry, it was sustaining because not only did customers buy new DVDs, but they also replaced old VCR tapes. For the rental stores, what started out as sustaining is turning out to be disruptive as the price points for new DVDs encroach on their rental business. Lastly, for the metal-enclosure manufacturers, the change from VCRs to DVDs is pretty much transparent.

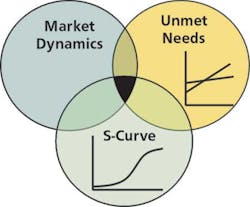

Because the intersection of these dynamic forces affects the product-introduction process, we tend to favor scenarios where there is alignment of three key areas (Figure 2).

Figure 2. Dynamics Affecting Product Introductions

Generally, we favor business areas where the buying chain benefits and aligns to the adoption of the technology. At the same time, the market segment of the immediate user has to have an addressable unmet need. The third segment is defined by location on the product life cycle. Trying to launch products too early usually wastes money and resources and potentially takes you in the wrong direction. Launching a product too late means a direct fight against incumbents.

Conclusion

While the successful launch of a new product is a tricky balance of many competing forces, it remains the most lucrative area for many companies. By understanding these forces and their relationship to the proposed product, it is possible to target your R&D, marketing, and sales efforts to improve the probability of product success.

About the Author

Russell Shaller, the global division leader for Gore's Electronic Products Division, has 20 years of experience in the fields of microelectronic design, manufacturing, business development, and general management. He began his career in the Advanced Technology Group at Westinghouse. In 1993, he joined W. L. Gore and Associates as a product manager for microwave products and later held a number of positions including general manager for the optical products group. Mr. Shaller received a B.S.E.E. from the University of Michigan, an M.S.E.E. from Johns Hopkins University, and an M.B.A. from the University of Delaware. W. L. Gore and Associates, 402 Vieve's Way, Elkton, MD 21922, 410-506-4431, e-mail: [email protected]

All contents © 2004 Nelson Publishing Inc.

No reprint, distribution, or reuse in any medium is permitted without the express written consent of the publisher.