Machine vision systems have been used for years to automate processes. Applications can range from checking the amount of fluid in bottles on production lines to inspecting the number and alignment of pins on a microchip or detecting flaws in fruit before it is packaged for shipping.

Most of these applications deal with very tangible, defined measurements. For example, in bottle inspection, the camera system is triggered via a proximity sensor when the bottle is in front of the camera. Then edge detection simply looks for a sharp transition in intensities to determine how high the liquid is in the bottle.
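The fill-level check described above can be sketched in a few lines. This is a minimal illustration, not the code of any particular vision package: it assumes the image has already been reduced to a single top-to-bottom column of pixel intensities, and the names `find_fill_level` and `inspect_bottle` and the row limits are hypothetical.

```python
def find_fill_level(column):
    """Return the row index just below the sharpest intensity transition.

    `column` is a top-to-bottom list of pixel intensities sampled along a
    vertical line through the bottle (an assumed input format).
    """
    if len(column) < 2:
        raise ValueError("need at least two samples")
    # The absolute difference between neighbouring pixels approximates the gradient.
    diffs = [abs(column[i + 1] - column[i]) for i in range(len(column) - 1)]
    strongest = max(range(len(diffs)), key=diffs.__getitem__)
    return strongest + 1  # first row on the far side of the transition


def inspect_bottle(column, min_row, max_row):
    """Pass if the detected liquid surface lies inside the accepted row band."""
    return min_row <= find_fill_level(column) <= max_row
```

A production system would typically smooth the profile first and average several columns so that a single noisy pixel cannot be mistaken for the liquid surface.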

Similarly, checking the pins on a chip involves triggering the system when the chip is in front of the camera and performing the same edge detection for each pin. The only change would be the addition of a caliper measurement between each of the pins to ensure none are bent. These calculations aren't overly demanding on a processor. As long as the system has enough processing power to navigate the array of pixels from the camera fast enough to keep up with the hardware trigger being received, there aren't many complications. Usually, these inspections end with a pass/fail result that can be fed into a system that rejects the bad parts or into a storage system that maintains a cache of failed inspection images.
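A caliper-style pin check might look like the following sketch. It assumes a one-dimensional intensity profile taken across the row of pins, with bright pins on a dark background; the function names, the threshold, and the pitch tolerance are illustrative assumptions.

```python
def rising_edges(profile, threshold):
    """Indices where the profile crosses `threshold` from dark to bright."""
    return [i for i in range(1, len(profile))
            if profile[i - 1] < threshold <= profile[i]]


def pins_ok(profile, threshold, expected_count, expected_pitch, tolerance):
    """Pass/fail check: correct number of pins and even spacing between them."""
    edges = rising_edges(profile, threshold)
    if len(edges) != expected_count:
        return False  # missing or extra pin
    # Caliper measurement: distance in pixels between neighbouring pin edges.
    pitches = [b - a for a, b in zip(edges, edges[1:])]
    return all(abs(p - expected_pitch) <= tolerance for p in pitches)
```

The Boolean result corresponds to the pass/fail output that would be handed to the reject mechanism or to the failed-image store.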

In recent years, however, more advanced computer components and image acquisition algorithms have allowed engineers and scientists to build machine vision systems for applications that previously weren't even considered. Video surveillance, for example, often is done with multiple cameras and an individual watching video screens that receive these images. Even with multiple people watching the monitors, this can be a daunting task.

People Tracking

The demand for a smart surveillance system is increasing. At many places, including subway stations, airports, and theme parks, there are far too many cameras installed to implement real-time observation by human operators.

The Institute for Digital Image Processing at Joanneum Research recently designed and implemented high-level computer vision algorithms for object detection, classification, and tracking applications. Using these algorithms, the institute tried to address topics such as how to detect and track pedestrians in video streams.

This was no simple inspection with a pass/fail result. It involved tracking objects of different sizes, velocities, accelerations, directions, and colors. Unlike bottles, microchips, or fruit, each person moved independently and unpredictably, adding another level of difficulty to detecting and tracking people with a computer-based application.

Joanneum Research has a long history of working with vision acquisition and analysis algorithms. While creating an application to sort recycled plastic, it started developing shape-recognition algorithms using Fourier transforms to classify objects.

Fourier features are detectable regardless of rotation, translation, or scale changes of the original pattern. Using Fourier features, certain objects commonly found in recycle sorting could be easily detected, reducing development time and increasing the accuracy of the sorting algorithm.
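One common way to obtain such invariant features is through Fourier descriptors of an object's outline. The sketch below is a generic illustration and not Joanneum Research's implementation. It assumes the contour is a closed list of (x, y) points traversed counterclockwise, and the function name and number of coefficients are hypothetical.

```python
import cmath
import math


def fourier_descriptor(contour, n_coeffs=8):
    """Magnitude-based Fourier descriptor of a closed contour.

    Dropping coefficient 0 removes translation, dividing by |coefficient 1|
    removes scale, and keeping only magnitudes removes rotation and the
    choice of starting point.
    """
    n = len(contour)
    if n < n_coeffs + 2:
        raise ValueError("contour has too few points for n_coeffs")
    z = [complex(x, y) for x, y in contour]
    # Plain discrete Fourier transform of the complex contour signal.
    coeffs = [sum(z[k] * cmath.exp(-2j * math.pi * u * k / n) for k in range(n)) / n
              for u in range(n_coeffs + 2)]
    scale = abs(coeffs[1])
    if scale == 0:
        raise ValueError("degenerate contour")
    return [abs(coeffs[u]) / scale for u in range(2, n_coeffs + 2)]
```

Two outlines can then be compared by the distance between their descriptor vectors, no matter how the object is positioned, rotated, or scaled in the image.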

Joanneum Research developed this system with the National Instruments LabVIEW graphical programming environment and the NI IMAQ Vision library. NI LabVIEW has built-in fast Fourier functions to develop and test the sorting algorithms. The NI IMAQ Vision library contains commonly used machine vision algorithms.

In a later project, Joanneum Research designed a new set of shape analysis functions based on principal components analysis (PCA), a way to map higher dimensional data sets to a lower dimension for analysis. PCA is the optimal linear transformation for retaining the subspace with the largest variance. It is also computationally intensive and was not practical in real time until faster computer hardware became available.
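To make the idea concrete, the following sketch finds the first principal component of a small data set by power iteration on the covariance matrix. It is a minimal illustration only: real systems would use an optimized linear algebra library, the function names are hypothetical, and the fixed starting vector is an assumption that fails in the rare case where it is exactly perpendicular to the answer.

```python
import math


def principal_axis(samples, iterations=100):
    """Return (mean, unit vector) of the direction with the largest variance."""
    n, dim = len(samples), len(samples[0])
    if n < 2:
        raise ValueError("need at least two samples")
    mean = [sum(s[j] for s in samples) / n for j in range(dim)]
    centered = [[s[j] - mean[j] for j in range(dim)] for s in samples]
    # Sample covariance matrix of the centered data.
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(dim)]
           for a in range(dim)]
    v = [1.0 / math.sqrt(dim)] * dim  # assumed starting guess
    for _ in range(iterations):
        # Power iteration: repeated multiplication converges to the top eigenvector.
        w = [sum(cov[a][b] * v[b] for b in range(dim)) for a in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return mean, v


def project(sample, mean, axis):
    """Coordinate of `sample` along the principal axis (the reduced dimension)."""
    return sum((sample[j] - mean[j]) * axis[j] for j in range(len(axis)))
```

Each sample is thereby reduced to one number along the axis of greatest variance; further components can be found the same way after removing the first from the data.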

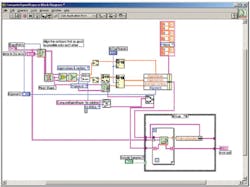

Another part of this set of algorithms is something called the eigenshape principle, which Joanneum Research used to describe complex shapes with just a few simple parameters. Figure 1 shows a LabVIEW diagram of a routine to compute eigenshapes. The eigenshape principle was an ideal solution for object tracking because vehicles or people could be located regardless of color, speed, direction, or consistency in motion with just a few parameters.

By using an iterative process to find and compare these parameters to the existing background template, all objects in a camera field of view that were not a static part of the environment could be effectively located. This process is known as the active shape model (ASM), which Joanneum Research completely prototyped and implemented in LabVIEW and IMAQ Vision.

Joanneum Research also conducted motion detection and tracking in its surveillance-based lab. It started with background subtraction methods commonly used for this type of application and implemented Gaussian mixture models coded as formula nodes for easy insertion into existing code.
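The principle behind statistical background subtraction can be shown with a deliberately simplified model. The sketch below keeps a single running Gaussian per pixel rather than the mixture of Gaussians described above, and it is written in Python rather than as a LabVIEW formula node. The class name, learning rate, and thresholds are assumptions chosen for illustration.

```python
class PixelBackground:
    """Running single-Gaussian background model for one grayscale pixel."""

    def __init__(self, initial, variance=25.0, learning_rate=0.05, k=2.5,
                 min_variance=4.0):
        self.mean = float(initial)
        self.var = float(variance)
        self.alpha = learning_rate
        self.k = k                      # threshold in standard deviations
        self.min_var = min_variance     # floor so the model never becomes too strict

    def update(self, value):
        """Return True if `value` is foreground; adapt the model otherwise."""
        diff = value - self.mean
        is_foreground = diff * diff > (self.k ** 2) * self.var
        if not is_foreground:
            # Only background observations are blended into the model.
            self.mean += self.alpha * diff
            self.var = max((1 - self.alpha) * self.var + self.alpha * diff * diff,
                           self.min_var)
        return is_foreground


def foreground_mask(models, frame):
    """Apply one model per pixel; `models` and `frame` are same-sized 2-D lists."""
    return [[m.update(p) for m, p in zip(model_row, pixel_row)]
            for model_row, pixel_row in zip(models, frame)]
```

A full mixture model keeps several such Gaussians per pixel with weights, which lets it tolerate backgrounds that alternate between states, such as swaying foliage or flickering lights.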

For motion detection in any real-world surveillance system, it is essential to have some idea of where objects are going. The algorithms used for detecting optical flow are numerous.

To have an optimized solution for both efficiency and accuracy in a pedestrian tracking application, Joanneum Research chose to implement the Kanade-Lucas-Tomasi (KLT) point-tracking algorithm. The KLT algorithm uses local gradient and image differences to calculate the motion of small parts from one image to the next in the video stream.
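The core of such a tracker is a small least-squares problem built from local gradients and the frame-to-frame image difference. The following sketch shows a single Lucas-Kanade step for one window. It is a simplified illustration, not the institute's code: the full KLT tracker also selects good feature points, iterates, and usually works on an image pyramid. The function name and window radius are hypothetical, and images are assumed to be 2-D lists indexed as `image[y][x]`.

```python
def lucas_kanade_step(prev, curr, cx, cy, radius=3):
    """Estimate the (u, v) motion of the window centered at (cx, cy).

    Returns None when the window has too little texture to solve for motion.
    """
    height, width = len(prev), len(prev[0])
    if not (radius + 1 <= cx < width - radius - 1 and
            radius + 1 <= cy < height - radius - 1):
        raise ValueError("window plus gradient border must lie inside the image")
    gxx = gxy = gyy = bx = by = 0.0
    for y in range(cy - radius, cy + radius + 1):
        for x in range(cx - radius, cx + radius + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # horizontal gradient
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # vertical gradient
            it = curr[y][x] - prev[y][x]                  # temporal difference
            gxx += ix * ix
            gxy += ix * iy
            gyy += iy * iy
            bx -= ix * it
            by -= iy * it
    det = gxx * gyy - gxy * gxy
    if abs(det) < 1e-12:
        return None  # gradients do not constrain motion in both directions
    # Solve the 2x2 system [[gxx, gxy], [gxy, gyy]] * (u, v) = (bx, by).
    u = (gyy * bx - gxy * by) / det
    v = (gxx * by - gxy * bx) / det
    return u, v
```

The determinant test reflects why point selection matters: a window on a uniform surface or a straight edge does not constrain motion in both directions, so it cannot be tracked reliably.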

Once a moving item in the image was located through KLT algorithms, it was evaluated to determine if it was a person, vehicle, or some other object in the camera field of view. To classify the items, first the entire object that was moving had to be identified.

To do this, Joanneum Research extracted an appropriately sized section of the original image, identified features in this area, and applied a classifier to determine if the object was a person, a vehicle, or just a component of the background. PCA was used for vehicle detection, and Gabor filters and histogram-of-gradients features were used for pedestrian detection. All of these methods, like face detection with eigenfaces algorithms, create subregions that are easily stored for further analysis.

Summary

Combining the different algorithms and functions it had developed, Joanneum Research created a prototype for pedestrian detection and tracking. Figure 2 shows a composite of a current video frame with superimposed tracks of several people. In each frame of the video, pedestrian candidates were extracted using ASMs, and the resulting shape candidates were verified with the pedestrian classifier algorithms.

To track a person from frame to frame, Joanneum Research calculated the person's motion with the KLT point-tracking algorithm and verified the person's location with a texture-similarity measure based on co-occurrence matrices. Then data structures were used to handle the storage of tracks, filter and recognize duplicate pedestrians, and manage the appearance and disappearance of objects.
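A texture check of this kind can be illustrated with gray-level co-occurrence matrices. The article does not specify the exact similarity measure, so the sketch below assumes histogram intersection between two normalized matrices. The function names and the single horizontal offset are hypothetical, and patches are assumed to be 2-D lists of integers already quantized to `levels` gray levels.

```python
def cooccurrence(patch, levels, dx=1, dy=0):
    """Normalized co-occurrence matrix for pixel pairs separated by (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    height, width = len(patch), len(patch[0])
    for y in range(height):
        for x in range(width):
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height:
                # Count how often gray level a has gray level b as its neighbour.
                counts[patch[y][x]][patch[ny][nx]] += 1
                total += 1
    if total == 0:
        raise ValueError("patch too small for the chosen offset")
    return [[c / total for c in row] for row in counts]


def texture_similarity(patch_a, patch_b, levels):
    """Histogram intersection of two co-occurrence matrices, from 0 to 1."""
    pa = cooccurrence(patch_a, levels)
    pb = cooccurrence(patch_b, levels)
    return sum(min(a, b) for row_a, row_b in zip(pa, pb)
               for a, b in zip(row_a, row_b))
```

A tracker could compare the patch at the predicted location with the patch stored for the track and accept the match only when the similarity exceeds a chosen threshold.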

About the Author

Matt Slaughter is a product engineer for National Instruments and manages the NI Vision product line. Previously, he worked as an applications engineer for real-time embedded applications and industrial communications protocols. Mr. Slaughter holds a B.S. in computer engineering from Texas A&M University. National Instruments, 11500 N. Mopac Expwy., Austin, TX 78759, 512-683-0100

February 2007