Many manufacturing managers would prefer to avoid thinking about circuit board test, often regarding it as a necessary evil. "It doesn't add value," they assert. "We only test because we have to," goes the refrain. Even so, the worldwide electronics manufacturing community spends billions annually on PCB test in equipment purchases, ongoing applications and support costs, personnel, rework, scrap, and overhead.

OEMs and electronics manufacturing services providers use lean initiatives and move production to lower-cost geographies to drive down cost and widen razor-thin margins. However, test is largely exempt from serious cost-reduction efforts, apart from downsizing test-engineering staffs. The test community has failed to address the dramatic changes of the past decade, continues to rely on the trusted methods of the 1990s, and generally remains caught up in the fear of change that seems to pervade our industry.

Three major issues have changed the board test landscape over the past decade: proliferation of in-system programmable (ISP) devices, loss of access for test probes, and the shift in the manufacturing fault spectrum.

Ubiquitous ISP Devices

Designers are using ISP devices such as embedded microcontrollers, serial flash, and FPGAs in just about every type of product to meet both cost goals and shorter design cycles. ISP chips also let designers add enhanced features to new products with minimal hardware redesign.

Steadily increasing ISP device functionality and memory size have allowed circuit boards to shrink, producing smaller boards with higher circuit density even as product functionality grows.

To maintain throughput, manufacturing engineers now prefer to produce multiple smaller boards in a panel. The combination of larger memories and greater panelization has led to a geometric increase in the amount of data that must be programmed onto a panel of boards containing ISP devices. This requirement poses a throughput challenge for the traditional methods of programming ISP devices: both dedicated ISP programming systems and in-circuit testers that incorporate ISP capability.
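The growth in data volume is multiplicative: boards per panel times ISP devices per board times bytes per device. The minimal Python sketch below makes that arithmetic concrete. The panel sizes, memory sizes, and the 100 KiB/s effective programming rate are illustrative assumptions, not figures from any particular programmer or tester, and `panel_bytes` and `programming_seconds` are hypothetical helper names.

```python
# Illustrative sketch: how panelization and larger ISP memories multiply the
# data that must be programmed per panel. All numbers used here are assumptions.

KIB = 1024
MIB = 1024 * KIB


def panel_bytes(boards_per_panel: int, devices_per_board: int, bytes_per_device: int) -> int:
    """Total bytes to program onto one panel of boards."""
    return boards_per_panel * devices_per_board * bytes_per_device


def programming_seconds(total_bytes: int, bytes_per_second: float, parallel_sites: int = 1) -> float:
    """Programming time if the data is split evenly across parallel programming sites."""
    if parallel_sites < 1:
        raise ValueError("parallel_sites must be at least 1")
    return total_bytes / (bytes_per_second * parallel_sites)


if __name__ == "__main__":
    rate = 100 * KIB  # assumed effective programming rate per site, bytes/s
    old_panel = panel_bytes(boards_per_panel=2, devices_per_board=1, bytes_per_device=1 * MIB)
    new_panel = panel_bytes(boards_per_panel=8, devices_per_board=1, bytes_per_device=8 * MIB)
    print(new_panel // old_panel)                                  # 32x more data per panel
    print(programming_seconds(old_panel, rate))                    # about 20 s
    print(programming_seconds(new_panel, rate))                    # about 655 s on one site
    print(programming_seconds(new_panel, rate, parallel_sites=8))  # about 82 s on 8 sites
```

In this hypothetical case, four times as many boards per panel and eight times the memory per device yield 32 times the data. Parallel (gang) programming is one way to recover throughput; whether a given programmer or in-circuit tester supports it is equipment-specific.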

Loss of Access

Board testing pundits have been forecasting the inexorable loss of electrical access required for in-circuit testing and the rise of boundary scan since the mid-1980s. While it has taken at least a decade longer than many thought it would, boundary scan is gaining popularity as many products become increasingly complex and their boards increasingly dense and inaccessible.

While in-circuit test (ICT) retains its formidable economic advantages over alternatives, boundary scan and automated optical and X-ray inspection will grow alongside but not replace ICT. With ICT still squarely placed in the manufacturing and test flow, demands on platform flexibility will increase.

The Shifting Landscape of Faults

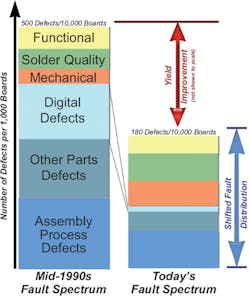

Device and circuit board technologies have evolved remarkably during the past 10 years, substantially changing the magnitude and distribution of faults that occur during the manufacturing process. Even as physical dimensions shrink (many components now measure 1 mm × 2 mm), placement density increases, and narrower traces are routed closer together, automated assembly and improved component quality have produced far higher manufacturing yields.

Along with higher yield has come a significant redistribution of the types of defects that occur. This fault spectrum shift, in turn, has noteworthy implications for test strategy, especially ICT. Root causes of the fault spectrum shift include the following:

• The component defect rate, usually measured in defects per million opportunities (DPMO), has decreased substantially, especially for digital ICs. Ten to 15 years ago, digital failures accounted for upwards of 35% of total faults. Today, digital defects make up less than 2.3% of board faults: at a 98% board yield, that is roughly one digital defect in every 2,200 boards.1

• The automated SMT process is subject to fewer process defects in placing parts on boards than the older through-hole process. Placement errors now are a smaller proportion of the total fault spectrum.

• Solder-related connectivity problems (opens) tend to occur more frequently in the SMT process than do the solder bridges (shorts) that characterized the through-hole process.

• Most solder-related defects now are latent or quality-related, such as cold solder joints, component tombstoning, and billboarding. Taken as a whole, solder-related defects now make up a larger proportion of the SMT fault spectrum.

• Smaller, denser connectors and tighter physical tolerances, especially in small, hand-held products such as mobile phones and MP3 players, have resulted in mechanical defects becoming a proportionally greater percentage of today's fault spectrum.
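The digital-defect figures in the first item above (a 2.3% share of faults at a 98% yield, about one in 2,200 boards) can be cross-checked with a few lines of Python. This sketch assumes, as a simplification, that each failing board carries exactly one fault, so the fraction of failing boards (1 - yield) approximates faults per board. The function name is hypothetical.

```python
def boards_per_digital_defect(board_yield: float, digital_share: float) -> float:
    """Approximate number of boards built per digital-IC defect.

    Simplifying assumption: each failing board has exactly one fault, so
    faults per board is approximately (1 - board_yield).
    """
    if not (0.0 <= board_yield < 1.0 and 0.0 < digital_share <= 1.0):
        raise ValueError("yield must be in [0, 1) and share in (0, 1]")
    digital_defects_per_board = (1.0 - board_yield) * digital_share
    return 1.0 / digital_defects_per_board


if __name__ == "__main__":
    # 98% yield and a 2.3% digital share, as cited in the text
    print(round(boards_per_digital_defect(0.98, 0.023)))  # 2174, i.e. roughly 1 in 2,200
```

Because the text says "less than 2.3%," the true figure is at least one digital defect per roughly 2,200 boards, and more boards per defect if the share is lower.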

Once we accept the reality of the shifted fault spectrum, we're motivated to move on to the next step: collecting and using data to understand its impact on real-world test strategies.

Collecting and Analyzing Fault Spectrum Data

The fault spectrum is driven by board design and layout, the components used, and the assembly process itself. Unfortunately, there is no handy fault spectrum measurement instrument as such, leaving us with the task of collecting real data from a real manufacturing process or at least estimating what that data would be.

Actual component and process defect rates often are available, especially in high-volume production environments. We can apply these rates against the component and solder-joint counts for a given board to calculate the projected fault spectrum of that board.
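A minimal Python sketch of that projection: multiply each defect rate, expressed in DPMO, by the number of opportunities for that defect on the board to get expected defects per board. The fault classes, DPMO values, and opportunity counts below are hypothetical placeholders, not measured data. The yield estimate additionally assumes defects occur independently (a Poisson model), which is a common simplification rather than something the text states.

```python
import math

# Hypothetical per-class defect rates (DPMO) and per-board opportunity counts.
DPMO = {"solder opens": 20.0, "solder shorts": 5.0, "placement": 50.0,
        "digital IC": 10.0, "mechanical": 200.0}
OPPORTUNITIES = {"solder opens": 2000, "solder shorts": 2000,  # solder joints
                 "placement": 400,                              # placed parts
                 "digital IC": 10,                              # digital ICs
                 "mechanical": 6}                               # connectors


def defects_per_board(dpmo: dict[str, float], opportunities: dict[str, int]) -> dict[str, float]:
    """Expected defects per board for each fault class (DPMO x opportunities / 1e6)."""
    return {cls: dpmo[cls] * opportunities[cls] / 1_000_000 for cls in dpmo}


def poisson_first_pass_yield(per_board: dict[str, float]) -> float:
    """Probability that a board has zero defects, assuming independent (Poisson) defects."""
    return math.exp(-sum(per_board.values()))


if __name__ == "__main__":
    per_board = defects_per_board(DPMO, OPPORTUNITIES)
    for cls, n in per_board.items():
        print(f"{cls:14s} {n:.4f} expected defects per board")
    print(f"projected yield: {poisson_first_pass_yield(per_board):.3f}")
```

Substituting a plant's own DPMO data and a board's bill of materials and joint counts turns the same arithmetic into a projected fault spectrum before the board is ever built.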

A typical metric describing the magnitude of each fault class is the number of defects per 10,000 boards built. The fault spectrum distribution itself is the relative magnitude of each fault class, summing to 100% of total defects.
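Both metrics are simple to compute from expected defects per board. In this Python sketch, the per-board figures are made up for illustration (they are not the data behind Table 1), and the helper names are hypothetical.

```python
def per_10k_boards(per_board: dict[str, float]) -> dict[str, float]:
    """Scale expected defects per board to defects per 10,000 boards built."""
    return {cls: rate * 10_000 for cls, rate in per_board.items()}


def fault_spectrum(per_10k: dict[str, float]) -> dict[str, float]:
    """Convert defects per 10,000 boards into percentages that sum to 100."""
    total = sum(per_10k.values())
    if total <= 0:
        raise ValueError("at least one defect count must be positive")
    return {cls: 100.0 * n / total for cls, n in per_10k.items()}


if __name__ == "__main__":
    # Hypothetical expected defects per board, one entry per fault class
    per_board = {"solder opens": 0.04, "solder shorts": 0.01, "placement": 0.02,
                 "digital IC": 0.001, "mechanical": 0.029}
    counts = per_10k_boards(per_board)
    for cls, pct in fault_spectrum(counts).items():
        print(f"{cls:14s} {counts[cls]:6.0f} per 10,000 boards  {pct:5.1f}%")
```

The per-10,000 figures describe magnitude, while the percentages describe distribution; the two views are compared side by side when evaluating an old and a new process.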

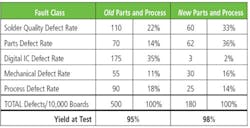

Table 1 compares the two fault spectrums derived from a hypothetical collection of failure data categorized into five fault classes collected across a family of related board types. The old column describes fault rates for boards built in a traditional through-hole manufacturing environment. The new column shows fault rates for the same categories for a family of newer boards built in a modern SMT process.

The upshot for the new boards built in the new process is a defect rate just 36% of the old process's rate, as well as a significantly shifted distribution across the same five fault classes. Both the higher yield and the shifted fault spectrum affect the economics of ICT and the choice of an ICT system (Figure 1).

Once we know the fault spectrum, we have objective data on which to base a rational test strategy decision. For example, as shown in Table 1, digital IC failures made up 35% of total defects in the old fault spectrum but just 2% in the new one. Conversely, the proportion of solder quality defects has increased from 22% to 33% of total defects.
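The two shares quoted above say more once the overall 36% ratio is applied: a fault class's share can rise even while its absolute count falls. This Python sketch uses only the percentages stated in the text; the function name is illustrative.

```python
def absolute_ratio(old_share_pct: float, new_share_pct: float, total_ratio: float) -> float:
    """Ratio of new to old absolute defect counts for one fault class.

    new_count / old_count = (new_share * new_total) / (old_share * old_total)
                          = (new_share / old_share) * total_ratio
    """
    if old_share_pct <= 0:
        raise ValueError("old share must be positive")
    return (new_share_pct / old_share_pct) * total_ratio


if __name__ == "__main__":
    TOTAL_RATIO = 0.36  # new total defect rate is 36% of the old one
    for name, old, new in [("digital IC", 35.0, 2.0), ("solder quality", 22.0, 33.0)]:
        print(f"{name}: {absolute_ratio(old, new, TOTAL_RATIO):.1%} of the old count")
```

Digital IC defects fall to about 2% of their former count. Solder quality defects, despite growing from 22% to 33% of the total, still fall to about 54% of their former count; their share grows because the other classes shrank faster.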

These two observations imply that the test strategy for the old board family calls for using an in-circuit tester with substantial digital vector test capability. However, the test strategy for the new board family requires far greater attention to solder defects, suggesting visual or X-ray inspection.

At the same time, digital test capability can be effectively eliminated. This means the test manager can make an intelligent trade-off in test and inspection technology. One reasonable approach would be to purchase a low-cost in-circuit tester, which reduces cost by eliminating the overhead involved with digital vector test, and use the resulting savings to offset an investment in automated X-ray or visual inspection equipment.

By examining failure rates and distribution, we gain insight into how to design and implement an optimum board test strategy that addresses the faults at hand. A test strategy should provide sufficient fault detection capability to identify defects that actually occur while eliminating the test coverage and overhead cost of fault classes that rarely, if ever, happen.
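One way to make that rule explicit is a threshold on the fault spectrum: keep a technique only if it targets at least one fault class above some share of total defects. In this Python sketch, the mapping of techniques to fault classes, the spectrum values, and the 5% threshold are all hypothetical. A real decision would also weigh the cost of an escape in each class, not just its frequency.

```python
def select_techniques(spectrum_pct: dict[str, float],
                      technique_targets: dict[str, set[str]],
                      threshold_pct: float) -> tuple[list[str], list[str]]:
    """Split techniques into (keep, drop) based on which fault classes are significant."""
    significant = {cls for cls, pct in spectrum_pct.items() if pct >= threshold_pct}
    keep = sorted(t for t, targets in technique_targets.items() if targets & significant)
    drop = sorted(t for t in technique_targets if t not in keep)
    return keep, drop


if __name__ == "__main__":
    # Hypothetical new-process fault spectrum (percent of total defects)
    spectrum = {"solder opens": 30.0, "solder shorts": 10.0, "solder quality": 33.0,
                "placement": 15.0, "digital IC": 2.0, "mechanical": 10.0}
    # Hypothetical mapping of test/inspection techniques to the classes they target
    targets = {
        "ICT analog (opens/shorts)": {"solder opens", "solder shorts"},
        "ICT digital vector test": {"digital IC"},
        "AOI/AXI inspection": {"solder quality", "placement"},
        "functional test": {"mechanical"},
    }
    keep, drop = select_techniques(spectrum, targets, threshold_pct=5.0)
    print("keep:", keep)
    print("drop:", drop)  # ['ICT digital vector test']
```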

Test strategies that worked well a decade ago do not match the needs of the current fault spectrum. Today, a much more flexible approach is needed. Relying just on the traditional ICT and functional test standbys is insufficient.

Test engineers must deploy a variety of techniques to achieve the test coverage required for today's dense and complex boards. They must determine which multistep test strategy is best and then implement each of those test steps while working within a flat or declining budget and with reduced staff (Figure 2).

To be successful in dealing with the shifted fault spectrum, do not waste time and money on test steps that do not add fault coverage. If your board doesn't need automated X-ray inspection, don't do it. It will just pull resources from test steps that actually can help improve quality.
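Whether a test step adds fault coverage can be estimated by running the expected defects through the planned sequence of steps and recording what each step catches. This Python sketch assumes each step detects a fixed fraction of the remaining defects in each class it covers; the defect counts, step order, and coverage fractions are hypothetical.

```python
def run_strategy(defects: dict[str, float],
                 steps: list[tuple[str, dict[str, float]]]) -> tuple[list[tuple[str, float]], float]:
    """Return (defects caught per step, defects escaping all steps).

    defects: expected defects per 10,000 boards for each fault class.
    steps:   ordered (step name, {fault class: fraction of remaining defects detected}).
    """
    remaining = dict(defects)
    caught_by_step = []
    for name, coverage in steps:
        caught = 0.0
        for cls in list(remaining):
            fraction = coverage.get(cls, 0.0)
            if not 0.0 <= fraction <= 1.0:
                raise ValueError(f"coverage for {cls!r} must be in [0, 1]")
            detected = remaining[cls] * fraction
            remaining[cls] -= detected  # later steps only see what this step missed
            caught += detected
        caught_by_step.append((name, caught))
    return caught_by_step, sum(remaining.values())


if __name__ == "__main__":
    defects = {"solder opens": 40.0, "solder shorts": 10.0, "solder quality": 33.0,
               "digital IC": 2.0, "mechanical": 15.0}
    steps = [("AXI", {"solder opens": 0.9, "solder shorts": 0.9, "solder quality": 0.8}),
             ("ICT", {"solder opens": 0.9, "solder shorts": 0.95, "digital IC": 0.9}),
             ("functional test", {"mechanical": 0.7, "digital IC": 0.5})]
    report, escapes = run_strategy(defects, steps)
    for name, n in report:
        print(f"{name:16s} catches {n:6.2f} per 10,000 boards")
    print(f"escapes: {escapes:.2f} per 10,000 boards")
```

A step whose catch is close to zero for a given board's fault spectrum adds cost without adding coverage, which is exactly the kind of step the text recommends dropping. Step order matters in this model, because each step only sees the defects that earlier steps missed.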

The key to making the right decisions in the test-strategy phase is the willingness to analyze the data and change the approach based on the data. Sticking to the tried-and-true methods of a previous decade will always generate incomplete fault coverage and inefficient testing. So, why is it so hard for test engineers to change?

Everything else about electronics manufacturing has changed. Circuit assemblies are denser, smaller, and increasingly portable. Consumer electronics, where every penny of cost matters, has eclipsed traditional electronics mainstays such as computers and communications equipment in dollar volume and brevity of product life. Much electronics manufacturing has moved to Asia. So why the paradox of advancing technology everywhere else and test stuck in the 1990s, especially in North America and Europe?

The classic rationale for resisting change is the timeworn refrain: "If it ain't broke, don't fix it." As widely practiced today, test is not exactly broke. But for many manufacturers, board test left unexamined could well contribute to going broke in the economic sense of the word.

Three basic concerns help explain why circuit board test remains generally unexamined and stuck in the past: fear, a data vacuum, and expensive complexity.

The Paralysis of Fear

When managers provide multiple justifications for leaving test as is, fear doubtless lurks at the back of their minds. A product that fails in the field is an economic and marketing disaster, costing 10 to 20 times more to retrieve, repair, or replace than it did to build in the first place.

Even after the product is repaired or replaced, that failure can tarnish even the most powerful brand reputation. The fear of shipping bad products makes otherwise cost-rational executives willing to pay almost any price for comprehensive test insurance. This usually includes more test features and capabilities than they'll ever use because doing so promises to reduce the risk of product failure in the customer's hands.

But fear crowds out objective thinking. Instead of a 360-degree view of test as a set of costs and benefits, managers come to view test only for its downside. This downside-only view distorts motivation and inhibits action.

Manufacturing and test managers stick resolutely with the test strategy or tester that has always worked in the past. But in light of changing board, device, and process technology, what appears to work is, at best, generating excess cost and, at worst, creating an illusion of complete test where, in fact, it may be inadequate.

Test seen only as having a downside affects the motivation of those actually doing the testing. While looking constantly over their shoulders at downsizing, outsourcing, and shrinking capital budgets, test managers and engineers are relentlessly pressured to make ever-shorter deadlines on ever-smaller budgets.

These are the very people who understand the weaknesses of the current process and, more importantly, know how to improve it. Not only is there no time or budget to experiment with new, more productive approaches, but management's view of test as a necessary evil also invariably snuffs out initiative.

OEMs using contract manufacturers to build and test their products face another, even more costly, motivation issue. Though some contract manufacturers are proactive enough to identify and propose test cost reduction to their customers, most have zero incentive to do so.

Nonrecurring engineering costs such as developing test jobs generally are charged back to the OEM, regardless of their magnitude. Contract manufacturers also want to use the testers they already own, whether or not those testers are the most cost-effective choice for the OEM's job. Some contract manufacturers even treat their test engineering groups as profit centers, motivating those groups to maximize revenue, which means further increased cost for their OEM customers.

The Data Vacuum

Manufacturing engineers and managers generally are scrupulous about collecting data regarding the value-added portions of the process. Statistical process control (SPC), process capability (Cpk), yields, and other metrics are tracked routinely.

Test yields usually are available, but it is a rare manufacturer who delves below those yield numbers to examine the root causes of yield loss, particularly the type and distribution of the defect classes that make up the fault spectrum. Yet the fault spectrum is a primary driver of test strategy: it tells the test manager which defect classes need to be tested and which test technology is most suitable for diagnosing them.

Buying this universal test insurance rather than using data to match tester capability to what needs testing has been especially lucrative for test-equipment suppliers. They are only too happy to sell their most capable and expensive systems. But much of this capability ends up unused on manufacturing test floors, even though it keeps generating overhead expense.

With 1990s test approaches, about half of the cost of buying, maintaining, and using traditional in-circuit testers is tied up in sophisticated digital vector driver/detector circuitry. This was an appropriate choice a decade ago when digital faults occurred frequently, but today those faults are measured in the low ppm range. For the vast majority of electronics manufacturers, paying for this unused digital capability is like paying for flood insurance in the Sahara Desert.
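The flood-insurance comparison can be quantified as the annual cost of the digital capability divided by the digital defects it actually detects. In this Python sketch, the 50% digital share of tester cost comes from the text; the annual tester cost, board volume, defect rate (interpreted here, as an assumption, as digital-IC defects per million boards), and coverage are hypothetical inputs to be replaced with a plant's own numbers.

```python
def cost_per_detected_defect(annual_tester_cost: float, digital_cost_share: float,
                             boards_per_year: int, digital_defect_ppm: float,
                             digital_coverage: float) -> float:
    """Annual cost of digital test capability per digital defect it detects.

    digital_defect_ppm: digital-IC defects per million boards built (assumed unit).
    digital_coverage:   fraction of those defects the digital vector tests detect.
    """
    capability_cost = annual_tester_cost * digital_cost_share
    detected = boards_per_year * digital_defect_ppm / 1e6 * digital_coverage
    if detected == 0:
        return float("inf")  # capability that never detects anything
    return capability_cost / detected


if __name__ == "__main__":
    # Hypothetical: $200,000/yr total tester cost, half attributed to digital
    # capability (per the text), 500,000 boards/yr, 5 ppm digital defects, 90% coverage.
    cost = cost_per_detected_defect(200_000, 0.5, 500_000, 5.0, 0.9)
    print(f"${cost:,.0f} per digital defect detected")
```

The resulting figure is only half of the trade-off; it should be compared with what a digital defect would cost if it escaped to a downstream test step or to the field, which this sketch does not model.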

Moreover, today's fault spectrum calls for flexible testers that are compatible with current multistep test strategies. Open platforms that let engineers design, deploy, and maintain a variety of test techniques as easily as possible are critical for success in the current environment.

Expensive Complexity

Today's electronics assembly processes have almost the same level of automation and complexity as some semiconductor fabs. Test managers quite naturally wish to invest in complex test equipment, claiming the purchase is needed to match the technical requirements of the sophisticated boards being assembled and tested in this complex process.

The downside of complexity is additional cost, not just for equipment but also for overhead. Complex testers demand skilled, experienced, and expensive test-engineering and test-support resources. Typically, this overhead takes the form of very expensive test fixtures, arduous programming processes, and the software resources needed to craft even straightforward test programs.

Processes themselves can grow unnecessarily complex as well, usually by accretion rather than intention. As new technologies such as ISP devices emerge, their requirements must be integrated into an existing process. Each step added to the critical assembly path not only raises production cost but also increases the odds of unplanned production stoppages. Without continual focus on how both the equipment and the process itself can be simplified or consolidated, both cost and risk will increase.
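The risk side of that argument can be sketched with a standard series-reliability model: if every step on the critical path must be running for the line to run, line availability is the product of the individual step availabilities. This Python sketch assumes independent failures and no buffering between steps; the 99% per-step availability and the step counts are hypothetical.

```python
import math


def line_availability(step_availabilities: list[float]) -> float:
    """Availability of a serial line in which every step must be up.

    Assumes step failures are independent and there is no buffering between steps.
    """
    for a in step_availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availabilities must be in [0, 1]")
    return math.prod(step_availabilities)


if __name__ == "__main__":
    separate_isp = [0.99] * 6  # hypothetical line with ISP on its own station
    consolidated = [0.99] * 5  # same line with ISP consolidated onto the in-circuit tester
    print(f"{line_availability(separate_isp):.4f}")  # 0.9415
    print(f"{line_availability(consolidated):.4f}")  # 0.9510
```

Removing one step raises availability in this model only if the consolidated step is as reliable as the step it absorbed; in practice that assumption should be checked against equipment data.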

So What Should Managers Do About Test?

Several manufacturers have identified test as a ripe cost-saving opportunity and have been rewarded with less expensive but still highly efficient test strategies, increased test capacity at lower cost, and improved manufacturing margins. One Tier 1 contract manufacturer has used test cost reduction to differentiate itself from old-school competitors, providing proof of how today's test process reduces both risk and cost for customers.

So how do we get other managers to see board test as an opportunity rather than a downside? A demonstration based on data rather than feeling is one place to start.

Test managers can advocate a well-defined methodology that appeals to management's rational side. Changes in attitude will follow objectively implemented strategies with measurable results. Like going to the gym every morning, we need to follow a defined and fairly fixed routine, conscientiously applied over the long run:

• Understand root causes that affect board test strategies.

• Collect and analyze fault spectrum data.

• Reduce tester platform and process complexity.

• Demonstrate the economic upside to a flexible test strategy.

In fact, by treating test as just another step in the process rather than as an activity with only a downside, managers and test engineers naturally become allies rather than adversaries.

How does this happen? Here are a few of the numerous possibilities:

• Realigning the test strategy based on data rather than supposition or wishful thinking.

• Incorporating new test and inspection technologies such as vision and X-ray when needed to address the fault spectrum.

• Seemingly radical thinking such as replacing expensive big-iron in-circuit testers with low-cost in-circuit testers.

• Reducing complexity of the tester by eliminating unneeded capability and its overhead cost.

• Simplifying the process by consolidating steps such as ISP onto the in-circuit tester.

It all starts with understanding based on data rather than vaguely negative feelings and being open to change. The consequences in terms of improved test and process efficiency at lower cost are results that everyone involved can enjoy.

Reference

1. Low-Cost ICT Evaluation, Jabil Advanced Manufacturing Technology Group, 2005 IEEE International Board Test Workshop

About the Author

John VanNewkirk is president and CEO of CheckSum. He led the acquisition of CheckSum by an investor group, Teton Industries, in 2003. Before entering the test industry, Mr. VanNewkirk conducted a successful turnaround of a steel service center in southern China and served as a management consultant with Bain & Company in Hong Kong and San Francisco. He received a B.S. from Brown University and an M.B.A. from Harvard University. CheckSum, P.O. Box 3279, Arlington, WA 98223, 306-435-5510

FOR MORE INFORMATION

on test strategy analysis models

www.rsleads.com/705ee-211

May 2007