Ego-motion and Localization Interface Design for Automated/Autonomous Driving

For automated driving, which ranges all the way up to the autonomous-driving level, it’s essential that information about the current position of the vehicle is known to the car’s systems. This task may sound trivial, but a closer look reveals its complexity.

A classical navigation system provides the absolute GPS position of the vehicle in a global coordinate system such as the WGS84 geodetic reference system. This is sufficient for navigation applications to determine the current vehicle position in a map database and to perform tasks such as route guidance, or even to compile an electronic horizon that passes information about the course of the road ahead to predictive driver-assistance features.

However, many applications in the context of automated or autonomous driving require additional information about the position and movement of the vehicle body. This information usually involves the fusion of signals from several sensor systems: sensors that perceive the vehicle’s own movement, sensors that sense the vehicle’s surroundings, and localization sensors such as GPS.

The positioning information must then be distributed among many ECUs and software modules so that they can each fulfill their respective functions. However, the modules in an automated or autonomous driving system don’t all require the same kind of ego-motion or positioning information, at the same rate, or in the same quality. Moreover, the type of data needed depends greatly on the actual function to be executed.

For example, automated lane changes and other maneuvers on a road or highway require a lane-accurate global position and, in addition, a smooth trajectory for safe execution. On the other hand, an automated valet-parking application needs the absolute position to sub-decimeter level to locate the correct parking spot. Furthermore, many additional applications such as eCall or vehicle-to-everything (V2X) communications also benefit from accurate positioning data.

A Closer Look at Sensors and Their Capabilities

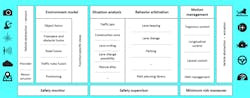

The so-called “sense-plan-act” path is the basis of most modern automated driving architectures. This path consists of the actual sensor systems and their signal fusion, a decision module that follows a system goal, and actuators to execute the decision (see figure).


The sense part of the path uses a wide variety of sensors for localization. These can be roughly grouped into three categories:

1. Localization sensors: These sensors provide information about the absolute position in a global coordinate system. The foremost examples are GNSS (Global Navigation Satellite System) sensors, with GPS being the best known.

2. Exteroceptive sensors: These sensors observe the vehicle’s surroundings and can, for example, measure the vehicle’s movement relative to detected features. Cameras, radar, and LiDAR devices are examples of such sensors.

3. Interoceptive sensors: Since these sensors measure the movement of the vehicle from within it, they don’t require environment information, unlike the other two sensor categories. Gyroscopes, accelerometers, or odometers are examples of such sensors.

Each of these three sensor categories supports the calculation of position and ego-motion information for automated or autonomous driving. This information simplifies many algorithms in the perception and decision modules. However, no single sensor can provide a highly precise safety-rated position at a frequency high enough to support the system's decision or environmental perception unit.

Things are further complicated if a system requires a global position to relate to other vehicles in a global frame, or to match against global map data. A software component that provides a highly accurate, safety-rated position based on multiple sensor inputs is therefore crucial to satisfy the needs of automated or autonomous driving applications.

Global vs. Local Position

The short-term relative movement of the vehicle can be calculated from interoceptive sensors alone. Interoceptive sensor data can therefore also provide a local position that’s independent of the availability of sensor data from the other two sensor categories.
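As an illustration of the previous paragraph, the sketch below propagates a planar local position from interoceptive data only. It assumes a wheel-speed signal and a calibrated gyroscope yaw rate sampled at a fixed interval, and a flat-ground motion model; the function name and sample format are hypothetical.

```python
import math

def dead_reckon(samples, dt):
    """Integrate (speed [m/s], yaw_rate [rad/s]) samples into a planar pose.

    The pose (x [m], y [m], yaw [rad]) is expressed in a local coordinate
    system whose origin and X axis are the vehicle's position and heading
    at start-up, so no GNSS fix is needed.
    """
    x, y, yaw = 0.0, 0.0, 0.0
    for speed, yaw_rate in samples:
        # Evaluate the heading at the middle of the interval (midpoint rule)
        # to reduce the integration error when driving through curves.
        mid_yaw = yaw + 0.5 * yaw_rate * dt
        x += speed * math.cos(mid_yaw) * dt
        y += speed * math.sin(mid_yaw) * dt
        yaw += yaw_rate * dt
    return x, y, yaw

# Example: one second of straight driving at 10 m/s, sampled at 100 Hz
pose = dead_reckon([(10.0, 0.0)] * 100, 0.01)
```

Every step adds sensor noise and residual calibration error to the integrated pose, which is why a position derived this way drifts over time.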

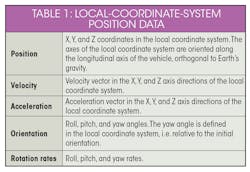

The advantage of local-coordinate-system position data is that it describes smooth motion relatively accurately without the typical correctional jumps of incoming GNSS sensor measurements. Furthermore, the data is immediately available on start-up, with no need to wait for a GNSS fix, for example. In fact, local position output is available even without any GNSS or localization sensor input. Table 1 provides an overview of the position data available in a local coordinate system.

All of the local position data described will typically include estimated accuracy information.

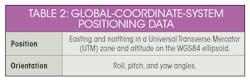

In contrast, some applications demand absolute, global positioning information. In this case, the position is referenced to a global coordinate system. While the local position inherently drifts over time with the integration of sensor measurements, the global position has the advantage that it’s constantly corrected by the localization sensors. Table 2 shows the positioning data typically available in the global coordinate system.
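The interplay between drifting relative motion and absolute corrections can be illustrated with a one-dimensional Kalman filter. This is a minimal sketch under simplifying assumptions (a single axis, Gaussian noise, made-up variances); a real positioning component would estimate the full pose with a multi-dimensional filter, and the names here are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    position: float  # [m] along one global axis (e.g., UTM easting)
    variance: float  # [m^2]

def predict(est: Estimate, delta: float, delta_var: float) -> Estimate:
    """Propagate with an interoceptive displacement; uncertainty grows (drift)."""
    return Estimate(est.position + delta, est.variance + delta_var)

def correct(est: Estimate, gnss: float, gnss_var: float) -> Estimate:
    """Fuse an absolute GNSS measurement; uncertainty shrinks."""
    gain = est.variance / (est.variance + gnss_var)
    return Estimate(est.position + gain * (gnss - est.position),
                    (1.0 - gain) * est.variance)

est = Estimate(position=0.0, variance=1.0)
for _ in range(10):  # ten odometry steps of 1 m, each adding drift variance
    est = predict(est, delta=1.0, delta_var=0.05)
est = correct(est, gnss=10.4, gnss_var=0.5)  # the estimate moves toward the fix
```

The correction step is also where the jumps in the global position come from: the estimate moves abruptly toward each new GNSS measurement.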

Here, too, the global position data described can include estimated accuracy information.

Moreover, the positioning data can be referenced to different coordinate systems that are used for different purposes. Three important concepts are introduced below:

• Global positioning data typically relates to a world coordinate system (WCS). The point of origin can be very different depending on the reference system used. This point lies west of Africa in the Atlantic Ocean for WGS84, whereas each UTM zone has its own point of origin for UTM coordinates. The X axis in UTM points toward the east and the X-Y plane is orthogonal to gravity.

• Local positioning must be based on a self-defined point of origin that can be fixed in relation to the car, e.g., the middle of the rear axle projected onto the road plane along gravity. This so-called vehicle coordinate system (VCS) arranges the X-Y plane orthogonally to gravity, with the X axis pointing along the vehicle’s longitudinal (forward) direction.

• A local coordinate system (LCS) defines its point of origin as a fixed position on the ground but without reference to a WCS. For example, the point of origin is the position at system start.
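For the WCS case, the sketch below converts WGS84 latitude and longitude into UTM coordinates using the transverse Mercator series given in Snyder's Map Projections: A Working Manual (USGS Professional Paper 1395). It is an illustrative sketch, not a reference implementation: it uses the standard 6° zones, ignores the Norway/Svalbard zone exceptions, and the function names are hypothetical. Production code should rely on a tested geodesy library.

```python
import math

# WGS84 ellipsoid and UTM constants
WGS84_A = 6378137.0              # semi-major axis [m]
WGS84_F = 1 / 298.257223563      # flattening
K0 = 0.9996                      # UTM scale factor on the central meridian
E2 = WGS84_F * (2 - WGS84_F)     # first eccentricity squared
EP2 = E2 / (1 - E2)              # second eccentricity squared

def utm_zone(lon_deg: float) -> int:
    """Standard 6-degree zone number (Norway/Svalbard exceptions ignored)."""
    return int((lon_deg + 180.0) // 6.0) % 60 + 1

def wgs84_to_utm(lat_deg: float, lon_deg: float):
    """Return (zone, easting [m], northing [m]) using Snyder's series."""
    zone = utm_zone(lon_deg)
    lon0 = math.radians(6.0 * zone - 183.0)  # central meridian of the zone
    phi, lam = math.radians(lat_deg), math.radians(lon_deg)

    n = WGS84_A / math.sqrt(1 - E2 * math.sin(phi) ** 2)
    t = math.tan(phi) ** 2
    c = EP2 * math.cos(phi) ** 2
    a = math.cos(phi) * (lam - lon0)
    e4, e6 = E2 * E2, E2 ** 3
    # Meridian arc length from the equator to latitude phi
    m = WGS84_A * ((1 - E2 / 4 - 3 * e4 / 64 - 5 * e6 / 256) * phi
                   - (3 * E2 / 8 + 3 * e4 / 32 + 45 * e6 / 1024) * math.sin(2 * phi)
                   + (15 * e4 / 256 + 45 * e6 / 1024) * math.sin(4 * phi)
                   - (35 * e6 / 3072) * math.sin(6 * phi))

    x = K0 * n * (a + (1 - t + c) * a ** 3 / 6
                  + (5 - 18 * t + t * t + 72 * c - 58 * EP2) * a ** 5 / 120)
    y = K0 * (m + n * math.tan(phi) * (
        a ** 2 / 2 + (5 - t + 9 * c + 4 * c * c) * a ** 4 / 24
        + (61 - 58 * t + t * t + 600 * c - 330 * EP2) * a ** 6 / 720))

    easting = x + 500000.0  # false easting keeps eastings positive in the zone
    northing = y + (10000000.0 if lat_deg < 0 else 0.0)  # southern false northing
    return zone, easting, northing
```

The false easting of 500 km places each zone's central meridian at easting 500,000 m, which is why UTM coordinates are only meaningful together with their zone number.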

Angles can be stated as Euler angles, and rotations can be expressed using the intrinsic Z‑Y’‑X′′ Tait-Bryan rotation convention. The coordinate systems in which velocities and accelerations are expressed don’t move with respect to the earth’s surface. For example, the VCS at a certain timestamp doesn’t move with the vehicle; it remains at the position the vehicle occupied at that timestamp. Thus, velocities and accelerations are ultimately given over ground.
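The rotation convention can be made concrete with a short sketch that builds the rotation matrix for the intrinsic Z-Y'-X'' sequence (equivalent to the matrix product Rz(yaw) Ry(pitch) Rx(roll)) and uses its transpose to express a point given in a ground-fixed frame, such as the WCS or an LCS, in the VCS. It assumes right-handed frames with Z pointing up and angles in radians; the function names are illustrative.

```python
import math

def rotation_zyx(yaw, pitch, roll):
    """Rotation matrix for the intrinsic Z-Y'-X'' (yaw, pitch, roll) sequence.

    Equals Rz(yaw) @ Ry(pitch) @ Rx(roll) and maps vectors from the rotated
    (vehicle) frame into the ground-fixed frame. Angles are in radians.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def ground_to_vehicle(point, vehicle_pos, yaw, pitch, roll):
    """Express a ground-fixed point (WCS or LCS) in the VCS of a given pose."""
    r = rotation_zyx(yaw, pitch, roll)
    d = [p - o for p, o in zip(point, vehicle_pos)]
    # R is orthonormal, so its inverse is its transpose: v = R^T d
    return [sum(r[row][col] * d[row] for row in range(3)) for col in range(3)]

# East-north-up frame: a point 2 m north of a vehicle heading north
# (yaw = 90 deg measured from east) lies 2 m straight ahead in the VCS.
ahead = ground_to_vehicle([10.0, 7.0, 0.0], [10.0, 5.0, 0.0], math.pi / 2, 0.0, 0.0)
```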

Applying Localization Principles in Typical Software Environments

A closer look at typical software solutions for environmental models and automated driving shows how the positioning data actually interacts with various components and applications.

The sensor data is first converted into abstracted data structures to make the subsequent layers independent of the individual sensor type. This sensor data is then processed by different modules in a subsequent “sensor data fusion” layer. This layer consists of, for example, an object hypothesis fusion module, a grid fusion module, or a highly accurate positioning module.

The results of the sensor data fusion process can then be shown in function-specific views. These views summarize the fused sensor data to provide a specialized view for the functions that follow. For example, an emergency brake function needs the object hypothesis immediately after initial detection (e.g., to pretension the brakes), whereas adaptive cruise control may require a more stable object hypothesis, since very rapid reaction isn’t so much of an issue here. Another advantage of different views is the ability to adapt the amount of data to different system architectures in which the communication buses may have different performance.

The central part of typical software architectures provides the automated driving functions. It splits the overall system into many smaller functions, each for a specialized situation. This enables developers to concentrate on a specific task. Moreover, the system can be enhanced over time by adding more and more functions to the original ones.

Because multiple functions may request control of the vehicle, access to the actuators must be arbitrated; this is the task of situative behavior arbitration. It collects the control demands from all of the functions and decides which behavior, or which set of behaviors, may access the actuators. The resulting commands are then executed by the motion management or HMI management layer components, which apply vehicle-independent control and optimization algorithms. Finally, the abstraction layer converts these vehicle-independent commands into vehicle- or actuator-specific commands (see figure).
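A deliberately simple arbitration policy can be sketched as follows. It assumes that each function submits a request with a fixed priority and that exactly one request is granted the actuators; the architecture described above also allows a set of behaviors to be granted, and real arbitration is usually situation-dependent. The Request type and the priority scheme are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Request:
    function: str    # name of the requesting driving function
    priority: int    # hypothetical fixed priority; higher wins
    accel: float     # requested longitudinal acceleration [m/s^2]

def arbitrate(requests: List[Request]) -> Optional[Request]:
    """Grant actuator access to a single request.

    The highest priority wins; among equal priorities, the more conservative
    request (lower acceleration) is chosen. Returns None if nothing is requested.
    """
    if not requests:
        return None
    return max(requests, key=lambda r: (r.priority, -r.accel))

# Example: emergency braking overrides adaptive cruise control
winner = arbitrate([Request("acc", 1, 0.5), Request("emergency_brake", 10, -8.0)])
```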

Sensor Calibration

Localization data is typically provided by a dedicated positioning component in the software architecture. Sensor calibration is one of the first steps executed by such a component. This operation is necessary because although interoceptive sensors respond to specific physical quantities, their response usually isn’t reported as a value in physical units.

Mathematical transformations are required before the data can be used to estimate the position. The sensors being used are calibrated for this purpose. Most of the sensors have two significant parameters that describe the relationship between the sensor measurement and its value in the corresponding physical units.

Scale describes the change in the sensor measurement when the measured physical value changes by one unit.

Bias describes the sensor measurement when the measured physical quantity is zero.
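Taken together, the two definitions describe the linear sensor model raw = scale * physical + bias. The sketch below inverts this model and estimates both parameters from two readings at known physical values (a two-point calibration). It assumes a strictly linear sensor without cross-sensitivity; the function names and the gyro numbers are made up for illustration.

```python
def to_physical(raw: float, scale: float, bias: float) -> float:
    """Invert the linear sensor model raw = scale * physical + bias."""
    return (raw - bias) / scale

def two_point_calibration(raw_a, phys_a, raw_b, phys_b):
    """Estimate (scale, bias) from two readings at known physical values."""
    scale = (raw_b - raw_a) / (phys_b - phys_a)  # counts per physical unit
    bias = raw_a - scale * phys_a                # reading at physical zero
    return scale, bias

# Hypothetical gyro: 0 deg/s reads 512 counts, 100 deg/s reads 1312 counts
scale, bias = two_point_calibration(512, 0.0, 1312, 100.0)
rate_dps = to_physical(912, scale, bias)
```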

These parameters don’t remain constant, either in the short term (e.g., they’re often temperature-dependent) or over the life cycle of the sensor. In addition, the integrating nature of the fusion process means that small deviations in these parameters lead to large errors in the fused output. For high-accuracy applications, therefore, all sensor calibration parameters must be estimated carefully and updated continuously while the system is running.
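A worked number shows why continuous calibration matters: a yaw-rate bias error of only 0.1 deg/s, integrated over 60 s, yields a heading error of 0.1 deg/s times 60 s = 6 degrees. The sketch below pairs that calculation with one common way of tracking a gyroscope bias online, namely averaging the measured rate while the vehicle is known to be stationary. The standstill flag (for example, derived from wheel-speed sensors) and the smoothing factor are assumptions; which estimation method a given positioning component uses is not specified here.

```python
def update_bias(bias, yaw_rate, standstill, alpha=0.01):
    """Track the gyro bias [deg/s] while the vehicle is stationary.

    At standstill the true yaw rate is zero, so the measured rate is bias
    plus noise; an exponential moving average follows slow changes such as
    temperature drift. While the vehicle moves, the estimate is held.
    """
    if standstill:
        return (1.0 - alpha) * bias + alpha * yaw_rate
    return bias

def heading_error_deg(bias_error_dps, duration_s):
    """Heading error [deg] from integrating a constant, uncompensated bias."""
    return bias_error_dps * duration_s

# Usage: refine the estimate with (hypothetical) standstill samples, then
# subtract it from the measured yaw rate before integration.
bias = 0.0
for measured in [0.21, 0.19, 0.20, 0.22, 0.18]:
    bias = update_bias(bias, measured, standstill=True)
```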

In the positioning component, the raw sensor measurements are input to a sensor calibration component. This estimates the sensor calibration parameters, and outputs calibrated sensor measurements. These measurements are fed into separate local and global position estimation components.

Accuracy Evaluation

The accuracy of the estimated position output is one important factor that ensures the quality and reliability of a positioning component. Accuracy in this context means the observed total deviation of the estimated position output from the true position.

There are many sources of deviations. Some are systematic, while others are probabilistic in nature. Each sensor exhibits a set of impairments regarding measurement accuracy, which range from measurement noise to a non-ideal scale, biased values, cross-sensitivity, nonlinear scaling effects, and many others. Measurements (especially GNSS input) can be affected by error conditions that are hard to detect, such as multipath effects. Sampling rates and delays in communication or measurement are also crucial factors in the positioning accuracy.

Besides the sensor measurements, the fusion itself introduces deviations into the output. The motion model used in the fusion is always an idealization, so it inevitably differs from the real movement of the vehicle. These differences cause maneuver-dependent perturbations in the accuracy of the fused output.

Analytical approaches to predict the expected accuracy of the positioning component aren’t meaningful because they require many assumptions to be made about the sensors and the upcoming driving maneuvers. Therefore, it turns out to be a better strategy to determine the accuracy empirically. For example, a reference system with high-grade sensors can be used to provide the true position with good accuracy.

The estimated position can then be compared to the corresponding reference and the deviations can be aggregated into several statistical views. However, the accuracy of the full system can’t be expressed by a single number. Other aspects besides the absolute position error must be evaluated. These include the yaw angle, vehicle movement, and longitudinal or lateral errors. Statistical views include the results for specific driving maneuvers such as braking or driving through curves, as some applications are concerned with these special situations.
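The comparison against a reference system can be sketched as follows, assuming the estimated and reference trajectories have already been time-aligned and are given as planar positions plus the reference heading. The error vector is rotated into the reference vehicle frame to obtain longitudinal and lateral components, which are then aggregated into a few example statistics (mean, RMS, and a nearest-rank 95th percentile of the absolute error). The function names and the choice of statistics are illustrative.

```python
import math
import statistics

def along_cross_errors(est_xy, ref_xy, ref_yaw):
    """Split the position error into longitudinal and lateral components.

    The error vector is rotated into the reference vehicle frame, so
    'longitudinal' is along the reference heading and 'lateral' across it.
    """
    dx, dy = est_xy[0] - ref_xy[0], est_xy[1] - ref_xy[1]
    c, s = math.cos(ref_yaw), math.sin(ref_yaw)
    return c * dx + s * dy, -s * dx + c * dy

def summarize(errors):
    """Aggregate a non-empty list of signed errors into statistical views."""
    abs_err = sorted(abs(e) for e in errors)
    rank = min(len(abs_err), math.ceil(0.95 * len(abs_err)))  # nearest rank
    return {
        "mean": statistics.fmean(errors),
        "rms": math.sqrt(statistics.fmean(e * e for e in errors)),
        "p95_abs": abs_err[rank - 1],
    }
```

Running summarize separately on the samples recorded during braking or cornering yields the maneuver-specific views.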

Practical Use Cases for Positioning, Ego-motion Description Interfaces and Data Structures

Because the authors of this article work on the Elektrobit EB robinos software product line daily, it serves here as a real-life example of how the described concepts can be implemented in interfaces and data structures.

In past years, the industry has encountered various ego-motion description and positioning interfaces. Four localization and ego-motion interfaces that support all automated driving-related use cases can be derived from this experience. These interfaces provide an ego-motion description, relative local positioning, and global positioning at a high rate that satisfies different quality and safety levels. They’re based on interoceptive, exteroceptive, and global positioning sensors.

The data structure to describe a global position comprises the following parameters: the UTM coordinate on the east-west axis, the UTM coordinate on the north-south axis, the altitude, and the rotation of the vehicle about the X, Y, and Z axes (roll, pitch, and yaw), with the X-Y plane orthogonal to gravity. The variances of the X, Y, and Z positions as well as of roll, pitch, and yaw are also included.
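As a hedged illustration, the global position structure might be written as a Python dataclass. Field names and units are assumptions; the timestamp and UTM zone fields are not part of the parameter list above and are added because consumers need to know which zone the coordinates belong to and which time they refer to. The helper computes the distance root-mean-square (DRMS) horizontal uncertainty, sqrt(var_x + var_y).

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class GlobalPosition:
    """Global pose in UTM coordinates; names and units are illustrative."""
    timestamp_us: int      # assumption: microsecond timestamp
    utm_zone: int          # assumption: UTM coordinates need their zone
    easting_m: float       # UTM coordinate on the east-west axis
    northing_m: float      # UTM coordinate on the north-south axis
    altitude_m: float
    roll_rad: float        # rotation about X
    pitch_rad: float       # rotation about Y
    yaw_rad: float         # rotation about Z (gravity-aligned)
    var_x_m2: float        # variances of the X, Y, and Z positions
    var_y_m2: float
    var_z_m2: float
    var_roll_rad2: float   # variances of roll, pitch, and yaw
    var_pitch_rad2: float
    var_yaw_rad2: float

    def horizontal_sigma_m(self) -> float:
        """Distance root-mean-square (DRMS) horizontal uncertainty."""
        return math.sqrt(self.var_x_m2 + self.var_y_m2)
```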

The data structure to describe a local position contains the following parameters: the X and Y positions relative to the vehicle start point, the Z position relative to the driving surface, the rotations about the X and Y axes relative to the driving surface, and the rotation about the Z axis relative to the initial driving direction. The structure also includes the variances of the X, Y, and Z positions as well as of roll, pitch, and yaw.
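The local position structure can be sketched the same way. Again, the field names, units, and the timestamp field are assumptions, and the helper method is a hypothetical convenience rather than part of the described interface.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class LocalPosition:
    """Pose in a local coordinate system (LCS); names and units are illustrative."""
    timestamp_us: int      # assumption: microsecond timestamp
    x_m: float             # X position relative to the vehicle start point
    y_m: float             # Y position relative to the vehicle start point
    z_m: float             # Z position relative to the driving surface
    roll_rad: float        # rotation about X, relative to the driving surface
    pitch_rad: float       # rotation about Y, relative to the driving surface
    yaw_rad: float         # rotation about Z, relative to the initial driving direction
    var_x_m2: float        # variances of the X, Y, and Z positions
    var_y_m2: float
    var_z_m2: float
    var_roll_rad2: float   # variances of roll, pitch, and yaw
    var_pitch_rad2: float
    var_yaw_rad2: float

    def distance_from_start_m(self) -> float:
        """Straight-line horizontal distance from the start point."""
        return math.hypot(self.x_m, self.y_m)
```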

The delta position data structure allows the derivation of the current position relative to the local coordinates at the start time. It includes the deltas for the X, Y, and Z axes in relation to the VCS of the start timestamp as well as the delta rotation around the Z axis with reference to gravity. This structure again also includes the variances of the deltas for the X, Y, and Z axes as well as of the yaw value.
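The following sketch shows how a delta position could be derived from two planar local poses: the displacement is rotated into the VCS frozen at the start timestamp, and the heading change is wrapped to the range [-pi, pi]. It assumes planar motion and omits the Z delta and the variances for brevity; all names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Pose2D:
    x: float    # [m] in a local coordinate system (LCS)
    y: float    # [m]
    yaw: float  # [rad] about the gravity-aligned Z axis

@dataclass(frozen=True)
class DeltaPosition:
    dx: float    # [m] along the VCS X axis at the start timestamp
    dy: float    # [m] along the VCS Y axis at the start timestamp
    dyaw: float  # [rad] heading change, wrapped to [-pi, pi]

def delta_between(start: Pose2D, end: Pose2D) -> DeltaPosition:
    """Express the motion from start to end in the VCS frozen at start."""
    ddx, ddy = end.x - start.x, end.y - start.y
    c, s = math.cos(start.yaw), math.sin(start.yaw)
    diff = end.yaw - start.yaw
    dyaw = math.atan2(math.sin(diff), math.cos(diff))  # wrap the angle
    return DeltaPosition(c * ddx + s * ddy, -s * ddx + c * ddy, dyaw)
```

Because the result depends only on the difference of two poses, it does not depend on where the LCS origin lies or on GNSS corrections applied to the global position.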

Finally, the vehicle dynamics data structure comprises the speed in the plane orthogonal to gravity and the rotation rate, the variances of the speed and rotation rate, the linear and rotational accelerations, and the variances of these accelerations.
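A sketch of the vehicle dynamics structure, simplified to planar motion (rotation rate and rotational acceleration about the Z axis only), might look as follows; field names, units, and the longitudinal-only acceleration are assumptions. The helper shows one hypothetical way a consumer could use the fields: in steady-state cornering, centripetal acceleration equals speed times yaw rate, which can serve as a plausibility check against a measured lateral acceleration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VehicleDynamics:
    """Planar vehicle dynamics; names, units, and yaw-only rotation are assumptions."""
    speed_mps: float        # speed in the plane orthogonal to gravity
    yaw_rate_rps: float     # rotation rate about the Z axis
    var_speed: float        # [m^2/s^2]
    var_yaw_rate: float     # [rad^2/s^2]
    accel_mps2: float       # assumption: longitudinal acceleration only
    yaw_accel_rps2: float   # rotational acceleration about the Z axis
    var_accel: float        # [m^2/s^4]
    var_yaw_accel: float    # [rad^2/s^4]

    def centripetal_accel_mps2(self) -> float:
        """Expected lateral acceleration for steady-state cornering (v * omega)."""
        return self.speed_mps * self.yaw_rate_rps
```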

These four data structures are usually combined into a single large structure, which leads to high data-transfer rates from one component to another. Because the values are often derived from different sensors, not all data fields can be updated at the same time, so the structure may contain outdated data or a mixture of predicted and measured data. Quality information describing the state of each field can of course also be included in the data structure, but that makes the structure even larger.

The four data structures described can be used by different components:

The global position is used every time the vehicle needs to relate to another entity in a WCS. The location of the vehicle on a map is the most obvious case, but a V2X use case also needs the global position.

The local position is aimed at components that need short-term track information but aren’t concerned with the actual position relative to a global coordinate system. The determination of a relative trajectory is a typical use case.

Trajectory controllers are often only concerned with the delta position. They must correct the actuating variable based on the error accumulated since the last controller step. The delta position can provide a very smooth and highly reliable measurement for such use cases.

Vehicle dynamics don’t depend on a global coordinate system, as they only describe relative movement. Because this information is extracted from the position information into a separate data structure, each component can decide whether it needs it for its function.

Summary

Typical software platforms for automated driving require global and local position inputs, and they must supply them in the form of adapted data structures to different types of applications. Concepts such as sensor calibration and accuracy evaluation ensure the reliability of positioning data. Further, a particularly smooth local output trajectory can be provided by separating sensor calibration from position estimation. Ideally, the positioning accuracy can also be measured and monitored empirically by comparing the generated output with a highly accurate reference positioning system.

Sebastian Ohl is Head of the Technology Center for Environmental Perception and Henning Sahlbach is System Architect at Elektrobit.

About the Authors

Sebastian Ohl

Head of the Technology Center for Environmental Perception

Sebastian Ohl is Head of the Technology Center for Environmental Perception in the product group Highly Automated Driving at Elektrobit (EB). He holds a PhD in electrical engineering from TU Braunschweig, Germany, in the field of automotive sensor data fusion. Sebastian joined EB in 2012 and started working on the product development of software components in the field of autonomous driving at EB in 2014.

Henning Sahlbach

System Architect

Henning Sahlbach is the System Architect of the EB robinos product family, which is developed in the product group Highly Automated Driving at Elektrobit (EB). He has a degree in computer science from TU Braunschweig, Germany, where he also worked as a researcher in the field of embedded-systems design for autonomous driving for several years. Henning joined EB in 2014 and started working on the product development of software components in the field of autonomous driving in 2018.