The "System Optimizing Compiler" Revs Up Vehicle-Electronics Design

Thanks to Moore’s law, automotive electrical systems have undergone rapid technological growth. Modern automobiles have evolved from simple engine electrical systems coupled with an AM radio to vehicles containing several advanced electronic systems replete with functionality, from engine control, advanced driver-assistance systems (ADAS), and traction and stability control to infotainment and, in some cutting-edge applications, autonomous capability.

The rise in deployment of electronic systems within automobiles brings with it several challenges that designers must address:

- Performance: Real-time, low-latency, and highly deterministic performance is required to implement many vehicle features, such as ADAS, engine control units (ECUs), and traction and stability control.

- Security: Automotive electronic systems implement critical functionality where a malfunction could result in injury or loss of life. Systems must therefore implement information assurance and anti-tamper techniques to prevent unauthorized modifications.

- Safety: Must conform to the Automotive Safety Integrity Levels (ASILs) defined by ISO 26262.

- Interfacing: Must be able to interface with a wide range of sensors, drives, and other actuators.

- Power efficiency: Must operate efficiently within a constrained power budget.

- Software defined: Must be flexible enough to address the differing standards and conditions in a range of markets.

To help overcome these challenges, developers of automotive electronic systems are deploying heterogeneous system-on-chip (SoC) devices. Heterogeneous devices combine a processing unit, usually multicore, with one or more co-processors such as a GPU, a DSP, or programmable logic.

Combining a processing unit with programmable logic forms a tightly integrated system, enabling the inherent parallel nature of the programmable logic to be leveraged. This allows the programmable logic (PL) to be used to implement high-performance algorithms and interfacing, while the processing system implements higher-level decision making, communication, and system-management functions. When combined, this enables the creation of a more responsive, deterministic, and power-efficient solution thanks to the ability to offload processing into the programmable logic.

When it comes to interfacing, the heterogeneous SoC can support a range of industry-standard interfaces, which can be implemented via the processing system or the programmable logic. Crucially, legacy and bespoke interfaces can be implemented in the programmable logic thanks to the flexibility of its IO structures, providing any-to-any connectivity. This does, however, require the addition of an external PHY to implement the physical layer of the protocol.

Some heterogeneous SoCs provide several device-level and system-level security features that are straightforward to use. These devices can encrypt and authenticate the boot and configuration process. If the processor cores are based on the ARM architecture, TrustZone can be used to secure the software environment. With TrustZone and a hypervisor, the development team can create orthogonal worlds that limit software access to the underlying hardware. Additional design choices, such as functional isolation, can be implemented to further strengthen the security solution, depending on the requirements.

The traditional development flow of a heterogeneous SoC segments the design between the processor system and the programmable logic. Such an approach has in the past required two separate development teams, which increased the non-recurring engineering cost, development time, and technical risk. This approach also fixed design functions as being in the processor cores or the programmable logic, making later optimizations difficult.

What was required was a development tool that enables software-defined development of the entire device, with the ability to move functions from the processor cores to the programmable logic as required, without the need to be an HDL specialist.

System Optimizing Compiler

This is where a system optimizing compiler comes in. A system optimizing compiler enables software definition of the entire system’s behavior using high-level languages such as C, C++, or OpenCL. Functional partitioning between the processor system and programmable logic is then performed by the compiler, which can move a function between running in the processor system and being implemented in the programmable logic (Fig. 1).

1. Selecting functions for acceleration using a system optimizing compiler.

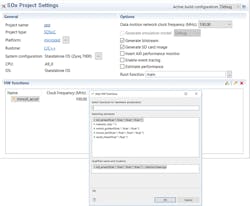

Bottlenecks can be identified by using the built-in timers within the processing system to time the execution of each function. The slowest functions are then candidates for acceleration into the programmable logic using the system optimizing compiler (Fig. 2).
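As a rough illustration of this timing step, the following sketch times individual calls and ranks them slowest-first. It is not taken from any particular tool: `Timing`, `time_call`, and `rank_bottlenecks` are hypothetical names, and `std::chrono::steady_clock` stands in for the hardware timers that would be read on a real SoC.

```cpp
#include <algorithm>
#include <chrono>
#include <functional>
#include <string>
#include <vector>

// One profiling record: a label and the measured wall-clock time.
struct Timing {
    std::string name;
    double microseconds;
};

// Time a single call of `fn` with a monotonic clock.
// Assumption: on the target device a hardware timer would replace std::chrono.
inline Timing time_call(const std::string& name, const std::function<void()>& fn) {
    const auto start = std::chrono::steady_clock::now();
    fn();
    const auto stop = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::micro> elapsed = stop - start;
    return {name, elapsed.count()};
}

// Sort records slowest-first so acceleration candidates appear at the top.
inline std::vector<Timing> rank_bottlenecks(std::vector<Timing> timings) {
    std::sort(timings.begin(), timings.end(),
              [](const Timing& a, const Timing& b) {
                  return a.microseconds > b.microseconds;
              });
    return timings;
}
```

Each function of interest would be wrapped in `time_call`, the results collected in a vector, and the first few entries returned by `rank_bottlenecks` treated as the initial candidates for acceleration.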

This movement between the processing system and programmable logic is enabled by two components of the compiler: high-level synthesis (HLS), which converts C, C++, and OpenCL into a Verilog or VHDL description, and a software-defined connectivity framework. The framework connects the HLS results to the software application, enabling the design team to move functions between the processor and the programmable logic with the click of a button.
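To give a feel for the kind of source code HLS works from, here is a minimal sketch of a small FIR filter written in a style that HLS tools generally handle well: fixed-size arrays, compile-time loop bounds, and no dynamic memory. The function name `fir_block` and the sizes `kTaps` and `kSamples` are assumptions made for illustration, and tool-specific directives (loop pipelining, interface selection) are deliberately left out because they vary between vendors.

```cpp
#include <cstddef>
#include <cstdint>

constexpr std::size_t kTaps = 4;     // hypothetical filter length
constexpr std::size_t kSamples = 8;  // hypothetical block size

// Direct-form FIR over one block of samples.
// Samples before the start of the block are treated as zero.
// Both loops have bounds known at compile time, which lets a synthesis
// tool unroll or pipeline them; the same code also runs on the processor.
void fir_block(const std::int16_t in[kSamples],
               const std::int16_t coeff[kTaps],
               std::int32_t out[kSamples]) {
    for (std::size_t n = 0; n < kSamples; ++n) {
        std::int32_t acc = 0;
        for (std::size_t k = 0; k < kTaps; ++k) {
            if (n >= k) {
                acc += static_cast<std::int32_t>(coeff[k]) * in[n - k];
            }
        }
        out[n] = acc;
    }
}
```

Because the function is plain C++, it can first be run and verified in software, then handed to the synthesis flow unchanged if profiling shows it to be a bottleneck.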

2. Resource and performance estimation using a system optimizing compiler.

Moving functionality into the programmable logic also typically brings a significant increase in performance, because the logic can process data in parallel. Acceleration into the PL also provides greater determinism and lower latency than a CPU/GPU solution, which is very important for applications such as ECUs and ADAS.
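How much the whole application gains depends on how much of its run time sits in the accelerated functions. One common back-of-the-envelope estimate is Amdahl's law, sketched below; this is a general rule of thumb rather than the estimation method of any specific compiler, and `estimated_speedup` is a hypothetical helper name.

```cpp
#include <cassert>

// Amdahl's law: overall speedup when `accelerated_fraction` of the original
// run time (0.0 to 1.0) is made `kernel_speedup` times faster.
// Data-transfer overhead between processor and logic is ignored here,
// so the result is an optimistic upper bound.
inline double estimated_speedup(double accelerated_fraction, double kernel_speedup) {
    assert(accelerated_fraction >= 0.0 && accelerated_fraction <= 1.0);
    assert(kernel_speedup > 0.0);
    return 1.0 / ((1.0 - accelerated_fraction) +
                  accelerated_fraction / kernel_speedup);
}
```

With illustrative numbers, accelerating functions that account for 80% of the run time by a factor of 10 gives an overall estimate of roughly 3.6x, which is why accurate profiling of the bottleneck functions matters.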

Library Support

Many automotive applications are developed using industry-standard open-source libraries, such as OpenCV or Caffe in ADAS, or standard math libraries within an ECU. To speed development of these applications, a system optimizing compiler needs to support several HLS libraries that developers can use in their applications.

A system optimizing compiler should support several key libraries, including:

- OpenCV: Ability to accelerate computer vision functions.

- Caffe: Ability to accelerate machine-learning inference engines.

- Math Library: Provides synthesizable implementations of the standard math libraries.

- IP Library: Provides IP libraries for implementing FFT, FIR, and Shift Register LUT functions.

- Linear Algebra Library: Provides a library of commonly used linear algebra functions.

- Arbitrary Precision Data Types Library: Provides support for non-power-of-2, arbitrary length data using signed and unsigned integers. This library allows developers to use the FPGA’s resources more efficiently.

Supplying these libraries gives the development team a considerable boost since they don’t need to develop similar functions.
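To show what an arbitrary-precision type buys, here is a minimal sketch of a 1-to-32-bit unsigned integer that wraps at its declared width. `UIntN` is a hypothetical stand-in written for this article, not the type shipped with any HLS library; a real library type would also let the tool size the hardware to exactly the declared number of bits.

```cpp
#include <cstdint>

// Hypothetical stand-in for an HLS arbitrary-precision unsigned integer.
// Values are kept to `Bits` bits, so arithmetic wraps modulo 2^Bits.
template <unsigned Bits>
class UIntN {
    static_assert(Bits >= 1 && Bits <= 32, "this sketch supports 1 to 32 bits");

public:
    constexpr explicit UIntN(std::uint64_t v = 0)
        : value_(static_cast<std::uint32_t>(v & kMask)) {}

    constexpr std::uint32_t value() const { return value_; }

    // Addition is performed in 64 bits, then truncated by the constructor.
    friend constexpr UIntN operator+(UIntN a, UIntN b) {
        return UIntN(static_cast<std::uint64_t>(a.value_) + b.value_);
    }

private:
    static constexpr std::uint64_t kMask = (std::uint64_t{1} << Bits) - 1;
    std::uint32_t value_;
};
```

A 12-bit sensor sample, for example, could be held in `UIntN<12>` rather than a 16-bit or 32-bit integer; in programmable logic the narrower width translates into fewer registers and smaller arithmetic units.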

Real-World Example

One key element of many automotive applications is securing data to prevent unauthorized modifications, which may result in unsafe operation. One common algorithm used to secure both stored and transmitted data is the Advanced Encryption Standard (AES). AES is a good example of an algorithm that’s described at a high level, but best implemented in programmable-logic fabric.
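One reason AES suits programmable logic is that its round steps are small, fixed-size byte operations that can all run in parallel. The sketch below shows just one of those steps, MixColumns on a single 4-byte column, using the standard GF(2^8) arithmetic defined for AES. It is an illustration only, not a complete or hardened cipher, and the function names `xtime` and `mix_column` are chosen for this example.

```cpp
#include <cstdint>

// Multiply by 2 in GF(2^8), reducing with the AES polynomial (0x1B).
inline std::uint8_t xtime(std::uint8_t a) {
    return static_cast<std::uint8_t>((a << 1) ^ ((a & 0x80) ? 0x1B : 0x00));
}

// AES MixColumns applied in place to one 4-byte column.
// Multiplication by 3 is written as xtime(x) ^ x.
inline void mix_column(std::uint8_t col[4]) {
    const std::uint8_t a0 = col[0], a1 = col[1], a2 = col[2], a3 = col[3];
    col[0] = static_cast<std::uint8_t>(xtime(a0) ^ (xtime(a1) ^ a1) ^ a2 ^ a3);
    col[1] = static_cast<std::uint8_t>(a0 ^ xtime(a1) ^ (xtime(a2) ^ a2) ^ a3);
    col[2] = static_cast<std::uint8_t>(a0 ^ a1 ^ xtime(a2) ^ (xtime(a3) ^ a3));
    col[3] = static_cast<std::uint8_t>((xtime(a0) ^ a0) ^ a1 ^ a2 ^ xtime(a3));
}
```

For the widely quoted test column `db 13 53 45`, `mix_column` produces `8e 4d a1 bc`. Each output byte depends only on XORs and shifts of the four input bytes, so in logic all four columns of the AES state can be transformed at once, whereas a processor works through them sequentially.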

3. AES acceleration results for different operating systems when using a system optimizing compiler.

To demonstrate the benefit of using a system optimizing compiler, a simple AES-256 application was developed and targeted at three commonly used operating systems. The example was first executed entirely in the processor system and then with functions accelerated into the programmable logic (Fig. 3).

Conclusion

Heterogeneous SoCs are able to address the challenges faced by automotive electronic system designers. System optimizing compilers enable the development of these devices using a high-level language. The functional partitioning between the processor system and the programmable logic can be optimized once the application functionality is developed and prototyped using the processor. This reduces the development time and produces a more secure, responsive, and power-efficient solution.

About the Author

Giles Peckham

Regional Marketing Director

Giles Peckham has worked in the field of IC design for more than 30 years, covering the whole spectrum from full custom SoC designs through standard cells, gate arrays, and all programmable FPGAs and SoCs. He has held various positions in design and sales & marketing at Philips Semiconductors and European Silicon Structures before joining Xilinx, where he now has global responsibility as Director of Regional Marketing. His experience in both the technical and commercial sides of ASICs, ASSPs, and programmable logic has given him a clear perspective on this market.

Adam Taylor

Adiuvo Engineering and Training

Adam Taylor is an expert in design and development of embedded systems and FPGAs for several end applications. Throughout his career, Adam has used FPGAs to implement a wide variety of solutions from radar to safety-critical control systems, with interesting stops in image processing and cryptography along the way.

Most recently, Adam was the Chief Engineer of a space imaging company, where he was responsible for several game-changing projects. He is the author of numerous articles on electronic design and FPGA design, including over 200 blogs on how to use the Zynq for Xilinx. Adam is a Chartered Engineer and Fellow of the Institution of Engineering and Technology, and is the owner of Adiuvo Engineering and Training, an engineering and consultancy firm.