Experimenting with the latest system-on-chip (SoC) solutions is easier these days with platforms like the BeagleBone, Raspberry Pi, and Arduino. I especially like the new BeagleBone AI (Fig. 1), which is a great, all-around platform for control, computation, and machine learning (ML) at the edge.

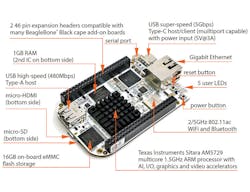

The board is built around Texas Instruments’ (TI) AM5729 SoC, which is augmented with 16 GB of eMMC flash, 1 GB of RAM, and 2.4/5-GHz 802.11ac Wi-Fi as well as Bluetooth support. A Gigabit Ethernet jack provides wired connectivity, and display connectivity comes via a micro HDMI connector. A single USB high-speed port is handy for keyboards and cameras. Power can be supplied via the USB Type-C connection, which also provides a 5-Gb/s USB interface for debugging. Dual 46-pin headers offer expansion support.

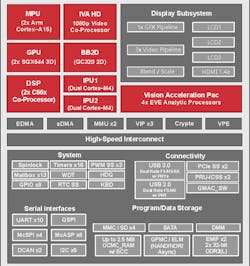

The heart of the BeagleBone AI, which is available from Newark, is the AM5729 (Fig. 2). The SoC doubles up on almost everything, including dual 32-bit Arm Cortex-A15 cores, dual image-processing units (IPUs) that each have dual Cortex-M4 cores, two dual-core programmable real-time unit (PRU) subsystems, dual C66x DSP cores, dual PowerVR SGX544 3D GPUs, dual memory-management units (MMUs), and four embedded vision engines (EVEs). The SoC has one USB 2.0 port and one USB 3.0 port, each with an integrated PHY, and both are dual-role. There’s also a plethora of serial and parallel ports.

That’s a bit of double talk, but almost all of these cores can provide some form of ML support, although ML work will usually target subsystems like the EVE, IPU, and DSP cores. The biggest challenge with the BeagleBone AI is deciding how to split up the application and which subsystems mesh best with control and ML chores. For example, the EVE subsystems can handle high-performance ML chores, but real-time ML support may fit better on the IPUs.
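Before deciding where code should run, it helps to see which of these cores Linux actually manages. On TI's Linux kernels for the AM57x family, the IPU, DSP, and (with the PRU remoteproc driver) PRU cores are typically handled by the kernel's remoteproc framework, which loads firmware onto each core and exposes it under `/sys/class/remoteproc`. The following is a minimal Python sketch, assuming such a kernel and the standard `name` and `state` sysfs attributes; the function names are hypothetical, the exact entries and their numbering depend on the kernel and device-tree configuration, and writing to `state` requires root privileges plus a matching firmware image in `/lib/firmware`.

```python
from pathlib import Path

# Assumed sysfs location of the Linux remoteproc class. On AM57x kernels the
# IPU, DSP, and PRU cores usually show up here as remoteproc0, remoteproc1, ...
REMOTEPROC_ROOT = Path("/sys/class/remoteproc")
PREFIX = "remoteproc"


def read_attr(entry: Path, attr: str) -> str:
    """Return a sysfs attribute as a stripped string, or '?' if it can't be read."""
    try:
        return (entry / attr).read_text().strip()
    except OSError:
        return "?"


def _index(entry: Path) -> int:
    """Numeric suffix of 'remoteprocN', so remoteproc10 sorts after remoteproc2."""
    suffix = entry.name[len(PREFIX):]
    return int(suffix) if suffix.isdigit() else -1


def list_remote_cores(root: Path = REMOTEPROC_ROOT) -> list[tuple[str, str, str]]:
    """List (entry, name, state) for every remoteproc instance under root."""
    if not root.is_dir():
        return []
    entries = [p for p in root.iterdir() if p.name.startswith(PREFIX)]
    entries.sort(key=_index)
    return [(p.name, read_attr(p, "name"), read_attr(p, "state")) for p in entries]


def set_state(root: Path, entry: str, command: str) -> None:
    """Write 'start' or 'stop' to a core's state file (needs root on a real board)."""
    if command not in ("start", "stop"):
        raise ValueError("command must be 'start' or 'stop'")
    (root / entry / "state").write_text(command)


if __name__ == "__main__":
    for entry, name, state in list_remote_cores():
        print(f"{entry}: {name} ({state})")
```

Run as a script on the board, it prints one line per managed core with its device name and state (for example, offline or running). Taking the sysfs root as a parameter keeps the helpers usable against a test directory off-target.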

BeagleBone AI runs Linux and can support a graphical desktop. However, the default configuration is command-line only, plus a web-based interface built around Amazon’s AWS Cloud9 IDE. Cloud9 can also tie into AWS, allowing work to be done in the cloud, including development of cloud-based applications, in addition to local applications that run on the BeagleBone AI. Thus, a single interface can span development platforms as well as target platforms.

The BeagleBone AI relies on TI Deep Learning (TIDL) software for ML development. TIDL targets most of TI’s processor platforms, including those with hardware acceleration like the AM5729. One advantage is that TIDL opens up opportunities to target other platforms while learning one software framework. On the flip side, there’s less BeagleBone AI-specific documentation for TIDL, so step-by-step introductions are more limited.

It took me a little longer to get into the ML support with the BeagleBone AI due to that limitation, but now I have a better appreciation and understanding of TIDL. It also means that I can take advantage of what TI is doing, as well as target any of its platforms rather than having an experience that’s applicable only to the BeagleBone AI. Likewise, the Cloud9 support is something that’s useful in general and not specific to the board.

As impressive as the ML support is, the display and real-time capabilities make the SoC stand out. The IPU, PRU, and DSP subsystems are top-notch, allowing a single platform to handle real-time chores in addition to application code and ML tasks. As a learning platform, it’s ideal because any aspect of embedded development can be done on this hardware. I know it’s taken me a while to investigate just some of the features of the BeagleBone AI. It looks to be a great platform for a drone or robot.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send it to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do some PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.