Now and Then: Processors Have Come a Long Way

This article is part of the Now and Then series and Electronic Design's 70th Anniversary series.

What you'll learn:

- The drastic changes in processor technology development over the decades.

- Some of the key players in the early days of mainframes.

- The rise of microprocessors.

- The shrinking size of today's microprocessors and the push for security measures.

The UNIVAC I was actually the second commercial computer; it had a liquid-mercury delay-line memory containing 1,000 words of 12 alphanumeric characters each. It ran at low-megahertz speeds and could read 7,200 decimal digits/s. At the time, the thought of a computer that fit in the palm of your hand and could communicate wirelessly with the rest of the world was in the realm of science fiction, along with ray guns and flying cars. Those other items haven’t progressed as fast as processor technology, but we have them all now.

The AMD processor is built on 5-nm transistor technology running at gigahertz speeds. Its power consumption is on the order of a couple hundred watts compared to early mainframes that required many kilowatts along with forced-air or even water cooling. The UNIVAC used 125 kW of power and weighed in at 13 tons. Multichip servers typically have gigabytes to terabytes of external storage, not to mention on-chip registers and multilevel cache systems.

Processor and computer architectures were just evolving. The UNIVAC 1103A was the first computer to have interrupts. Inputs were often punch cards, paper tape, or magnetic tape, while output typically included reams of 132-column paper (14-in.-wide paper at 10 characters/in. plus borders). The blinking status lights were not LEDs and rows of toggle switches were standard fare.

Mainframes

Mainframes were usually housed in special rooms, with raised floors for cabling and cooling being the norm for decades. The famous International Business Machines (IBM) System/360 (S/360) started out in 1964 (Fig. 2). The S/360 replaced five other IBM computer families.

The S/360 introduced IBM’s Solid Logic Technology (SLT). This packaging technology mixed custom hybrid circuits that included discrete, flip-chip-mounted, glass-encapsulated transistors and diodes plus silk-screened resistors on a ceramic substrate. The basic architecture starts with sixteen 32-bit registers, 8-bit bytes, and 24-bit addressing. EBCDIC was the preferred character encoding, whereas ASCII is the accepted standard now. Nine-track magnetic tape was ubiquitous, and eventually the IBM 3340 "Winchester" disk drive was added to the mix.
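To put 24-bit addressing in perspective, a quick calculation shows how much memory such an address can reach. This is only a minimal sketch: the function name `addressable_bytes` is made up for illustration, and it assumes each address selects one 8-bit byte, as the byte-oriented description above suggests.

```python
def addressable_bytes(address_bits: int) -> int:
    """Return how many distinct byte addresses an address of this width can select."""
    return 2 ** address_bits


# 24-bit addressing, as described for the S/360
s360_bytes = addressable_bytes(24)

print(s360_bytes)                    # 16777216 bytes
print(s360_bytes // (1024 * 1024))   # 16 MiB
```

By comparison, a 32-bit address reaches 4 GiB under the same assumption, which is one way to see how far address spaces have grown since.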

IBM was joined by the "BUNCH" (Burroughs, UNIVAC, NCR, Control Data, and Honeywell). Each had its own architecture. Programming languages like FORTRAN and COBOL joined assembler, making processors easier to program.

Operating systems were typically written in assembler, but the Burroughs B5000 mainframe was programmed in Algol. The Burroughs ESPOL (Executive Systems Problem Oriented Language) Algol-variant provided system access and was used to write the Master Control Program (MCP). There was no assembler for these mainframes. The C programming language didn’t become available or popular until much later. The term MCP was later used as the name of the antagonist in the Tron movie franchise.

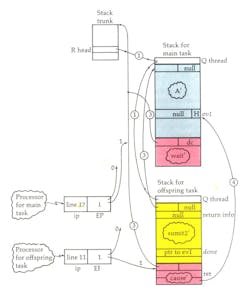

I like to mention the B5500 since it was one of the first mainframes I used. It had a descriptor- and stack-oriented architecture that was implemented in hardware (Fig. 3). It also employed tagged words that differentiated data from code. The B6500 moved to an 8-bit, variable-length instruction set.

Multiprocessor systems were common, but each processor was typically a box or board. Though mainframes still exist, they have migrated to the latest multicore chip technologies.

Minicomputers

The desire to shrink computers and make them more accessible led from mainframes to minicomputers, which were available from the mainframe companies plus a host of others, including Digital Equipment Corporation (DEC), Data General, Hewlett-Packard (HP), Prime Computer, and Wang Laboratories.

The typical minicomputer was 16-bit and weighed in at about 50 pounds; it didn’t require a custom-built room, although air conditioning helped. One could be acquired for as little as $10,000. They were constructed using readily available LSI technology. The 7400 series of transistor-transistor logic (TTL) chips was a popular implementation tool. These chips are still available but are rarely used to implement processors anymore.

I used a dual-processor version of the HP 2000 minicomputer in high school (Fig. 4). It was a timesharing system that could host dozens of Teletype terminals with optional punched-paper-tape units and, eventually, cathode-ray-tube (CRT) displays. Long-distance connections courtesy of 300- and 1200-baud modems were the norm.

The 12-bit DEC PDP-8 was a popular platform and the 16-bit PDP-11 was eventually turned into the LSI-11 chipset.

Microprocessors

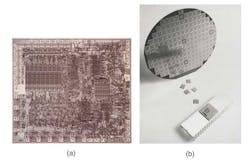

Microprocessors compatible with minicomputers were available. However, the 8-bit microprocessors that eventually drove the personal-computer (PC) revolution can trace their lineage to the Intel 8008, which was replaced by the Intel 8080 in a 40-pin dual-inline package (DIP) (Fig. 5). The initial clock rate was 2 MHz and instructions required a minimum of four cycles to complete. There was no caching, pipelining, or multithreading yet.

The 8080 was implemented using N-type metal-oxide-semiconductor (NMOS) logic with non-saturated enhancement-mode transistors as loads. It was compatible with 5-V TTL. The MPU had 8-bit registers that could be combined into 16-bit registers. A 16-bit stack pointer supported a stack in memory, which made recursion practical.
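The idea of combining 8-bit registers into a 16-bit register can be sketched in a few lines. This is an illustration only, not a model of the 8080's internals: the helper names `combine_pair` and `split_pair` are hypothetical, and the example assumes the usual 8080 convention in which H holds the high byte and L the low byte of the HL pair.

```python
def combine_pair(high: int, low: int) -> int:
    """Form a 16-bit value from two 8-bit register values (high byte first)."""
    if not (0 <= high <= 0xFF and 0 <= low <= 0xFF):
        raise ValueError("register values must fit in 8 bits")
    return (high << 8) | low


def split_pair(value: int) -> tuple:
    """Split a 16-bit value back into its (high, low) 8-bit halves."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("value must fit in 16 bits")
    return (value >> 8) & 0xFF, value & 0xFF


# Hypothetical register contents: H = 0x12, L = 0x34
hl = combine_pair(0x12, 0x34)
print(hex(hl))           # 0x1234
print(split_pair(hl))    # (18, 52), i.e. (0x12, 0x34)
```

A 16-bit pair like this is wide enough to hold a full memory address on a processor with a 16-bit address space, which is why pairing registers was useful for pointers.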

The Intel 8080 was the heart of the IMSAI 8080 microcomputer (Fig. 6) that showed up in the movie WarGames and was mentioned in Ready Player One. The Intel 8085 was a single-voltage, 5-V part that was eclipsed by the Zilog Z80. The Z80 was inside the Epson QX10 with 256 kB of RAM that ran an all-in-one office suite called VALDOCS, which I worked on while at Rising Star Industries.

Intel wasn’t alone with the 8080. A host of other 8- and 16-bit microprocessors, like the Motorola 6800 and MOS Technology 6502, were in everything from the Atari 2600 to the Apple II.

But it was the 16-bit Intel 8088 and the 8086, along with IBM, that created the Personal Computer family. The 8088-based IBM PC and its BIOS started the consumer side of things. It had a floppy disk and eventually a 5-MB hard disk.

The 8088/86 architecture was designed to make migration from the 8080 easy, but the two were neither source- nor binary-compatible. It’s a world of difference from the x86 compatibility we know today.

Current Architectures

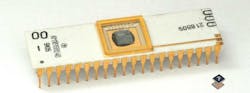

Processors have scaled up in performance and down in size. It’s possible to get 8-, 16-, and even 32-bit microcontrollers in BGA packages only a couple of millimeters on a side. They typically have on-chip clocks, flash memory, serial and parallel interfaces, as well as analog-to-digital and digital-to-analog converters. Some even have on-chip sensors for measuring things like temperature.

That’s a far cry from the first single-chip microcontroller I used, the Intel 8748, an 8-bit processor with a UV EPROM (Fig. 7). The current NXP Kinetis KL03 is available in a 2-mm² chip-scale package (CSP). The 32-bit Cortex-M0+ runs at 48 MHz with 32 kB of flash, compared to the Intel 8748 with an 11-MHz clock delivering 0.73 MIPS and a 2-kB UV EPROM.
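The 0.73-MIPS figure can be reproduced from the clock rate with a little arithmetic. The sketch below rests on an assumption not stated in this article: that the 8748, like other members of Intel's MCS-48 family, takes 15 oscillator periods per machine cycle and that its fastest instructions complete in one machine cycle. The function name `peak_mips` is made up for illustration.

```python
# Assumption: 15 oscillator periods per machine cycle (MCS-48 family timing),
# with the fastest instructions taking a single machine cycle.
CLOCKS_PER_MACHINE_CYCLE = 15


def peak_mips(clock_hz: float, clocks_per_instruction: int) -> float:
    """Best-case millions of instructions per second for a given clock rate."""
    return clock_hz / clocks_per_instruction / 1_000_000


mips_8748 = peak_mips(11_000_000, CLOCKS_PER_MACHINE_CYCLE)
print(round(mips_8748, 2))   # 0.73
```

This is a peak figure; instructions that need two machine cycles would lower the average throughput.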

Packaging is also changing how processors are put together. 2.5D and 3D stacking have been used extensively for memory and for higher-end processors. There are also wafer-based solutions like the one from Cerebras Systems that puts a trillion transistors to work with a focus on machine-learning algorithms.

The choices now at hand for developers are radically different from years ago. Multicore processor chips are readily available from small microcontrollers to chips designed for cloud-based servers. Processor architectures have included x86, Arm, MIPS, RISC-V, SPARC, and POWER. Of these, x86 and Arm now dominate with RISC-V on the rise.

Having access to a massive number of transistors has made system-on-chip (SoC) solutions with many different processors possible; the variety is mind-boggling. Likewise, the use of dedicated processors for functions from security to network management is now common.

The ramp-up of the Internet of Things (IoT) and IoT devices has pushed the need for security processors and secure on-chip storage. Dedicated communication processors are sometimes added to the mix, and low-power processors can augment a higher-performance processor by handling the work when computational demands are low. Low-power options have led to always-on operation, so there’s no real off button these days.

While I have concentrated on the basic central-processing-unit (CPU) architectures, we should not overlook the plethora of new architectures like graphics processing units (GPUs), FPGAs, and programmable accelerators for tasks such as machine learning. General-purpose GPUs (GPGPUs) and CPUs share multichip communication links. GPU programming takes single-instruction, multiple-data (SIMD) and vector computations to another level, and software developers are now mixing target platforms to achieve optimal performance.

There’s also a move to disaggregation in server architectures. This is again changing how we look at chip and system design. Whereas the SoC looks to put different things on one chip, disaggregation enables storage and communication to be moved to a remote location.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do a bit of PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on time with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.