
Robots have become ubiquitous in assembly lines, doing everything from manhandling a car to assembling a smartphone. They are fast, accurate, and do not require breaks or overtime pay. They have been unfeeling, literally, which often makes them hazardous to us humans.

Robots have killed and injured their human counterparts, but this usually occurs because safety mechanisms have allowed them to continue to operate after a person has moved into the space reserved for robotic operation. These types of production robots are typically caged, either physically with barriers or electronically with optical or ultrasonic “curtains.”

These restrictions have changed, though. So beware: cobots, the truncated word for cooperative robots, may be coming to a street near you (Fig. 1). For example, Marble’s robot, controlled by an Nvidia Jetson TX1, delivers food ordered through the Yelp Eat24 app in San Francisco’s Mission and Potrero Hill districts. These autonomous ground delivery vehicles (OK, they are tiny self-driving cars) roll along the sidewalk with their tasty treats, avoiding people, cars, and pets while cruising at walking speed to the intended diners.

1. Marble’s robot, controlled by an Nvidia Jetson TX1, delivers food in San Francisco that was ordered using a Yelp Eat24 app.

Programs running on the Jetson TX1 take advantage of the latest deep-neural-network (DNN) machine-learning technology (see “What’s the Difference Between Machine Learning Techniques?”) to analyze information from an array of sensors that surround the cobot with a virtual sensor map. “Through Jetson’s AI and deep-learning capabilities, our robots can better perceive the world around them,” says Matt Delaney, CEO and co-founder of Marble. “Together we’re working to make urban transportation of goods more accessible.”

Cobots in the wild “outdoors” are just an extension of cobots in more controlled spaces like hospitals. Aethon’s Tug (Fig. 2) has been trundling around medical facilities for years (see “Robotics Moves To The Mainstream”). The Tug’s LiDAR and other sensors are similar to what’s used by Marble. However, the Tug has a couple of advantages, such as Wi-Fi communication with doors and elevators that have been thoughtfully configured to interact with these almost silent robot couriers. This allows a Tug to move throughout the hospital without human intervention except for the exchange of materials.

2. Aethon’s family of Tug cobots has found a home in controlled environments like hospitals, delivering everything from medicines to food.

Exchanges are similar to the Marble Robots system. The robot has one or more electronically locked compartments that are opened in response to a code entered by a user. These days, this could be done using a panel on the robot or with another device like a smartphone. A person is still required to open the door and add or remove items. The robot can then continue along with subsequent deliveries, or it may return to a control point or charging station, since most of these robots are electrically powered.

Generally speaking, Marble Robots looks to change urban logistics, while Aethon has already done so in hospitals. More articulated delivery robots are already being employed in controlled spaces such as warehouses. These range from smart forklifts to inventory pickers.

Armed and Not Dangerous

Putting a robot behind a physical or virtual barrier is handy within an assembly environment if the robot can operate in isolation as materials move through the system. This has been very successful to date, but there are advantages to robots that can operate in close proximity to humans. Examples of this include Rethink Robotics’ one-armed Sawyer (Fig. 3) or its two-armed counterpart, Baxter (see “Interview With The Creators Of Baxter - Rethink Robotics”).

3. Rethink Robotics’ Sawyer is a one-armed cobot designed to operate in close proximity with humans.

Like many cobots, Sawyer is designed to detect humans through sight, sensors, and touch. However, its limbs are also designed to limit the power they can exert. Furthermore, it’s designed for low-impact operation with a counterweighted arm.

In fact, the easy movement of the arm by a person is one way of programming Sawyer and Baxter. Enable its training program, move the arm around and adjust the grippers, show an object to be manipulated to the camera in the hand, and so on. Then stand back and allow it to repeat the process without fear of getting knocked off your feet by that robotic arm.

4. Festo’s BionicCobot, a pneumatic robot with seven joints, can be in close proximity with humans.

Festo’s BionicCobot (Fig. 4) is a pneumatic lightweight robot that can work in close quarters with humans. It has seven joints with a drive approach that makes it possible to exactly determine the force potential and rigidity level of the robot arm. The pneumatic arm automatically eases off in the event of a collision to protect nearby humans or objects, including other cobots.

The arm has three axes of freedom in its shoulder area, one each in the elbow and lower arm, plus two axes in the wrist. At each point, there is a rotary vane with two air chambers. This pneumatic motor can be infinitely adjusted like a mechanical spring by filling the chambers with compressed air (Fig. 5).

5. The BionicCobot mixes pneumatics with electronics to provide precise control.
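As a rough illustration of how a two-chamber rotary vane turns air pressure into joint torque, the sketch below uses the textbook relation torque = pressure difference × vane area × mean radius. This is not Festo’s control method. The function name, the vane dimensions, and the pressures are all hypothetical values chosen only to show the arithmetic.

```python
def vane_torque(p_a_pa, p_b_pa, vane_area_m2, mean_radius_m):
    """Net torque (N*m) on an idealized rotary vane.

    p_a_pa, p_b_pa  -- absolute pressure in each chamber, in pascals
    vane_area_m2    -- area of the vane face the air pushes on (assumed)
    mean_radius_m   -- distance from the shaft to the centre of that face (assumed)
    Friction and leakage are ignored in this sketch.
    """
    return (p_a_pa - p_b_pa) * vane_area_m2 * mean_radius_m


# Hypothetical numbers: 4 bar against 3 bar on a 10 cm^2 vane at 3 cm radius.
driving = vane_torque(400_000, 300_000, 1e-3, 0.03)

# Equal pressures give no net torque, whatever the common pressure level is.
balanced = vane_torque(350_000, 350_000, 1e-3, 0.03)

print(f"driving torque:  {driving:.2f} N*m")
print(f"balanced torque: {balanced:.2f} N*m")
```

In this idealized model, only the pressure difference sets the torque. Raising both chamber pressures together leaves the torque unchanged but compresses the air more, which is one plausible reading of how such a joint can be made softer or stiffer, like an adjustable spring.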

Of course, these cobots will not be lifting cars, anvils, or other large and heavy objects in close proximity to humans because the objects are dangerous. Designers and users need to keep this in mind, since the cobots aren’t the only part of the equation. A beaker of acid may not weigh much, but it can have severe consequences if spilled.

Look and Do Touch

The range of sensors employed by cobots continues to grow as the cost drops for these devices. Low-cost video cameras combined with machine learning and DNNs are just one way cobots, self-driving cars, and other robots are gaining more information from their surroundings.

6. Bebop Sensors’ materials have been used in a range of smart-fabric applications, from shoes to smart baseball bats.

Bebop Sensors’ smart-fabric technology (Fig. 6) applications range from shoes to smart baseball bats. The washable fabric can measure XYZ location in addition to bending, twisting, rotation, and the force being applied. The Bebop materials are available in woven and non-woven base cloth, and multiple sensors can be placed on the same piece of fabric in sizes up to 60 in. wide. Thickness ranges from 0.25 mm to 0.50 mm. The printed layers can include dielectric, resistive, and controlled conductive structures, along with USB, BLE, CAN, or Wi-Fi electronics. This can give a cobot real feelings, so to speak.

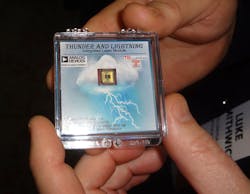

In another area, TriLumina’s vertical cavity surface-emitting laser (VCSEL) array emitters are paired with Analog Devices’ high-speed laser drivers (Fig. 7) to deliver 3D LiDAR illumination that will radically change the LiDAR landscape. LeddarTech and TriLumina demonstrated a 256-pixel LiDAR solution at the 2017 Consumer Electronics Show.

7. TriLumina’s VCSEL array emitters are paired with Analog Devices’ high-speed laser drivers to produce 3D LiDAR illumination.

Conventional LiDAR relies on mechanical means for laser scanning; many LiDAR systems are, in fact, 2D. They’re neither cheap nor low power compared to the emerging solid-state alternatives. Systems with a resolution of up to 512 by 64 pixels over a field-of-view of 120 by 20 degrees, and detection ranges that exceed 200 m for pedestrians and well over 300 m for vehicles, should be available in 2017.
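To put the resolution and field-of-view figures in perspective, here is a minimal back-of-the-envelope sketch. It assumes the pixels are spread evenly across the field-of-view, which the vendors do not state, and the function name is made up for illustration. It estimates the angle covered by each pixel and the spacing between adjacent pixel centers at a given range.

```python
import math


def pixel_footprint(fov_deg, pixels, range_m):
    """Return (degrees per pixel, spacing in metres between neighbouring
    pixel centres at range_m), assuming evenly spaced pixels."""
    deg_per_pixel = fov_deg / pixels
    spacing_m = range_m * math.tan(math.radians(deg_per_pixel))
    return deg_per_pixel, spacing_m


# Figures quoted in the text: 512 x 64 pixels over 120 x 20 degrees, at 200 m.
h_deg, h_m = pixel_footprint(120, 512, 200)
v_deg, v_m = pixel_footprint(20, 64, 200)

print(f"horizontal: {h_deg:.3f} deg/pixel, {h_m:.2f} m between pixels at 200 m")
print(f"vertical:   {v_deg:.3f} deg/pixel, {v_m:.2f} m between pixels at 200 m")
```

Under that even-spacing assumption, neighboring pixels are roughly a meter apart at 200 m, so a distant pedestrian would register on only a handful of pixels.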

LeddarTech and TriLumina aren’t alone in the race for 3D LiDAR dominance that, at this point, is targeting automotive applications. Quanergy’s S3 has a 150-m range, an accuracy of ±5 cm at 100 m, and a 120-degree horizontal and vertical field-of-view. The forthcoming S3-Qi is even smaller and lower power, with a range over 100 m.

Velodyne’s Velarray, which leverages the company’s proprietary ASICs, comes in a 125- × 50- × 55-mm package. It offers a field-of-view of 120 degrees horizontal and 35 degrees vertical with a 200-m range. It will have an ASIL B safety rating. Multiple units can be combined for a 360-degree view to support safe operation in L4 and L5 autonomous vehicles.
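The arithmetic behind a multi-unit 360-degree view is simple enough to sketch. The helper below is hypothetical, and the overlap value is an assumption rather than a Velodyne specification. It counts how many fixed-field-of-view sensors are needed to ring a vehicle when adjacent units share some overlap.

```python
import math


def units_for_full_coverage(fov_deg, overlap_deg=0.0):
    """Minimum number of identical sensors needed to cover 360 degrees
    horizontally when each adjacent pair shares overlap_deg of view."""
    effective_deg = fov_deg - overlap_deg
    if effective_deg <= 0:
        raise ValueError("overlap must be smaller than the field-of-view")
    return math.ceil(360 / effective_deg)


# 120-degree horizontal field-of-view, as quoted for the Velarray.
print(units_for_full_coverage(120))        # edge-to-edge, no overlap
print(units_for_full_coverage(120, 10))    # assumed 10-degree overlap
```

With no overlap, three 120-degree units close the circle. Any overlap at all pushes the count to four, which is worth keeping in mind when budgeting sensors for a vehicle or a cobot.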

These technologies will revolutionize other application areas like self-driving cars, and most likely will do the same for cobots.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.