Data Fusion Enables Next-Generation Smart Mobile Applications

Imagine a world where your phone has really become your personal assistant, able to detect your needs and anticipate your next move. Let’s say your friend Eva sends you a text message reminding you that you’re having dinner with her after work. It’s 7 p.m. and you’re in a rush to leave the office to make it to your 7:30 p.m. restaurant reservation.

By having access to your messages and schedule, and detecting that you’re headed for the train station, your phone determines that you’re on your way to meet Eva. What you didn’t know was that there are massive delays in the subway. But because your phone also has access to your favorite public transit app, it alerts you that your train is running late so taking a cab is your best bet. Phew! A mini-crisis is averted. Now you can focus on the things that really matter.

This scenario requires your phone to have access to a lot of different information and types of data. This growing demand for applications that understand our context drives a need to analyze a wider variety of data sources. This, in turn, requires a shift from platforms that offer “sensor fusion” to those that feature “data fusion.” And as we’ll see, data fusion solutions will power the next generation of smarter devices and services.

Sensor Fusion Falls Short

Sensor fusion intelligently combines and processes data streams from multiple sensors so the output is greater than the sum of the individual sensor results. It compensates for the deficiencies of individual sensors and provides a synthesized smart output from a combination of accelerometers, gyroscopes, and magnetometers. The signals are consumed and processed simultaneously to detect device orientation and enable compelling mobile applications such as games, tilt-compensated e-compasses, augmented reality, and more (see “Augmented Reality Will Be Here Sooner Than You Think”).

Sensor fusion can provide a very accurate 3D orientation based on inertial sensor data. Many other features then can be built on top of this data. The technology raises awareness of the power of using sensors in combination and is a market driver for devices using combinations of microelectromechanical systems (MEMS) in mobile devices.
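To make the idea concrete, here is a minimal sketch of one common sensor fusion technique, the complementary filter, applied to a single axis (roll). It is an illustration and not the method of any particular vendor. The function names, the 0.98 blending factor, and the axis convention (z pointing out of the screen, so a device lying flat at rest reads roughly +9.81 m/s² on z) are all assumptions.

```python
import math


def accel_tilt(ax, ay, az):
    """Roll and pitch in radians from one accelerometer sample.

    Only meaningful when the device is not accelerating, so that the
    reading is dominated by gravity.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_step(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend the integrated gyro rate (smooth but drifting) with the
    accelerometer angle (noisy but drift-free)."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


def fuse_roll(samples, dt, alpha=0.98):
    """samples: iterable of (gyro_x_rad_per_s, ax, ay, az) tuples.

    Returns the final roll estimate in radians, starting from zero.
    """
    roll = 0.0
    for gx, ax, ay, az in samples:
        accel_roll, _ = accel_tilt(ax, ay, az)
        roll = complementary_step(roll, gx, accel_roll, dt, alpha)
    return roll
```

With a stationary device and a small constant gyroscope bias, integrating the gyroscope alone would drift without bound, while the blended estimate stays close to zero because the accelerometer term keeps pulling it back.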

Today, sensor fusion solutions are pretty widespread, offered by semiconductor manufacturers and sensor fusion software experts. However, many solutions on the market are not robust enough and are tied to specific hardware, since they’re provided by hardware manufacturers, in effect limiting customers to their solutions. Continued market growth will increasingly depend on features that require additional sensors and different types of data on the device and in the cloud.

The demand for smarter applications and services on increasingly connected devices drives requirements for OEMs. Consumers are asking for a higher level of awareness and intelligence—devices that know, for example, where they are, what they’re doing and with whom, and provide personalized services based on these data points. Moreover, they want applications that can leverage this context and other data to anticipate users’ needs and deliver the right information, at the right time, in the right way.

By the same token, phone OEMs and app designers need to provide access to available services anytime, anywhere by providing developers the best context-based platform to enable easy-to-use and high-performing devices and applications. To facilitate this, OEMs and the application developer community need developer kits, cloud-hosted libraries of data models, and a platform that enables natural user interfaces without sacrificing power, computing, and appropriate sensor availability.

The Move To Data Fusion

Delivering on the promise of smarter and context-aware applications demands a shift from sensor fusion to data fusion: gathering data to determine the device’s state, the user’s activity, and the environment, and merging it with additional user data present on the phone or in the cloud. Data fusion is a critical enabling technology for pervasive context awareness on mobile devices. A wide variety of conditions could be detected:

• Device state: on the table; connected to a docking station; in hand; by an ear; in a backpack; in a pocket; in a purse; in a holster; in a shoulder bag

• User activity: standing, sitting, walking, or running; biking, riding, or skating; lying face up or down

• User environment: in a car, bus, train, or plane; in an elevator; going in/out the door
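One way an application might represent these three kinds of conditions is sketched below. The type and field names are hypothetical, and only a few of the listed values are included to keep the example short. Each field is optional because a real detector will not always have an answer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DeviceState(Enum):
    ON_TABLE = "on the table"
    IN_HAND = "in hand"
    IN_POCKET = "in a pocket"


class Activity(Enum):
    STANDING = "standing"
    SITTING = "sitting"
    WALKING = "walking"
    RUNNING = "running"


class Environment(Enum):
    CAR = "in a car"
    TRAIN = "in a train"
    ELEVATOR = "in an elevator"


@dataclass(frozen=True)
class Context:
    """Snapshot of what is currently known; None means not detected."""
    device_state: Optional[DeviceState] = None
    activity: Optional[Activity] = None
    environment: Optional[Environment] = None

    def summary(self) -> str:
        parts = [self.device_state, self.activity, self.environment]
        return ", ".join(p.value for p in parts if p is not None) or "unknown"
```

For example, `Context(activity=Activity.SITTING).summary()` yields a description with only the activity filled in.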

Many companies want to provide context awareness solutions for their customers, including Intel, Qualcomm, Google, and Freescale Semiconductor. But the industry needs to come together to overcome several obstacles to enable pervasive applications and services for smarter devices.

Among the challenges in developing a user-centric offering is the need for an open framework that supports data fusion, in which different players with very different skills can contribute to create smarter devices and apps. This open framework needs to multiplex many sources of data in vastly different formats across heterogeneous networks.

The results must also be tunable to new data types and new use cases, which is yet another challenge. The framework then needs to accommodate different data rates, data synchronization, and data loss. Effective learning strategies need to be developed for cases where no a priori knowledge exists about the mapping from data to response. Finally, standards for data and metadata representation need to be developed.
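The data rate, synchronization, and data loss issues can be illustrated with a small sketch. It pairs each sample of a fast stream with the nearest-in-time sample of a slower stream and reports a gap when nothing is close enough. The function names and the `max_gap` tolerance are assumptions chosen for illustration; a production framework would likely add interpolation and clock-offset handling.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t, max_gap):
    """Value whose timestamp is closest to t, or None if the closest
    one is more than max_gap away (treated as lost data).

    timestamps must be sorted in ascending order.
    """
    if not timestamps:
        return None
    i = bisect_left(timestamps, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    if abs(timestamps[best] - t) > max_gap:
        return None
    return values[best]


def align(fast, slow, max_gap):
    """fast and slow are (timestamps, values) pairs sorted by time.

    Returns one (t, fast_value, slow_value_or_None) tuple per fast sample.
    """
    fast_t, fast_v = fast
    slow_t, slow_v = slow
    return [
        (t, v, nearest_sample(slow_t, slow_v, t, max_gap))
        for t, v in zip(fast_t, fast_v)
    ]
```

Returning `None` instead of silently reusing a stale value leaves the decision about missing data to the model that consumes the aligned stream.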

Framework And Architecture

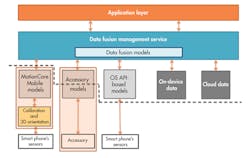

The next generation of intelligent and aware mobile applications will rely on enabling data fusion models and services to gather and process data from a variety of sources in a power-efficient and cost-efficient way.

Data fusion can use embedded motion processing models deployed to mobile phones and accessory devices. It also can give operating systems (OSs) access to these models through a data fusion management service, which draws on external data to make the models more accurate. The models then can be exposed to applications running above the OS. Examples of embedded motion models include:

• Small untriggered gestures, such as face up/down, free fall, directional shake, single/double tap, and seesaw

• Activity recognition and energy expenditure

• Complex gestures, both for triggered and untriggered recognition

• Pedestrian navigation for such functions as a 3D magnetic compass, floor change detection, step cadence detector, and distance estimator
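Two of the small untriggered gestures above, free fall and face up/down, can be sketched with simple threshold rules on accelerometer data. This is an illustrative simplification, not a description of any shipping model. The thresholds and function names are assumptions, as is the axis convention (z pointing out of the screen, so a device lying face up at rest reads about +9.81 m/s² on z).

```python
import math

G = 9.81  # standard gravity in m/s^2


def is_free_fall(window, threshold=0.3 * G):
    """True when every (ax, ay, az) sample in the window has a
    magnitude below the threshold, i.e. the device feels almost
    no gravity for the whole window."""
    return len(window) > 0 and all(
        math.sqrt(ax * ax + ay * ay + az * az) < threshold
        for ax, ay, az in window
    )


def face_orientation(ax, ay, az, min_fraction=0.8):
    """Classify a resting device as 'face up', 'face down', or 'other'
    from the share of gravity measured along the z axis."""
    if az > min_fraction * G:
        return "face up"
    if az < -min_fraction * G:
        return "face down"
    return "other"
```

Requiring the whole window to be below the threshold, rather than a single sample, is a cheap way to reject brief dips caused by shaking.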

The data fusion models ensure a robust communication process between motion-based models and other kinds of models, such as those built on the user’s personal information, agenda, social media, or cloud data. The models also can manage data synchronization, data validity, and the data lifecycle. In addition, they can enforce system access rights and arbitrate competing access to models when needed (Fig. 1).
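As a rough illustration of data validity and lifecycle management, the sketch below keeps each published result together with an expiry time and refuses to return it once it is stale. The class name, method names, and the time-to-live approach are hypothetical choices, not part of any framework described here.

```python
import time


class ContextStore:
    """Holds the latest value per key and expires it after a
    publisher-chosen time to live."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock   # injectable so the behavior can be tested
        self._entries = {}    # key -> (value, expiry time)

    def publish(self, key, value, ttl_s):
        """Store a value that stays valid for ttl_s seconds."""
        self._entries[key] = (value, self._clock() + ttl_s)

    def read(self, key):
        """Return the value if still valid, otherwise None."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # end of this value's lifecycle
            return None
        return value
```

A motion model might publish "walking" with a lifetime of a few seconds, while a calendar model might publish an appointment that stays valid for hours.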

In addition to these embedded models, data fusion models also can run at the OS layer. There they include map matching to optimize indoor navigation, as well as activity monitoring and location combined with external information such as e-mail, weather, train schedules, and traffic. This enables developers to create compelling new apps and services.

Context-Aware Applications

In effect, combining embedded and hosted data fusion models enables smarter applications, based on user location, activity, environment, and external data. Smart application examples include:

• Locating the position of people when driving to the airport to pick them up

• Turning off a cell phone when entering a theater

• Analyzing activity and schedules to indicate the best route to an appointment

• Determining where you are and what you are doing (walking, sitting, running), and sending notifications when your train is late so you don’t have to rush
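The late-train example can be reduced to a small decision rule once the schedule, travel times, and transit delay are available from their respective sources. The sketch below assumes those inputs have already been fetched. The names, the three possible answers, and the simple comparison logic are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Appointment:
    title: str
    minutes_until_start: float


def suggest(appointment, train_trip_min, train_delay_min, cab_trip_min):
    """Pick a suggestion from schedule data plus transit data.

    Returns 'train' if the delayed train still arrives on time,
    'cab' if only the cab does, and 'notify_late' otherwise.
    """
    train_eta = train_trip_min + train_delay_min
    if train_eta <= appointment.minutes_until_start:
        return "train"
    if cab_trip_min <= appointment.minutes_until_start:
        return "cab"
    return "notify_late"
```

For a dinner that starts in 30 minutes, a 20-minute train ride with a 25-minute delay, and a 15-minute cab ride, the rule suggests the cab.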

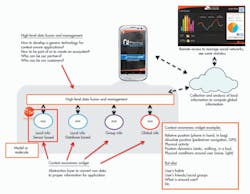

Let’s take a look at one data fusion application in detail: how to find your car in a parking lot, or how to find your favorite store in a mall (Fig. 2). Data fusion merges a user’s motion data with map data for accurate indoor navigation. Raw accelerometer, gyroscope, and magnetometer data are calibrated and serve as input to the heading and step count models. The heading model computes the smart phone’s change in direction with respect to due north. The smart phone’s observed direction is the heading of the trajectory.
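A very reduced version of a heading computation is sketched below. It assumes calibrated magnetometer readings, a device held flat, and an axis convention in which x points to the right of the screen and y toward its top. It is not tilt-compensated, and the function names are illustrative only.

```python
import math


def magnetic_heading_deg(mx, my):
    """Heading of the device's y axis, clockwise from magnetic north,
    in the range [0, 360). Valid only for a device held flat."""
    return math.degrees(math.atan2(-mx, my)) % 360.0


def true_heading_deg(mx, my, declination_deg=0.0):
    """Correct the magnetic heading by the local declination to get
    a heading relative to true north."""
    return (magnetic_heading_deg(mx, my) + declination_deg) % 360.0


def heading_change_deg(previous, current):
    """Signed smallest rotation from previous to current heading,
    in the range [-180, 180)."""
    return (current - previous + 180.0) % 360.0 - 180.0
```

The wrap-around handling matters in practice: turning from 350° to 10° is a 20° change, not a 340° one.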

When the user is walking, the step count model calculates the user’s step cadence. Combined with the user’s step length and height, the model computes the distance walked by the user in a given time period. The trajectory model then uses an initial position, the current heading, and the distance walked to create a trajectory, based on a sequence of positions over time, expressed in the coordinates of a given map. The system also uses a pressure sensor to detect floor changes, which can be used to trigger the map-matching module to load different maps according to the current floor.
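The trajectory and floor-change steps can be sketched as simple dead reckoning plus a pressure-to-height conversion. Several simplifications are assumed here: a fixed step length instead of one derived from cadence and height, map coordinates with x pointing east and y pointing north, a floor height of 3 m, and the near-sea-level approximation of about 8.3 m of altitude per hPa. All function names are hypothetical.

```python
import math


def step_distance(step_count, step_length_m):
    """Distance walked, assuming a constant step length."""
    return step_count * step_length_m


def advance(position, heading_deg, distance_m):
    """Move from position (x east, y north) along a heading measured
    clockwise from north."""
    x, y = position
    h = math.radians(heading_deg)
    return (x + distance_m * math.sin(h), y + distance_m * math.cos(h))


def trajectory(start, segments, step_length_m):
    """segments: iterable of (heading_deg, step_count) pairs.

    Returns the list of positions, beginning with the start point.
    """
    points = [start]
    for heading_deg, steps in segments:
        distance = step_distance(steps, step_length_m)
        points.append(advance(points[-1], heading_deg, distance))
    return points


def floor_change(p_ref_hpa, p_now_hpa, floor_height_m=3.0, metres_per_hpa=8.3):
    """Floors climbed (positive) or descended (negative) since the
    reference pressure was taken. Lower pressure means higher up."""
    height_m = (p_ref_hpa - p_now_hpa) * metres_per_hpa
    return round(height_m / floor_height_m)
```

Because errors in heading and step length accumulate with every step, a sketch like this drifts quickly on its own, which is why correcting the result against a map is useful.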

Some algorithms can detect whether you’re walking, sitting, standing, in an elevator, running, or lying down. This posture and activity detection enables a smart phone to adapt application behavior in accordance with the context, depending on the position and location. As an example, knowing that you are sitting down at work, the system will power down the location services.
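A policy of this kind could be as simple as the rule sketched below, which maps the detected activity and how long it has lasted to a location-service mode. The mode names, the set of stationary activities, and the two-minute dwell time are assumptions for illustration and do not correspond to any specific platform API.

```python
STATIONARY = {"sitting", "standing", "lying down"}


def location_mode(activity, seconds_in_activity, min_dwell_s=120):
    """Choose a location-service mode from the current activity.

    Location is switched off only after the user has been stationary
    for at least min_dwell_s seconds, to avoid toggling on short stops.
    """
    if activity in STATIONARY:
        if seconds_in_activity >= min_dwell_s:
            return "off"
        return "low_power"
    return "high_accuracy"
```

The dwell time acts as hysteresis: pausing at a crosswalk does not turn location off, but sitting at a desk for a few minutes does.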

Today’s solutions enable consumers to use their smart phone and other accessory devices to monitor, track, and share their activity to encourage healthy behavior and choices. Such offerings are available from companies such as Nike with the FuelBand, Jawbone with its Up, and Fitbit with its Flex.

By adding data fusion models to these products, we can expect more groundbreaking advances in the near future. Data fusion offers the ability to measure and track a wider array of sports and personal activities for advanced analyses of running, tennis, cycling, and more, making the mobile device and its accessories the single entry point to a brand new way of better knowing yourself.

Looking Ahead

Two forces are driving mobile OEMs and app developers to expand beyond basic sensor fusion: integrated services based on a user’s context, and consumers’ desire for smarter applications that can anticipate their needs and provide the right information at the right time and in the right way. Data fusion, which merges and processes data from a wide variety of sources, is the key to enabling more intelligent apps.

However, smooth integration is still needed, and the learning curve will be long because standards have yet to be developed. The industry shift to data fusion allows the creation of a new level of intelligent mobile applications. These include pedestrian navigation (allowing you to find your way anywhere outdoors and indoors), advanced activity monitoring (a unified solution to measure yourself and make healthier decisions), and features that simplify your everyday life by adapting the device’s behavior to the context.

The promise of a truly connected and contextually aware world is within our grasp. But to make it a reality, ecosystem players, mobile OEMs, semiconductor manufacturers, applications developers, and data giants need to unite and collaborate now to deliver this wide array of new intelligent services consumers are demanding.

Dave Rothenberg is Movea’s director of marketing and partner alliances. He has more than 14 years of go-to-market experience productizing and commercializing new technologies for companies in Silicon Valley and Europe. He takes an interdisciplinary approach to business, having held senior management roles in marketing, business development, and engineering across a range of markets including enterprise software, wireless and mobile, consumer electronics, health and fitness, and Web services. He holds dual degrees in aerospace engineering and physics from the University of Colorado, Boulder.
