Ethics in AI System Design: Creating a Language Game that All Can Play

To many in the artificial-intelligence (AI) community, ethics is a daunting if not outright off-putting topic. For technical experts, it’s easy to envision ethics as a long, drawn-out, and somewhat boring course of incomprehensible lectures about dead writers exploring self-evident topics. Or, worse still, ethics might mean the dreaded “ethics training”: Sitting in a dimly lit conference room listening to a corporate trainer describe conflict-of-interest declaration forms.

Overcoming these perceptions of ethics is the first challenge of creating ethically aligned AI. The second challenge is making ethics relevant for the domain. While ongoing projects by the Institute of Electrical and Electronics Engineers (IEEE), the British Standards Institution (BSI), and others will produce guidance documents to bring ethics to artificial-intelligence programmers and designers, programmers working today are right to wonder how to bring ethics into their field. One possible pathway is to address ethics as part of the language of the project itself. In this article, I present relevant questions to create a project- or firm-specific ethics language to guide AI teams.

The Purpose-Driven Product

Ethics is not an appendage to a well-designed project, nor is it necessarily a mandate from corporate. Whether in the AI field or elsewhere, successful products or processes have ethics “baked in” to them. The first ingredient to set out when working to build ethically conscious AI is the purpose of the project. The second component is a team whose work is conscientiously and consistently driven by this purpose.

The Purpose-Oriented Team

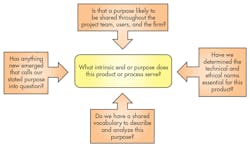

What is the purpose of this product or process?

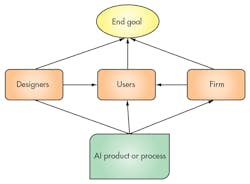

Designing ethics into artificial intelligence starts with determining which ethics matter to the designers, users, and sponsors of the project. While ethics is often associated with only moral or legal terms, such as virtues, ethics in the applied field of AI should also include technical terms.

Technical norms that programmers must master to produce a successful artificial-intelligence system include the ability to confidently code, debug, and execute a task in a particular programming language, such as C/C++. Successful coding requires attention to technical terms, such as accuracy, accountability, responsibility, and reciprocity. Those technical terms are, ideally, supplemented with conventional ethical norms, such as trustworthiness, honesty, and fairness.

Design-side ethics are instrumental to the purpose of the product or process. Whatever purpose we code for or code into the AI is the intrinsic or final end. Intrinsic goods we wish to achieve in design are more difficult to define because the range of ethical normative commitments is vast and encompasses our views on life and work in toto.

The process of defining a purpose becomes a task of sieving the gamut of tastes and commitments to identify those that are relevant. For this task, it’s helpful to return to the work of philosophers to ask, “For what purpose is this built?” This is what philosophers call a teleological question: one that queries the intrinsic values and ends for which we create things, including our lives themselves.

Choosing which value to stake as the end purpose of our projects is the most daunting task. Fortunately, however, recent work by the IEEE has suggested that, when we evaluate the possible ends for our AI systems, we should consider “well-being” as the ideal end.

Is this purpose agreed upon, communicable, and shared?

AI workers outside of the realm of philosophy may find the instruction to consider good or well-being as daunting as the instruction to consider ethics generally. However, some important resources exist to help AI workers develop a working definition of well-being, such as the Organisation for Economic Co-operation and Development (OECD) guidelines for the measurement of well-being and the IEEE Ethically Aligned Design and IEEE P7000 documents.

The OECD identifies 11 factors that a program, product, or process promoting well-being might address: income and wealth, jobs and earnings, housing, health, work and life balance, education and skills, civic engagement and governance, social connections, environmental quality, personal security, and subjective well-being. While not statements of end purposes or intrinsic values themselves, these 11 factors encompass arenas in which a product or process might serve the well-being of users, designers, or the company sponsoring the project.
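
One way a team might make this list operational is to record, factor by factor, how the project claims to serve well-being. The following is only a minimal sketch: the factor names are taken from the list above, while the function name `map_purpose_to_factors` and the example project claims are hypothetical and not part of any OECD or IEEE tooling.

```python
# The 11 well-being factors as listed in this article.
OECD_WELL_BEING_FACTORS = (
    "income and wealth",
    "jobs and earnings",
    "housing",
    "health",
    "work and life balance",
    "education and skills",
    "civic engagement and governance",
    "social connections",
    "environmental quality",
    "personal security",
    "subjective well-being",
)


def map_purpose_to_factors(claims):
    """Sort a team's claims into addressed, unaddressed, and unknown factors.

    claims: dict mapping a factor name to a short rationale (hypothetical format).
    """
    addressed = [f for f in OECD_WELL_BEING_FACTORS if f in claims]
    unaddressed = [f for f in OECD_WELL_BEING_FACTORS if f not in claims]
    # Labels that are not in the list are surfaced rather than silently dropped.
    unknown = sorted(name for name in claims if name not in OECD_WELL_BEING_FACTORS)
    return {"addressed": addressed, "unaddressed": unaddressed, "unknown": unknown}


# Hypothetical claims for an imagined pharmacy-support tool.
claims = {
    "health": "flags likely medication conflicts for pharmacists",
    "personal security": "keeps patient records on the device",
    "happiness": "not one of the 11 factor labels, so it is reported as unknown",
}
report = map_purpose_to_factors(claims)
print(report["addressed"])  # ['health', 'personal security']
print(report["unknown"])    # ['happiness']
```

The unaddressed list is not a scorecard. It is a prompt for the team to ask whether a factor is genuinely out of scope or simply has not been discussed.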

Do we have a shared vocabulary to describe and analyze this purpose?

Whether a team selects well-being or good or trust as their highest purpose for the project, it’s essential that all team members have a shared sense of the purpose and can identify what their role is in creating that intrinsic end. Shared sense of purpose is helped along by shared meaning of the terms within the design team.

As famed philosopher John Rawls points out, the process of attaining a “reflective equilibrium” is useful to create a set of shared and coherent meanings that groups can act upon. Two pathways unfold to define ethics for concerned teams: establishing an independent ethical dictionary internal to the team itself, or establishing an ethical dictionary that references existing ethics language, such as that produced by philosophers.

Pathway 1: Designing your own value system

To the extent that thoughtful teams wish to define their own ethical terms that align with their firm’s mission and values, there’s no a priori reason that this path should not be taken. Working from foundational intuitions—gut feelings about what is good or right beyond technically correct—group members trade statements about the ranking of mission values, definitions of those values, and visions about how those values cohere to achieve well-being or another similar intrinsic indicator.

The process of dialogue itself both creates meanings and generates buy-in to those meanings by team members. An ideal outcome of this dialogue might be a shared glossary of value terms that each member could incorporate into their daily practice. To add gravitas to this process and its documents, a helpful tool that emphasizes the core values (perhaps a thoughtfully designed poster or infographic) should be distributed throughout the team.
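
A shared glossary of this kind can live alongside the project's other artifacts. The sketch below is one possible shape for it, assuming each entry carries a definition, a rank among the mission values, and the set of team members who have endorsed it. The class `GlossaryEntry`, the function `unsettled_terms`, and all names and definitions in the example are hypothetical illustrations.

```python
from dataclasses import dataclass, field


@dataclass
class GlossaryEntry:
    term: str
    definition: str
    rank: int  # 1 = the value the team ranks highest
    endorsed_by: set = field(default_factory=set)


def unsettled_terms(glossary, team):
    """Return terms, in rank order, that at least one team member has not endorsed."""
    team = set(team)
    ordered = sorted(glossary, key=lambda entry: entry.rank)
    return [entry.term for entry in ordered if not team <= entry.endorsed_by]


# Hypothetical team and glossary entries.
team = {"ana", "bo", "chen"}
glossary = [
    GlossaryEntry("fairness", "similar cases receive similar outputs", 2, {"ana", "bo"}),
    GlossaryEntry("trustworthiness", "behavior matches documented limits", 1, {"ana", "bo", "chen"}),
    GlossaryEntry("accuracy", "error stays within the agreed tolerance", 3, {"chen"}),
]
print(unsettled_terms(glossary, team))  # ['fairness', 'accuracy']
```

Terms that remain unsettled are the ones that still need dialogue before they appear on any poster or infographic.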

Pathway 2: Designing a value system using AI

A complementary pathway, or one to be taken independently, is to use work from the discipline of ethics to identify shared meanings. Philosophical journals provide a rich trove of definitions of particular terms. Machine-learning algorithms could be run against this literature to determine which definitions and uses of a term of interest are consistent across sources.

Such an empirical, text-mining approach to definition creation gives team members a set of further learning sources to which they could refer in order to best capture how to define or deploy ethical language. This also gives the team the opportunity to buy into the process of creating shared meaning by bringing the task of defining ethical values into the normal workflow and expertise of an AI team.
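
As a rough illustration of the text-mining idea, the sketch below uses simple sentence-level co-occurrence counting, a much cruder technique than the machine-learning methods a team would likely choose, to find words that appear alongside a term in several independent sources. The function name `consistent_context_words`, the stopword list, and the three-sentence corpus are all invented for this example. A real project would draw on actual journal text and a proper NLP library.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list (an assumption; real lists are far longer).
STOPWORDS = {"a", "an", "and", "the", "of", "to", "is", "in", "on", "as", "for", "or", "by"}


def consistent_context_words(documents, term, min_sources=2):
    """Return words that share a sentence with `term` in at least `min_sources` documents.

    documents: dict mapping a source label to its text.
    """
    term = term.lower()
    sources_per_word = Counter()
    for text in documents.values():
        words_in_doc = set()
        for sentence in re.split(r"[.!?]+", text.lower()):
            tokens = re.findall(r"[a-z]+", sentence)  # note: splits hyphenated words
            if term in tokens:
                words_in_doc.update(t for t in tokens if t != term and t not in STOPWORDS)
        # Count each word once per source, so one long paper cannot dominate.
        sources_per_word.update(words_in_doc)
    return sorted(w for w, n in sources_per_word.items() if n >= min_sources)


# Invented sentences standing in for journal excerpts.
corpus = {
    "source_a": "Fairness requires impartial treatment of persons. Trust is earned slowly.",
    "source_b": "On one account, fairness is impartial procedure applied to equal persons.",
    "source_c": "Fairness concerns the distribution of burdens.",
}
print(consistent_context_words(corpus, "fairness"))  # ['impartial', 'persons']
```

The output is a list of candidate words for the team to discuss, along with the sources to return to. It is not a finished definition.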

The Time Challenge with Ethics

Shared meanings and dictionaries of ethical terms are only a starting point for building AI projects with an eye toward ethics. The bigger, more cumbersome, more time-consuming project is to ensure those ethics are imbued throughout each phase of the project lifecycle.

This requires teams to continuously engage in practical reflective equilibrium wherein they conscientiously evaluate whether the technical and aesthetic norms that were determined to be essential to achieving the end goal are met. In the event that new technical norms must be incorporated or new uses and aesthetic concerns arise, these must be defined and assessed as being coherent with the desired original end state.

Although the language of reflective equilibrium may be unusual for the domain, the practice of it is not. Reflection on whether a new product or process meets well-defined aesthetic goals is similar to the processes of assessing technical conformity, continuous quality improvement, software code review, and product beta-testing. Building ethics into an innovative system takes a substantial investment in time and intelligence.

Fortunately, philosophers and AI teams share another unexpected similarity: an obsession with the problem of time. Indefinite reflective exercises are of little use to AI teams hurriedly working to get their project out to a growing competitive market; an AI team working to instill ethical values into its products needs to plan for executive decision-making in the realm of ethics. The work of early computer scientist and behavioral scientist Herbert Simon may provide helpful insight into identifying optimal stopping points for ethical analysis, but that’s a matter for another article.

Dr. Sara R. Jordan is an Assistant Professor in the Center for Public Administration and Policy at Virginia Tech. Her research sits at the interface of ethics and high-consequence technologies research and development, such as AI and pharmaceutical R&D. Sara is also a member of The IEEE Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems and the IEEE P7000 Working Group. An avid kayaker and canoeist, Sara can be contacted at [email protected] when she is not on the water.