
FPGA-based prototyping allows for real-time simulation that can be used to develop and test applications on hardware that doesn’t yet exist or is hard to obtain. To find out more about the options, advantages, and trends, I spoke with Tom De Schutter, Director of Product Marketing and Physical Prototyping for Synopsys.

Wong: Could you briefly speak to why embedded software development is becoming more important, and how FPGA-based prototyping is being used to optimize the design of embedded devices?

De Schutter: To improve the power and/or performance characteristics of an SoC, customized software is often necessary so that hardware resources, rather than software, accomplish a function. This offloading of functions from software to hardware is one of the major ways semiconductor vendors differentiate their products.

Consider a function that takes advantage of the DSP or GPU block of the SoC. In an OS like Android, the tailoring may be required not only at the lowest driver levels of the Linux kernel, but also at higher levels, such as the graphics and audio elements of the hardware abstraction layer (HAL), the media or OpenGL ES API libraries, and even the activity manager of the application framework. FPGA-based prototypes are uniquely able to combine very high system clock speeds (upwards of 100 MHz with a HAPS-80 system that combines multiple Xilinx VU440 FPGAs) with real-world connectivity through a PHY interface. This allows engineers to execute a full software stack, including the kernel, HAL, libraries, and application framework, at near real-time speeds while interacting with high-fidelity physical equipment.

Wong: What kind of prototyping strategies do we currently see in the market in terms of how prototyping is used in the design process, whether teams are building their own solutions versus relying on commercial solutions, and interaction with other verification technologies?

De Schutter: We have observed that the ASIC design teams realizing the most benefits from FPGA-based prototyping have a strategy to use design-for-prototyping (DFP) methods. For example, to accelerate the transition of RTL and IP from the verification environment to the prototype, design teams anticipate the FPGA and memory resources available on the target prototyping system and, when necessary, create smaller-scale versions or substitutions of the blocks that will be most difficult to prototype.
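To make the DFP idea concrete, here is a minimal sketch of the kind of early resource check a team might run, assuming rough per-block estimates of logic and on-chip memory. The `Block` and `Target` types, the 60% utilization headroom, and all capacity numbers are hypothetical illustrations, not figures for the VU440 or any real system. The check also ignores splitting a single block across FPGAs, so a flagged block is only a *candidate* for a smaller-scale version or substitute.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Block:
    """One RTL block with rough resource estimates (hypothetical units)."""
    name: str
    luts: int       # estimated logic (LUTs) needed
    mem_kbits: int  # estimated on-chip memory needed, in kbits

@dataclass(frozen=True)
class Target:
    """Hypothetical per-FPGA capacities of the prototyping system."""
    fpga_count: int
    luts_per_fpga: int
    mem_kbits_per_fpga: int

def blocks_needing_substitution(blocks, target, utilization_pct=60):
    """Flag blocks that will not fit on a single FPGA at the given utilization.

    Flagged blocks are candidates for a smaller-scale version or a substitute
    (for example, an external-memory model instead of a huge on-chip RAM).
    """
    lut_budget = target.luts_per_fpga * utilization_pct // 100
    mem_budget = target.mem_kbits_per_fpga * utilization_pct // 100
    return [b.name for b in blocks
            if b.luts > lut_budget or b.mem_kbits > mem_budget]

def fits_system(blocks, target, utilization_pct=60):
    """Check the whole design against the total capacity of all FPGAs."""
    total_luts = sum(b.luts for b in blocks)
    total_mem = sum(b.mem_kbits for b in blocks)
    lut_cap = target.fpga_count * target.luts_per_fpga * utilization_pct // 100
    mem_cap = target.fpga_count * target.mem_kbits_per_fpga * utilization_pct // 100
    return total_luts <= lut_cap and total_mem <= mem_cap

blocks = [
    Block("cpu_subsys", luts=1_200_000, mem_kbits=20_000),
    Block("video_codec", luts=4_000_000, mem_kbits=30_000),
    Block("l3_cache", luts=300_000, mem_kbits=200_000),
]
target = Target(fpga_count=4, luts_per_fpga=2_500_000, mem_kbits_per_fpga=90_000)
print(blocks_needing_substitution(blocks, target))  # ['video_codec', 'l3_cache']
print(fits_system(blocks, target))                  # False
```

Here the oversized cache is the kind of block a DFP-minded team might replace with a scaled-down variant early, rather than discovering the shortfall during bring-up.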

Using a commercial prototyping system rather than a custom-built one is certainly a common strategy. More and more, we hear engineering managers point out that the superior ROI of commercial systems comes from several factors: the improved efficiency of a scalable, modular system that can be adapted to a variety of prototyping projects, large and small; the cost savings from eliminating the equipment maintenance and support burden on internal engineering resources; and the benefit of allocating engineering talent to the validation task itself rather than to developing validation tools.

I have seen cases where the staunchest engineers, who previously lobbied to continue using in-house hardware systems, become prototyping methodology experts at companies that adopt a commercial system like Synopsys HAPS with ProtoCompiler. Usually the tipping point is when our application consultants demonstrate the faster prototype bring-up and superior performance that are possible with our ProtoCompiler automation software. The engineers recognize that some features can only be accomplished by integrating the hardware, embedded firmware, and software.

While prototypes tend to be brought up using mature RTL that has passed a significant (>70%) portion of the verification process, we do see a significant fraction of the user community applying FPGA-based prototypes to what they call “simulation acceleration.” However, it’s not acceleration in the sense a verification specialist would define it. For a verification specialist, the term usually implies an emulator hosting the RTL, verification IP, and a synthesizable test bench.

Acceleration for a media codec developer may really mean a system capable of driving high volumes (gigabytes) of test data against the design under test (DUT) and examining the results at hardware execution speeds. A prototype with one or more FPGAs, large DDR memory ICs, and a PCIe or USB control interface is a common architecture we see for this task. When coupled with a software API for control and monitoring of the prototype, it becomes a very powerful means for the verification task, even with “immature” RTL blocks.
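The host-side half of this “acceleration” flow can be sketched as a loop that streams stimulus to the DUT in chunks and compares each result against a software golden model. This is a minimal illustration, not the HAPS API: `DutLink` is a hypothetical stand-in for whatever PCIe or USB driver interface a real prototype exposes, `FakeDut` simulates the FPGA in software, the byte-inversion “codec” is a toy, and the sketch assumes the DUT returns one output byte per input byte.

```python
from typing import Callable, Iterator, Optional, Protocol

class DutLink(Protocol):
    """Hypothetical host-side link to the prototype (e.g., over PCIe or USB)."""
    def write(self, data: bytes) -> None: ...
    def read(self, n: int) -> bytes: ...

def chunks(data: bytes, size: int) -> Iterator[bytes]:
    """Split a large stimulus buffer into fixed-size transfers."""
    for i in range(0, len(data), size):
        yield data[i:i + size]

def stream_and_check(link: DutLink, stimulus: bytes,
                     golden: Callable[[bytes], bytes],
                     chunk_size: int = 1 << 20) -> list:
    """Send stimulus chunk by chunk and compare each DUT result with a golden model.

    Assumes the DUT returns exactly one output byte per input byte.
    Returns the indices of chunks whose results did not match.
    """
    mismatches = []
    for idx, chunk in enumerate(chunks(stimulus, chunk_size)):
        link.write(chunk)
        result = link.read(len(chunk))
        if result != golden(chunk):
            mismatches.append(idx)
    return mismatches

def invert(chunk: bytes) -> bytes:
    """Toy golden model: bitwise-invert every byte."""
    return bytes(b ^ 0xFF for b in chunk)

class FakeDut:
    """Software stand-in for the FPGA, with an optional injected bit error."""
    def __init__(self, fault_at: Optional[int] = None):
        self._pending = b""
        self._fault_at = fault_at
        self._reads = 0

    def write(self, data: bytes) -> None:
        self._pending = data

    def read(self, n: int) -> bytes:
        out = bytearray(invert(self._pending[:n]))
        if self._reads == self._fault_at and out:
            out[0] ^= 0x01  # flip one bit to simulate a hardware bug
        self._reads += 1
        return bytes(out)

stimulus = bytes(range(256)) * 16  # 4 KiB of test data
print(stream_and_check(FakeDut(), stimulus, invert, chunk_size=1024))            # []
print(stream_and_check(FakeDut(fault_at=2), stimulus, invert, chunk_size=1024))  # [2]
```

Reporting chunk indices rather than stopping at the first failure lets a long run over gigabytes of data finish and then point the developer at the offending regions of the test set.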

Wong: Even as a vendor, I think you would have to agree that the price point for a commercial solution is higher. But when you talk to new customers, what is behind the trend of teams moving away from doing it themselves to buying a commercial solution?

De Schutter: Indeed, commercial systems are more expensive when you only consider the pure cost of the FPGAs in the system. And I have seen how challenging it can be for engineering managers to justify the cost, especially when a component procurement manager gets involved and starts to evaluate the bill of materials of only the PCB assemblies!

Like any physical product, it’s easy to look at a “tear-down” of the equipment and estimate the cost of goods sold (COGS). What’s not visible is the tens of thousands of lines of embedded firmware running on the on-board controller, the system IP and APIs that accomplish pin sharing and workstation communication, and the partitioning and system-routing algorithms tailored to the hardware architecture. These engineering costs are easy to overlook and go unaccounted for. Other common problems with in-house systems, like poor reliability, limited reuse, and low system performance, push design teams to adopt a commercial system with a better track record.
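To give a sense of what “algorithms for partitioning” involves, the sketch below is a deliberately simple greedy heuristic: it places blocks largest-first onto whichever FPGA already holds the most signals connected to them, subject to a capacity limit, and then counts the signals that must cross between FPGAs. It is a toy for illustration only, not ProtoCompiler’s algorithm; the block names, sizes, signal widths, and capacity are all hypothetical, and production partitioners also weigh timing, pin counts, and multiplexing.

```python
from collections import defaultdict

def greedy_partition(sizes, nets, capacity, n_fpgas):
    """Toy capacity-constrained partitioner.

    sizes: block name -> resource units; nets: (block_a, block_b, signal_width).
    Places blocks largest-first onto the FPGA that already holds the most
    signals connected to them, subject to a per-FPGA capacity limit.
    """
    adjacency = defaultdict(lambda: defaultdict(int))
    for a, b, width in nets:
        adjacency[a][b] += width
        adjacency[b][a] += width

    used = [0] * n_fpgas
    placement = {}
    for block in sorted(sizes, key=sizes.get, reverse=True):
        best, best_score = None, -1
        for fpga in range(n_fpgas):
            if used[fpga] + sizes[block] > capacity:
                continue  # block would overflow this FPGA
            # Signals that stay on-chip if the block lands on this FPGA
            score = sum(w for nbr, w in adjacency[block].items()
                        if placement.get(nbr) == fpga)
            if score > best_score:
                best, best_score = fpga, score
        if best is None:
            raise ValueError(f"block {block!r} does not fit on any FPGA")
        placement[block] = best
        used[best] += sizes[block]
    return placement

def cut_width(placement, nets):
    """Number of signals that must cross between FPGAs over physical pins."""
    return sum(width for a, b, width in nets if placement[a] != placement[b])

sizes = {"cpu": 60, "gpu": 70, "dsp": 30, "ddr_ctrl": 20, "usb": 10}
nets = [("cpu", "ddr_ctrl", 256), ("gpu", "ddr_ctrl", 512),
        ("cpu", "gpu", 128), ("dsp", "cpu", 64), ("usb", "cpu", 32)]
placement = greedy_partition(sizes, nets, capacity=100, n_fpgas=2)
print(placement)
print(cut_width(placement, nets))  # 384
```

Even this toy shows why partitioning is hard to do well: the 384 crossing signals must be routed over a finite number of inter-FPGA pins, which is exactly the kind of hardware-specific tuning that is invisible in a bill of materials.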

Wong: You recently introduced what you are calling the first fully integrated prototyping solution. Could you explain what is new here, why it matters, and what you mean by fully integrated?

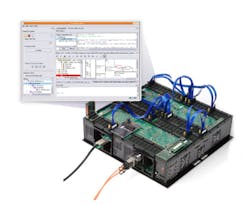

De Schutter: With the launch of the HAPS-80, we’ve reached a point now where the development environment, ProtoCompiler, is a prerequisite for the prototyping equipment. So many of the features are now co-designed and incorporate hardware, software, and firmware elements that the union of all three is required.

An example of a HAPS-80 feature resulting from this sort of integration is the latest generation of HAPS Deep Trace Debug. The prototype designer can specify signal instrumentation for watchpoints and triggers from the RTL perspective, where the logic is most familiar, before logic partitioning and FPGA synthesis. ProtoCompiler fully automates synchronizing the debug logic across FPGAs, managing the 8-GB trace memory, and communicating with the host workstation at runtime, using dedicated resources of the HAPS-80 system assembly. Traditional FPGA debug tools simply cannot offer such a high degree of automation. Partitioning, system routing, time-division multiplexing (TDM) IP automation, payload integrity, system-level static timing analysis, and system assembly checks are other areas where the features depend on more than one facet of the system.
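The core behavior behind watchpoints, triggers, and trace memory can be modeled in a few lines: a circular buffer retains recent signal samples, and when a trigger condition first fires, the history plus subsequent samples are committed to trace storage. This is a single-clock software model for illustration, assuming hypothetical signal names (`state`, `valid`) and buffer sizes; it does not model cross-FPGA synchronization, the 8-GB memory, or anything specific to HAPS Deep Trace Debug.

```python
from collections import deque
from typing import Callable, Iterable, List, Optional

def capture_trace(samples: Iterable[dict], trigger: Callable[[dict], bool],
                  depth: int, pre_trigger: int) -> Optional[List[dict]]:
    """Model a triggered trace buffer with pre-trigger history.

    A circular buffer keeps the last `pre_trigger` samples. When `trigger`
    first fires, the buffered history, the triggering sample, and following
    samples are stored until `depth` samples are captured (or input ends).
    Returns None if the trigger never fires.
    """
    history = deque(maxlen=pre_trigger)
    captured = None
    for sample in samples:
        if captured is None:
            if trigger(sample):
                captured = list(history) + [sample]
            else:
                history.append(sample)
        else:
            captured.append(sample)
        if captured is not None and len(captured) >= depth:
            break
    return captured

def signals(n_cycles):
    """Hypothetical per-cycle snapshots of a few instrumented RTL signals."""
    for cycle in range(n_cycles):
        yield {"cycle": cycle, "state": cycle % 8, "valid": cycle % 5 == 0}

def state7_and_valid(sample):
    """Watchpoint: the FSM reaches state 7 while `valid` is asserted."""
    return sample["state"] == 7 and sample["valid"]

trace = capture_trace(signals(100), state7_and_valid, depth=8, pre_trigger=4)
print([s["cycle"] for s in trace])  # [11, 12, 13, 14, 15, 16, 17, 18]
```

The pre-trigger history is what makes such traces useful for debugging: the cycles leading up to the watchpoint usually explain why the condition occurred.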

Wong: What kind of feedback are you getting from early adopters in terms of the pros and cons of an integrated prototyping approach?

De Schutter: It’s ultimately the productivity improvements that our customers cite as the positives achieved by the features. Faster ASIC-to-FPGA migration, quicker partition results with high system clock speeds, more debug visibility, and more validation scenarios via workstation APIs are examples. We don’t really hear about downsides of integration.

Wong: What impact do you expect the introduction of fully integrated prototyping solutions from vendors like yourself to have on the overall prototyping market over the next few years?

De Schutter: We expect more prototyping-specific automation tools to be offered and co-designed with the hardware equipment. The utility that results from this is going to outpace what can be accomplished with custom-built systems or by commercial firms that don’t have a significant investment in system IP and automation software for the equipment. We expect that the resulting efficiency gains from an integrated prototyping solution will continue to increase the demand for prototyping overall. In the end, this need for prototyping efficiency is driven by the need to shift the entire design cycle as hardware and software have become completely dependent on each other. Semiconductor vendors realize that they require an end-to-end prototyping strategy to accelerate software development, hardware-software integration, and system validation.

Wong: How do you see prototyping technologies and strategies evolving going forward?

De Schutter: We anticipate more prototyping solutions tailored for customer end-applications. These might take the form of reference designs and kits, and incorporate software stacks. Prototype reuse from module to module, and the task of integrating IP blocks into subsystems, will improve. Organizations with distributed teams want easy access to, and administration of, the prototyping systems via client-server models.

We see hybridization of prototypes opening the door to more validation scenarios and easier access to CPU subsystems using SystemC/TLM models. This allows for more realistic scenarios in which a CPU and software stack are used to validate IP operation in the context of the SoC. All of these facets will play an important role as part of an end-to-end prototyping solution tailored toward the needs of today’s software-driven SoC development.
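In a hybrid prototype, software running on a virtual CPU model talks to IP on the FPGA through transactions rather than pin-level signals. The sketch below is a Python stand-in for that idea, not SystemC code: `Transaction` is loosely modeled on the TLM-2.0 generic payload (command, address, data, response status), the `b_transport` method name borrows from TLM-2.0’s blocking transport interface, and `FpgaIpProxy`, `Bus`, and the register addresses are hypothetical. In a real setup, the proxy would forward each transaction over the host link to the FPGA instead of updating a local list.

```python
from dataclasses import dataclass
from enum import Enum

class Command(Enum):
    READ = "read"
    WRITE = "write"

class Response(Enum):
    INCOMPLETE = "incomplete"
    OK = "ok"
    ADDRESS_ERROR = "address_error"

@dataclass
class Transaction:
    """Loosely modeled on the TLM-2.0 generic payload (command, address, data, response)."""
    command: Command
    address: int
    data: int = 0
    response: Response = Response.INCOMPLETE

class FpgaIpProxy:
    """Stand-in for an IP block hosted on the FPGA prototype.

    A list of 32-bit registers models the IP's register file here.
    """
    def __init__(self, base: int, n_regs: int):
        self.base = base
        self.regs = [0] * n_regs

    def b_transport(self, txn: Transaction) -> None:
        offset, misaligned = divmod(txn.address - self.base, 4)
        if misaligned or not 0 <= offset < len(self.regs):
            txn.response = Response.ADDRESS_ERROR
            return
        if txn.command is Command.WRITE:
            self.regs[offset] = txn.data & 0xFFFF_FFFF
        else:
            txn.data = self.regs[offset]
        txn.response = Response.OK

class Bus:
    """Address decoder between the virtual CPU model and its targets."""
    def __init__(self):
        self.targets = []

    def map(self, base: int, size: int, target) -> None:
        self.targets.append((base, size, target))

    def b_transport(self, txn: Transaction) -> None:
        for base, size, target in self.targets:
            if base <= txn.address < base + size:
                target.b_transport(txn)
                return
        txn.response = Response.ADDRESS_ERROR

# "Software" on the virtual CPU: program a register, then read it back
bus = Bus()
bus.map(0x4000_0000, 16 * 4, FpgaIpProxy(base=0x4000_0000, n_regs=16))
bus.b_transport(Transaction(Command.WRITE, 0x4000_0008, data=0xCAFE))
readback = Transaction(Command.READ, 0x4000_0008)
bus.b_transport(readback)
print(hex(readback.data), readback.response)  # 0xcafe Response.OK
```

Working at the transaction level is what lets a fast CPU model and a cycle-accurate FPGA block cooperate: the CPU side never needs to know how the register access is carried out in hardware.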

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.