Learn how to dissect the efforts associated with evaluating wireless services.

The continued rapid deployment of high-speed third-generation (3G) wireless networks enables a wide range of new multimedia and data services previously available only over wireline connections. Typical examples include streaming video, short message service (SMS), multimedia messaging service (MMS), and push-to-talk over cellular (PoC). From the consumer's point of view, this translates into high-quality broadcast video, work-group walkie-talkie service, wireless interactive gaming, and a host of applications yet to be conceived.

Given the large investments by service providers in spectrum and network equipment, these types of wireless services are critical to sustaining and increasing both their monthly minutes of use and average revenue per user. A wireless service will only be successful if it provides a satisfactory end-user experience.

One difficulty is that the perceived value of a wireless service is subjective and varies from subscriber to subscriber. Nevertheless, a certain level of end-user experience is required for an offering to succeed. The quality-of-service performance needed to satisfy end users can be determined through a structured test and measurement methodology.

Recent wireless technology launches have significantly increased the data rate available to users, dramatically expanding the possibilities in wireless applications. As network operators begin to charge premium rates for higher-speed connectivity, they face increasing pressure to deliver applications that can take advantage of the new bandwidth.

At the same time, the development and delivery of some wireless applications have been on hold because they require data speeds that only now are commercially available. Taken together, these trends suggest that a large number of services could become available within a very short time frame, making the test process both more difficult and more critical.

As the search for the killer app (or, more likely, multiple killer apps) continues, service providers must evaluate an almost endless number of 3G wireless services. Each permutation of a service must be tested on each type of wireless device to ensure device and service reliability within the network.

The Difficulties of Wireless Service Testing

Testing wireless services can be complex and time intensive. Some of the typical issues when tackling this test challenge are the following:

• Testing requires access to multiple distributed and complex network elements that often are inaccessible.

Solution: Comprehensive network emulation allows simulation of difficult network scenarios in the lab. A complete emulation of the wireless network can be co-located with local servers connected via a private local area network.

• The tester must be able to control these network elements closely enough to fully stress test a wireless device in terms of loading, adversarial testing, and safe-for-network operation.

Solution: While field testing is limited to actual network conditions, lab testing allows worst-case scenarios to be configured and tested. Network scenarios that might be too dangerous to explore on a real network can be safely configured in the lab.

• Manual testing can be laborious and time consuming. Moreover, if automation is not designed into the test platform from the outset, the platform may not be upgradable to support it later.

Solution: An automated test platform frees valuable resources.

• Troubleshooting can be difficult because wireless services are built on layer upon layer of protocols. How do you identify which layer is broken?

Solution: Testing layer by layer makes the problem easier to identify and solve.

• Simultaneous services can impact each other.

Solution: Devices under test must be evaluated against a combination of simultaneous services.

• A baseline for an acceptable end-user experience is difficult to quantify.

Solution: Each service provider must determine what this baseline should be and set pass/fail criteria accordingly.

• Industry-approved test standards and methodologies simply do not exist for many wireless services.

Solution: Until industry test standards are developed, if they ever are, best practices from other wireless testing methodologies must be adopted. In most cases, services evolve very quickly, and standards bodies realize that trying to produce test standards for this niche market would be irrelevant and, in most cases, futile.

• Standardized protocols may be implemented in nonstandard ways.

Solution: A flexible, customizable test platform allows nonstandard implementations to be evaluated.
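The layer-by-layer troubleshooting idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a feature of any particular test platform: the layer names and the pass/fail dictionary are hypothetical, and a real test system would attach logs and measurements to each verdict. The function walks the stack from the bottom up and reports the lowest failing layer, on the reasoning that higher-layer failures may simply be symptoms of a lower-layer fault.

```python
# Hypothetical bottom-to-top layer order; a real stack depends on the service.
LAYERS = ["RF/physical", "data link", "IP/transport", "session (SIP)", "media (RTP)", "application"]


def first_failing_layer(results: dict):
    """Return the lowest layer whose test failed, or None if every layer passed.

    `results` maps each layer name to True (pass) or False (fail). Reaching a
    layer with no recorded result raises an error, because higher-layer
    verdicts cannot be trusted until the layers beneath them have been tested.
    """
    for layer in LAYERS:
        if layer not in results:
            raise ValueError(f"no result recorded for layer {layer!r}")
        if not results[layer]:
            return layer
    return None


# Both the session and application layers failed; the session layer is reported
# because it sits lower in the stack and may explain the application failure.
results = {layer: True for layer in LAYERS}
results["session (SIP)"] = False
results["application"] = False
print(first_failing_layer(results))  # session (SIP)
```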

The issues associated with wireless service testing are substantial, and the key to solving any significant problem is to break it down into smaller ones. Although solutions have been identified for many of these issues, most of them must be incorporated into a structured test plan to be effective.

A Layered Approach to Wireless Service Testing

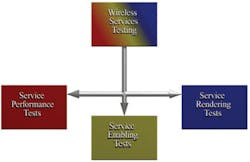

When the effort of evaluating wireless services is dissected, testing can be segmented into three categories: service enabling, service rendering, and service performance (Figure 1). These types of performance analysis are most effective when lab and field tests are used in combination.

Service-Enabling Tests

Service-enabling tests focus on evaluating the underlying technologies needed to support the higher-level services. Examples include testing the session initiation protocol (SIP), the real-time transport protocol (RTP), and voice over IP (VoIP).

These protocols are core building blocks for wireless services, so it is essential to verify that they work reliably and repeatably. They can and should be tested independently of the wireless service to simplify the test process, and wireless-service testing should not begin until these building blocks are deemed solid.
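As an example of exercising a building block independently of any service, the sketch below decodes the 12-byte fixed RTP header defined in RFC 3550 and counts gaps in its 16-bit sequence number, a simple indicator of packet loss in a voice stream. It assumes packets have already been captured as raw bytes and arrive in order without duplicates; the `make_packet` helper and the sample stream are hypothetical test data, not part of any test product.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Decode the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("packet is shorter than the 12-byte RTP fixed header")
    b0, b1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": b0 >> 6,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


def count_missing_packets(packets) -> int:
    """Count packets missing from an in-order RTP stream.

    The 16-bit sequence number wraps from 65535 to 0, so gaps are computed
    modulo 65536. Reordered or duplicated packets are not handled here.
    """
    missing = 0
    prev = None
    for packet in packets:
        header = parse_rtp_header(packet)
        if header["version"] != 2:
            raise ValueError("not an RTP version 2 packet")
        if prev is not None:
            missing += (header["sequence"] - prev - 1) % 65536
        prev = header["sequence"]
    return missing


def make_packet(seq: int, timestamp: int = 0, ssrc: int = 0x1234) -> bytes:
    """Build a header-only test packet: version 2, no padding, extension, or CSRCs."""
    return struct.pack("!BBHII", 0x80, 0, seq, timestamp, ssrc)


# Sequence number 1 is missing; the 65535 -> 0 wraparound is not counted as loss.
stream = [make_packet(s) for s in (65534, 65535, 0, 2)]
print(count_missing_packets(stream))  # 1
```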

Generally, service-enabling tests are standards based, driven by the Global Certification Forum (GCF) with support from the Open Mobile Alliance (OMA) and the Third-Generation Partnership Project (3GPP).

Service-Rendering Tests

Service-rendering tests focus on determining whether a service works as rendered (displayed) on the wireless device's screen. Content that is not rendered correctly can easily have a large negative impact on the end-user experience. Examples include verifying that the roaming indicator is displayed, ensuring that various markup languages are handled correctly, and confirming that streaming video is displayed at the proper frame rate.

As the name and the examples imply, this type of testing focuses on capturing what's displayed on the device and comparing the captured information to expected parameters. Several tools, technologies, and companies specialize in these types of tests.
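Once the display has been captured, a rendering check reduces to comparing captured attributes against expected parameters. The sketch below assumes a capture tool (not shown, and hypothetical here) has already extracted display attributes into a dictionary; the attribute names, the 15 frame/s target, and the 5 percent tolerance are illustrative assumptions rather than values from any standard.

```python
def compare_rendering(captured: dict, expected: dict, tolerance: float = 0.05) -> list:
    """Return a list of mismatches between captured and expected display attributes.

    Numeric expectations pass if the captured value lies within a relative
    `tolerance`; every other expectation (text, on/off flags) must match exactly.
    """
    failures = []
    for name, want in expected.items():
        if name not in captured:
            failures.append(f"{name}: not captured")
            continue
        got = captured[name]
        # bool is a subclass of int, so flags are excluded from the numeric check.
        numeric = isinstance(want, (int, float)) and not isinstance(want, bool)
        if numeric:
            if abs(got - want) > tolerance * abs(want):
                failures.append(f"{name}: expected {want} +/- {tolerance:.0%}, got {got}")
        elif got != want:
            failures.append(f"{name}: expected {want!r}, got {got!r}")
    return failures


# Hypothetical expectations and capture results for one rendering test case.
expected = {"roaming_indicator": True, "video_fps": 15.0, "markup_language": "XHTML-MP"}
captured = {"roaming_indicator": True, "video_fps": 12.0, "markup_language": "XHTML-MP"}
print(compare_rendering(captured, expected))
# ['video_fps: expected 15.0 +/- 5%, got 12.0']
```

Returning a list of readable mismatches, rather than a single pass/fail flag, keeps the report useful when several attributes fail at once.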

Generally, service-rendering tests are not standards based. They are driven by the actual services rather than by the underlying standardized technologies.

Service Performance Tests

Service performance tests focus on measuring service quality under variable conditions. Service performance can vary due to any number of conditions and quickly degrade into an unacceptable end-user experience. Some conditions that might impact service performance are poor RF conditions, inadequate data network conditions, an adversarial protocol situation, and simultaneous service interaction.

Testing service performance during simultaneous services is especially challenging. As more services become available, the number of possible service-interaction combinations grows exponentially. In addition, these interactions can affect every protocol layer of a wireless device, which must be taken into account when specifying a test methodology. Some examples follow:

• L1 Test: In a dual-mode code division multiple access (CDMA)/WiFi device, what happens to the CDMA receive characteristics when the WiFi radio is transmitting?

• L1 Test: What happens to assisted global positioning system (A-GPS) accuracy when the receiver tunes away from GPS to search for neighboring cells?

• L4-L7 Test: If a web page and an MMS message are received simultaneously, which service gets the display priority?

• L4-L7 Test: If a voice-mail notification is received during a voice call, does the device sound an audio alert for the waiting message?
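The growth in simultaneous-service scenarios is easy to quantify. If every group of two or more services is treated as a distinct scenario, n services yield 2^n - n - 1 combinations: all subsets, minus the empty set and the n single-service cases. The sketch below enumerates them with the standard library; the service list is a hypothetical example, and real devices may not permit every combination.

```python
from itertools import combinations


def simultaneous_scenarios(services, min_size=2):
    """Yield every group of at least `min_size` services that could run at the same time."""
    for size in range(min_size, len(services) + 1):
        yield from combinations(services, size)


# Hypothetical service list for illustration.
services = ["voice call", "PoC", "MMS", "web browsing", "streaming video"]
print(len(list(simultaneous_scenarios(services))))  # 26

# Closed form: all subsets (2**n) minus the empty set and the n single services.
for n in (5, 10, 15):
    print(n, 2**n - n - 1)
# 5 26
# 10 1013
# 15 32752
```

These counts cover only the service combinations; multiplying by the test cases per scenario and the number of device models shows why exhaustive coverage quickly becomes impractical. Restricting the matrix to pairs of services reduces the count to n(n-1)/2, which grows only quadratically. Whether pairwise coverage is sufficient is a judgment call for each test plan.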

A balanced combination of lab and field testing is required to ensure comprehensive test coverage. Lab testing allows side-by-side benchmarking of device performance under identical, repeatable test conditions. It also allows adversarial testing through the creation of nonstandard network situations.

Field tests provide the best indication of the actual end-user experience. Field testing also verifies interoperability with actual network-equipment protocol implementations rather than with the emulated environment used in the lab.

Given the pressure to launch wireless services quickly, the test methodology must strike the right balance between traditionally lengthy lab-based trials and quicker user field trials. Combining field and lab testing has a further benefit: by converting actual field test data into lab test suites, problems identified in the field can be isolated, analyzed, and solved in the lab.
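Converting field data into a lab test can be as simple as replaying a logged signal profile on an RF channel emulator. The sketch below is a minimal illustration under loudly stated assumptions: the `FieldSample` record, the single received-level parameter, the emulator's -110 to -25 dBm output range, and its 1-dB step size are all hypothetical, and a real conversion would carry many more parameters (fading, interference, data-network impairments).

```python
from dataclasses import dataclass


@dataclass
class FieldSample:
    time_s: float        # seconds since the start of the drive test (hypothetical log format)
    rx_level_dbm: float  # received signal level logged in the field


def to_lab_profile(samples, min_dbm=-110.0, max_dbm=-25.0, step_db=1.0):
    """Convert time-stamped field samples into (duration_s, level_dbm) lab steps.

    Each sample's level is held until the next sample's timestamp, so the final
    sample only marks the end of the replay. Levels are clamped to the assumed
    emulator range, rounded to its assumed step size, and consecutive steps with
    the same level are merged.
    """
    profile = []
    for current, nxt in zip(samples, samples[1:]):
        level = min(max(current.rx_level_dbm, min_dbm), max_dbm)
        level = round(level / step_db) * step_db
        duration = nxt.time_s - current.time_s
        if profile and profile[-1][1] == level:
            profile[-1] = (profile[-1][0] + duration, level)
        else:
            profile.append((duration, level))
    return profile


drive_log = [
    FieldSample(0.0, -80.2),
    FieldSample(2.0, -80.4),
    FieldSample(5.0, -115.0),  # deeper fade than the assumed emulator floor
    FieldSample(6.0, -90.0),
]
print(to_lab_profile(drive_log))  # [(5.0, -80.0), (1.0, -110.0)]
```

Clamping means a fade deeper than the emulator can reproduce is replayed at the emulator's floor, so a lab reproduction of such a field problem may need additional attenuation or a different emulator setting.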

How to Test PoC Using a Layered Approach

Let's look at how you might test a particular implementation of PoC technology on a CDMA network using the layered approach. The PoC implementation analyzed here builds on several standard protocols: SIP handles PoC session management, and RTP transports voice over the CDMA2000 data network. PoC service state and buddy information are conveyed to the user through audio alerts and display content.
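Because SIP carries PoC session management, one service-enabling check is whether the device's session requests are well formed. The sketch below tests a SIP request for the header fields RFC 3261 makes mandatory in every request (To, From, CSeq, Call-ID, Max-Forwards, and Via), plus Contact, which an INVITE needs because it can establish a dialog. It is a minimal illustration only: the group and user URIs are hypothetical, header folding and the message body are ignored, and it does not reflect how any particular PoC server validates requests.

```python
# Header fields every SIP request must contain (RFC 3261, section 8.1.1).
REQUIRED_HEADERS = {"to", "from", "cseq", "call-id", "max-forwards", "via"}
# RFC 3261 compact header names that map onto the fields checked here.
COMPACT_FORMS = {"t": "to", "f": "from", "i": "call-id", "v": "via", "m": "contact"}


def missing_sip_headers(message: str) -> set:
    """Return the mandatory header fields that are absent from a SIP request.

    An INVITE can establish a dialog, so it must also carry a Contact header.
    Header folding and the message body are ignored in this sketch.
    """
    lines = message.replace("\r\n", "\n").split("\n")
    method = lines[0].split(" ", 1)[0]  # SIP method names are case-sensitive
    present = set()
    for line in lines[1:]:
        if not line.strip():
            break  # an empty line ends the header section
        name = line.split(":", 1)[0].strip().lower()  # header names are case-insensitive
        present.add(COMPACT_FORMS.get(name, name))
    required = set(REQUIRED_HEADERS)
    if method == "INVITE":
        required.add("contact")
    return required - present


# Hypothetical PoC group-session INVITE that omits the Contact header.
invite = (
    "INVITE sip:group1@poc.example.com SIP/2.0\r\n"
    "Via: SIP/2.0/UDP 192.0.2.10:5060;branch=z9hG4bK776asdhds\r\n"
    "Max-Forwards: 70\r\n"
    "To: <sip:group1@poc.example.com>\r\n"
    "From: <sip:alice@example.com>;tag=1928301774\r\n"
    "Call-ID: a84b4c76e66710@192.0.2.10\r\n"
    "CSeq: 314159 INVITE\r\n"
    "Content-Length: 0\r\n"
    "\r\n"
)
print(missing_sip_headers(invite))  # {'contact'}
```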

Based on an analysis of the PoC system architecture, the overall test methodology can be defined and partitioned as outlined in Table 1.

Partitioning a PoC test methodology in this way provides the following benefits, in no particular order:

• It allows a test solution to be created using a building-block approach in which the best building blocks could be sourced from different companies. Generally, it is difficult for a single company to possess all the expertise required to provide a complete solution.

• Some test functions are applicable to other services, reducing the overall test cycle and effort. For example, the packet data channel utilization tests, channel access retry tests, and system access tests would be reusable in a data test system used to test HTTP/FTP services.

• It allows a structured, sequential test process in which the underlying technologies are solidified before the actual service is tested. This helps shorten the overall test cycle by simplifying debugging, because issues can be isolated to smaller building blocks.
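The structured, sequential process can be expressed as a staged test runner that refuses to start a later stage until the earlier ones pass. The sketch below follows the article's three categories as stages; the individual check functions are hypothetical placeholders that always return a fixed result, standing in for code that would drive real test equipment.

```python
from typing import Callable, Dict, List

STAGES = ["service enabling", "service rendering", "service performance"]


def run_staged(suite: Dict[str, List[Callable[[], bool]]]) -> Dict[str, str]:
    """Run the test stages in order, skipping later stages once a stage fails.

    Within a stage, all() stops at the first failing test. A stage with no
    tests counts as passed.
    """
    report = {}
    blocked = False
    for stage in STAGES:
        if blocked:
            report[stage] = "skipped"
            continue
        passed = all(test() for test in suite.get(stage, []))
        report[stage] = "pass" if passed else "fail"
        blocked = not passed
    return report


# Hypothetical placeholder checks; real ones would drive the test equipment.
def sip_session_setup_ok() -> bool:
    return True


def rtp_loss_within_limit() -> bool:
    return False


def buddy_list_displayed() -> bool:
    return True


report = run_staged({
    "service enabling": [sip_session_setup_ok, rtp_loss_within_limit],
    "service rendering": [buddy_list_displayed],
})
print(report)
# {'service enabling': 'fail', 'service rendering': 'skipped', 'service performance': 'skipped'}
```

Because each check is an ordinary function, the same check (for example, a packet data channel test) could be listed in the suite for another service, which is one way to realize the reuse described above.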

Summary

The wireless community finds itself at a key crossroads in the history of communications. The technology is mature, higher-rate services are available, and wireless service has become a routine part of many lives. At this point, the industry is ready to deliver on the promise of wireless with the deployment of a multitude of new services. If this deployment is done well, these new services will be a boon for network operators, handset manufacturers, and subscribers.

On the other hand, problematic service deployment may haunt the industry for years. The general public rarely affords a second chance to a technology that has been deemed disappointing.

Success here depends on a workable test methodology that is both well conceived and well executed. The challenge becomes manageable only when the plan is broken into building blocks such as service enabling, service rendering, and service performance. This approach also allows a complex test plan to be distributed among several companies or groups.

About the Author

Gene Kientzy is director of professional services at Spirent Communications. He was co-founder of InfoSOFT Technologies, which was acquired by Spirent Communications in 2000. Previously, Mr. Kientzy served as director of development for Spirent's UTS product line. Spirent Communications, 1701 River Run Rd., Suite 801, Fort Worth, TX 76107, 817-810-9555


October 2005