Before going to work this morning, you may have replied to a few e-mail messages, downloaded help pages related to your latest domestic appliance problem, and rearranged images for today’s design review presentation—all on your iPad™. Later, in the lab at work, you pushed buttons and turned knobs on your oscilloscope to achieve the desired signal acquisition conditions and trace display.

The scope and iPad represent just two of the many types of interfaces we work with. Fueling the range of variation is the trend toward natural user interfaces (NUIs) found in several consumer devices. Why does the iPad operate in the way it does? Are features such as scrolling momentum and gesture-controlled scaling just marketing gimmicks, or do they provide actual benefits?

According to Steve Ballmer, Microsoft CEO, NUIs represent a major step forward. “I believe we will look back on 2010,” he said, “as the year we expanded beyond the mouse and keyboard and started incorporating more natural forms of interaction such as touch, speech, gestures, handwriting, and vision—what computer scientists call NUI.”1

Mr. Ballmer stated that the goal of incorporating NUI-based technologies has long been one of the most challenging problems in computer science. Indeed, Microsoft, Apple, and many other companies and academic researchers have been working on NUI-based technology for decades.

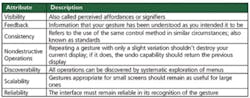

Table 1. Required Interface Characteristics

Source: Nielsen Norman Group

The many separate approaches taken to NUIs have not all resulted in systems with operations that are discoverable, consistent, and reliable. And these are only three of several attributes listed in Table 1 that are associated with a well-designed machine interface according to Dr. Jakob Nielsen and Dr. Don Norman, cofounders of the Nielsen Norman Group (NN/g).

In their article, these experts in product usability critiqued the control strategies in today’s latest consumer products with emphasis on the iPad. They argued that “in the rush to develop gestural or natural interfaces, well-tested and understood standards of interaction design were being overthrown, ignored, and violated.”2

NUI Evolution

Today, most human-computer interaction takes place via graphical user interfaces (GUIs). Succinctly, the authors explained, “The true advantage of the GUI was that commands no longer had to be memorized. Instead, every possible action in the interface could be discovered through systematic exploration of the menus.”2

While GUIs were becoming popular, research into aspects of NUIs was growing, with much of the work on multitouch systems originating in the mid to late ’80s. In particular, Bill Buxton, principal researcher at Microsoft Research, credited Myron Krueger’s 1983 Video Place/Video Desk work with introducing many forms of rich gestural interaction more than a decade ahead of their time. Dr. Krueger appears to be the source of the hand motions commonly used today, including the pinch gesture to scale and translate objects.3

As NUI-based consumer devices have become more widely available, NN/g’s research has found them lacking in comparison to a GUI’s visibility, discoverability, consistency, and reliability. In part, these comments were based on findings from an iPad usability study that NN/g conducted shortly after the product was launched. It found that users often didn’t know what control options existed, nor could they be certain the options would work in the same way among a group of application programs.4

The NN/g article suggests that icons describing the gestures appropriate for the current system state should be visible. Unless some indication like this is used, the authors asked, “How is anyone to know, first, that this magical gesture exists, and second, in which settings it operates?”2

Nevertheless, NN/g did not decide that a NUI was inappropriate for iPads and similar kinds of devices, but rather that the NUI implementation was incomplete. In fact, the article on usability concluded by praising the positive aspects of NUIs:

“The new interfaces can be a pleasure to use and a pleasure to see…. The new displays promise to revolutionize media: News and opinion pieces can be dynamic, with short video instead of still photographs and…figures that can be adjusted instead of static diagrams…. The new devices also are fun to use: Gestures add a welcome feeling of activity to the otherwise joyless ones of pointing and clicking.”2

Oscilloscope UI Evolution

Benchtop Scopes

Professional-grade oscilloscopes provide several means of control including conventional front-panel buttons and knobs as well as a menu-based GUI. They do not offer true NUIs, but the graphical content of the GUIs has been enhanced by many manufacturers to create a more intuitive and informative user experience.

For example, there are many trigger modes and several interacting conditions that determine operation in each mode. Descriptive icons unambiguously display the relationships among these elements and allow you to make the most appropriate choice. Sometimes, the icons are dynamic as well, changing in response to control settings rather than simply indicating a certain type of trigger setup.

Chris Loberg, senior technical marketing manager at Tektronix, explained, “We support both a screen-based UI with touchscreen or mouse control and knob-based methods. Users like being able to directly move cursors and trigger points. It’s intuitive and improves productivity.

“Items related to measurement analysis, such as result reporting and mask test setups, are menu-driven and similar to the familiar PC environment. For quick evaluation of signal performance, many engineers prefer the touch and feel of front-panel knobs used to adjust things such as the vertical and horizontal settings,” he concluded.

As this example illustrates, NUI replacement of existing UIs isn’t necessarily a goal shared by all test equipment manufacturers or users. Instead, effort has been concentrated on enhancing the existing parallel knob/button and GUI control functionality that has evolved through several instrument generations.

In addition to a menu-based GUI, Yokogawa has retained conventional knobs and buttons in its latest scopes primarily for three reasons, according to Joseph Ting, marketing manager, test and measurement:

- Tactile, physical feedback is important when operating benchtop hardware controls, particularly when simultaneously operating the DUT, probes, or other instruments.

- Pointing accuracy with a touchscreen is limited compared to conventional controls.

- There is a trade-off between responsiveness and sensitivity. In many cases, accidental touchscreen operation is not acceptable.

Mr. Ting gave several examples of ways in which Yokogawa has enhanced scope interfaces. Adding the jog-shuttle control enables you to perform both coarse and fine adjustments when positioning cursor or zoom windows. A four-directional joystick supports easy navigation of menus with checkboxes. And, the built-in graphical online help function has been expanded through block diagrams, graphical dialogs, and algorithm explanations.

In LeCroy’s 8 Zi Series Scopes, the display setup function could have been accomplished through a pull-down menu list but instead has been done graphically. The descriptive single, dual, and quad terminology is supplemented by small pictures showing how the traces will be displayed.

These kinds of innovations are similar to the now standard method of drawing a box around the portion of a trace that you want to zoom. They all make good use of the graphical nature of the user interface and can be controlled by a mouse or touchscreen. You’re still working with a GUI, albeit a better GUI.

According to Joel Woodward, senior product manager at Agilent Technologies, “Our customers tell us that our touchscreen- and mouse-accessible features together with conventional knobs make a good combination. Engineers and technicians love knobs, and many times using a knob is the fastest approach. [Nevertheless, with our] drag-and-drop measurements, users can add automated measurements with a single swipe of the touchscreen without having to wade through menu structures,” he explained.

Well, maybe not a swipe in the iPad sense, but Agilent’s Infiniium GUI has been graphically extended so that you can select an icon representing the kind of measurement you want to make, drag it to the relevant part of the waveform, and cause the measurement to be performed by dropping the icon. The drag-and-drop measurement functionality is a good example of using icons to accomplish a complex action that would have taken many more discrete menu steps in a conventional GUI. Selection and positioning have been combined with an action, all in a single drag-and-drop motion.

PC-Based Scopes

National Instruments (NI), Gage Applied Technologies, ZTEC, and Pico Technology are in a somewhat different position than manufacturers of benchtop instruments. These four companies provide scopes that display traces directly on the PC screen. There are no dedicated knobs and buttons so the GUI becomes the only means of control rather than an alternative.

Jeff Bronks, technical manager at Pico Technology, said, “Anyone familiar with Windows can quickly start to use a PicoScope with no need for a second mouse or an on-screen keyboard. The most frequently used controls are located in toolbars at the top and bottom edges, leaving most of the window area for waveforms.”

NI’s Rebecca Suemnicht, senior product manager-digitizers, cited an example in which a customer developed his own scope interface using NI LabVIEW. “The definition of easy-to-use is heavily influenced by the application, culture, and language,” she said. “A Japanese customer I talked with developed software to define the measurement features and functionality of [his] lab oscilloscopes as well as display the signals and measurements of interest.”

Ms. Suemnicht explained that for large applications, a custom GUI can enable control of an entire system, not just a single piece of equipment. Depending on the required output from the system, postprocessing can completely bypass the display of the original signals. Further, this capability together with a custom interface can create an instrument readily accepted by specialists in fields such as medicine, where a basic oscilloscope would be completely unsuitable.

ZTEC also has enhanced the graphical aspects of its ZScope® PC-based control GUI. Christopher Ziomek, the company’s founder and CEO, said, “With ZScope, you can undock, move, resize, hide, or customize different pieces of the GUI panels. ZScope eliminates deep menu trees that are common on benchtop instruments by making features more accessible through flexible panels, tabs, and graphical icons and controls.”

Interestingly, as a result of customer feedback, the ZScope GUI evolved over time from having the look of a cell-phone application to that of a benchtop instrument. Predominant customer feedback indicated that the PC oscilloscope GUI should be intuitive to use, and for most customers that meant mimicking a benchtop scope.

Ted Briggs, chief technologist at Gage Applied Technologies, took a broader view of the user interface. “[With our software development kits, users] can create their own custom experimental environment or use GageScope, our standard scope software. [Within] the PC environment, a complete arsenal of visualization, analysis, and storage tools exists,” he continued. “The level of integration varies, with some users adding their own algorithms to customize their measurement environment.”

Hand-Held Scopes

It’s easy to take basic user interface functionality in benchtop and PC-based scopes for granted because both types have plenty of available power and space to work with. A hand-held scope presents different constraints.

The new Fluke ScopeMeter® 190 Series II Scopes display four simultaneously sampled traces in color on a 153-mm LCD with LED backlight. The scopes are not touchscreen or mouse controlled but have fully isolated, CAT III 1,000 V/CAT IV 600 V safety-rated channels and a logically arranged complement of controls that, according to the company, is easy to learn, use, and remember.

Hilton Hammond, ScopeMeter unit manager, said, “Ease of use is especially important for oscilloscopes designed for use in the field. The scope may be hand held while measuring high voltages in poorly lit, noisy, unsafe, and dirty or wet environments. The ability to display four channels enables operators to simultaneously view all three phases of the inputs and outputs of increasingly popular variable-speed motor drives.”

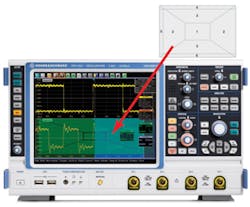

Figure 1. RTO 1024 Oscilloscope showing SmartGrid Icon Placement Guide; RTO SmartGrid Active Areas (inset)

1. Overlay of Signals, 2. New Diagram at Vertical Positioning, 3. New Diagram at Horizontal Positioning, 4. New Tab, 5. XY-Diagram, and 6. YX-Diagram

Courtesy of Rohde & Schwarz

Further Scope Innovation

In June 2010, Rohde & Schwarz (R&S) launched the RTO Series Oscilloscopes with a touchscreen interface (Figure 1). The instrument still relies on a GUI, but the overall control strategy is much more graphically oriented than in previous scopes. For example, rather than being listed in menus, the available functions are shown in a comprehensive toolbar that gives fast access to zoom, cursors, and FFT functions via familiar icons.

Similar to the GUI in R&S signal generators, block diagrams are used to show signal flow. In a visually similar approach, LeCroy scopes include what the company terms the processing web. This is a graphical interface that allows you to add and interconnect multiple math and measurement functions into a single comprehensive process flow and route it through a single math or measurement output.

LeCroy also addressed the restricted precision associated with touchscreens. The company has developed algorithms that make it easier to accurately point at a displayed feature without using a separate stylus.

Color-coding has long been used in DSOs to link groups of controls to displayed traces. In the R&S RTO scope, nondisplayed but active traces are indicated by small dynamic and color-coded icons, similar in concept to LeCroy’s histicons that represent nondisplayed measurement histograms. The R&S icons and LeCroy’s histicons are miniature real-time displays, not simply static images.

One of the major NUI failings cited by NN/g was the capability of any gesture to operate anywhere. Because gestures were unbounded, they were more likely to be misinterpreted and confusing to the user. In contrast, the R&S SmartGrid shown in Figure 1 partitions the display area into zones with different meanings.

As explained in an R&S publication, “As you drag a signal icon and hover over a diagram…the SmartGrid appears. The layout is controlled based on where the icon is dropped.”5 If you drop it in zone 2, a nonoverlapping full-size trace is displayed corresponding to the selected channel. If the icon is dropped in zone 1, traces will overlap. The datasheet notes that each zone can accommodate any number of traces.
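The zone-dependent behavior described above can be sketched in a few lines. This is a minimal illustration only, not R&S code: the `Diagram` and `Display` classes, the zone names `"overlay"` and `"new_diagram"`, and the `target` parameter are all hypothetical, and only the two zones discussed in the text are modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Diagram:
    """One plot area holding the names of the channels drawn in it."""
    traces: list[str] = field(default_factory=list)


@dataclass
class Display:
    """A stack of diagrams; a hypothetical stand-in for the scope screen."""
    diagrams: list[Diagram] = field(default_factory=list)

    def drop(self, channel: str, zone: str, target: int = 0) -> None:
        """Place a dragged channel icon according to the zone it lands in."""
        if zone == "overlay":
            # Zone 1 in Figure 1: share the target diagram, so traces overlap.
            self.diagrams[target].traces.append(channel)
        elif zone == "new_diagram":
            # Zone 2 in Figure 1: give the channel its own full-size diagram.
            self.diagrams.insert(target + 1, Diagram([channel]))
        else:
            # Gestures are bounded: a drop outside a known zone is rejected.
            raise ValueError(f"unknown drop zone: {zone!r}")


display = Display([Diagram(["C1"])])
display.drop("C2", "overlay")      # C2 overlaps C1
display.drop("C3", "new_diagram")  # C3 gets a separate diagram
print([d.traces for d in display.diagrams])  # [['C1', 'C2'], ['C3']]
```

Because every drop must name a zone, the same drag motion cannot be silently interpreted in more than one way, which is the property the bounded zones are meant to provide.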

Beyond Oscilloscopes

A number of gestures have been defined in the Synaptics Gesture Suite™ SGS 9.1 for TouchPads™. Synaptics supplies capacitive TouchPads for many computer and smartphone makers. The SGS 9.1 software supports use of one-, two-, and three-finger gestures with Microsoft Windows applications on notebook PCs with TouchPads including:

- Two-finger scrolling

- Two-finger rotate

- Two-finger pinch zoom

- Three-finger flick for paging through documents

- Linear scrolling for scroll bars with short lists

- ChiralScroll™ for scroll bars with long lists

- Momentum so flicked elements coast to a stop naturally

Although certainly not exhaustive, this list represents some of the more commonly used gestures, many of which are multitouch. According to Dr. Andrew Hsu, technology strategist at Synaptics, “Touchscreens are more than just a cool way to interface with a device. They allow designers to create highly dynamic, configurable, personalizable controls for a device, which means that designers can optimize the device controls in software to suit the needs of any particular application or use scenario.”6
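The momentum behavior in the list above, where a flicked element coasts to a stop, is commonly modeled as a velocity that decays by a fixed fraction each display frame. The sketch below assumes that simple model; the function name `coast`, the friction factor, and the stop threshold are illustrative choices, not values taken from Synaptics.

```python
def coast(velocity: float, friction: float = 0.95, stop_below: float = 0.5) -> list[float]:
    """Return the scroll offset after each frame until the motion dies out.

    velocity is the speed in pixels per frame when the finger lifts.
    friction is the fraction of speed kept from one frame to the next.
    """
    if not 0.0 < friction < 1.0:
        raise ValueError("friction must be between 0 and 1 so the motion stops")
    offsets = []
    position = 0.0
    while abs(velocity) >= stop_below:
        position += velocity
        offsets.append(position)
        velocity *= friction  # each frame keeps a fixed fraction of the speed
    return offsets


path = coast(velocity=40.0)
print(f"{len(path)} frames, final offset {path[-1]:.0f} px")
```

A friction factor closer to 1 makes the element glide farther, while a smaller one makes it stop sooner, which is how the feel of the gesture can be tuned in software.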

Figure 2. 7200 Configurable Automated Test Set UI Display

Courtesy of Aeroflex

These are precisely the characteristics that led David Poole, CTO of the Aeroflex Instrument and Wireless Divisions, and Marv Rozner, product manager for the Aeroflex 7200 Configurable Automated Test Set, to develop the new Aeroflex Common Platform (CP) touchscreen UI shown in Figure 2.

In an exclusive interview with EE-Evaluation Engineering, Mr. Rozner explained, “Our goal was to develop a user interface that scaled so we would have a common user interface across all new products. Also, we were trying to create a modular platform. We wanted to use hardware and bus standards that would scale to allow tremendous flexibility in configuring instruments and allow us to rapidly develop derivative instruments on the new platform. You can’t do that by redesigning the front panel for every new instrument—not with all the labeled and dedicated buttons and knobs. A touchscreen-based user interface allows us to use the same front panel for all the applications.”

Mr. Poole added, “We believe that the future will be with touchscreen interfaces. We want to be able to put our hands on the traces and control the instrument that way. On the other hand, it is a new paradigm, and we need to be able to see what we’re doing at the same time as controlling it.”

The CP user interface is touchscreen-based and at this stage of development implements single-touch gestures with multitouch planned for the future. A great deal of care has been taken to ensure good discoverability and feedback.

Mr. Rozner commented, “The first thing we tell people is that if they want to do something, touch it, and it probably will respond in a way that you understand. For example, if you want to recenter a trace on the screen, touch and drag it to the desired location.”

“We’ve put together a user’s group so we can get feedback on [the CP interface],” Mr. Poole elaborated. “We realize that with a new paradigm, there’s the problem of discoverability. So, we make sure you know where things go—that a trace doesn’t just zoom off somewhere but predictably goes to an edge or corner.”

Echoing NN/g’s concerns, Mr. Rozner said, “With gestures, you have to be careful. People generally can’t easily discover gestures. Alternatively, with on-screen hints, you can understand that possibly you can go to a point to do a certain thing. You have to be careful with gestures because, although they’re shortcuts, they’re not necessarily intuitive.

“For example, if you want to change the amount of zoom, you can touch the X or Y axis, and the trace will scale accordingly as you drag the point,” he continued. “We’ve gone to great pains to give people multiple ways to do things that are intuitive, so chances are that if they try something, it probably will work.”
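One plausible way to map such an axis drag to a new scale is to keep the touched value under the finger while the axis origin stays fixed. The sketch below rests entirely on that assumption and is not Aeroflex's documented behavior; the function name `rescale_axis` and its parameters are hypothetical.

```python
def rescale_axis(lo: float, hi: float, width_px: float,
                 touch_px: float, drag_px: float) -> tuple[float, float]:
    """Return the new (lo, hi) axis range after dragging a point along the axis.

    The axis spans lo..hi over width_px pixels. The user touches the axis at
    touch_px and drags to drag_px, both measured from the lo end.
    """
    if not (0 < touch_px <= width_px and 0 < drag_px <= width_px):
        raise ValueError("touch and drag positions must lie on the axis, past its origin")
    value = lo + (hi - lo) * touch_px / width_px     # axis value under the finger
    new_hi = lo + (value - lo) * width_px / drag_px  # keep that value under the finger
    return lo, new_hi


# Touch the midpoint of a 0..10 axis and drag it to the far end: the axis
# now spans 0..5, so the trace is stretched to twice its previous width.
print(rescale_axis(0.0, 10.0, width_px=500, touch_px=250, drag_px=500))  # (0.0, 5.0)
```

Dragging toward the origin has the opposite effect: the same touch dragged to pixel 125 yields a 0..20 range, compressing the trace.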

Both managers referred to the initial resistance to developing a touchscreen-based interface. The company’s marketing and sales people felt that customers wouldn’t accept touchscreen-controlled products. With much of the interface work completed, the story has completely changed. Feedback has been very positive, especially with regard to the interface’s versatility.

As Mr. Rozner observed, “[We needed to design] an interface that scaled in complexity and richness of data, all the way from what an 18-year-old kid in a bunker in Afghanistan is going to need vs. the guy in a lab. That aspect was considered at every step.”

Conclusion

The test and measurement industry is conservative, so the influence of trends in consumer electronics can range from being irrelevant to taking several years to show up. A relatively long delay between the time something is invented and the time it becomes commonplace isn’t unusual.

Microsoft’s Dr. Buxton commented that the mouse took 30 years to make it from a research lab device to the standard pointing tool used with computers. He was comparing that time to the delay between Dr. Krueger’s 1983 multitouch gestural innovations and their appearance in popular consumer products.3

However, the NUI-GUI debate is much larger than any one feature and already is having an influence on test equipment, as evidenced by the Aeroflex CP products like the 7200. Many questions accompany the issue of gestural interfaces in instruments:

- Can a touchscreen-based interface be as precise as one with knobs and buttons?

- Is it simply a matter of personal preference, perhaps influenced by age?

- What is the relationship between the touchscreen gestures and the commands used for programming?

- Specifically for scopes, are performance-related parameters such as bandwidth, memory length, and sample rate so important that the user interface is secondary?

- Do test instrument UIs and computer UIs have totally different requirements?

- Clearly, scopes are inching toward more graphically oriented GUIs. Will this trend eventually create a touchscreen-based, gestural interface?

Those scope users who have been frustrated by conventional UIs may agree with Aeroflex’s David Poole in saying that “computer and test instrument [user interfaces] are somewhere between annoying and infuriating for the most part. Very little thought has been given to the user interface in terms of what we regard as modern thinking.”

What do you think? Your views on the subject are important to us. Please e-mail [email protected] and reply with your thoughts about present test instrument interfaces—the good and bad points—as well as directions you think they should take in the future. Include experiences you have had with gestural interfaces that are relevant to test instrument control.

References

1. Ballmer, S., “CES 2010: A Transforming Trend—The Natural User Interface,” The Huffington Post, Oct. 4, 2010, http://www.huffingtonpost.com/steve-ballmer/ces-2010-a-transforming-t_b_416598.html

2. Norman, D. and Nielsen, J., “Gestural Interfaces: A Step Backwards in Usability,” interactions, XVII.5 September/October 2010, http://interactions.acm.org/content/?p=1401

3. Buxton, B., “Multi-Touch Systems That I Have Known and Loved,” 2009, www.billbuxton.com/multitouchOverview.html

4. Nielsen, J., “iPad Usability: First Findings From User Testing,” Jakob Nielsen’s Alertbox, May 10, 2010, www.useit.com/alertbox/ipad.html

5. RTO In Detail E-Book, Rohde & Schwarz, http://www.scope-of-the-art.com/en/community/downloads/

6. Hsu, A., “A Future full of Touchscreens? It’s all in the Software,” Conversations on Innovation, VentureBeat, May 25, 2010, http://venturebeat.com/2010/05/25/a-future-full-of-touchscreens-its-all-in-the-software/

FOR MORE INFORMATION

| Company | Product |
| --- | --- |
| Aeroflex | 7200 Configurable Automated Test Set |
| Agilent Technologies | 9000 Series Scopes with Drag-n-Drop |
| Fluke | ScopeMeter 190 Series II |
| GaGe Applied Technologies | 1.1-GS/s 12-b CompuScope |
| LeCroy | WaveMaster 8 Zi-A |
| National Instruments | NI PXI-5154 2-GS/s Oscilloscope |
| Pico Technology | PicoScope 6000 Series USB Scopes |
| Rohde & Schwarz | RTO Series Oscilloscope |
| Synaptics | Synaptics Gesture Suite SGS 9.1 for TouchPads |
| Tektronix | DPO70000C Series Oscilloscope |
| Yokogawa Corp. of America | DLM6000 Digital and Mixed-Signal Scopes |
| ZTEC | ZT4620 Series Scopes |