Software Will Determine the Future of Industrial Automation

In the last 20 years, CPUs and networks improved by a factor of 10,000. According to Moore’s Law, they will improve by another factor of 10,000 in the next 20 years. During the 40-year design lifecycle of today’s industrial automation (IA) architectures, computers will become a mind-boggling 100,000,000X more powerful. It’s hard to overstate the implications.
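The arithmetic behind these figures is easy to check. Assuming, for illustration only (the doubling period is not stated in the article), that capability doubles roughly every 18 months:

```latex
\[
2^{20/1.5} \approx 2^{13.3} \approx 1.0 \times 10^{4} \ \text{per 20 years},
\qquad
\left(10^{4}\right)^{2} = 10^{8} = 100{,}000{,}000 \ \text{over 40 years}.
\]
```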

Using such power will determine which companies, industries, and even economies win or lose. For today’s designers of tomorrow’s long-lifetime systems, enabling intelligent software is the only significant factor.

In fact, this is already happening. In industry after industry, software is becoming the most valuable part of every system. IA has been an exception to this rule. Nonetheless, like autonomous cars and intelligent medical systems, IA can use sensor fusion, fast distributed reactions, and artificial intelligence (AI) to replace rigid or manual processes with smart autonomy.

Emerging architectures seek to solve the problems of the last 20 years, such as reconfiguring workcells, handling small lot sizes, enabling flexible automation, and achieving vendor interoperability. These problems will be far more easily solved with flexible software than with rigid specifications. The future belongs to software.

The Harsh Truth

Today’s discrete automation systems use a simple hardware-focused architecture. A programmable logic controller (PLC) connects devices over a fieldbus. The PLC controls the devices and manages upstream connections to higher-level software such as human-machine interfaces (HMIs) and historians. Factory-floor software reads sensors, executes logic, and drives actuators, thereby implementing a repetitive operation in a “workcell.” The factory consists of a series of these workcells, each with a few dozen devices.
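To make the read, execute, and write pattern concrete, here is a minimal Python sketch of one PLC-style scan. It is only an illustration: real PLCs are typically programmed in IEC 61131-3 languages such as ladder logic, and the signal names (`part_present`, `door_closed`, `conveyor_motor`) are hypothetical.

```python
from typing import Callable, Dict

Signals = Dict[str, bool]

def scan_cycle(read_inputs: Callable[[], Signals],
               logic: Callable[[Signals], Signals],
               write_outputs: Callable[[Signals], None]) -> Signals:
    """Run one PLC-style scan: read sensors, execute logic, drive actuators."""
    inputs = read_inputs()      # take one snapshot of all sensor values
    outputs = logic(inputs)     # evaluate the workcell logic on that snapshot
    write_outputs(outputs)      # drive all actuators from the result
    return outputs

# Hypothetical workcell logic: run the conveyor only while a part is
# present and the guard door is closed.
def conveyor_logic(inputs: Signals) -> Signals:
    return {"conveyor_motor": inputs["part_present"] and inputs["door_closed"]}

# A real controller typically repeats the scan every few milliseconds; here we run one scan.
actuators: Signals = {}
result = scan_cycle(lambda: {"part_present": True, "door_closed": True},
                    conveyor_logic,
                    actuators.update)
```

The logic is a small function over a snapshot of signals, which is why such cells are easier to configure from a device palette than to program in the software-engineering sense.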

Workcells aren’t so much programmed as they are configured. Manufacturing engineers or technicians use a palette of devices to implement a function in the cell. The goal of this design is to make it easy to assemble workcells of devices with little software effort. Unfortunately, the goal of minimizing software in plants precludes using advanced computing and intelligent systems. As eloquently put by one industry leader:

“One of the harsh truths about manufacturing software is that it is not developed by software engineers or computer science majors. We would not regularly ask an electrical engineer to design a mechanical system, or a chemical engineer to design an electrical system, but we often ask mechanical, electrical, and chemical engineers to design and develop software systems.” (Dennis L. Brandl, Plant IT: Integrating Information Technology into Automated Manufacturing, Momentum Press, 2012)

Brandl’s “harsh truth” simply can’t continue. Superior custom software will take its place above reliability, performance, and interoperability as the key to competition. That means industrial companies will need to write their own code with competitive, professional software teams. You can’t win a software war with someone else’s software.

How can we enable this future? First, we need to understand the available industrial architectural frameworks. Then, we can put them together to enable software-driven IA.

What are OPC UA and DDS?

The leading industrial architectural frameworks are the OPC Unified Architecture (OPC UA, managed by the OPC Foundation) and the Data Distribution Service (DDS, managed by the Object Management Group). Both are widely adopted in industrial systems, although not in the same use cases (Fig. 1). DDS has traction in applications in medical systems, transportation, autonomous vehicles, defense, power control, robotics, and oil and gas. OPC UA is also used in many of these industries, but not in the same applications. Rather, OPC UA is mostly employed in discrete automation and manufacturing. In practice, there’s almost no overlap in use cases.

DDS supports publish-subscribe, as does the new OPC UA “PubSub” specification. But OPC UA does not, and never will, do what DDS does. DDS is fundamentally a software-development architecture; OPC UA is not. Thus, the question isn’t whether to choose DDS or OPC UA. The question is understanding what each does and deciding which one your design needs, or whether it needs both.

That of course leaves the question: Why are they so different?

DDS evolved as a software-development framework for control systems. The first DDS applications were feedback-control systems for intelligent distributed robotics communicating over Ethernet. They used extensive custom software written by computer scientists. Almost all DDS applications integrate an AI component or very smart algorithms. DDS directly targets software teams building intelligent distributed machines.

By contrast, OPC UA grew up in the plant environment where, as Brandl points out, software engineers are rare. Its prime goal was to help PLC-centric workcell designs select different vendor hardware without writing software. The workcells endlessly repeat an operation, but they’re not truly “smart.” OPC UA seeks to minimize, rather than enable, software development.

We must differentiate integrating existing software components from writing new software. OPC UA supports software integration of modules like HMIs and historians. However, it offers no facility for composing intelligent software modules. It’s not a software-development architecture for distributed applications.

What Does OPC UA PubSub Do?

OPC UA PubSub is a simple way to send information from a publisher to many subscribers. At regular intervals, a publisher collects a “DataSet” and writes it to its subscribers. The DataSet is pulled out of the OPC UA information model and is essentially a list of key-value pairs. On the other end, the subscriber unpacks it and pushes it into the UA information model. There’s also support for simple structured data types.
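The DataSet flow can be sketched in a few lines of Python. The classes here (`InformationModel`, `Publisher`, `Subscriber`) are hypothetical stand-ins, not the OPC Foundation API or any SDK; they model only the collect, send, and unpack steps, with no message encoding, network, or publishing timer.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class InformationModel:
    """Hypothetical stand-in for a node's OPC UA address space: name -> value."""
    nodes: Dict[str, Any] = field(default_factory=dict)

@dataclass
class Subscriber:
    model: InformationModel

    def receive(self, dataset: Dict[str, Any]) -> None:
        # Unpack the DataSet and push each value into the local model.
        self.model.nodes.update(dataset)

@dataclass
class Publisher:
    model: InformationModel
    fields: List[str]                                  # variables that form the DataSet
    subscribers: List[Subscriber] = field(default_factory=list)

    def publish(self) -> Dict[str, Any]:
        # Collect the DataSet: a snapshot of key-value pairs from the model.
        dataset = {name: self.model.nodes[name] for name in self.fields}
        for sub in self.subscribers:                   # every subscriber gets the same DataSet
            sub.receive(dict(dataset))
        return dataset

# Usage: a PLC publishes two of its variables to an HMI.
plc = InformationModel({"Temperature": 71.5, "MotorOn": True, "Internal": 0})
hmi = Subscriber(InformationModel())
pub = Publisher(plc, ["Temperature", "MotorOn"], [hmi])
pub.publish()   # in a real system this would run at a fixed publishing interval
```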

Most users plan to use OPC UA PubSub with the UDP transport, which has a few simple options. It can “chunk” data that’s too big to fit into a single network packet (usually only about 1.5 kB). It can resend every message a fixed number of times in hopes of better reliability. But it doesn’t guarantee reliability by detecting and retransmitting lost messages. And it rigidly locks execution: every subscriber must get the same data in the same format. OPC UA PubSub also supports two other, non-real-time messaging middleware options: MQTT and AMQP.
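A rough sketch of the two UDP options, chunking and fixed repetition. The 1,472-byte payload limit is an assumption (a 1,500-byte Ethernet MTU minus IPv4 and UDP headers), and the functions ignore OPC UA’s own message headers. Note what is missing: there is no acknowledgment and no retransmission on loss, so if every copy of one chunk is dropped, the subscriber cannot rebuild the message.

```python
from typing import Iterator, List, Tuple

# Assumption: 1500-byte Ethernet MTU minus 20-byte IPv4 and 8-byte UDP headers.
MAX_PAYLOAD = 1500 - 20 - 8   # 1472 bytes

def chunk(message: bytes, size: int = MAX_PAYLOAD) -> List[bytes]:
    """Split a message that is too big for one datagram into pieces."""
    pieces = [message[i:i + size] for i in range(0, len(message), size)]
    return pieces or [b""]    # an empty message still goes out as one (empty) packet

def send_with_repeats(pieces: List[bytes], repeats: int) -> Iterator[Tuple[int, bytes]]:
    """Yield (sequence number, chunk) pairs, each chunk `repeats` times.

    Nothing here detects a lost chunk or retransmits it on request."""
    for seq, piece in enumerate(pieces):
        for _ in range(repeats):
            yield seq, piece

# Usage: a 4,000-byte message becomes 3 chunks, each sent 3 times.
frames = list(send_with_repeats(chunk(b"x" * 4000), repeats=3))
```

Repetition multiplies bandwidth by `repeats` for every message, whether or not anything was lost, which is the trade-off a reliable protocol avoids by retransmitting only what is missing.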

Fundamentally, OPC UA PubSub offers a simple mechanism to connect variables on a set of tightly coupled devices. Every device gets the same data at the same rates at the same time. With the right companion specifications, it can ensure device interoperability. UA PubSub is very new; there are few deployed applications.

What Does DDS Do?

Unlike OPC UA, DDS supports modular software-defined systems with a simple concept: a shared “global data space.” This simply means that all data appears “as if” it lives right inside every device and algorithm in local memory. It is, of course, an illusion; all data can’t be everywhere. DDS works by keeping track of which application needs what data, knowing when it needs that data, and then delivering it. So, the data actually required by any application is present in local memory on time. Applications talk only to the “local” data space, not to each other.
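A toy, single-process model of the global-data-space idea, in Python. `DataSpace` and `LocalCache` are hypothetical names; real DDS implementations discover interest over the network and apply QoS rather than copying values into a dictionary. What the sketch illustrates is that writers never address readers: the data space delivers each update only to applications that declared interest, and each application reads its own local copy.

```python
from collections import defaultdict
from typing import Any, Dict, List

class LocalCache:
    """What one application sees as its 'local memory' of the data space."""
    def __init__(self, app: str) -> None:
        self.app = app
        self.data: Dict[str, Any] = {}

class DataSpace:
    """Toy model of a DDS-style global data space (hypothetical, single process)."""
    def __init__(self) -> None:
        self._interest: Dict[str, List[LocalCache]] = defaultdict(list)

    def join(self, app: str, topics: List[str]) -> LocalCache:
        cache = LocalCache(app)
        for topic in topics:
            self._interest[topic].append(cache)   # remember which app needs which data
        return cache

    def write(self, topic: str, value: Any) -> None:
        # Deliver only to applications that declared interest in this topic.
        for cache in self._interest[topic]:
            cache.data[topic] = value

# Usage: two applications with different interests share one data space.
space = DataSpace()
planner = space.join("planner", ["RobotPose"])
logger = space.join("logger", ["RobotPose", "Alarm"])
space.write("RobotPose", (1.0, 2.0, 0.5))
space.write("Alarm", "overtemp")
```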

This is the essence of data centricity: “Instant” local access to all data by every device and every algorithm, at every level, in the same way, at any time. It’s best to think of it as a distributed shared memory, similar to a distributed control system (DCS) sandbox RAM, implemented virtually.

Each DDS module specifies the schema (types) it can exchange in memory. DDS controls the flow of data into and out of this structured memory with QoS parameters that specify data rates, latencies, and reliability. There are no servers, objects, or special locations. Since DDS applications interact only with the shared distributed memory, they’re independent of how other applications are written, where they live, or when they execute. The result is a simple, naturally parallel software architecture across the entire system.

DDS implements a long list of features to support software-driven distributed control, including:

- Quality-of-service (QoS) control that decouples software modules

- Redundancy management that increases parallelism

- Built-in discovery that finds the right sources and sinks of data

- Type-compatibility checking and extensibility to support system evolution

- Scoping and bridging to improve scaling

- Security that fits the data-centric architecture

- Transparent routing for top-to-bottom consistent data access

- Data persistence that enables applications to join and leave at any time

- Reader-specified content filtering for scalable efficiency

- Rate control to eliminate rate coupling

Some Key Differences

OPC UA PubSub provides none of the data-centric features that are the core of DDS. Let’s look deeper.

Interoperability

OPC UA enables device interoperability through device models and companion specs for hundreds of situations. OPC UA devices crowd tradeshows, pinned to the wall as evidence of interoperability. The consistent message: Factory engineers and technicians can combine devices into workcells with OPC UA without writing code.

In contrast, no devices today come with DDS pre-installed. That’s because DDS doesn’t integrate devices per se. Instead, DDS integrates software modules. To add devices to a system, DDS users model devices as software.

Instead of specifications for every permutation, DDS integrates everything through a “system data model.” Each device’s functions are mapped from the vendor’s native API to the system data model. DDS vendors offer very sophisticated bridging and “data routing” technologies. Thus, the popular “layered databus” architecture allows systems to grow by connecting data models between layers (Fig. 2). This also means that a DDS system can connect to devices with differing interfaces, to web technologies, and even to OPC UA.
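A minimal sketch of mapping vendor-native data onto a shared system data model. The two vendor formats and the `TemperatureSample` type are invented for illustration; in a real DDS system the common type would be defined in IDL or XML, and the mapping would often live in a bridge or routing service rather than in application code.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class TemperatureSample:
    """Hypothetical system-data-model type that every application uses."""
    sensor_id: str
    celsius: float

# Hypothetical native readings from two vendors with different interfaces.
vendor_a_reading = {"id": "A-17", "temp_f": 212.0}   # dict, degrees Fahrenheit
vendor_b_reading = ("B-03", 2931)                    # tuple, tenths of a kelvin

def from_vendor_a(reading: dict) -> TemperatureSample:
    return TemperatureSample(reading["id"], (reading["temp_f"] - 32.0) * 5.0 / 9.0)

def from_vendor_b(reading: Tuple[str, int]) -> TemperatureSample:
    sensor_id, decikelvin = reading
    return TemperatureSample(sensor_id, decikelvin / 10.0 - 273.15)

samples: List[TemperatureSample] = [from_vendor_a(vendor_a_reading),
                                    from_vendor_b(vendor_b_reading)]
```

Downstream applications consume only `TemperatureSample`, so swapping a vendor means writing one new adapter rather than changing every consumer.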

Coupling

Coupling is a measure of interdependencies between software and system components. Coupling can be obvious, like coupling clients to servers. It can also be subtle, such as when software modules must start in a particular order or execute at the same rate.

The DDS data-centric design makes it seem like all data is local, so the application isn’t coupled to any other application. DDS controls interactions with the data through 21 different QoS policies, including deadlines, latency budgets, update frequencies, history, liveliness detection, reliability, durability, ownership, ordering, and filtering. It also converts types that evolve, as long as they are still close enough to be “compatible.”
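DDS matches several QoS policies on a “requested versus offered” basis: a reader connects to a writer only if what the writer offers is at least as strong as what the reader requests. The Python sketch below models three such policies (reliability, durability, deadline) with hypothetical class names; it is not any vendor’s API, and real implementations check more policies and report mismatches as incompatible-QoS statuses.

```python
from dataclasses import dataclass
from enum import IntEnum

class Reliability(IntEnum):
    BEST_EFFORT = 0
    RELIABLE = 1

class Durability(IntEnum):
    VOLATILE = 0
    TRANSIENT_LOCAL = 1
    TRANSIENT = 2
    PERSISTENT = 3

@dataclass
class Qos:
    reliability: Reliability
    durability: Durability
    deadline_s: float        # maximum period between updates, in seconds

def compatible(offered: Qos, requested: Qos) -> bool:
    """Requested-vs-offered check for three DDS policies (simplified)."""
    return (offered.reliability >= requested.reliability
            and offered.durability >= requested.durability
            and offered.deadline_s <= requested.deadline_s)

sensor = Qos(Reliability.RELIABLE, Durability.TRANSIENT_LOCAL, 0.01)   # offers 100 Hz updates
controller = Qos(Reliability.RELIABLE, Durability.VOLATILE, 0.05)      # needs at least 20 Hz
dashboard = Qos(Reliability.BEST_EFFORT, Durability.PERSISTENT, 1.0)   # wants stored history
```

Here the 100 Hz sensor satisfies the controller, but the dashboard stays unmatched because it requests stronger durability than the sensor offers. Neither application had to know about the other to reach that outcome.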

DDS runs on hundreds of platforms and over dozens of networks transparently. There’s no dependence on language, operating system, chip architecture, or type of network used. Thus, data-centric applications work in parallel and share data transparently without interference. Coupling is only by design.

By contrast, OPC UA applications talk directly to each other. For instance, in PubSub, every subscriber gets exactly the same data from the publisher at the same rate. Each subscriber gets all of the dataflow, so the whole system also relies on having similar connecting network properties and processor speeds/loads everywhere. All subscribers must have the same understanding of the data being sent; therefore, versions must exactly match. Each added participant adds a dependency, directly coupling the system.

Fundamentally, decoupling helps applications and devices work independently. With a few subscribers in a workcell, coupling may not matter. Coupling can even be good, for instance, in synchronous feedback control with minimal delay. In larger systems, however, coupling is bad; loosely coupled systems are easier to scale, test, build with distributed teams, deploy, understand, and maintain. Each source of coupling is a real problem, and combining many sources is debilitating. Good software architecture should avoid coupling unless the application absolutely requires it.

Discovery

DDS automatically discovers named “topics” across the system regardless of where the application lives. Applications don’t have to do anything or have any knowledge to find the data they need.
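A simplified picture of automatic discovery: each application announces the topics it writes and reads, and matching pairs writers with readers by topic name and type. This is a hypothetical sketch; real DDS discovery exchanges these announcements over the network (the RTPS discovery protocols) and checks type compatibility and QoS rather than exact type-name equality.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Endpoint:
    app: str
    topic: str
    type_name: str
    is_writer: bool

def match(announcements: List[Endpoint]) -> List[Tuple[str, str, str]]:
    """Pair every writer with every reader announcing the same topic and type."""
    writers = [e for e in announcements if e.is_writer]
    readers = [e for e in announcements if not e.is_writer]
    return [(w.app, r.app, w.topic)
            for w in writers for r in readers
            if w.topic == r.topic and w.type_name == r.type_name]

# Hypothetical announcements; no application names any other application.
announcements = [
    Endpoint("lidar", "PointCloud", "PointCloud2", True),
    Endpoint("planner", "PointCloud", "PointCloud2", False),
    Endpoint("planner", "Path", "Trajectory", True),
    Endpoint("drive", "Path", "Trajectory", False),
    Endpoint("logger", "PointCloud", "PointCloud2", False),
]
links = match(announcements)
```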

OPC UA doesn’t support discovery in this sense. Subscribers query a server to get a configuration that includes the publisher of the data they require. They can also introspect a publisher to see what it can publish. Both are active queries; OPC UA doesn’t perform system-wide automatic discovery.

Security

OPC UA and DDS use fundamentally different approaches to security. OPC UA secures the underlying transports. The various PubSub middleware options and client-server each require different security implementations (and often, certificates). It’s possible to set up secure connections between OPC UA clients and services, but there’s no generic way to indicate which data is allowed to flow to which client.

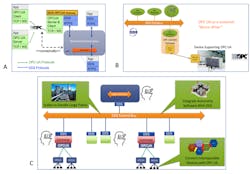

DDS also can secure the underlying transports, but the primary approach instead provides overall dataflow security regardless of transport. DDS is fundamentally a flow-control technology. So, with a signed permissions document that describes allowed read and write access, DDS can secure and control the dataflow itself (Fig. 3). This requires no code; security can be added after the entire system is running.
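The access check that a permissions document drives can be sketched as follows. In DDS Security the permissions document is a signed XML file with grants per subject; this Python sketch keeps only the topic-name patterns (shell-style wildcards via `fnmatch`) and uses invented subject and topic names. It is a simplification under stated assumptions, not the specification’s full rule set: domains, validity periods, and default allow/deny are omitted.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical, simplified permissions: subject -> action -> allowed topic-name patterns.
PERMISSIONS: Dict[str, Dict[str, List[str]]] = {
    "CN=hmi":        {"publish": ["Operator*"], "subscribe": ["*"]},
    "CN=controller": {"publish": ["Motor*"],    "subscribe": ["Sensor*", "Operator*"]},
}

def allowed(subject: str, action: str, topic: str) -> bool:
    """Return True if `subject` may `action` ("publish"/"subscribe") on `topic`."""
    patterns = PERMISSIONS.get(subject, {}).get(action, [])
    return any(fnmatchcase(topic, p) for p in patterns)   # unknown subjects get nothing
```

Because the check depends only on subject, action, and topic, it can be enforced by the middleware without any change to application code.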

Scaling

DDS supports domains that separate systems, partitions within a system, and transparent routing between subsystems and networks. With transparent routing, the data sources can be very far away. DDS systems can grow to hundreds of thousands of applications. Top to bottom, across subnets, or with any pattern (pubsub, request-reply, or queuing), DDS offers a unified data model, a single security model, and consistent access to data.
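How domains and partitions scope communication can be sketched as a simple predicate: endpoints talk only within the same domain, and only if they share a partition name. This is a simplified, assumption-based model; DDS partitions also accept wildcard names, which this sketch does not handle, and routing between domains is out of scope.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class EndpointScope:
    domain_id: int
    partitions: List[str] = field(default_factory=lambda: [""])   # "" = default partition

def can_communicate(a: EndpointScope, b: EndpointScope) -> bool:
    """Same domain and at least one shared partition name (wildcards not modeled)."""
    return a.domain_id == b.domain_id and bool(set(a.partitions) & set(b.partitions))

# Hypothetical plant: one domain split into production-line partitions.
line1_cell = EndpointScope(domain_id=1, partitions=["Line1"])
line2_cell = EndpointScope(domain_id=1, partitions=["Line2"])
line_monitor = EndpointScope(domain_id=1, partitions=["Line1", "Line2"])
other_plant = EndpointScope(domain_id=7, partitions=["Line1"])
```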

Since there are few deployments, the scalability of OPC UA PubSub has yet to be demonstrated. However, OPC UA PubSub over UDP isn’t designed for more than a few devices on a single network. With MQTT or AMQP, a publisher can talk to a cloud server, but not to other OPC UA PubSub subscribers. OPC UA client-server is designed to roll up workcells into a larger factory. So, OPC UA doesn’t offer unified system data access the way DDS does.

Filtering

OPC UA subscribers can select a DataSet with a filter, but only to ensure reception of the correct data. They can also limit access to a single publisher.

DDS has extensive filtering. QoS matching enables subscribers to receive information only from capable sources. The time-based filter decouples the speed of producers from that of the users of information. Content filtering analyzes the content and delivers only data that fits the specification. Together, these filters ensure delivery of the right data to the right place at the right time with minimal wasted bandwidth.
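Both reader-side filters can be sketched together. `FilteredReader` is hypothetical Python, not a DDS API: in DDS the time-based filter is a QoS setting (a minimum separation between delivered samples), content filters are SQL-like expressions, and an implementation may apply filters at the writer to save bandwidth. Times here are integer milliseconds to keep the comparison exact.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Sample = Dict[str, float]

@dataclass
class FilteredReader:
    """Hypothetical reader applying a content filter and a time-based filter."""
    min_separation_ms: int                      # time-based filter: at most one sample per interval
    content_filter: Callable[[Sample], bool]    # content filter: keep only matching samples
    received: List[Sample] = field(default_factory=list)
    last_delivered_ms: Optional[int] = None

    def offer(self, t_ms: int, sample: Sample) -> None:
        """Called for each sample the writer publishes at time t_ms."""
        if not self.content_filter(sample):
            return                              # content does not match: drop
        if (self.last_delivered_ms is not None
                and t_ms - self.last_delivered_ms < self.min_separation_ms):
            return                              # too soon after the last delivery: drop
        self.last_delivered_ms = t_ms
        self.received.append(sample)

# Usage: the writer publishes every 10 ms for one second with a rising temperature;
# this reader wants at most one sample per 250 ms, and only above 50 degrees.
reader = FilteredReader(min_separation_ms=250,
                        content_filter=lambda s: s["temperature"] > 50.0)
for i in range(100):
    reader.offer(i * 10, {"temperature": float(i)})
```

Of the 100 samples published, this reader keeps two: the first that passes the content filter, and the next one at least 250 ms later. The fast writer never has to slow down for the slow reader.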

Use of TSN

TSN, or Time-Sensitive Networking, is a developing set of IEEE 802.1 standards that build on the existing Ethernet design to deliver data in bounded time, aka “isochronous” networking.

With TSN, OPC UA PubSub can deliver better real-time performance. TSN can be reliable, so the lack of reliability in OPC UA over UDP isn’t as critical. However, since TSN is a flavor of Ethernet and requires central configuration, it’s limited to smaller, single-subnet systems.

DDS was initially developed for real-time control over a network. QoS settings can optimize use of the underlying network, supporting everything from slow, lossy networks like satellite links to isochronous transports like backplane buses and switched fabrics. On capable hardware, it offers predictable and fault-tolerant one-to-many delivery with bounded latencies.

Combining DDS with TSN allows more distributed determinism. A DDS over TSN system can combine high-level data access with true real-time performance. The OMG has an active standard in progress for DDS over TSN scheduled for release in Q4 2020.

When Should We Combine Capabilities?

OPC UA’s main use case is to help manufacturing engineers build workcells without writing software. It’s used in manufacturing “things,” but not as software inside the “things” being manufactured. In stark contrast, DDS users are software engineers building applications that go into things; DDS is only used to operate things. Thus, UA is about making things. DDS is about making things work.

An overlap is emerging in things that make things, aka smart manufacturing systems. These will soon need sophisticated system software and custom programming.

The OMG recently passed a standard for an OPC UA/DDS gateway (Fig. 4). At its most basic level, this gateway takes the OPC UA information model and makes it available within the DDS global data space. The primary use case is to convert OPC UA-enabled devices to DDS devices. OPC UA devices can simply join the DDS network.
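A very rough sketch of the gateway idea: read values from OPC UA nodes and republish them as samples on DDS topics according to a mapping table. The mapping, topic, and field names are hypothetical, and so is the `dds_write` callback; the OMG gateway specification defines its own mapping rules, which this sketch does not attempt to reproduce.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical mapping: OPC UA NodeId -> (DDS topic, field in that topic's type).
MAPPING: Dict[str, Tuple[str, str]] = {
    "ns=2;s=Press1.Force":   ("PressStatus", "force_kN"),
    "ns=2;s=Press1.Running": ("PressStatus", "running"),
}

def bridge(ua_values: Dict[str, Any],
           dds_write: Callable[[str, Dict[str, Any]], None]) -> None:
    """Group mapped OPC UA values into one sample per DDS topic and write each."""
    samples: Dict[str, Dict[str, Any]] = {}
    for node_id, value in ua_values.items():
        if node_id not in MAPPING:
            continue                      # unmapped nodes are not published to DDS
        topic, field_name = MAPPING[node_id]
        samples.setdefault(topic, {})[field_name] = value
    for topic, sample in samples.items():
        dds_write(topic, sample)

# Usage with a stand-in writer that just records what would be published.
written: List[Tuple[str, Dict[str, Any]]] = []
bridge({"ns=2;s=Press1.Force": 12.5,
        "ns=2;s=Press1.Running": True,
        "ns=2;s=Press1.Serial": "X9"},
       lambda topic, sample: written.append((topic, sample)))
```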

In a large intelligent system, the DDS-based software environment can thus coordinate and work with the OPC UA devices. This allows for both sophisticated software and interoperable devices.

Note that this design offers many opportunities to leverage AI and intelligent software. At high levels, DDS interfaces to cloud-based intelligence. Future generations of this design can also implement AIs or smart algorithms for device interoperability.

The Smart Machine Future

Historians will look back on our time and wonder how we got by without smart machines. The transition will not be smooth—product lines, companies, and entire national economies are at stake. Brandl’s “harsh truth” may be the most dangerous paragraph in automation. That truth will not survive the smart machine era.

So, software excellence must join the manufacturing technology mix. In the next 20 years, manufacturing system performance will not improve by a factor of 10,000. Interoperability will not become 10,000X more valuable. But software will become 10,000X more important. That’s the inevitable result of exponential computing growth. Any architecture that doesn't target using compute power as its primary goal is already obsolete.

Does industrial automation need to adopt software development? Will future manufacturing systems compete primarily on unique user code? The software future says yes.

Stan Schneider, PhD, is CEO of Real-Time Innovations.

About the Author

Stan Schneider

CEO, Real-Time Innovations

Dr. Stan Schneider is CEO of Real-Time Innovations (RTI), the Industrial Internet of Things connectivity company. Stan serves as Vice Chair of the Industrial Internet Consortium (IIC) Steering Committee. He also serves on the advisory board for IoT Solutions World Congress and chairs OpenFog’s Fog World keynote committee. He received Embedded Computing Design’s Top Embedded Innovator Award in 2015 and was named one of IoTOne’s top-10 most influential in the IIoT in 2017. He holds a PhD in EE/CS from Stanford.