These Technologies Help Speed the Adoption of Augmented-Reality Systems

What you’ll learn:

- Technologies that are important to AR and how they work: full-color laser modules, inertial measurement units (IMUs), and ultrasonic time-of-flight (ToF) sensors.

- How to assess sensor technology through performance, power, budget, code, and size.

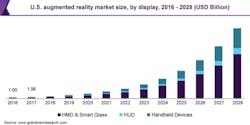

When someone mentions augmented reality (AR) systems, many people think of an immersive gaming environment. Besides gaming, AR-driven headsets, smart glasses, and other wearable devices have a bright future in training, healthcare, automotive, retail, and other solutions. Grand View Research estimates that globally, the AR market was worth $17.67 billion in 2020 and will expand at a compound annual growth rate (CAGR) of 43.8% between 2021 and 2028.

Augmented Reality Market Forecast, 2021 - 2028

The Fundamentals: Components of an AR Environment

Augmented reality creates an immersive experience by using technology to overlay digital information onto a live camera feed. An AR system is made up of hardware, software, and an application. The hardware components include a processor, a graphics processing unit (GPU), and sensors. The processor coordinates and analyzes sensor inputs and stores and retrieves data. The GPU handles the visual display, and the sensors ensure that your device operates correctly in its environment.

Sensors, which are responsible for motion tracking, are key components of the system. They include accelerometers, which measure linear acceleration along three perpendicular axes, and gyroscopes, which measure the rate of rotation around those axes (roll, pitch, and yaw) to determine orientation. In the example below, the Z-axis is the forward direction: roll is the rotation around the Z-axis, pitch is the rotation around the X-axis, and yaw is the rotation around the Y-axis.
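To make the axis convention concrete, the sketch below builds a rotation matrix from roll, pitch, and yaw using the assignment described above (roll about Z, pitch about X, yaw about Y) and applies it to the forward vector. The rotation order (roll first, then pitch, then yaw) is an assumption, since conventions vary between systems, and the helper names are hypothetical.

```python
import math


def rot_x(a):
    """Pitch: rotation by angle a (radians) about the X-axis."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    """Yaw: rotation by angle a (radians) about the Y-axis."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    """Roll: rotation by angle a (radians) about the Z-axis (forward)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply(m, v):
    """Rotate a 3-vector v by the 3x3 matrix m."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def orientation(roll, pitch, yaw):
    # Assumed order: roll is applied first, then pitch, then yaw (R = Ry * Rx * Rz).
    return matmul(rot_y(yaw), matmul(rot_x(pitch), rot_z(roll)))


FORWARD = [0.0, 0.0, 1.0]  # the Z-axis is the forward direction
```

Rolling leaves the forward vector unchanged, as expected for a rotation about the forward axis, while a 90° yaw swings forward from +Z to +X.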

Source: arXiv.org, Cornell University

An inertial measurement unit (IMU) is a device that combines several sensors (accelerometers, gyroscopes, and sometimes magnetometers) to measure the acceleration and angular velocity of an object. An IMU can help you track motion in a variety of applications, from headsets and mobile phones to industrial equipment.
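Orientation is not measured directly: a gyroscope reports angular velocity, and the angle is the running sum of rate × Δt. The minimal single-axis sketch below (a hypothetical `integrate_gyro` helper using simple Euler integration) shows this, and also how even a small constant bias accumulates into drift.

```python
def integrate_gyro(rates_dps, dt_s, bias_dps=0.0):
    """Dead-reckon a single-axis angle (degrees) by summing rate * dt.

    rates_dps: angular-velocity samples in degrees per second.
    dt_s: sample period in seconds.
    bias_dps: hypothetical constant offset added to every sample to illustrate drift.
    """
    angle = 0.0
    for rate in rates_dps:
        angle += (rate + bias_dps) * dt_s  # rectangular (Euler) integration step
    return angle
```

At 100 Hz, a steady 90°/s rotation for 1 s integrates to 90°, but a 0.5°/s bias on a device that is sitting still accumulates to 30° of error after one minute.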

IMUs are useful in industrial settings for condition-based monitoring. For example, if you place an IMU on a pump or other rotating machine to monitor vibration frequency, the accelerometer data can reveal problems before they become serious. In a new system, you can pair an accelerometer that measures these changes with machine learning (ML) and artificial intelligence (AI). This makes it possible to establish a baseline and teach the system to identify the changes you need to worry about.
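As a rough illustration of the baseline idea, the sketch below reduces a window of accelerometer samples to two features, the RMS vibration level and the dominant frequency (found with a naive discrete Fourier transform), and flags a window that drifts from a recorded baseline. It is a simple threshold check standing in for the ML/AI approach described above, not TDK's method; the function names and the `rms_ratio` and `freq_tol_hz` thresholds are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square vibration level of a window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def dominant_frequency(samples, fs_hz):
    """Frequency (Hz) of the largest DFT bin, excluding DC."""
    n = len(samples)
    mean = sum(samples) / n  # remove the DC offset (e.g., gravity) first
    best_k, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        re = sum((x - mean) * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = -sum((x - mean) * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_k, best_mag = k, mag
    return best_k * fs_hz / n


def check_against_baseline(samples, fs_hz, baseline_rms, baseline_freq_hz,
                           rms_ratio=1.5, freq_tol_hz=5.0):
    """Return a list of alerts when level or dominant frequency leaves the baseline."""
    alerts = []
    if rms(samples) > rms_ratio * baseline_rms:
        alerts.append("vibration level")
    if abs(dominant_frequency(samples, fs_hz) - baseline_freq_hz) > freq_tol_hz:
        alerts.append("dominant frequency")
    return alerts
```

For example, with a 50 Hz baseline tone sampled at 1,000 samples/s, doubling the vibration amplitude trips the level check, while a shift to 80 Hz trips the frequency check. A naive DFT costs O(n²), so an FFT would be the practical choice for longer windows.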

On that front, TDK develops a range of IMUs, such as a six-axis IMU with a three-axis acceleration sensor and three-axis gyroscope, a seven-axis IMU with a barometric pressure sensor, and a nine-axis IMU that also includes a three-axis compass.

To optimize AR performance, you want motion sensors with low noise and latency, good stability over temperature, and high sensitivity accuracy. For instance, consider devices like the InvenSense six-axis family of motion sensors, which combine a built-in three-axis gyroscope and three-axis accelerometer. With intelligent on-board processing and built-in smart power management, these high-performance sensors let you implement navigation, imaging, and AR applications that deliver the best user experience.

Measuring Distance and Volume

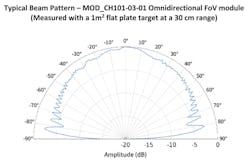

Ultrasonic time-of-flight (ToF) sensors measure distance by sending out an ultrasonic pulse and timing how long it takes for the echo to return to the sensor. You can use ToF sensors in non-contact measurement applications such as range finding, position and proximity sensing, and object tracking.
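The distance calculation itself is simple: because the pulse travels to the target and back, the one-way distance is half the round-trip path. The sketch below assumes propagation through dry air and uses the common linear approximation c ≈ 331.3 + 0.606·T m/s (T in °C); the function names are hypothetical, not part of any TDK driver API.

```python
def speed_of_sound_mps(temp_c=20.0):
    """Approximate speed of sound in dry air (m/s); linear fit valid near room temperature."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s, temp_c=20.0):
    """Distance to the target: the pulse travels out and back, so halve the path length."""
    return speed_of_sound_mps(temp_c) * round_trip_s / 2.0
```

At 20 °C, an object 1 m away returns an echo after roughly 5.8 ms, which sets a lower bound on the time between pings at that range.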

By measuring the time of flight, a ToF sensor can determine the location of an object relative to the device and trigger a programmed behavior. Data generated by ToF sensors helps with depth perception, navigation, and gesture recognition, and it can improve 3D images and enhance the immersive experience in an AR environment.
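Triggering a programmed behavior from a stream of distance readings usually needs some hysteresis, so the behavior doesn't fire repeatedly while a target hovers near the threshold. A minimal sketch, assuming distances in meters arrive one reading at a time; the `ProximityTrigger` class and its thresholds are hypothetical.

```python
class ProximityTrigger:
    """Fire a callback when a target comes within near_m; re-arm only after it
    moves beyond far_m, so readings jittering near one threshold don't chatter."""

    def __init__(self, near_m, far_m, on_near):
        if far_m <= near_m:
            raise ValueError("far_m must exceed near_m")
        self.near_m = near_m
        self.far_m = far_m
        self.on_near = on_near
        self.armed = True

    def update(self, distance_m):
        if self.armed and distance_m <= self.near_m:
            self.armed = False
            self.on_near(distance_m)  # the programmed behavior
        elif not self.armed and distance_m >= self.far_m:
            self.armed = True  # target has clearly moved away; allow the next trigger
```

The gap between `near_m` and `far_m` should exceed the sensor's measurement noise; otherwise the trigger can still re-arm and fire on jitter.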

Multiple ToF sensors can also be used in a pitch-catch mode, in which one ToF sensor sends (pitches) the ultrasonic pulse and one or more ToF sensors receive (catch) it. When the clocks in the ToF sensors are synchronized, the pulse's time of flight gives an accurate distance between the sensors. Multiple receivers enable precise triangulation of the distance and orientation between two objects, such as a VR headset and its controllers. In this mode, ToF sensors enable a new six-degrees-of-freedom (6-DoF), inside-out VR experience without the need for motion-tracking cameras or other infrastructure.
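Pitch-catch ranging differs from echo ranging in one detail: with synchronized clocks, the measured time covers a single path, so there is no halving. With three receivers at known positions, the transmitter's location can then be solved by intersecting distance circles (strictly, distance-based positioning is trilateration). The 2D sketch below is a simplified illustration under assumed receiver positions and a fixed 343 m/s speed of sound; a real 6-DoF tracker would solve in 3D, use more receivers to recover orientation, and handle measurement noise, for example with least squares.

```python
import math

SPEED_OF_SOUND_MPS = 343.0  # assumption: dry air near 20 °C


def one_way_distance_m(tof_s):
    # Clocks are synchronized, so the time covers one path (no division by 2).
    return SPEED_OF_SOUND_MPS * tof_s


def trilaterate_2d(receivers, distances):
    """Locate a transmitter in 2D from three receivers at known (x, y) positions.

    Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = di^2 from the
    other two cancels the squared unknowns, leaving a linear 2x2 system.
    """
    (x1, y1), (x2, y2), (x3, y3) = receivers
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("receivers are collinear; position is ambiguous")
    # Cramer's rule for the 2x2 system.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y
```

For instance, with receivers 20 cm apart on a headset and a controller at (0.5, 0.3) m, the three one-way times of flight recover the controller's position exactly in this noise-free case.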

IMU Performance Matters: Reducing Noise, Bias, and Latency

When working with vision-based applications like VR and AR, any noise, errors, and inaccuracies are easily seen. No one wants to hold a controller still only to find that it doesn't point where expected, or that the pointer moves even though their hand doesn't. The same is true of 3D images: the eye easily detects unexpected changes in how an image moves.

Therefore, for IMUs, common performance specs like noise, bias, and latency matter to the overall immersion that VR and AR systems try to achieve. Accelerometers and magnetometers are noisy sensors, and the filtering needed to clean up their output slows their effective response in fast-moving systems. Gyroscopes are fast and accurate but susceptible to drift. How fast you can sample your sensors and translate the readings into data that's usable for your application is critical. Furthermore, keeping the sensors calibrated by removing ever-changing biases while the system is in use is a fundamental challenge that must be addressed.
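One common, generic way to combine these complementary strengths is a complementary filter: integrate the gyroscope for fast response while continuously pulling the estimate toward the accelerometer's drift-free but noisy tilt reading. The single-axis sketch below is a textbook illustration, not TDK's MotionFusion algorithm; `complementary_filter` and the `alpha` value of 0.98 are hypothetical choices, and it assumes the accelerometer tilt angles have already been computed from the gravity vector.

```python
def complementary_filter(gyro_rates_rps, accel_angles_rad, dt_s, alpha=0.98):
    """Fuse gyro rates (rad/s) with accelerometer tilt angles (rad) on one axis.

    alpha close to 1 trusts the integrated gyro over short time scales;
    the (1 - alpha) share slowly pulls the estimate toward the accelerometer,
    which bounds the drift caused by gyro bias.
    """
    angle = accel_angles_rad[0]  # initialize from the accelerometer
    for rate, acc_angle in zip(gyro_rates_rps, accel_angles_rad):
        angle = alpha * (angle + rate * dt_s) + (1.0 - alpha) * acc_angle
    return angle
```

With a constant gyro bias b of 0.01 rad/s, a level accelerometer, and 100 Hz sampling, the fused angle settles near alpha·b·dt/(1 - alpha) ≈ 0.005 rad, whereas integrating the gyro alone drifts without bound (0.1 rad after 10 s).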

To tackle those issues, TDK developed a complete suite of SmartMotion sensor solutions for high performance and low noise. The Advanced Sensor Algorithm solutions for the company's DK-42688-V product address many of the challenges of an AR/VR system. TDK uses a specialized gyroscope calibration system created specifically for head-worn devices, and its MotionFusion algorithms provide a fast response rate and the ability to track small, quick movements. All of this builds on the sensor hardware of the ICM-42688-V.

Downsizing While Maintaining Performance

The challenge for many AR and VR applications is that users seldom want to walk around wearing big devices. The size, weight, and performance of early sensors made it difficult to create lightweight devices, which limited the user experience. Traditional sensors often require complex signal processing and are too large to embed in consumer or industrial applications. Ideally, you want to decrease the size of the sensors while maintaining or even improving performance.

Microelectromechanical systems (MEMS) is a process technology that combines silicon-based microelectronics with micromachining technology. Mechanical and electrical components are fabricated using integrated-circuit (IC) batch processing techniques. MEMS devices can sense, control, and actuate on a micro level while ranging in size from a few micrometers to millimeters.

MEMS devices are well suited for a variety of applications, including obstacle avoidance, presence detection, robotics, security and surveillance, AR/VR, drones, liquid-level sensing, smart homes and buildings, and the Internet of Things (IoT) in general.

TDK offers a variety of MEMS-based devices, such as accelerometers and gyroscopes for navigation, motion tracking and control, and optical stabilization. Recently, the company launched the Chirp CH-101, an ultrasonic MEMS-based ToF sensor for high-performance range and distance sensing.

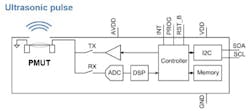

The CH-101 combines a piezoelectric micromachined ultrasonic transducer (PMUT), a power-efficient digital signal processor (DSP), and a low-power CMOS ASIC in a 3.5- x 3.5- x 1.25-mm package. The sensor supports a maximum sensing range of 1 meter, providing range sensing with millimeter precision while consuming very little power.

Block diagram of TDK Chirp Microsystems’s MEMS ultrasonic ToF sensor.

The CH-101 sensor can handle various ultrasonic signal-processing functions, enabling flexible industrial design options. Scenarios for implementing the CH-101 sensor include AR systems, smart home, drones and robotics, connected IoT devices, mobile and wearable devices, and automotive solutions.

The Next-Gen Laser Module

As video display technologies and software continue to evolve, new devices are being used in new ways. Smart glasses, for example, are wearable devices that overlay information on real environments. These and other display devices have applications in medical, factory, and other industries.

The main limitation to their use has been the size of the laser module. Conventional displays reflect the three RGB primary colors from laser elements onto lenses and mirrors, and then project them as a single beam of light to display an image. Because this approach uses multiple components, it increases the size of the space-optics module.

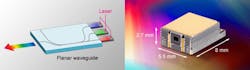

To solve this problem, TDK employs Planar Waveguide Technology, which forms optical waveguides on a planar substrate. As a result, the laser element for displaying images on AR smart glasses is significantly smaller.

Combining Planar Waveguide Technology with the company's high-precision manufacturing techniques reduces the final module to one-tenth the typical size of a space-optics module. It also displays images in full color depth, up to a maximum of approximately 16.2 million colors. This tiny laser module can be integrated into new solutions, such as vision aids that project clear images directly onto the retina.

New optics module based on planar waveguide technology.

Size, Performance, and the User Experience

As the market for AR systems expands, sensors and other AR technologies will determine whether your designs can deliver the power and performance needed to move beyond today's large form factors. Building smaller, energy-efficient AR systems relies on reducing component size, using high-performance sensing technology, and focusing on the user experience.

Designing with sophisticated, accurate location and motion detectors will provide a realistic experience in a virtual space and expand the market for AR wearables and other AR systems.

About the Author

Peter Hartwell

Chief Technology Officer and VP Sensor Solutions, InvenSense

Peter Hartwell has a strong background in innovation and strategy development, with over 40 worldwide patents. He's passionate about the ongoing sensor revolution and was an early visionary in the development and application of the Internet of Things (IoT). He has diverse experience, from industrial research to consumer electronics to silicon sensor design and manufacturing, with over 25 years in the MEMS and sensors field. He's also an experienced public speaker who particularly enjoys presenting very technical subjects to a broad audience with an interactive and approachable style.