Here's the bad news: The Environmental Protection Agency expects the nation's data centers to be consuming more than 100 billion kilowatt-hours (kWh) a year by 2011, at an annual cost of about $7.4 billion. That's about twice what they consumed in 2006, when data centers already drew more electricity than 5.8 million U.S. households.

Now here's the worse news: Data centers waste most of the energy they draw. That's because they must be ready to handle peak processing demands, even though they typically idle along at a 20 to 30% utilization rate. All in all, experts figure that data centers draw 60% of their peak power even when they're doing nothing.
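One simple way to see why low utilization is so wasteful is a linear power model, in which a server draws a fixed idle power plus a share of its remaining headroom proportional to load. The sketch below assumes that model and plugs in the 60% idle figure quoted above; the 400-W peak draw is a hypothetical value chosen only for illustration.

```python
def server_power(utilization, peak_watts, idle_fraction=0.6):
    """Estimate a server's draw (W) with a linear model: a fixed idle power
    plus a share of the remaining headroom proportional to utilization."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    idle_watts = idle_fraction * peak_watts
    return idle_watts + (peak_watts - idle_watts) * utilization


def energy_per_unit_work(utilization, idle_fraction=0.6):
    """Energy per unit of useful work, relative to a fully loaded server (1.0)."""
    # Normalize peak power to 1 W so the result is a pure ratio.
    return server_power(utilization, 1.0, idle_fraction) / utilization


PEAK_W = 400.0  # hypothetical peak draw of one server, for illustration only
for u in (0.2, 0.3, 1.0):
    print(f"{u:.0%} load: {server_power(u, PEAK_W):.0f} W, "
          f"{energy_per_unit_work(u):.1f}x the energy per unit of work")
```

Under these assumptions, a server running at 20% load spends about 3.4 times as much energy per unit of work as a fully loaded one, which is the basic argument for consolidation.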

This sort of energy inefficiency hasn't gone unnoticed. The EPA's Energy Star rating program for data center servers went into effect last May. But experts point out that it isn't comprehensive: It does not cover all types of servers, and it excludes blade servers, which are the latest rage. It is also so specific to certain operating conditions that many servers can't qualify for certification under its rating system. Nevertheless, it is a good beginning.

“This is a great first step,” says Subodh Bapat, vice president and distinguished engineer in the sustainability office of Sun Microsystems. “It's been important for some time, given the power issues of the data center, to give transparency to the energy use of servers,” he adds.

Even so, market analysts at IDC figure we now spend roughly 50 cents on energy for every dollar spent on computer hardware, and that ratio is expected to worsen over the next four years. Despite a lot of recent talk about green data centers and server farms, server energy use has largely been treated as a nonproblem. All too often, the energy bills for computers were lumped into the operating company's overall utility bill and treated as merely a cost of doing business.

But that attitude is changing as data center operators realize how staggering the electricity costs of data center inefficiency are. To get an even better feel for the scale of the problem, consider one comparison based on the nationsencyclopedia.com Web site. It reports that annual energy consumption for the entire country of Sweden currently stands at about 150 billion kWh, only about 50% more than what U.S. data centers by themselves are expected to consume two years from now.

Worldwide, more than 60 million servers are estimated to be in operation today, drawing a whopping 60 GW of power. No surprise, then, that operators of large data centers and server farms are trying to cut energy use on two fronts: by having their servers generate less heat, which in turn reduces air-conditioning costs, and by lowering the power consumption of the uninterruptible power supplies (UPSs) that back up operations.
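Because a gigawatt is a rate of energy use rather than an amount of energy, turning the worldwide figure into an annual energy total means multiplying by the hours in a year. The sketch below assumes the 60 GW is a continuous, year-round average draw, which is an assumption rather than something the estimate states.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h, ignoring leap years


def annual_energy_kwh(average_power_gw):
    """Convert a continuous average power draw in GW to energy per year in kWh."""
    kilowatts = average_power_gw * 1e6  # 1 GW = 1,000,000 kW
    return kilowatts * HOURS_PER_YEAR


# 60 GW drawn around the clock for one year (assumed continuous)
print(f"{annual_energy_kwh(60) / 1e9:.1f} billion kWh per year")
```

If the draw really is continuous, that works out to roughly 525 billion kWh a year, about 3.5 times Sweden's 150 billion kWh.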

There are other things a data center operator can do to consume less energy. Surprisingly, just turning up the thermostat can help. The room-temperature guidelines for computer and communication centers from the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) range as high as 77°F. Yet many a data center sits at a cool 70°F.

Compounding the problem, most data centers and server farms are getting old. A recent IDC survey reported that the average U.S. data center is 12 years old, which makes many of them relics of the pre-dot-com era. In many cases, this also means that pre-dot-com air-conditioning and power supplies still handle at least some parts of the facilities.

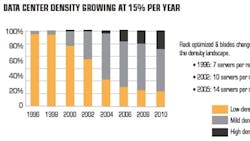

And, of course, the number of servers per rack enclosure is rising rapidly. According to the Cherokee International unit of Lineage Power Holdings, a maker of switch-mode power supplies for data centers, the average rack enclosure housed just seven servers in 1996. Starting next year, equipment racks will be crammed with 20 servers each as new high-density blade-type units become available.

Worrying Statistics

In a 2007 report called “Report on Server and Data Center Energy Efficiency,” the EPA projects that if data centers keep consuming electricity at their historical rate, their electricity use, already measured in billions of kWh per year, will continue to climb over the next few years. The agency figures that if current trends continue, the increased demand for power by data centers will require an additional 10 power plants in North America by 2011.

The EPA report considers various scenarios that could save 23 to 74 billion kWh of electricity by 2011, depending on how well energy consumption is managed. The EPA figures such savings could reduce peak loads from data centers and server farms enough that the country would need perhaps 15 fewer power plants, and could cut data center electricity costs by $1.6 billion.

To improve data center energy efficiency, the EPA is collaborating with The Green Grid, a global consortium of IT concerns trying to improve energy efficiency in data centers by developing a common set of metrics, processes, and new technologies. Green Grid members include AT&T, Emerson Network Power, American Power Conversion Corp. (APC), and IBM. One of their first tasks is to develop an Energy Star data center infrastructure rating. The rating will help data center operators assess the energy performance of their infrastructures and identify the areas with the greatest opportunity for improvement.

Indications are that the IT community is ready to embrace such metrics. In a small survey by data center software maker Cassatt Corp., nearly two-thirds of the IT personnel polled considered their data center energy efficiency average or worse. And 63% were either conducting a data center energy efficiency project or expected to do so shortly.

Another study, performed by Lawrence Berkeley National Laboratory (LBNL) for the American Council for an Energy-Efficient Economy, showed that data centers can be as much as 40 times more energy-intensive than conventional office buildings. One reason: Data centers and server farms operate continuously, whereas office workers generally work 9 to 5. The study, conducted in 2006, found that typical state-of-the-art servers consumed more than $17,000 per year in electricity at 10 cents per kilowatt-hour.
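Working backward from the study's dollar figure shows how much continuous power it represents. The sketch below assumes the equipment runs around the clock at the quoted flat rate of 10 cents per kWh; the function name is hypothetical.

```python
from typing import Tuple

HOURS_PER_YEAR = 24 * 365  # 8,760 h; equipment assumed to run continuously


def implied_load(annual_cost_usd: float, price_usd_per_kwh: float) -> Tuple[float, float]:
    """Return (annual energy in kWh, average continuous draw in kW) implied by a bill."""
    annual_kwh = annual_cost_usd / price_usd_per_kwh
    average_kw = annual_kwh / HOURS_PER_YEAR
    return annual_kwh, average_kw


kwh, kw = implied_load(17_000, 0.10)
print(f"{kwh:,.0f} kWh per year, an average draw of about {kw:.1f} kW")
```

At the quoted rate, $17,000 a year corresponds to roughly 170,000 kWh, or an average draw of about 19 kW.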

The study proposes a metric to gauge the energy efficiency of a data center: the computer power consumption index. This is the ratio of the power consumed by the computers themselves to the total power consumption of the data center (including the computers, power conditioning, HVAC, lighting, and so forth), so the higher the number, the better. Across the 22 data centers studied, the index varied by more than a factor of two from the worst centers to the best. But even the best data centers had room for improvement.
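A minimal sketch of the index, taking it as the share of total facility power that actually reaches the computing equipment, so that 1.0 would mean no overhead at all. The function name and the two example facilities are hypothetical; their numbers are chosen only to reproduce a factor-of-two spread like the one the study reported.

```python
def computer_power_consumption_index(computer_kw, total_facility_kw):
    """Fraction of total data center power that reaches the computers.

    1.0 would mean zero overhead for cooling, power conversion, lighting, etc.
    """
    if total_facility_kw <= 0 or not 0 <= computer_kw <= total_facility_kw:
        raise ValueError("need total_facility_kw > 0 and 0 <= computer_kw <= total")
    return computer_kw / total_facility_kw


# Two hypothetical facilities with the same 500-kW computing load
poor = computer_power_consumption_index(500, 1500)  # overhead is twice the IT load
good = computer_power_consumption_index(500, 750)   # overhead is half the IT load
print(f"poor: {poor:.2f}, good: {good:.2f}, ratio: {good / poor:.1f}x")
```

The Green Grid's Power Usage Effectiveness (PUE) metric is the reciprocal of this ratio (total facility power divided by IT equipment power), so for PUE, lower is better.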

Data center operators are beginning to get the message. Says LBNL scientist and former End-Use Forecasting Group leader Jonathan Koomey, “Many large companies are starting to make the institutional and technological changes needed to gain energy efficiencies in their data center facilities.” Among the helpful strategies he points to are virtualization and power, asset, and workload management initiatives.

Software companies such as Microsoft Corp. are also joining in the effort. According to Bill Laing, general manager of Windows Server 2008 development, the new Windows Server release with energy savings enabled could reduce energy consumption by as much as 20%.

Virtualizing data center operations could help as well, because it can potentially drive server utilization rates up from the typical 5 to 15% to 70 or 80%. The resulting consolidation can reduce power, cooling, and real estate costs. One of the most common software virtualization tools is Microsoft's Application Virtualization (App-V) platform. It lets applications be deployed in real time to any client from a virtual application server, which removes the need to install applications locally on client computers. Instead, only the App-V client sits on the client machines, and all application data is stored permanently on the virtual application server.
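Consolidation planning is often framed as a bin-packing problem: fit each server's observed load onto as few hosts as possible without pushing any host past a target utilization. The article doesn't describe a specific method; the sketch below uses the classic first-fit-decreasing heuristic, and the workload figures and 75% cap are hypothetical. A real plan would also have to account for memory, I/O, and workloads whose peaks coincide.

```python
from typing import List


def consolidate(loads: List[float], cap: float = 0.75) -> List[List[float]]:
    """First-fit decreasing: place each workload (a fraction of one host's
    capacity) on the first host that stays at or below `cap` utilization."""
    hosts: List[List[float]] = []
    for load in sorted(loads, reverse=True):
        if load > cap:
            raise ValueError(f"workload {load} exceeds the per-host cap {cap}")
        for host in hosts:
            if sum(host) + load <= cap:
                host.append(load)
                break
        else:
            hosts.append([load])  # no existing host has room, so open a new one
    return hosts


# Twenty hypothetical servers idling at 5 to 15% utilization
loads = [0.05, 0.10, 0.15, 0.08, 0.12] * 4
plan = consolidate(loads)
print(f"{len(loads)} servers -> {len(plan)} hosts")
for i, host in enumerate(plan):
    print(f"host {i}: {sum(host):.0%} utilized")
```

Sorting largest-first is what makes the simple first-fit rule pack tightly; here the combined load of 2.0 host-equivalents lands on three hosts, which is the minimum possible at a 75% cap.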

Similarly, Aperture Technologies Corp. offers Vista software packages for data center service management issues. These packages include tools for data center planning and management, operational control, monitoring and automation, and integrated information and delivery. They're designed to significantly lower data center operational costs and improve energy efficiency levels.

A Time For Action

As one might expect, IBM is among the IT service providers taking a hard look at energy use. According to Steve Sams, vice president for IBM's Global Site & Facilities Services, one IBM client has hundreds of data centers throughout the U.S. that consume as much energy as the entire state of Connecticut. “We found that 60% of a data center's energy use is consumed by the data center's infrastructure like cooling and power supplies,” he says. No wonder, then, that IBM recommends that companies tackle energy use in both their IT equipment and their physical infrastructure.

Sams says there has been progress in reducing this energy consumption and that many companies have paid more attention to it over the last couple of years. Initiatives such as ClimateSaversComputing.org and LessWatts.org already promote energy savings and ways to lower electric bills.

Through its “Smarter Planet” initiative, IBM is working with Syracuse University and the state of New York to build and operate a new computer data center on the university's campus. The data center will incorporate advanced infrastructure and smarter computing technologies to make it one of the most energy-efficient, or “greenest,” data centers in the world. It is expected to use 50% less energy than a typical data center today. The project is expected to be finished by the end of this year.

Efficiency efforts will focus on the infrastructure of the data center itself, not just the computer hardware and software. The $12.4 million, 6,000-ft² data center will feature its own trigeneration system, which produces electricity, heating, and cooling from a single fuel source. A key element will be on-site, natural-gas-fueled microturbine generators designed to generate all of the center's electricity and provide cooling for the computer servers. The site will also house IBM's latest energy-efficient computers and computer-cooling technology. The university will manage and analyze the performance of the center, as well as research and develop new data center energy efficiency analysis and modeling tools.

As with many server farm operators, IBM has employed virtualization and consolidation at its Lexington, Ky., data center, which was built in the 1980s. “We were at 98% of our capacity, so essentially the data center was full,” explains Boyd Novak, IBM's director of Americas Data Centers. “We needed to support our business growth but were constrained in every area. So we had to look at a number of improvements to meet our capacity growth. We didn't have to wait to build a new facility,” he adds.

Novak and his team found that more than 70% of the initial workloads of the Lexington data center's five major clients could move to a virtualized environment. Since the project began, Unix server utilization for mission-critical production workloads has improved tenfold, from 3 to 5% up to 30 to 50%. Today, only 12% of the center's servers are less than 5% utilized.

The Climate Savers Computing Initiative, a group co-founded by Google Inc., is also at work reducing energy consumption. Last year Google, as part of its Renewable Energy Cheaper than Coal (RE<C) initiative, created an internal engineering group dedicated to exploring clean energy.

Google, of course, has a tremendous number of data centers. By all accounts, each of its facilities draws tens of megawatts of power. So it is probably not surprising that the firm is keenly interested in efficiency and consumption. One of its initiatives is sponsoring the development of utility-scale solar power generation to make it more economical. Google green energy czar Bill Weihl says that its efforts to develop renewable energy, which would presumably be used to power its data centers, are making progress.

“We've been looking at unusual materials for the mirrors both for the reflective surface as well as the substrate that the mirror mounts on,” he says. “We're not there yet, but I'm hopeful we will have mirrors that are cheaper than what companies in the space are using.”

Weihl claims the results so far are quite promising. “In two to three years we could be demonstrating a significant scale pilot system that would generate a lot of power and would be clearly mass manufacturable at a levelized cost of electricity that would be in the 5 cents or sub 5 cents per kilowatt range,” he adds. Electricity at 5 cents per kilowatt-hour or less would be competitive with coal-fired generation.
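Levelized cost of electricity (LCOE) is the standard way to compare generating technologies on a per-kWh basis: a plant's discounted lifetime costs divided by its discounted lifetime output. The sketch below is a simplified version that assumes all capital is spent up front and that fuel costs nothing, as for solar; the plant inputs are made-up round numbers, not Google's figures.

```python
def lcoe(capital_cost, annual_om_cost, annual_energy_kwh, years, discount_rate):
    """Levelized cost of electricity in $/kWh.

    Discounted lifetime costs divided by discounted lifetime energy output.
    Capital is spent in year 0; operating costs and output occur in years
    1..years. Fuel cost is taken as zero, as for a solar plant.
    """
    if years < 1 or annual_energy_kwh <= 0:
        raise ValueError("need at least one year of positive output")
    discounted_costs = float(capital_cost)
    discounted_energy = 0.0
    for year in range(1, years + 1):
        factor = (1.0 + discount_rate) ** year
        discounted_costs += annual_om_cost / factor
        discounted_energy += annual_energy_kwh / factor
    return discounted_costs / discounted_energy


# Hypothetical plant: $100M to build, $2M/yr to run, 200 million kWh/yr,
# 25-year life, 7% discount rate
cost = lcoe(100e6, 2e6, 200e6, years=25, discount_rate=0.07)
print(f"LCOE: {cost * 100:.1f} cents/kWh")
```

These invented inputs land just above 5 cents/kWh; cutting capital cost, for example with cheaper mirrors, pulls the result down roughly in proportion, since capital dominates the discounted costs.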

Even so, Google claims its operations are already energy-efficient. It says a typical query (together with other work done before a search begins) consumes just 0.0003 kWh, or about 1 kJ, of energy. For comparison, the average adult needs about 8,000 kJ of energy a day from food. Put that way, a Google search uses about as much energy as a human body burns in 10 seconds.
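A quick unit check of Google's comparison, using only the figures quoted above. Before rounding, 0.0003 kWh is 1.08 kJ, which stretches the body-equivalent time to a little under 12 seconds; the 10-second figure comes from rounding to 1 kJ.

```python
KJ_PER_KWH = 3600.0                # 1 kWh = 3.6 MJ = 3,600 kJ
SECONDS_PER_DAY = 24 * 60 * 60     # 86,400 s

query_kj = 0.0003 * KJ_PER_KWH           # energy per search, in kJ (Google's figure)
body_kj_per_s = 8000 / SECONDS_PER_DAY   # average adult food energy, spread over a day

print(f"one search: {query_kj:.2f} kJ")
print(f"body-equivalent time (unrounded): {query_kj / body_kj_per_s:.1f} s")
print(f"body-equivalent time (1 kJ): {1.0 / body_kj_per_s:.1f} s")
```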

Semiconductor Technology: More bang for the kilowatt

There was a time when developers of computer processors viewed processing speed as trumping almost every other design consideration. Not anymore. Processor designers increasingly look for novel ways to reduce CPU power. For example, adding an event system and direct memory access (DMA) can greatly reduce the number of cycles a processor spends servicing interrupts, which leads to greater power savings.
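A rough cycle-count model shows why DMA helps. With purely interrupt-driven I/O, every byte costs an interrupt entry and exit plus the handler's work; with DMA, the controller moves the data and interrupts the CPU only once per block. All cycle counts below are hypothetical placeholders, not Atmel figures, and the actual power saving depends on how much of the freed time the CPU spends in a low-power sleep mode.

```python
def cpu_cycles_interrupt_driven(n_bytes, isr_overhead=40, per_byte_work=10):
    """CPU cycles to move n_bytes when every byte raises its own interrupt.
    isr_overhead and per_byte_work are hypothetical cycle counts."""
    return n_bytes * (isr_overhead + per_byte_work)


def cpu_cycles_with_dma(n_bytes, block_size=256, isr_overhead=40, completion_work=20):
    """CPU cycles when a DMA controller moves the data and the CPU is
    interrupted once per completed block (all counts hypothetical)."""
    blocks = -(-n_bytes // block_size)  # ceiling division
    return blocks * (isr_overhead + completion_work)


n = 4096
irq = cpu_cycles_interrupt_driven(n)
dma = cpu_cycles_with_dma(n)
print(f"interrupt-driven: {irq} cycles, DMA: {dma} cycles "
      f"({1 - dma / irq:.1%} fewer CPU cycles)")
```

The exact numbers matter less than the shape of the result: per-byte interrupt overhead grows with the data volume, while DMA overhead grows only with the number of blocks.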

For efficiency, chip maker Atmel Corp. recommends using an 8/16-bit single-cycle reduced-instruction-set computer (RISC) microcontroller unit (MCU) with an 8-channel event system and DMA to offload interrupt handling from the CPU. Atmel says that in applications handling a large number of interrupts, this lets designers build systems whose processors consume 90% less power.

The trend toward multi-core processing has energy implications as well. Intel and AMD have been major players in this arena. Intel's multi-core processors include the Xeon 5500 series, based on the Intel microarchitecture formerly code-named Nehalem. With Intel's Intelligent Power Node Manager technology, these processors draw 10 W while idling, half the amount used by previous-generation processors. The firm has demonstrated an eight-month payback in cost savings compared with previous-generation single-core processors. “Where you once had two to four processor cores, you now have 8 to 10 within the same thermal envelope,” says Todd Christ, a digital enterprise senior systems integration engineer at Intel.

Intel is active in energy savings at both the chip and systems levels. Its Advanced Cooling Environment (ACE) program has shown that energy savings in data center and server farm cooling can be as high as 90% when variable-speed fans replace the constant-speed fans used in the past.
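Why variable-speed fans can save so much follows from the fan affinity laws: airflow scales roughly linearly with fan speed, but the power needed scales roughly with the cube of speed. The sketch assumes ideal affinity-law behavior; real fans, motors, and drives deviate from it, so treat the results as an upper bound on savings.

```python
def fan_power_fraction(speed_fraction):
    """Ideal fan affinity law: shaft power scales with the cube of fan speed."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


for speed in (1.0, 0.8, 0.5):
    print(f"{speed:.0%} speed -> {fan_power_fraction(speed):.1%} of full-speed power")
```

Under this idealization, slowing a fan to half speed when cooling demand allows cuts its power to one-eighth, an 87.5% saving, which is why fans that track the actual load can approach the savings Intel reports.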

“We look at a data center's efficiency in three tiers,” says Allyson Klein, Intel's director of server technology marketing leadership. “We tackle energy savings at the processor-chip level via multi-core technologies; our Power Node Manager delivers only as much power as needed for the server or rack processor and I/Os; and our ACE program manages the overall data center environment, optimizing cooling and other equipment placement for energy savings.”

Intel is also integrating computing with storage as a means of cutting power demand. It recently showed off its Jasper Forest project, which should be available next year. The Jasper Forest 3420 chip set integrates a PCIe Gen 2.0 I/O port, DMA, non-transparent PCIe bridging, and hardware RAID acceleration functions. When used with Intel Xeon 5500 processors, it saves 27 W of system power.

Klein also points to the work Intel is doing with the T-Systems arm of Deutsche Telekom in Germany on a project called T-Lab. It involves a data center efficiency lab outside Munich dubbed Data Center 2020. The lab will focus on testing various cooling and design scenarios to analyze the factors affecting the total cost of data centers and is expected to serve as a blueprint for building the data center of the future.

The 750-ft² test laboratory is equipped with 180 servers in racks as well as the latest tools for measuring energy use and climate conditions. More than 50 sensors will measure air humidity, ambient temperature, the temperature difference between inlet and exhaust air, and processor load. The laboratory also has a smoke generator to visualize airflows. Intel supplies the servers, and Deutsche Telekom supplies the infrastructure to run them.
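The inlet-to-exhaust temperature difference matters because, for a given heat load, it determines how much air the cooling system has to move. From the sensible-heat relation P = ρ · c_p · Q · ΔT, a larger temperature rise means less airflow and therefore less fan energy. The air properties and the 10-kW rack below are assumed values for illustration.

```python
AIR_DENSITY_KG_M3 = 1.2   # approximate density of room-temperature air (assumed)
AIR_CP_J_KG_K = 1005.0    # approximate specific heat of air at constant pressure (assumed)
CFM_PER_M3_S = 2118.88    # 1 m^3/s is about 2,118.88 cubic feet per minute


def required_airflow_m3_s(heat_load_w, delta_t_k):
    """Volumetric airflow needed to remove heat_load_w with a given
    inlet-to-exhaust temperature rise (sensible-heat relation solved for Q)."""
    if delta_t_k <= 0:
        raise ValueError("exhaust air must be warmer than inlet air")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


RACK_LOAD_W = 10_000  # hypothetical 10-kW rack, for illustration only
for dt in (10.0, 15.0):
    q = required_airflow_m3_s(RACK_LOAD_W, dt)
    print(f"rise of {dt:.0f} K: {q:.2f} m^3/s (about {q * CFM_PER_M3_S:,.0f} CFM)")
```

Raising the temperature rise from 10 K to 15 K cuts the required airflow by a third, which is one reason labs like this one instrument both inlet and exhaust air.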

Finally, AMD is also boosting the number of processor cores available in a single package. The company has shown off a 12-core server processor code-named Magny-Cours (after a French Formula One Grand Prix racing circuit), which will be available next year. It boosts memory speed by a factor of 3.3 and provides 1.9 times the bandwidth of the HyperTransport bus. One of AMD's latest multi-core processors is the six-core Opteron Istanbul chip for servers. According to AMD, it delivers 30% more performance than the previous-generation quad-core processor while staying within the same power envelope of 55, 75, or 105 W, depending on the processor speed grade.

Useful resources

EPA Energy Star for computer servers, www.energystar.gov/ia/partners/product_specs/program_reqs/servers_prog_req.pdf

Lawrence Berkeley National Laboratory, Environmental Energy Technologies Div., http://eetd.lbl.gov/

Lineage Power Holdings, www.lineagepower.com/Landing.aspx

About the Author

Roger Allan

Roger Allan is a veteran electronics journalist who served as Electronic Design's Executive Editor for 15 years. He has covered just about every technology beat, from semiconductors, components, packaging, and power devices to communications, test and measurement, automotive electronics, robotics, medical electronics, military electronics, and industrial electronics. His specialties include MEMS and nanoelectronics technologies. He is a contributor to the McGraw-Hill Annual Encyclopedia of Science and Technology. He is also a Life Senior Member of the IEEE and holds a BSEE from New York University's School of Engineering and Science. Besides Electronic Design, Roger has worked for major electronics magazines including IEEE Spectrum, Electronics, EDN, Electronic Products, and the British New Scientist. He also has industry experience as a design engineer in filters, power supplies, and control systems.

Since his retirement from Electronic Design magazine, he has contributed extensively to Penton's Electronic Design, Power Electronics Technology, Energy Efficiency and Technology (EE&T), and Microwaves & RF magazines, covering all of the aforementioned electronics segments as well as energy efficiency, energy harvesting, and related technologies. He has also contributed articles to other electronics technology magazines worldwide.

He is a “jack of all trades and a master in leading-edge technologies” like MEMS, nanoelectronics, autonomous vehicles, artificial intelligence, military electronics, biometrics, implantable medical devices, and energy harvesting and related technologies.