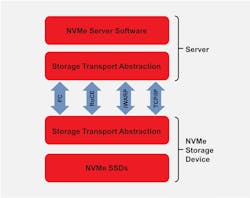

Non-Volatile Memory Express (NVMe) offers a streamlined way to deliver low-latency storage access, and it's being adopted by all major server and storage vendors (see figure). The architecture is replacing the Small Computer System Interface (SCSI) in modern solid-state drives (SSDs).

NVMe-enabled SSDs eliminate the bottlenecks inherent in traditional storage implementations, so their adoption is substantially improving access speeds for applications ranging from mobile platforms to enterprise data centers. This article answers critical questions about deploying and using NVMe-enabled SSDs at scale.

Why is NVMe Seeing Such Widespread Proliferation?

NVMe was designed from the ground up to communicate at high speed with flash storage, and it needs only 30 commands, all tailored to SSDs. The architecture also supports multiple deep command queues, which lets it exploit the parallel-processing capabilities of the latest multicore processors. With up to 64K commands per queue and up to 64K queues, NVMe is a major advance over traditional SCSI, SAS, and SATA technologies, which were originally developed for spinning hard-disk drives (HDDs).
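To put the queueing figures in perspective, the sketch below multiplies out the nominal limits quoted above and compares them with AHCI/SATA native command queuing (one queue of 32 commands). It also shows, under a hypothetical round-robin policy, how each CPU core can be given its own submission/completion queue pair. The class and function names are illustrative only, and the numbers are nominal upper bounds, not what any particular drive or driver actually configures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    name: str
    queues: int  # number of command queues the interface exposes
    depth: int   # commands each queue can hold

    def max_outstanding(self) -> int:
        # Nominal upper bound: every queue filled to its full depth.
        return self.queues * self.depth


# Nominal NVMe limits as quoted above (64K queues x 64K commands), versus
# AHCI/SATA native command queuing: a single queue of 32 commands.
NVME = QueueModel("NVMe", queues=64 * 1024, depth=64 * 1024)
AHCI = QueueModel("AHCI/SATA", queues=1, depth=32)


def assign_queues(num_cores: int, num_queues: int) -> dict[int, int]:
    """Map each CPU core to an I/O queue pair (hypothetical round-robin policy).

    When num_queues >= num_cores, every core gets a private submission/
    completion queue pair, so no two cores share the same queue.
    """
    return {core: core % num_queues for core in range(num_cores)}


if __name__ == "__main__":
    for model in (NVME, AHCI):
        print(f"{model.name}: {model.max_outstanding():,} commands in flight")
    print(assign_queues(num_cores=8, num_queues=8))
```

With these nominal values NVMe allows 4,294,967,296 commands in flight against 32 for AHCI. In practice, the benefit comes less from that headline number than from giving each core its own queue so that submissions don't serialize on a shared lock.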

Global sales of NVMe-based SSDs are now overtaking those of SAS and SATA SSDs [1]. This is driven by the dramatically improved performance that NVMe offers for both current and next-generation non-volatile storage technologies (such as 3D XPoint and non-volatile DIMMs, or NVDIMMs).

Why Do You Need to Consider NVMe-over-Fabrics?

NVMe was originally conceived to let central processing units (CPUs) access NVMe-based SSDs inside the server over the PCIe bus. However, as storage administrators know, local server storage creates major administration headaches, particularly the need to over-provision expensive SSD storage so that there's enough headroom to cope with any excess demand.

Different servers require different amounts of high-performance NVMe SSD storage depending on their application workloads. These applications can migrate to different physical servers but still require the same amount of SSD storage. To stop the practice of overpopulating every server with expensive SSD storage, it’s more economical and efficient to create a pool of shared NVMe SSD storage, which can be dynamically allocated depending on workloads.
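The economics of pooling can be shown with a small worked example. With local storage, each server must be provisioned for its own peak, so total capacity is the sum of per-server peaks. A shared pool only has to cover the peak of combined demand, which can never be larger and is usually smaller when servers peak at different times. The demand figures below are entirely hypothetical and serve only to illustrate the arithmetic.

```python
def per_server_capacity(demand_tb: list[list[float]], headroom: float = 1.0) -> float:
    """Local storage: each server is sized for its own peak demand."""
    per_server_peaks = [max(column) for column in zip(*demand_tb)]
    return sum(per_server_peaks) * headroom


def pooled_capacity(demand_tb: list[list[float]], headroom: float = 1.0) -> float:
    """Shared pool: sized for the peak of the combined demand across servers."""
    return max(sum(row) for row in demand_tb) * headroom


# Hypothetical demand in TB: rows are time periods, columns are servers.
# Each server peaks at a different time, e.g. as workloads migrate.
DEMAND_TB = [
    [4.0, 1.0, 2.0],
    [1.0, 5.0, 2.0],
    [2.0, 1.0, 6.0],
]

if __name__ == "__main__":
    local = per_server_capacity(DEMAND_TB)
    pooled = pooled_capacity(DEMAND_TB)
    print(f"local: {local} TB, pooled: {pooled} TB, saved: {local - pooled} TB")
```

Here local provisioning needs 15 TB while the pool needs 9 TB, and the same headroom factor scales both figures proportionally. Because the peak of a sum can never exceed the sum of the peaks, pooling never needs more capacity than per-server provisioning.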

With local storage, it's paramount that all data is backed up in case the server fails. Local storage also has serious security implications, and replication between sites can become difficult to manage. Shared storage lets administrators avoid these issues. In other words, CIOs can make full use of high-performance flash storage across servers, with all of the high-availability and security capabilities of modern storage arrays, while retaining performance and latency close to those of local NVMe SSD storage.

How Far Down the Road is NVMe-over-Fabrics?

To help explain where things stand, let's compare shared storage arrays to automobile engines. Traditional Fibre Channel (FC) and iSCSI shared storage arrays are like conventional internal-combustion engines: they have been used for many years, are reliable, and will remain a dependable means of transportation for a long time to come.

Hybrid cars are becoming more commonplace and combine some of the benefits of electric and gasoline power. Similarly, newer NVMe arrays use NVMe internally, but they connect to hosts via SCSI commands over FC or Ethernet transports.

Though most people agree that electric cars are the future, they aren't yet mainstream, both because of their cost relative to traditional alternatives and because charging infrastructure isn't yet broadly available. Native NVMe arrays are the storage equivalent of all-electric vehicles, and the infrastructure needed to make them a reality is NVMe-over-Fabrics. In time, it will become the dominant communication standard for connecting shared storage arrays to servers. Still, it will take time before NVMe-over-Fabrics is widely adopted and its teething problems are resolved.

What NVMe-over-Fabrics Option Should You Choose?

The biggest dilemma for storage administrators is deciding on the right technology to invest in. As with any emerging technology, there are multiple ways to implement the overall solution, and NVMe-over-Fabrics is no different: NVMe commands can be carried over FC, RDMA-enabled Ethernet, or standard Ethernet using TCP/IP. Let's look at the key differences between these approaches:
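The selection criteria discussed in the subsections below can be condensed into a rough decision sketch. The `Site` fields and the ordering of the checks are hypothetical, a rule of thumb distilled from this article's trade-offs rather than a vendor sizing tool; real decisions also weigh budget, existing skills, and array support.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    has_fc_san: bool         # a Fibre Channel SAN is already deployed
    lossless_ethernet: bool  # DCB/PFC is configured end to end
    max_hops: int            # switch hops between hosts and storage
    latency_critical: bool   # lowest latency is the overriding goal
    hci_or_sds: bool         # HCI or software-defined storage rather than arrays


def suggest_transport(site: Site) -> str:
    """Hypothetical rule of thumb based on the trade-offs in this article."""
    if site.has_fc_san:
        return "FC-NVMe"     # reuses the SAN; coexists with FCP on the fabric
    if site.latency_critical and site.lossless_ethernet and site.max_hops <= 2:
        return "NVMe/RoCE"   # lowest Ethernet latency on small lossless networks
    if site.hci_or_sds:
        return "NVMe/iWARP"  # RDMA over standard TCP/IP, suited to HCI/SDS
    return "NVMe/TCP"        # standard NICs and switches, no new hardware


if __name__ == "__main__":
    print(suggest_transport(Site(False, True, 2, True, False)))
```

The FC check comes first because, per the discussion below, an existing FC SAN can carry FC-NVMe alongside existing SCSI traffic, making it the lowest-disruption path for such sites.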

NVMe-over-FC (FC-NVMe)

FC-NVMe is a great choice for those who already have Fibre Channel storage area network (SAN) infrastructure in place. The NVMe protocol can be encapsulated in FC frames using 16GFC or 32GFC host bus adapters and switches, and Linux servers can gain FC-NVMe support by upgrading to the latest FC firmware and drivers. Investing in modern 16- or 32-Gb FC host bus adapters and SAN infrastructure therefore future-proofs the environment for FC-NVMe arrays when they're released. It's also worth noting that SCSI (FCP) and NVMe (FC-NVMe) can coexist on the same fabric, so legacy FC-SCSI arrays can run concurrently with new native NVMe arrays.
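Coexistence on one fabric works because every FC frame header carries an FC-4 TYPE field identifying the upper-layer protocol, so an adapter or target can steer FCP and FC-NVMe traffic arriving on the same port to different protocol stacks. The sketch below models only that dispatch step. The TYPE values (0x08 for FCP, 0x28 for FC-NVMe) reflect our understanding of the FC standards and should be verified against them; the function names are hypothetical.

```python
from collections import Counter
from enum import IntEnum


class Fc4Type(IntEnum):
    """FC-4 TYPE codes carried in the Fibre Channel frame header."""
    FCP = 0x08      # SCSI commands over FC (FCP)
    FC_NVME = 0x28  # NVMe commands over FC (FC-NVMe)


def route_frame(fc4_type: int) -> str:
    """Hand a frame to the protocol stack that owns its FC-4 TYPE."""
    try:
        kind = Fc4Type(fc4_type)
    except ValueError:
        return "unsupported"
    return "scsi" if kind is Fc4Type.FCP else "nvme"


def tally(frame_types: list[int]) -> Counter:
    """Count how a mixed frame stream on one port splits between protocols."""
    return Counter(route_frame(t) for t in frame_types)


if __name__ == "__main__":
    print(tally([0x08, 0x28, 0x28, 0x08, 0x28]))
```

Because the split happens per frame, neither protocol needs its own physical fabric, which is why legacy FC-SCSI arrays and native NVMe arrays can share the same SAN.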

NVMe-over-Ethernet Fabrics using RDMA (NVMe/RDMA)

This approach requires RDMA-capable Ethernet adapters. There are two different RDMA implementations: RDMA over Converged Ethernet (RoCE) and the Internet Wide-Area RDMA Protocol (iWARP). Unfortunately, the two aren't interoperable. Their respective benefits are:

- NVMe-over-RoCE (NVMe/RoCE): On Ethernet-only networks, NVMe-over-RoCE is a good choice for shared storage or hyper-converged infrastructure (HCI) connectivity, and many storage array vendors have announced plans to support it. RoCE provides the lowest Ethernet latency and works well when the storage network is small scale, with no more than two hops. As the name implies, RoCE requires a converged, or lossless, Ethernet network to function. That in turn requires additional network capabilities, such as data-center bridging (DCB) and priority flow control (PFC), which bring more complex setup and network management. If low latency is the overriding goal, NVMe-over-RoCE is likely to be the best option despite the added network complexity.

- NVMe-over-iWARP (NVMe/iWARP): The iWARP RDMA protocol runs over standard TCP/IP networks and is therefore much more straightforward to deploy. Although its latency isn't quite as low as RoCE's, its ease of use and much lower administration overhead are very appealing. At this stage, storage array vendors aren't designing arrays to support iWARP, so for the moment iWARP is best suited to software-defined or HCI solutions such as Microsoft Azure Stack HCI/Storage Spaces Direct (S2D).
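The "lossless" requirement for RoCE is what priority flow control provides: when a switch buffer for one traffic priority fills past a threshold, the switch asks the upstream sender to pause that priority only, instead of dropping frames. The toy model below illustrates that behavior. The buffer size, XOFF/XON thresholds, and the choice of priority 3 for RoCE traffic are all hypothetical values chosen for the example, not settings from any particular switch.

```python
from dataclasses import dataclass, field

NUM_PRIORITIES = 8  # PFC (IEEE 802.1Qbb) pauses each of eight priorities separately


@dataclass
class IngressPort:
    capacity: int = 6   # hypothetical per-priority buffer size, in frames
    xoff: int = 4       # hypothetical depth at which PAUSE is sent upstream
    xon: int = 2        # hypothetical depth at which the pause is lifted
    lossless: frozenset = frozenset({3})  # assumed priority carrying RoCE traffic
    depth: list = field(default_factory=lambda: [0] * NUM_PRIORITIES)
    paused: list = field(default_factory=lambda: [False] * NUM_PRIORITIES)
    dropped: list = field(default_factory=lambda: [0] * NUM_PRIORITIES)

    def receive(self, prio: int) -> None:
        if self.depth[prio] >= self.capacity:
            self.dropped[prio] += 1    # buffer full: the frame is discarded
            return
        self.depth[prio] += 1
        if prio in self.lossless and self.depth[prio] >= self.xoff:
            self.paused[prio] = True   # PAUSE this priority only

    def drain(self, prio: int, frames: int) -> None:
        self.depth[prio] = max(0, self.depth[prio] - frames)
        if self.paused[prio] and self.depth[prio] <= self.xon:
            self.paused[prio] = False  # resume this priority


def offer(port: IngressPort, prio: int, frames: int) -> int:
    """Upstream sender that honours PAUSE; returns frames it is still holding."""
    for sent in range(frames):
        if port.paused[prio]:
            return frames - sent
        port.receive(prio)
    return 0
```

Offering 10 frames on the lossless priority pauses the sender after 4 frames with nothing dropped, whereas 10 frames on an ordinary priority fill the 6-frame buffer and lose the remaining 4. Configuring these thresholds consistently on every switch and NIC is the extra network complexity the RoCE bullet refers to.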

NVMe-over-TCP (NVMe/TCP)

NVMe-over-TCP is the new kid on the block. Ratified in November 2018, it runs on existing Ethernet infrastructure with no changes necessary, taking advantage of the ubiquity of TCP/IP. NVMe-over-TCP may not be quite as fast as NVMe-over-RDMA or FC-NVMe, but it can easily be implemented on standard network interface cards (NICs) and network switches, so the key benefits of NVMe SSD storage can still be realized without significant hardware investment. Some NICs, such as the Marvell FastLinQ 10/25/50/100GbE adapters, have the potential to offload and accelerate NVMe/TCP by using their built-in TCP/IP stack offload.
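Part of why NVMe/TCP runs on ordinary NICs is that it simply frames NVMe commands as protocol data units (PDUs) inside a TCP byte stream. The sketch below builds a command-capsule PDU based on our reading of the NVMe/TCP transport specification: an 8-byte common header (PDU type, flags, header length HLEN, data offset PDO, total length PLEN, little-endian) followed by the 64-byte NVMe submission queue entry and any in-capsule data. It assumes header and data digests are disabled and no data-alignment padding was negotiated, and the function names are hypothetical; treat it as an illustration, not a conformant implementation.

```python
import struct

PDU_CAPSULE_CMD = 0x04  # command capsule PDU type, per our reading of the spec

CH_FORMAT = "<BBBBI"    # PDU type, FLAGS, HLEN, PDO, PLEN (little-endian)
CH_LEN = struct.calcsize(CH_FORMAT)  # 8-byte common header
SQE_LEN = 64            # an NVMe submission queue entry is 64 bytes


def capsule_cmd_pdu(sqe: bytes, data: bytes = b"") -> bytes:
    """Wrap one NVMe command (plus optional in-capsule data) in a PDU.

    Digests are off (FLAGS = 0) and no alignment padding is assumed.
    """
    if len(sqe) != SQE_LEN:
        raise ValueError("an NVMe command (SQE) must be exactly 64 bytes")
    hlen = CH_LEN + SQE_LEN    # header = common header + SQE = 72 bytes
    pdo = hlen if data else 0  # data, if any, starts right after the header
    plen = hlen + len(data)    # total PDU length on the wire
    header = struct.pack(CH_FORMAT, PDU_CAPSULE_CMD, 0, hlen, pdo, plen)
    return header + sqe + data


def parse_common_header(pdu: bytes) -> dict:
    """Decode the 8-byte common header at the start of a PDU."""
    pdu_type, flags, hlen, pdo, plen = struct.unpack_from(CH_FORMAT, pdu)
    return {"type": pdu_type, "flags": flags, "hlen": hlen, "pdo": pdo, "plen": plen}


if __name__ == "__main__":
    pdu = capsule_cmd_pdu(bytes(SQE_LEN), data=b"\x00" * 512)
    print(len(pdu), parse_common_header(pdu))
```

Everything here is ordinary byte-stream framing that the host CPU can handle. NIC-side TCP offload, as mentioned above, aims to take that per-packet work off the CPU.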

Summary

Whichever NVMe-over-Fabrics route you take, Marvell can assist with implementation and offers products for each, notably QLogic 16- and 32-Gb FC host bus adapters that support FC-NVMe. In addition, the company's FastLinQ 41000 and 45000 series 10/25/40/50/100Gb Ethernet NICs and converged network adapters support both NVMe-over-RoCE and NVMe-over-iWARP (thanks to their Universal RDMA feature), as well as NVMe-over-TCP.

Ian Sagan is a Field Applications Engineer at Marvell.

Reference

1. According to figures from Research and Markets https://www.prnewswire.com/news-releases/global-data-center-flash-storage-market-to-2024---nvme-flash-storage-to-replace-sassata-flash-solutions--emergence-of-qlc-nand-flash-drives-300854343.html

About the Author

Based in Swindon, U.K., Ian Sagan is a Field Applications Engineer for Marvell's EMEA operations. Having previously held commercial and technical roles with Brocade, QLogic, and Hewlett-Packard, Ian joined Marvell in 2018 following Marvell's acquisition of Cavium. He has more than 25 years of industry experience, most of it focused on enterprise storage and Ethernet connectivity. He studied Industrial Electronics at Hounslow College in London.