Image processing is demanding, in part because it normally involves processing every pixel in an image. With video streams, multiple frames must be processed. Determining what changes from one frame to the next is useful for detecting objects and other alterations, but doing so requires a lot of processing horsepower and bandwidth. This approach is needed because image-capture devices deliver a frame at a time.

But what if that wasn’t the only way to get image information?

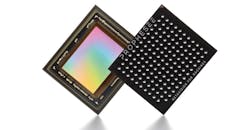

Prophesee’s Metavision image sensor (Fig. 1) takes a different approach: it’s an event-based sensor. Aim it at a solid-color image and it generates almost no data because the pixels aren’t changing. Move a hand in front of the background and the sensor will start sending a stream of events that indicate which pixels changed. In most scenes, the changing pixels are only a fraction of the overall number of pixels.
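The idea of reporting only the pixels that change can be sketched in software. The following is a minimal simulation, not Prophesee’s implementation: it assumes a common textbook model in which a pixel emits an event when its log intensity changes by more than a threshold, and the function name `events_between`, the `(x, y, t, polarity)` tuple layout, and the threshold value are all hypothetical choices made for illustration.

```python
import numpy as np

def events_between(prev, curr, threshold=0.2, t=0.0):
    """Return (x, y, t, polarity) tuples for pixels whose log intensity
    changed by at least `threshold` between two frames.

    This is an illustrative model only; the real sensor does this per
    pixel in hardware and never produces full frames.
    """
    eps = 1e-6  # avoids log(0) for fully dark pixels
    delta = np.log(curr + eps) - np.log(prev + eps)
    ys, xs = np.nonzero(np.abs(delta) >= threshold)
    return [(int(x), int(y), t, 1 if delta[y, x] > 0 else -1)
            for x, y in zip(xs, ys)]

height, width = 480, 640
background = np.full((height, width), 0.5)  # solid-color scene

# A static scene: nothing changes, so no events are generated.
static_events = events_between(background, background.copy())

# A brighter 40 x 60 pixel object appears in front of the background.
moved = background.copy()
moved[200:240, 300:360] = 0.9
moving_events = events_between(background, moved, t=0.001)

print(len(static_events), "events for the static scene")
print(len(moving_events), "events out of", height * width, "pixels")
```

In this toy scene, only the pixels covered by the object produce events, which is why the output stream is so much smaller than a full frame.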

The sensor works with a programmable threshold for each pixel, which gives the system a wide dynamic range of over 120 dB. This is significantly higher than most image sensors. As a result, the sensor can detect changes in scenes that combine very bright and very dark areas, which would cause problems for a conventional sensor.

For example, sun glare or low light can be a problem because the typical image sensor has a more limited range for each pixel. The sensor can be set up for a low-light situation, but then it would deliver a maximum white value if a bright light is in the scene. A typical color sensor may report RGB values, but with only 8 or 16 bits per pixel.
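To put the 120-dB figure next to the bit depths mentioned above, the short calculation below converts between decibels and intensity ratios using the usual 20·log10 convention for image sensors. It is a rough comparison only: it assumes an ideal linear pixel whose range is set purely by its bit depth, which real sensors do not match exactly, and the helper names are hypothetical.

```python
import math

def bits_to_db(bits):
    """Dynamic range in dB of an ideal linear pixel with `bits` of depth."""
    return 20 * math.log10(2 ** bits)

def db_to_ratio(db):
    """Brightest-to-darkest intensity ratio for a dynamic range in dB."""
    return 10 ** (db / 20)

for bits in (8, 16):
    print(f"{bits}-bit linear pixel: about {bits_to_db(bits):.1f} dB")

print(f"120 dB corresponds to a ratio of about {db_to_ratio(120):,.0f}:1")
```

Under these assumptions, an 8-bit pixel covers roughly 48 dB and a 16-bit pixel roughly 96 dB, while 120 dB corresponds to an intensity ratio of about a million to one.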

Figure 2 highlights the area where data would be generated by the Metavision sensor. In this case, the video is of a person swinging a golf club. The highlighted area is where pixels are changing.

There’s a downside to the sensor: it doesn’t report the color of each pixel, only the changes. An application may combine a conventional image sensor with the Metavision sensor if this type of information is needed, but the Metavision sensor changes the way your application analyzes video. The application may only be interested in following the changes in an image.

For instance, an application may be tracking a hand gesture. It doesn’t matter whether a person is wearing a blue glove or not. The application simply wants to recognize the gesture and the Metavision sensor can provide that information more economically.

Frame-Based vs. Event-Based

Figure 3 highlights the difference between a frame-based imaging system and an event-based system. Imagine that there’s a rotating disk with a dot on the periphery. The spiral is a mapping of the blue dot’s position over time. Orange and red dots highlight the blue dot’s position at particular points in time. The frames to the right show how a conventional imaging system would report the data, although the dot itself is all we’re concerned with. The circles and other dots are there to provide perspective.

The frame-based system would essentially show the dot jumping from one point to another. A sufficiently high frame rate would reveal the rotating nature of the system. Essentially, we have a Nyquist sampling issue: the frame rate must be more than twice the rotation rate, or the motion will alias.
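The sampling issue can be illustrated with a few lines of arithmetic. The sketch below computes how far the dot appears to move between consecutive frames, assuming a viewer (or algorithm) always picks the shortest rotation that explains the two positions. The function name and the 10-revolutions-per-second disk speed are hypothetical values chosen for illustration.

```python
def apparent_step_deg(rev_per_s, fps):
    """Apparent rotation between consecutive frames, in degrees.

    The true step is wrapped into the range [-180, 180), which is what
    an observer sees when assuming the shortest possible rotation.
    """
    true_step = 360.0 * rev_per_s / fps
    return (true_step + 180.0) % 360.0 - 180.0

disk_speed = 10  # revolutions per second (illustrative value)

for fps in (100, 12, 10):
    step = apparent_step_deg(disk_speed, fps)
    print(f"{fps:>3} fps: dot appears to move {step:+.1f} degrees per frame")
```

At 100 frames/s, the dot advances 36 degrees per frame and the rotation is clear. At 12 frames/s, it appears to rotate backward by 60 degrees per frame, and at 10 frames/s it appears to stand still.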

Of course, a sufficiently fast frame-based solution will provide enough information for the video to be analyzed. However, this also means that a lot of data must be processed. If a machine-learning algorithm is being applied, then even more processing power is necessary.

The event-based system would deliver significantly less data even though it could easily track the rotation. In fact, a microcontroller could easily handle the amount of data from this type of imaging system, even with a more complex scene and scenario. Of course, it could be overwhelmed by massive changes with a multitude of changing light conditions, reflections, etc., but that would be unusual in most cases.
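A back-of-the-envelope comparison shows why the data volumes differ so much. Every number below other than the 640 × 480 resolution is an assumption made for illustration: the 1000-frame/s rate, the one byte per pixel, the eight bytes per event, and the 100,000 events/s are placeholders, not Prophesee specifications or measurements.

```python
def frame_bytes_per_s(width, height, fps, bytes_per_pixel=1):
    """Raw data rate of a frame-based sensor that sends every pixel."""
    return width * height * fps * bytes_per_pixel

def event_bytes_per_s(events_per_s, bytes_per_event=8):
    """Raw data rate of an event stream (event size is an assumption)."""
    return events_per_s * bytes_per_event

# Hypothetical high-speed frame camera at the sensor's resolution.
frame_rate = frame_bytes_per_s(640, 480, fps=1000)

# Hypothetical event rate for a small moving object in a static scene.
event_rate = event_bytes_per_s(events_per_s=100_000)

print(f"frame-based: {frame_rate / 1e6:.1f} MB/s")
print(f"event-based: {event_rate / 1e6:.1f} MB/s")
print(f"ratio: {frame_rate / event_rate:.0f}x")
```

The ratio depends entirely on how much of the scene is changing, so a busy scene would narrow the gap considerably.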

Likewise, many applications have a more controlled environment, or the areas within the scene may be partitioned or managed in some fashion. The threshold that the Metavision sensor has for each pixel can be adjusted as well, allowing areas to essentially be ignored.
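The effect of ignoring part of a scene can be mimicked in software with a per-pixel threshold map. This is only an analogy for the behavior described above: it does not show how the sensor’s thresholds are actually configured, and the function name, the threshold values, and the use of an infinite threshold to disable a region are all assumptions made for illustration.

```python
import numpy as np

def changed_pixels(prev, curr, threshold_map):
    """Boolean mask of pixels whose log-intensity change reaches the
    threshold set for that pixel."""
    delta = np.abs(np.log(curr) - np.log(prev))
    return delta >= threshold_map

height, width = 480, 640

# Default threshold everywhere, but an infinite threshold on the left
# half of the image so that nothing there can ever trigger.
thresholds = np.full((height, width), 0.2)
thresholds[:, :320] = np.inf

prev = np.full((height, width), 0.5)
curr = prev.copy()
curr[100:110, 100:110] = 0.9  # change inside the ignored region
curr[100:110, 400:410] = 0.9  # change inside the active region

mask = changed_pixels(prev, curr, thresholds)
print(int(mask.sum()), "pixels reported")
```

Both regions change by the same amount, but only the 100 pixels in the active half are reported.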

Prophesee is now delivering its sensor. “This is a major milestone for Prophesee and underscores the progress in commercializing our pioneering Event-Based Vision sensing technology. After several years of testing and prototyping, we can now offer product developers an off-the-shelf means to take advantage of the benefits of our machine-vision inventions that move the industry out of the traditional frame-based paradigm for image capture,” said Luca Verre, co-founder and CEO of Prophesee.

Frame-based video processing remains a useful paradigm. However, event-based video processing opens up a whole new area, potentially giving very-low-end platforms the ability to handle image-processing chores that they couldn’t handle if a frame-based input stream were used.

Development hardware and software available from Prophesee give developers the ability to implement the Metavision sensor right away. The current sensor has a 640-×-480 resolution with a 15-µm pixel size in a 0.75-in. format. It has a 0.04-lux low-light cutoff and under 1-mHz background noise activity. The sensor comes in a 13- × 15-mm PBGA package.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.