What you’ll learn:

- What’s driving the expanding landscape for machine vision?

- The role of low-power connectivity in advancing vision technology.

- Color and event-triggered image capture.

Machine-vision systems have been used in industrial processes for some time. They’re often used to monitor products as they move around a facility on conveyor belts, for example. Relatively simple pattern recognition extended the capability of these machine-vision systems, but the introduction of artificial intelligence (AI) and machine learning is helping machine vision move into new applications.

An industrial production line is relatively uniform, making it easier for machine-vision systems to identify anything that falls outside a well-defined envelope. In the real world, things aren’t so uniform, and this is where the power of AI and machine learning will bring real benefits. An AI-enabled machine-vision system will be able to identify much more than a missing product or stalled conveyor belt.

These systems will pervade all verticals and augment multiple roles. Maintenance is one area where AI will support engineers servicing complex machinery. Other sectors, such as farming, will use machine vision to remotely monitor the health of crops and use this information to adjust irrigation cycles, ventilation, and more.

There’s significant growth in the commercial availability of cloud platforms that provide the AI backend for these applications. Lower costs are widening access to these platforms, while higher accuracy is driving demand. This is creating a virtuous cycle of investment that will make it simpler to connect machine-vision solutions to AI cloud platforms. Machine vision can now be used for far more than monitoring production lines, and its adoption is expected to drive a new evolutionary step in the IoT.

Ultra-Low-Power Sensing

Part of the key to this growth will be the availability of ultra-low-power sensor solutions. These new applications will require an “always-on” presence, ready to capture images whenever needed. This will push the demand for solutions that combine high performance with low power.

Connectivity also will be critical, of course. With the heavy lifting of AI analysis being carried out in the cloud, the sensor platforms can be smaller and more power-efficient. However, they will need to include high-speed and reliable connectivity for the backhaul. This is where the ultra-low-power credentials of the Bluetooth Low Energy (Bluetooth LE) wireless protocol play an important and enabling role.

Adding Bluetooth LE technology to a sensor platform provides a simple yet comprehensive method of wirelessly connecting a machine-vision sensor platform to cloud services. Through an IoT gateway, or even a smartphone, the sensor platform can transfer image data and other sensor information to a cloud platform, where it can be analyzed using AI. Actions can then be sent back to the sensor platform or to another connected smart actuator.
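Because a single image is far larger than one Bluetooth LE packet, the sensor platform has to split it into many small payloads that the gateway or smartphone reassembles before uploading. The following is a minimal sketch of that idea, not the RSL10 Smart Shot protocol: the framing (a 2-byte sequence number plus a 1-byte "last chunk" flag) is hypothetical, and the 244-byte payload assumes a negotiated 247-byte ATT MTU minus the 3-byte notification header.

```python
import struct
from typing import Iterable, List

# Assumed usable notification payload: 247-byte ATT MTU minus 3-byte ATT header.
PAYLOAD_SIZE = 244
# Hypothetical framing header: uint16 sequence number + uint8 flags (bit 0 = last chunk).
HEADER = struct.Struct("<HB")
LAST_CHUNK = 0x01


def chunk_image(image: bytes, payload_size: int = PAYLOAD_SIZE) -> List[bytes]:
    """Sensor side: split an image into framed packets that each fit one notification."""
    data_size = payload_size - HEADER.size
    if data_size <= 0:
        raise ValueError("payload_size too small for header")
    # An empty image still produces one (empty) final packet.
    chunks = [image[i:i + data_size] for i in range(0, len(image), data_size)] or [b""]
    if len(chunks) > 0x10000:
        raise ValueError("image too large for a 16-bit sequence number")
    packets = []
    for seq, body in enumerate(chunks):
        flags = LAST_CHUNK if seq == len(chunks) - 1 else 0
        packets.append(HEADER.pack(seq, flags) + body)
    return packets


def reassemble(packets: Iterable[bytes]) -> bytes:
    """Gateway side: order packets by sequence number and rebuild the image."""
    parts = {}
    saw_last = False
    for pkt in packets:
        seq, flags = HEADER.unpack_from(pkt)
        parts[seq] = pkt[HEADER.size:]
        saw_last = saw_last or bool(flags & LAST_CHUNK)
    # Reject the image if the final packet or any sequence number is missing.
    if not saw_last or sorted(parts) != list(range(len(parts))):
        raise ValueError("missing packets")
    return b"".join(parts[i] for i in range(len(parts)))
```

Carrying a sequence number lets the gateway tolerate out-of-order delivery and detect gaps, so it can request a retransmission (not shown) instead of uploading a corrupted image.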

Image Capture in Color

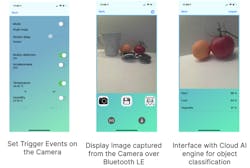

Although machine-vision systems are capable of using simple monochrome images to identify basic objects, color images convey a lot more information (Fig. 1).

When AI is employed to analyze scenes, color becomes even more relevant. Adding color to machine vision will bring a new dimension to automatic recognition in the emerging applications mentioned earlier. Color provides greater contrast and better differentiation between objects in a scene. AI systems are now able to exploit these features to provide better accuracy.

Moving to color makes managing the overall power budget even more important, especially for a battery-powered, always-on device. This might include devices expected to operate for five years or more from a single coin cell. In terms of system power, the most critical elements are the image sensor, the control system, and the communications interface.
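As a rough worked example, the battery capacity $C$ and the target lifetime $T$ set a ceiling on the average current the whole device may draw. Assuming a CR2032 coin cell with a nominal capacity of about 225 mAh (datasheet values vary, and self-discharge and pulse-load derating are ignored here), a five-year target gives:

$$
I_{\text{avg}} \le \frac{C}{T} = \frac{225\ \text{mAh}}{5 \times 8760\ \text{h}} = \frac{225\ \text{mAh}}{43{,}800\ \text{h}} \approx 5.1\ \mu\text{A}
$$

That few-microamp average has to cover sensing, image capture, processing, and radio activity, which is why every block in the signal chain must spend nearly all of its time in a low-power state.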

The RSL10 Smart Shot Camera was developed to provide engineers with access to a complete low-power image-capture platform that uses Bluetooth LE for connectivity (Fig. 2). The latest version of the platform adds support for color image capture using a camera module based on the ARX3A0 CMOS image sensor. The module conforms to the onsemi Image Access System (IAS) design format, which uses standardized connector and layout configurations to make the modules interchangeable.

Besides supporting the color version of the ARX3A0, the new platform is smaller and further optimized for power. After evaluating the system’s performance, customers can use the design files, including software, to develop their own smart machine-vision IoT sensor.

Power management is important to ensure longevity when operating from a battery. The system employs a dedicated FAN53880 power-management IC (PMIC) on the color camera as well as hardware-based smart power-management modes. During continuous image capture, the color camera consumes just 136.3 mW. While waiting for a trigger event, consumption drops to 88.77 μW, and in power-down mode just 30.36 μW is consumed.

As a result, when capturing one image per day, the color camera platform can operate for over 11 years using a single 2,000-mAh battery.
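How long a battery lasts depends on how long each capture takes and on which low-power mode the platform sits in between captures. The sketch below estimates lifetime from the quoted power figures. The 3.0-V nominal cell voltage, the 2-second capture window, and the lossless battery model are assumptions for illustration only, so the output is not expected to reproduce onsemi’s 11-year figure, which reflects the real duty cycle, conversion efficiency, and battery derating.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365.25

# Power figures quoted for the color camera platform.
CAPTURE_W = 136.3e-3       # continuous image capture
TRIGGER_WAIT_W = 88.77e-6  # waiting for a trigger event
POWER_DOWN_W = 30.36e-6    # power-down mode


@dataclass
class Battery:
    capacity_mah: float
    voltage_v: float  # assumed nominal voltage; real cells sag with load and age

    @property
    def energy_j(self) -> float:
        # mAh -> Ah (divide by 1000) -> A*s (x 3600) -> J (x volts)
        return self.capacity_mah / 1000 * 3600 * self.voltage_v


def lifetime_years(battery: Battery, idle_w: float,
                   captures_per_day: float = 1, capture_s: float = 2.0) -> float:
    """Ideal lifetime: ignores self-discharge, regulator losses, and derating."""
    active_s = captures_per_day * capture_s
    if active_s > SECONDS_PER_DAY:
        raise ValueError("duty cycle exceeds one day")
    # Energy per day = capture energy + idle energy for the rest of the day.
    daily_j = CAPTURE_W * active_s + idle_w * (SECONDS_PER_DAY - active_s)
    return battery.energy_j / daily_j / DAYS_PER_YEAR


cell = Battery(capacity_mah=2000, voltage_v=3.0)  # assumed 3.0-V, 2,000-mAh cell
print(f"trigger-wait idle: {lifetime_years(cell, TRIGGER_WAIT_W):.1f} years")
print(f"power-down idle:   {lifetime_years(cell, POWER_DOWN_W):.1f} years")
```

Under these assumptions the script prints about 7.4 years when the platform idles in trigger-wait mode and about 20.4 years when it idles in power-down mode. With one capture per day, the idle power dominates the energy budget, which is why the low-power modes matter more than the 136.3-mW capture figure.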

Event-Triggered Machine Vision

The camera has been designed to provide event-triggered image capture. This means that, rather than streaming image data constantly, it captures images based on predefined events. The conditions for these events are monitored by sensors integrated into the camera platform.

The conditions that can be monitored with these sensors include motion, temperature, time, humidity, and acceleration. Developers can use the sensors’ outputs to create complex conditions for events. If these conditions are met, the camera triggers an image capture. The captured image is then transferred over Bluetooth LE to the smartphone or gateway.
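A minimal sketch of how several sensor outputs might be combined into one trigger condition is shown below. The `Readings` fields, the thresholds, and the `all_of`/`any_of` helpers are hypothetical and exist only to illustrate composing simple predicates into a complex event; they are not the RSL10 Smart Shot firmware API, which runs on the embedded RSL10 rather than in Python.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Readings:
    """Hypothetical snapshot of the on-board sensor outputs."""
    motion: bool
    temperature_c: float
    humidity_pct: float
    accel_g: float
    hour: int  # local time of day, 0-23


Condition = Callable[[Readings], bool]


def all_of(*conds: Condition) -> Condition:
    """Event fires only if every sub-condition holds."""
    return lambda r: all(c(r) for c in conds)


def any_of(*conds: Condition) -> Condition:
    """Event fires if at least one sub-condition holds."""
    return lambda r: any(c(r) for c in conds)


# Leaf conditions with hypothetical thresholds.
def working_hours(r: Readings) -> bool:
    return 7 <= r.hour < 19


def motion_seen(r: Readings) -> bool:
    return r.motion


def knocked(r: Readings) -> bool:
    return r.accel_g > 2.0


def hot_and_humid(r: Readings) -> bool:
    return r.temperature_c > 35 and r.humidity_pct > 80


# Capture on motion during working hours, or whenever the unit is knocked
# or the environment becomes hot and humid.
should_capture = any_of(all_of(motion_seen, working_hours), knocked, hot_and_humid)
```

For example, motion detected at 02:00 with normal temperature, humidity, and acceleration would not trigger a capture, while the same motion at 10:00 would. Keeping the rule as data-like composition makes it easy to change thresholds without rewriting the capture logic.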

Although the move to color image capture means transferring more data over Bluetooth LE, onsemi engineers managed to accomplish the task with barely any increase in system power requirements. Part of the key to this is the RSL10 SIP, an ultra-low-power system-in-package (SiP). This small SiP acts as the hub of the entire system, controlling the image sensor processor, driving the environmental sensors, and managing the Bluetooth LE communications.

Interfacing to Cloud AI Platforms

onsemi provides a custom mobile app for Android and iOS (Fig. 3) that supports Amazon Rekognition through a connected smartphone and a valid AWS account. Once the AWS account is linked to the RSL10 Smart Shot mobile app, images can be uploaded and analyzed. After analysis is complete, Amazon Rekognition returns a list of the objects recognized in the image, each with a confidence score expressed as a percentage.
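The app performs this step for the user. For readers who want to script the same analysis, here is a minimal sketch using the `detect_labels` call in the AWS SDK for Python (boto3); it is not the code inside the onsemi app. The region, thresholds, and function names are assumptions, and the Rekognition call needs boto3 installed plus configured AWS credentials. The parsing helper works on the response dictionary alone, where each entry in `Labels` carries a `Name` and a `Confidence` value from 0 to 100.

```python
from typing import Any, Dict, List, Tuple


def labels_from_response(response: Dict[str, Any],
                         min_confidence: float = 0.0) -> List[Tuple[str, float]]:
    """Return (label, confidence %) pairs from a DetectLabels response, best first."""
    labels = [(lab["Name"], lab["Confidence"]) for lab in response.get("Labels", [])
              if lab["Confidence"] >= min_confidence]
    return sorted(labels, key=lambda item: item[1], reverse=True)


def detect_labels(image_path: str, region: str = "us-east-1",
                  max_labels: int = 10,
                  min_confidence: float = 70.0) -> List[Tuple[str, float]]:
    """Send a captured image to Amazon Rekognition and return its labels."""
    import boto3  # imported here so the parsing helper works without the AWS SDK

    client = boto3.client("rekognition", region_name=region)
    with open(image_path, "rb") as f:
        response = client.detect_labels(
            Image={"Bytes": f.read()},
            MaxLabels=max_labels,
            MinConfidence=min_confidence,
        )
    return labels_from_response(response)
```

Calling `detect_labels("capture.jpg")`, where the file name is a placeholder for an image received from the camera, returns the recognized objects sorted by confidence, ready to drive a downstream action.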

In addition, onsemi has collaborated with Avnet to integrate the RSL10 Smart Shot Camera with the IoTConnect Platform, a cloud-based solution powered by Microsoft Azure (Fig. 4). Designed to remove as much complexity as possible from the IoT design process, IoTConnect links information from the camera platform to the cloud so that the data can be interpreted and manipulated and machine learning can be applied.

Customers can then take this recipe and customize it for their own proof of value (POV) wherever vision is required, such as detecting objects, reading analog meters, or checking inventory levels. This lets them validate IoT projects more quickly and get to market faster.

Conclusion

Vision sensing is an exciting technology that has applications in many areas, including factory automation and agriculture to name just two. While the benefit of having an “inspector” that can never become bored or tired or make a mistake is significant, the technology really comes into its own when AI and machine learning are added to the mix.

About the Author

Guy Nicholson

Senior Director, Industrial and Commercial Sensing Division, onsemi

Guy Nicholson is the marketing director of the Industrial and Consumer Solutions Division within the Image Sensor Group (ISG) at onsemi.

Guy has over 30 years of experience in the semiconductor industry. In his current role, he drives the strategy, product definition, ecosystem, and customer project plans for the Industrial and Commercial Solutions Division of the CMOS IMG.

Previously, Guy worked at Texas Instruments and National Semiconductor for 28 years in various product marketing and product line management roles. His work there included the development of the first FPD-Link LVDS solutions for automotive and industrial applications, the initiation of Bus LVDS standardization, MIPI’s initial DSI/CSI solutions, and the first iPhone LTPS display driver plus serializer solution. He was also responsible for the market development of emerging innovations in the mobile market, including optical image stabilization (OIS) and ultra-low-power voice wake-up solutions.

Guy has a BSc degree in physics from Imperial College London.