What you’ll learn:

- What is Spatial AI?

- Detailed descriptions of different Spatial AI models.

Why not just say “AI”? Why “Spatial AI”? The answer is very simple: True perception requires a sense of space. We don’t work with AI in the abstract or confined to a computer. Rather, we work with it to provide vision to devices, like robots, that would otherwise have none. And for that vision to have any meaning, it must be given context.

It would be simple enough to hook up a video feed to a robot and call it a day, but that’s not sufficient for the kind of functionality we need. A video feed alone won’t allow a robot to derive meaning from the world around it. What we want is for this new input to allow our robot to engage, recognize, and learn about the information provided by this new sense. And the first step in that process is spatial.

First, a brief interlude to define DepthAI. DepthAI is an application programming interface (API) developed by Luxonis to facilitate use of its OAK cameras, and, consequently, Spatial AI. DepthAI is a comprehensive ecosystem that includes numerous repositories, tools, and documentation that form the foundation of robotic vision from Luxonis. In other words, DepthAI is the software interface that maximizes the capabilities of hardware and firmware.

As we proceed to discuss all of the various functionalities of Spatial AI as a concept, know that the DepthAI API running on OAK cameras is making it happen.

Detection and Inference

What is an object? Does that object have a name? How far away is that object from us? These are all questions people can answer long before we have the words to do so. These kinds of spatial understandings are just innate parts of the human experience. But not so for robots—it’s up to us to define what’s significant for them.

One of the basic building blocks of Spatial AI is object detection. With this technique, targeted areas of interest called “bounding boxes” are placed around objects so that robots can understand where they are in physical space.

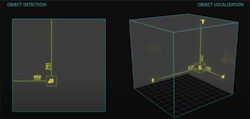

By running inference on the color and/or mono frames, a trained detector places the bounding box around the object’s pixel space, and assigns X (horizontal) and Y (vertical) coordinates to the object (Fig. 1). This 2D version of detection is a basic but essential first step of Spatial AI.

1. This demonstrates how AI models find the bounding box of an object of interest in an image’s pixel space, otherwise known as object detection.

Landmarks and Depth

But 2D object detection is relatively standard. To achieve true Spatial AI we need to go 3D, which means we need to sense depth as well. Object detection in 3D, also referred to as “object localization,” thus requires a third, “Z” dimension, allowing a robot to not only know where something exists on a flat plane (or screen), but also how close or how far away it is (Fig. 2).

2. With a third “Z” dimension, object localization is possible.

Once depth is added—once the full spatial location of objects is known—it opens up a whole new world. With this complete form of Spatial AI, many additional techniques come into play, enabling robots to classify and categorize objects and then employ that new understanding to tackle a wide range of use cases.

That brings us to 3D landmark detection. Landmark detection is the method of applying object localization to a pre-defined (trained) model. For example, landmark detection could be applied to models of human pose estimation or human hand estimation (Fig. 3). In these cases, the on-device neural network identifies the key structures and joints that make up the human body or human hand, respectively, assigns them coordinates, and links them together, as seen in the following example.

3. This visualization illustrates the difference between object detection and 3D object localization.

By creating this association between landmarks, more complex inference can be done. This includes performing a visual search to detect pedestrians walking across the street or reading the movements of someone’s hands to interpret American Sign Language (Fig. 4).

4. Shown here is hand landmark with X, Y, and Z coordinates.

Semantic Depth

Spatial AI also can be applied in a broader sense. Instead of picking out specific landmarks, it could be used to identify areas of coverage. This is a process known as “segmentation,” and takes two main forms: semantic segmentation and instance segmentation.

In both cases, segmentation happens by first training the system to recognize objects in a specified group and objects outside of that group. Objects in the specified group are identified by shading/coloring each of their pixels, while objects outside the group are shaded/colored differently to classify them as excluded (Fig. 5).

5. The Spatial AI model shown has identified an area of coverage, also known as segmentation.

The difference between semantic segmentation and instance segmentation is that, for semantic segmentation, all objects of the included group are considered the same. For example, a pile of apples on a table would all be assigned the same color and seen collectively as “apples.” If we instead wanted an instance segmentation of our apples, each one would be assigned a unique color to be able to differentiate between “apple 1” and “apple 2.” Instance segmentation is essentially a combination of object detection and semantic segmentation.

One of the primary use cases for segmentation comes in the form of navigation and obstacle avoidance. For AI to successfully take a vehicle from point A to point B, for example, it must be able to identify every object around it as well as understand which of those objects are safe and which must be avoided.

Taking the case of an automatic car, it first must be able to see both the road and lane lines so that it doesn’t create a hazard for other drivers. These would be semantically segmented as permitted areas. Secondly, it must be able to see other vehicles and any other obstacles that could result in a collision or damage. It may be beneficial in these cases to perform instance segmentation to better track independent positions and velocities, while still universally recognizing these objects as hazards.

Next Steps

While the development of Spatial AI and its various functionalities can be complicated, the takeaway is simple. Before anything else, robots need to be able to identify an object and understand where it is in space. From there, they can take on more nuanced tasks by adding layers of meaning or associations between those objects.

As we speak, Spatial AI—along with OAK cameras and the DepthAI API—is being utilized all over the world. Agricultural businesses are using it in tandem with unmanned drones to identify weeds and reduce the use of pesticides; industrial and construction businesses are applying it to improve employee safety by ensuring protective gear is worn and vehicles are operated responsibly; and doctors are using it to monitor newborns to ensure essential vital functions like breath and heart rate are at appropriate levels.

Spatial AI is the key to those applications and so much more. It helps provide the eyes and the brain for robots, and allows them to do jobs that people don’t want to do, or help people to do their jobs better and more safely. As robots increasingly integrate into our everyday lives, they will do so in large part thanks to the Spatial AI at their core.

About the Author

Brandon Gilles

CEO, Luxonis

As CEO of Luxonis, Brandon Gilles’ goal is to create groundbreaking products that not only advance robotic vision but make the technology accessible for anyone to use. A system engineering expert with over 20 years of experience, Brandon previously worked as a director at UniFi before starting Luxonis.

Through his determination and entrepreneurial drive, Luxonis is now the #1 robotic perception platform in the U.S., an achievement capped by winning back-to-back Edge AI and Vision Alliance Product of the Year Awards in 2021 and 2022 for the OAK-D and OAK-D-Lite cameras.