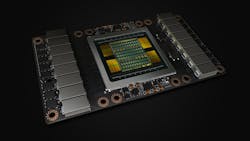

NVIDIA’s Tesla V100 (Fig. 1) is now available with 32 GB of high-bandwidth memory (HBM2), double the original 16 GB. The extra memory lets it handle even larger applications and improves performance by as much as 50% compared with the original version. The new version will be available in systems from Cray, Hewlett Packard Enterprise, IBM, Lenovo, Supermicro, and Tyan, and Oracle Cloud Infrastructure plans to roll it out later this year.

1. NVIDIA’s Tesla V100 has six NVLink ports that can now be connected through the company’s NVSwitch, which provides crossbar connections among 16 GPUs.

“We evaluated DGX-1 with the new Tesla V100 32GB for our SAP Brand Impact application, which automatically analyzes brand exposure in videos in near real-time,” said Michael Kemelmakher, vice-president, SAP Innovation Center, Israel. “The additional memory improved our ability to handle higher-definition images on a ResNet-152 model, reducing error rate by 40% on average. This results in accurate, timely, and auditable services at scale.”

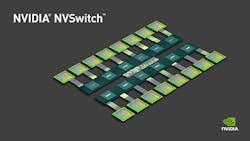

Another major announcement at NVIDIA’s GPU Technology Conference (GTC) was NVSwitch, a new 16-port NVLink switch (Fig. 2) with 2.4 TB/s of bandwidth. The switch uses a crossbar architecture and scales well: multiple switches can be combined to link very large collections of GPGPUs.

The Tesla V100 is equipped with six high-speed NVLink ports designed to connect multiple GPUs in a larger computing network. These built-in links allow GPUs to be connected in a hypercube without additional hardware, but that approach limits the number of GPUs that can be directly connected to one another. NVIDIA’s DGX-1 rack-mount system incorporates eight Tesla V100 GPUs with a total of 40,960 CUDA cores.
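To make the hypercube-versus-crossbar trade-off concrete, here is a small sketch under simplifying assumptions. It models an idealized hypercube in which each GPU ID is a binary number and a direct link joins two IDs that differ in exactly one bit, so the shortest path between two GPUs is the Hamming distance of their IDs. It treats the switch fabric as a single non-blocking crossbar. This is a textbook model, not NVIDIA’s actual DGX-1 wiring or NVSwitch routing, and the function names are illustrative only.

```python
from collections.abc import Callable
from itertools import combinations


def hypercube_hops(a: int, b: int) -> int:
    """Hops between GPUs a and b in an idealized hypercube.

    Links join IDs that differ in one bit, so the shortest path length is
    the number of differing bits (the Hamming distance).
    """
    return bin(a ^ b).count("1")


def crossbar_hops(a: int, b: int) -> int:
    """Hops between two distinct GPUs behind one non-blocking crossbar.

    Every pair is a single switch traversal apart, regardless of the IDs.
    """
    return 1


def topology_stats(num_gpus: int, hops: Callable[[int, int], int]) -> tuple[int, float]:
    """Return (worst-case hops, average hops) over all distinct GPU pairs."""
    dists = [hops(a, b) for a, b in combinations(range(num_gpus), 2)]
    return max(dists), sum(dists) / len(dists)


if __name__ == "__main__":
    for dim in (3, 4):
        n = 2 ** dim
        worst, avg = topology_stats(n, hypercube_hops)
        print(f"{n} GPUs, {dim}-D hypercube ({dim} links per GPU): "
              f"worst {worst} hops, average {avg:.2f}")
    worst, avg = topology_stats(16, crossbar_hops)
    print(f"16 GPUs, crossbar: worst {worst} hop, average {avg:.2f}")
```

In this model, eight GPUs in a hypercube are at most 3 hops apart (1.71 on average), and 16 GPUs are at most 4 hops apart (2.13 on average), with each GPU linked directly to only 4 of its 15 peers. Behind the crossbar, every pair is one hop apart. A six-link hypercube could in principle reach 64 GPUs, but some pairs would then be six hops apart, which is the cost a switch avoids.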

2. The NVSwitch supplies 16 high-speed, bidirectional links between NVIDIA GPGPUs.

The new DGX-2 incorporates 16 of the new 32-GB Tesla V100 GPUs, linked through multiple NVSwitch chips so that all 16 GPUs share a common address space. The combination delivers over two petaFLOPS of performance.
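A single address space spanning 16 GPUs means software can treat their combined memory (16 × 32 GB = 512 GB) as one range. The toy model below assumes a purely hypothetical layout in which each GPU’s memory occupies one contiguous slice of a flat range, and it treats “32 GB” as 32 GiB for round numbers. Real systems map memory through GPU virtual addressing and NVLink, not this simple scheme. The names `locate`, `NUM_GPUS`, and `MEM_PER_GPU` are illustrative only.

```python
GIB = 1024 ** 3

# Illustrative assumptions: 16 GPUs, each contributing exactly 32 GiB,
# laid out back to back in one flat address range (a hypothetical layout).
NUM_GPUS = 16
MEM_PER_GPU = 32 * GIB


def locate(global_addr: int) -> tuple[int, int]:
    """Map an address in the hypothetical flat space to (gpu_index, local_offset)."""
    if not 0 <= global_addr < NUM_GPUS * MEM_PER_GPU:
        raise ValueError("address lies outside the combined GPU memory")
    # Integer division picks the GPU; the remainder is the offset within it.
    return divmod(global_addr, MEM_PER_GPU)


if __name__ == "__main__":
    print(f"combined memory: {NUM_GPUS * MEM_PER_GPU // GIB} GiB")
    gpu, offset = locate(100 * GIB)
    print(f"address at 100 GiB -> GPU {gpu}, offset {offset // GIB} GiB")
```

Running it reports 512 GiB of combined memory and places the address at 100 GiB on GPU 3, 4 GiB into that GPU’s memory. The point of a shared address space is that a program can use such an address directly instead of copying data between GPUs by hand.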

NVIDIA has updated its deep-learning and HPC software stacks to take advantage of the switch and the Tesla V100’s additional memory, with new versions of NVIDIA CUDA, TensorRT, NCCL, and cuDNN.

NVIDIA’s systems are used in a range of applications, from analyzing data for oil and gas exploration to machine learning. Machine learning can take advantage of the GPU’s support for small floating-point formats, such as 16-bit half precision, as well as architectural refinements aimed at deep-neural-network training. The combination of new hardware, connectivity, and improved software stacks will be key to the success of other NVIDIA initiatives, such as the DRIVE Constellation Simulation System, which tests self-driving car applications in a simulated world.
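The “small floating-point support” mentioned above refers to reduced-precision formats such as 16-bit half precision (FP16), which the V100’s Tensor Cores use. The sketch below relies only on Python’s standard `struct` module, whose `e` format is IEEE 754 half precision, to show the trade-off: half the storage of 32-bit floats at the cost of precision. It illustrates the number format only, not how NVIDIA’s hardware or libraries apply it during training. The helper name `to_fp16` and the one-million-value count are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (struct format 'e') and widen it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


FP32_BYTES = struct.calcsize("<f")  # 4 bytes per single-precision value
FP16_BYTES = struct.calcsize("<e")  # 2 bytes per half-precision value

if __name__ == "__main__":
    n = 1_000_000  # hypothetical number of weights or activations
    print(f"{n:,} values: {n * FP32_BYTES:,} bytes in FP32, {n * FP16_BYTES:,} bytes in FP16")
    # Only about three significant decimal digits survive the rounding.
    print(to_fp16(0.1))
    # The increment is below half-precision resolution near 1.0, so it is lost.
    print(to_fp16(1.0 + 0.0001))
```

Halving the bytes per value lets the same GPU memory hold twice as many values and moves half as much data per operation. The lost precision is why training with FP16 typically needs care to keep small updates from being rounded away.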

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do some programming, in everything from C and C++ to Rust and Ada/SPARK, as well as PHP programming for Drupal websites, and I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware; some of this work appears in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.