BrainChip Takes Spiking Neural Networks to the Next Level

Neural networks rank among the hottest machine-learning (ML) trends in artificial-intelligence work these days. Many different forms and implementations of neural networks exist, with convolutional neural networks (CNNs) being one of the most common (Fig. 1). CNNs and other deep neural networks (DNNs) usually involve a training process that generates a model, which is then used for inference. Training requires a lot of time, data, and compute, while inference needs far less. As a result, inference can be deployed on lower-end systems, whereas training is often done in the cloud.

1. Machine learning encompasses a range of algorithms.

Spiking neural networks (SNNs) are a different form of neural network, one that more closely mimics biological neurons. SNNs, which use feed-forward training, have low computational and power requirements compared to CNNs (Fig. 2).

2. Leveraging feed-forward training, spiking neural networks have low computational and power requirements compared to CNNs.

CNNs and SNNs are similar in that they take input, analyze it using their neural-network model, and generate responses. Developers must convert input data into a form the algorithms can use, which often means preprocessing the raw data. SNNs also need data-to-spike converters. BrainChip provides a number of data-to-spike converters for developers to start with, and more can be created depending on the application and source data format. For example, the company offers a pixel-to-spike converter for data coming from Dynamic Vision Sensors (DVS).
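BrainChip hasn’t detailed how its converters work, so here is a minimal sketch of the general idea behind pixel-to-spike conversion, written in Python with NumPy. A DVS generates events in hardware; this sketch emulates that behavior from ordinary grayscale frames by emitting an ON or OFF event whenever a pixel’s log intensity moves by more than a threshold. The function name `frames_to_spikes`, the event tuple layout, and the `threshold` value are hypothetical and are not part of the ADE.

```python
import numpy as np


def frames_to_spikes(frames, threshold=0.15, eps=1e-6):
    """Hypothetical DVS-style pixel-to-spike converter (not a BrainChip API).

    frames: array of shape (T, H, W) holding non-negative pixel intensities.
    Returns a list of (t, y, x, polarity) events, polarity +1 (ON) or -1 (OFF).
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Per-pixel reference level in the log domain, as a DVS pixel would track it.
    ref = np.log(frames[0] + eps)
    events = []
    for t in range(1, frames.shape[0]):
        log_i = np.log(frames[t] + eps)
        diff = log_i - ref
        on = diff >= threshold    # brightness rose enough: ON event
        off = diff <= -threshold  # brightness fell enough: OFF event
        for y, x in zip(*np.nonzero(on)):
            events.append((t, int(y), int(x), 1))
        for y, x in zip(*np.nonzero(off)):
            events.append((t, int(y), int(x), -1))
        # Only pixels that fired update their reference; quiet pixels stay silent.
        fired = on | off
        ref[fired] = log_i[fired]
    return events


# One pixel brightens in frame 1 and returns to its old level in frame 2.
frames = np.ones((3, 2, 2))
frames[1, 0, 0] = 2.0
print(frames_to_spikes(frames))  # [(1, 0, 0, 1), (2, 0, 0, -1)]
```

Only the pixel that changed produces events; the three static pixels generate nothing. That sparsity is what makes event-based input a natural fit for SNNs.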

SNN models also work differently from CNNs because of their spiking nature. Information flows through a CNN model in a wavelike fashion, and the weights associated with the nodes in each network layer modify it along the way. SNNs propagate spikes in a somewhat similar fashion, but a neuron doesn’t necessarily spike at every step; whether it fires depends on the data.
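The contrast is easiest to see in a single layer. A CNN layer computes an output value for every node on every pass, whereas a spiking neuron accumulates input over time and fires only when a threshold is crossed. The sketch below uses the leaky integrate-and-fire (LIF) model, a common textbook spiking neuron. BrainChip doesn’t say Akida’s neurons work exactly this way, so `lif_layer`, `threshold`, and `leak` are illustrative assumptions.

```python
import numpy as np


def lif_layer(input_current, threshold=1.0, leak=0.9):
    """Simulate a layer of leaky integrate-and-fire (LIF) neurons.

    input_current: array of shape (T, N), the weighted input to N neurons per step.
    Returns a (T, N) int8 array with 1 where a neuron spiked.
    """
    input_current = np.asarray(input_current, dtype=np.float64)
    steps, n = input_current.shape
    v = np.zeros(n)                      # membrane potentials
    spikes = np.zeros((steps, n), dtype=np.int8)
    for t in range(steps):
        v = leak * v + input_current[t]  # decay, then integrate new input
        fired = v >= threshold
        spikes[t] = fired                # spike only where the threshold is crossed
        v[fired] = 0.0                   # reset neurons that fired
    return spikes


# Neuron 0 gets a steady weak input; neuron 1 gets none.
inp = np.tile([0.4, 0.0], (5, 1))
out = lif_layer(inp)
print(out[:, 0].tolist(), out[:, 1].tolist())  # [0, 0, 1, 0, 0] [0, 0, 0, 0, 0]
```

Of the ten neuron-steps in this run, only one produces a spike, so downstream layers have very little work to do. That kind of sparsity is a large part of why SNNs can have low computational and power requirements.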

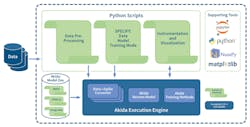

BrainChip’s new Akida Development Environment (ADE) is designed to support SNN model creation (Fig. 3). It can generate executables for the Akida execution engine, which runs on most CPUs. Of course, the algorithms will run much faster with hardware acceleration.

3. BrainChip’s Akida uses Python scripts to control workflow and presentation of results.

The software implementation is a precursor to BrainChip’s Akida Neuromorphic System-on-Chip (NSoC), dedicated spiking-neural-network hardware that the company will announce in the future. SNNs developed with Akida will be able to run on that hardware when it becomes available, although some applications may work well without resorting to specialized hardware.

The ADE is built around Python and the open-source Jupyter Notebook platform, which is also used with other machine-learning tools. The development tools are integrated with NumPy, the Python numerical package, for massaging input and output data, and with matplotlib, a Python 2D plotting library, for presenting results. The ADE model zoo includes preconfigured models that developers can choose from or add to.
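NumPy is also handy on the output side. One common way to turn SNN output into a classification is to count how many times each output neuron fired over a time window and pick the busiest one. The following is a minimal sketch of that spike-count readout; the function name, array layout, and class labels are hypothetical and do not come from the ADE.

```python
import numpy as np


def decode_by_spike_count(output_spikes, labels):
    """Hypothetical readout: pick the class whose output neuron spiked most.

    output_spikes: (T, C) array of 0/1 spikes from C output neurons over T steps.
    labels: sequence of C class names.
    Returns (predicted_label, per-class spike counts).
    """
    counts = np.asarray(output_spikes).sum(axis=0)
    # np.argmax breaks ties in favor of the lowest index.
    return labels[int(np.argmax(counts))], counts


spikes = np.array([[0, 1, 0],
                   [1, 1, 0],
                   [0, 1, 1]])
label, counts = decode_by_spike_count(spikes, ["cat", "dog", "bird"])
print(label, counts.tolist())  # dog [1, 3, 1]
```

The per-class counts are also a convenient array to hand to matplotlib when inspecting results in a notebook.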

The Akida development process (Fig. 4) uses an iterative cycle akin to CNN development. Developers start with an existing model or create a new one, which is then trained and tested. The cycle repeats, progressively refining the model until it meets performance requirements.

4. The Akida development process is an iterative cycle similar to CNN development; there’s progressive refinement until a model meets performance requirements.

SNN training and hardware requirements differ significantly from those of CNNs, so it behooves developers to become familiar with the differences and advantages. There are applications where one approach is much better than the other, and areas where they overlap. The ability to train SNNs in the field is another advantage for many applications. However, developers will need to understand and experiment with SNNs to make sure they suit their particular application, and the ADE provides a way to do exactly that.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design, focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF, and I work with a great team of editors to provide engineers, programmers, developers, and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.


Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK, along with some PHP programming for Drupal websites, and I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware, some of which can be seen in our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.