Industry 4.0 Finds New Synergies with a Combination of Diverse Technologies

When talking about the history of technology, it’s common to group manufacturing operations into four loosely defined “industrial revolutions” that gradually replaced human- and horse-power with increasing levels of mechanization. The first stage of mechanization relied on power from water and steam; stage two introduced electrical power, mass production, and the assembly line; and computers and automation characterized stage three.

We’re now well into the fourth stage, often called Industry 4.0. It builds on stage three by adding robotic technologies, massive data-gathering courtesy of Internet of Things (IoT) technology, and cloud computing with machine learning and artificial intelligence (AI) (Fig. 1).

1. Typical Industry 4.0 elements include a robot, an HMI, machine vision, and a sophisticated communication network. (Source: TI Applications: Factory Automation & Control)

Sponsored Resources:

- Making factories smarter, more productive through predictive maintenance

- Selecting the right industrial communications standard for sensors

- Using dynamic headroom control for industrial lighting applications

In manufacturing, Industry 4.0 is synonymous with the smart factory, in which automated systems make decisions about local processes, communicate and cooperate with each other, and interact with human operators in real time, both in the factory and in the cloud.

Figure 2 shows some of the systems that come together in an Industry 4.0 installation. Many have been manufacturing staples for decades, but others have only recently begun to appear on the factory floor.

2. Industry 4.0 requires the integration of multiple systems into a seamless whole. (Source: TI Applications: Industry 4.0 Solutions)

On the shop floor, the automated guided vehicle (AGV) is a relatively new arrival. Robots have been around since the 1960s in fixed-location work cells; an AGV is a mobile robot that’s used to move materials around a warehouse or factory. The first examples navigated by following markers or wires embedded in the floor, but later versions add vision and wireless communication to avoid collisions with other vehicles or humans and to find the shortest route for a given set of tasks.

Equipped with a robot arm and vision system, AGVs can take over complex logistical tasks such as stacking, loading, and material handling. At the factory level, a supervisory controller can coordinate multiple AGVs for the highest overall operating efficiency.

Industry 4.0 has also introduced some new underlying technologies that benefit new and old machines alike. Machine vision, for example, is being added to many operations that were formerly “blind.” Robots and CNC machines use machine vision to run safety checks of the motion envelope, verify the correct positioning of workpieces, and perform precision measurements. At final assembly, vision systems check tolerances and quality. Multiple cameras or scanners can be combined to provide a real-time view of the production process for human overseers.

To learn more about AGVs, machine vision, and other elements of Industry 4.0 operations, and to browse a large selection of reference designs, visit Texas Instruments’ Industry 4.0 portal.

The Architecture of Industry 4.0

At the system level, Industry 4.0 can be thought of as a hierarchical architecture. Typically, this architecture has three levels, also called tiers or nodes.

- At the factory floor level, edge nodes provide connectivity to individual industrial machines: robots, CNC machines, furnaces, etc. A programmable logic controller (PLC), embedded microcontroller, or similar device receives the data from machine-mounted sensors, processes them, and controls operation via valves, actuators, or motors. The PLC communicates information to higher levels over a wired or wireless local-area network (LAN).

- Gateway nodes communicate with multiple edge nodes, which may use a variety of protocols, aggregate the information, and relay it over a wide-area network (WAN) to the enterprise node.

- The enterprise node, which may be located in the factory or in the cloud, receives the gateway data. It hosts application programs for data analytics and modeling, and communicates relevant results to both the process owners and back down to the gateways and edge nodes.
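The three tiers above can be sketched as a small data pipeline. This is only an illustration, not a real PLC or gateway API: the class names `EdgeNode`, `Gateway`, and `EnterpriseNode`, the machine IDs, and the temperature readings are all hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """Hypothetical edge node: gathers sensor readings from one machine."""
    machine_id: str
    readings: list = field(default_factory=list)

    def sample(self, value: float) -> None:
        self.readings.append(value)

    def report(self) -> dict:
        # Local processing: forward a summary instead of every raw sample.
        return {
            "machine": self.machine_id,
            "mean": mean(self.readings),
            "n": len(self.readings),
        }


@dataclass
class Gateway:
    """Hypothetical gateway: collects reports from several edge nodes."""
    edges: list

    def collect(self) -> list:
        return [edge.report() for edge in self.edges]


class EnterpriseNode:
    """Hypothetical enterprise node: runs analytics on the gateway data."""

    def hottest_machine(self, reports: list) -> str:
        return max(reports, key=lambda r: r["mean"])["machine"]


furnace = EdgeNode("furnace-1")
robot = EdgeNode("robot-7")
for temperature in (410.0, 415.0, 420.0):
    furnace.sample(temperature)
for temperature in (35.0, 36.0, 37.0):
    robot.sample(temperature)

reports = Gateway([furnace, robot]).collect()
result = EnterpriseNode().hottest_machine(reports)
print(result)
```

In a real installation each tier would run on separate hardware and talk over the networks described in the next section; here the calls are direct so that the flow of data is easy to follow.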

A Distributed Communications Network Ties It All Together

None of this would be possible without a distributed communication network. It contains multiple communication protocols optimized for their required tasks (Fig. 3).

3. An Industry 4.0 installation requires a varied mix of communication schemes. (Source: TI: “Selecting the right industrial communications standard for sensors” PDF, p. 4)

The machines that perform the industrial operations include numerous end-node components such as sensors, actuators, and motors. These connect to PLCs that run task-specific programs, perform diagnostics, and communicate with other PLCs.

The simplest level of control may not require a communication protocol at all: the PLC interfaces with the sensors or actuators via an input/output (I/O) port. The I/O signal can be either digital or analog. A pressure sensor, for example, typically generates an analog voltage, while a limit switch provides a digital on/off input. Adding new sensors or actuators requires manual intervention, and the PLC is responsible for diagnostics and fault detection.
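To make the analog/digital distinction concrete, here is a minimal sketch of how a controller might interpret the two kinds of input. The 12-bit converter, the 10-V full scale, the 16-bar sensor range, and both function names are assumptions chosen for illustration, not values from any particular PLC or sensor.

```python
ADC_BITS = 12              # assumed converter resolution
V_REF = 10.0               # assumed full-scale input voltage, in volts
PRESSURE_SPAN_BAR = 16.0   # assumed linear sensor: 0 V = 0 bar, 10 V = 16 bar


def adc_to_pressure(counts: int) -> float:
    """Convert a raw analog reading to pressure, assuming a linear sensor."""
    full_scale = (1 << ADC_BITS) - 1
    volts = counts / full_scale * V_REF
    return volts / V_REF * PRESSURE_SPAN_BAR


def limit_switch_closed(port_value: int, bit: int) -> bool:
    """Read one digital input: a single bit of the I/O port is on or off."""
    return bool((port_value >> bit) & 1)


print(adc_to_pressure(4095))           # full-scale analog reading
print(limit_switch_closed(0b0100, 2))  # digital input on bit 2
```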

The simplest network protocol is point-to-point communication in a master-slave arrangement. A single master device, typically the PLC, controls multiple slaves. The slaves transmit and receive data when commanded by the master, but don’t initiate communication themselves.

IO-Link (IEC 61131-9) is an example of this type of arrangement: a master-slave communication protocol for small sensors and actuators. The standard covers aspects such as data exchange, diagnostics, and system configuration.
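The master-slave pattern described above can be sketched in a few lines. This is a generic illustration of polling, not the IO-Link protocol itself; the `Master` and `Slave` classes, the `"READ"` command, and the addresses are hypothetical.

```python
from typing import Optional


class Slave:
    """Hypothetical slave device: it answers only when the master asks."""

    def __init__(self, address: int, value: float):
        self.address = address
        self._value = value

    def respond(self, command: str) -> Optional[float]:
        if command == "READ":
            return self._value
        return None  # unknown commands are ignored


class Master:
    """Hypothetical master, typically the PLC: it initiates every exchange."""

    def __init__(self, slaves):
        self.slaves = {slave.address: slave for slave in slaves}

    def poll_all(self) -> dict:
        # Visit each slave in address order and request its current value.
        return {
            address: slave.respond("READ")
            for address, slave in sorted(self.slaves.items())
        }


master = Master([Slave(2, 0.0), Slave(1, 21.5)])
values = master.poll_all()
print(values)
```

Note that `Slave` has no method for sending unprompted data, which mirrors the rule that slaves don't initiate communication themselves.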

Ethernet Variants Dominate Fieldbus Communications

The next higher level connects PLCs, robot work cells, and similar nodes in a factory-wide multi-master network. It also includes connections to a gateway, and then to the cloud.

IEC 61158 defines this level: It’s a set of industrial computer network protocols generically named fieldbus. Although there are many competing protocols—controller area network (CAN) and simple sensor interface (SSI), for example—some variant of Ethernet is the dominant player in fieldbus-level factory networking.

Standard Ethernet is widely used in commercial installations. It’s high-speed and cost-effective, with a common physical layer across the network. The protocol allows for a flexible network topology and a flexible number of nodes, but standard Ethernet is non-deterministic. The delay between a message leaving its origin and arriving at its destination is variable and can be up to 100 ms.

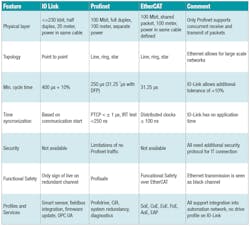

A variable delay is a serious issue in a control application, so designers have developed several Ethernet versions for Industry 4.0 use. Popular industrial Ethernet (IE) implementations include Profinet, EtherCAT, Ethernet/IP, Sercos III, and CC-Link IE Field; they offer high bandwidth, long physical connections, low latency, and deterministic operation, among other features. Figure 4 compares some popular I4.0 protocols.

4. A comparison between IO-Link and two Ethernet protocols shows the distinctive features of each. (Source: TI: “Selecting the right industrial communications standard for sensors” PDF, p. 7)

A deterministic network—one in which no random variations in propagation times occur between messages—is important for automated applications. Consequently, IE protocols with deterministic data delivery have been developed for use on the factory floor.
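One simple way to quantify determinism is jitter, taken here as the spread between the slowest and fastest delivery times. The sketch below assumes that definition; the latency samples, the 1-ms deadline, and the 0.5-ms jitter limit are invented for illustration and are not measurements of any real network.

```python
def jitter_ms(latencies_ms):
    """Spread between the slowest and fastest delivery in the sample."""
    return max(latencies_ms) - min(latencies_ms)


def is_deterministic_enough(latencies_ms, deadline_ms, max_jitter_ms):
    """True if every message met the deadline and jitter stayed in bounds."""
    return (
        max(latencies_ms) <= deadline_ms
        and jitter_ms(latencies_ms) <= max_jitter_ms
    )


# Hypothetical latency samples, in milliseconds.
standard_ethernet = [0.4, 12.0, 3.1, 87.5, 0.9]
industrial_ethernet = [0.25, 0.25, 0.5, 0.25, 0.5]

print(jitter_ms(industrial_ethernet))
print(is_deterministic_enough(standard_ethernet, 1.0, 0.5))
print(is_deterministic_enough(industrial_ethernet, 1.0, 0.5))
```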

Time-sensitive networking (TSN) extends Ethernet for real-time applications by adding guaranteed latency to deterministic operation. The Institute of Electrical and Electronics Engineers (IEEE) has added several sections to IEEE 802.1 to improve Ethernet determinism and quality of service without compromising its strengths, such as interoperability. Not all TSN extensions have been fully implemented.

Sitara Offers Multiprotocol Capability

Interfacing to multiple networks and responding to messages in a timely manner is a challenge for the hardware. Texas Instruments offers a broad range of microcontrollers and processors for Industry 4.0 functions. Sitara, for example, is a scalable family of processors that combines Arm Cortex-A cores with flexible peripheral sets. Industry 4.0 applications include industrial communications, factory automation, grid infrastructure, human-machine interface (HMI), and industrial motor drives.

Figure 5 shows a multiprotocol reference design based around the Sitara AM437x. It includes an IO-Link master device and support for several IE protocols.

5. This IO-Link Master reference design with a Sitara AM437x processor can also accommodate multiple Ethernet protocols. (Source: TI: “Selecting the right industrial communications standard for sensors” PDF, p. 6)

The Sitara AM437x supports multiprotocol industrial Ethernet with an Arm Cortex-A9 processor and an integrated programmable real-time unit and industrial communication subsystem (PRU-ICSS). Each PRU subsystem includes a pair of 200-MHz real-time cores, each with a 5-ns per-instruction cycle time. The real-time cores have no instruction pipeline, which ensures single-cycle instruction execution.
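The 5-ns figure follows directly from the 200-MHz clock, and the same arithmetic shows how many single-cycle instructions fit into a given response window. The short sketch below works through it; the 1-µs budget is a hypothetical example, not a requirement of any protocol.

```python
PRU_CLOCK_HZ = 200_000_000  # 200-MHz real-time core, as stated above


def cycle_time_ns(clock_hz: int) -> float:
    """Duration of one clock cycle in nanoseconds."""
    return 1e9 / clock_hz


def instructions_in_budget(budget_ns: int, clock_hz: int = PRU_CLOCK_HZ) -> int:
    """Single-cycle instructions that fit in a time budget.

    Integer arithmetic avoids floating-point rounding.
    """
    return budget_ns * clock_hz // 1_000_000_000


print(cycle_time_ns(PRU_CLOCK_HZ))    # nanoseconds per instruction
print(instructions_in_budget(1_000))  # instructions in a 1-microsecond window
```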

The PRU architecture gives the Sitara core direct and fast access to the outside world since each PRU also has its own single-cycle I/O. In addition, local memory and peripherals dedicated to each real-time engine guarantee low-latency responsiveness. An incoming interrupt can access a real-time processing engine directly without encountering CPU-induced delays.

The Sitara AM437x’s PRU-ICSS handles real-time tasks that would otherwise require an application-specific integrated circuit (ASIC) or field-programmable gate array (FPGA). It gives designers an upgradable software-based solution that can adapt to new features or protocols.

Bringing It All Together: Predictive Maintenance

Industry 4.0 isn’t just about communicating between different machines and eking out incremental efficiency improvements—it enables completely new ways of thinking about industrial operations.

Predictive maintenance (Fig. 6) is a good example. The early mass-production assembly lines simply ran the machines until something broke; production then stopped until the offending part was replaced. As reliability engineers accumulated data on failure rates, they could estimate the likely operating life of components. Armed with this knowledge, maintenance staff could add a margin of safety and then replace components long before their expected failure.

6. Predictive maintenance can save maintenance costs, but it may require an expansion of network bandwidth. (Source: TI: “Making factories smarter, more productive through predictive maintenance” PDF)

The replacement would be carried out as part of a scheduled maintenance procedure. In remote locations, a technician might even replace a part earlier than recommended if it saved a later visit.

This approach reduces the likelihood of an unexpected failure, but it does increase costs because parts are retired with a portion of their useful life remaining. Engineers must have a clear understanding of these costs versus the costs associated with a stopped production line.
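A rough cost comparison illustrates the tradeoff. The model below is deliberately simplified (for instance, it ignores that an early failure shortens the replacement interval), and every figure in it is hypothetical; the function names are likewise made up for this sketch.

```python
def run_to_failure_cost_per_hour(part_cost, downtime_cost, mean_life_h):
    """Every part fails in service, so each one also incurs a line stoppage."""
    return (part_cost + downtime_cost) / mean_life_h


def scheduled_cost_per_hour(part_cost, downtime_cost, interval_h, p_fail_early):
    """Parts are swapped every interval_h hours; a small fraction fail early."""
    return (part_cost + p_fail_early * downtime_cost) / interval_h


# Hypothetical figures, chosen only to make the arithmetic easy to follow:
# a 200-unit part, a 10,000-unit stoppage, a 10,000-hour mean life, and
# replacement at 6,000 hours with a 2% chance of failing before then.
reactive = run_to_failure_cost_per_hour(200, 10_000, 10_000)
scheduled = scheduled_cost_per_hour(200, 10_000, 6_000, 0.02)

print(f"run to failure: {reactive:.3f} per operating hour")
print(f"scheduled:      {scheduled:.3f} per operating hour")
```

With these numbers the scheduled approach is far cheaper per operating hour even though each part is retired at 60% of its mean life; a cheaper stoppage or a costlier part would shift the balance.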

There’s a better way. Many parts don’t fail without some indication of impending doom. A part may draw more (or less) current, operate at a higher temperature, vibrate excessively, or otherwise give notice that it’s nearing end of life (EOL). For example, a battery cell voltage will decline when it’s near EOL, and a gas-detecting sensor will change resistance.

With the correct sensing in place, the predictive-maintenance system can monitor these parameters and transfer the data to the cloud. Here, complex algorithms are able to compare the performance of the part to data gathered on thousands of other units operating in a wide range of conditions. The result is a much more accurate forecast of upcoming failure, and even a prediction of time-to-failure.
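As a much simpler stand-in for the cloud-side algorithms described above, the sketch below fits a straight line to one unit's readings and extrapolates to an end-of-life threshold. It uses ordinary least squares on a single part rather than fleet-wide comparison, and the voltage readings and the 3.40-V threshold are invented for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_to_threshold(hours, values, threshold):
    """Estimate remaining hours until a declining value reaches threshold.

    Returns None if the value is not trending downward.
    """
    slope, intercept = fit_line(hours, values)
    if slope >= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical battery-cell voltage log: operating hours and volts.
hours = [0, 100, 200, 300]
volts = [3.70, 3.65, 3.60, 3.55]

remaining = hours_to_threshold(hours, volts, threshold=3.40)
print(f"estimated time to end of life: {remaining:.0f} h")
```

Real degradation is rarely this linear, which is one reason the article's approach relies on data from many units and operating conditions.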

The probability of failure of a part often depends on the operating conditions over its lifetime—repeated stresses or temperature excursions, for example. The state of the art in predictive maintenance for complex systems requires a digital twin: The data from the real-world system is input to a software model that simulates the system operation in real time and predicts many parameters, including EOL.

Adding Predictive Maintenance Poses System Challenges

Implementing this function on multiple systems in a factory vastly increases the amount of information that must be processed. A digital twin requires a constant stream of high-quality, real-world data to validate the performance of the virtual machine against its physical counterpart. Otherwise, the real and virtual worlds will gradually diverge, and the calculations and predictions of the digital twin will be of little value.

The challenges begin with the factory machine itself—adding the required sensors and a wired or wireless communication link. Once the raw data is available, the designer must determine the best way to handle it. In the I4.0 architecture discussed above, there are three possible levels to process raw data: the edge node close to the machine; the gateway; and the enterprise (cloud).

Processing raw data at the edge minimizes the strain on the upstream network, but risks losing information and reducing digital-twin accuracy. Conversely, if the raw data are sent to the cloud for processing, no information will be lost; however, the network may not have the bandwidth to handle the increased load while still maintaining the required level of determinism.
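The tradeoff can be illustrated with a vibration sensor. The sketch below computes the bit rate of streaming raw samples and shows an edge-side reduction that replaces a window of samples with a few summary features; the 10-kHz sample rate, 2-byte samples, and choice of features are assumptions made for the example.

```python
import math


def raw_bits_per_second(sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Upstream load if every raw sample is forwarded."""
    return sample_rate_hz * bytes_per_sample * 8


def summarize(window):
    """Edge-side reduction: a few features replace the whole window.

    The waveform itself is discarded, which is the information loss
    the text warns about.
    """
    rms = math.sqrt(sum(s * s for s in window) / len(window))
    peak = max(abs(s) for s in window)
    return {"rms": rms, "peak": peak, "n": len(window)}


# Hypothetical sensor: 10,000 samples per second, 2 bytes each.
print(raw_bits_per_second(10_000, 2))

# Hypothetical window of vibration samples.
features = summarize([1.0, -1.0, 1.0, -1.0])
print(features)
```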

Even if the fieldbus level is adequate, the designer must evaluate communication system performance at lower levels: Must a remote node be upgraded from the existing low-power, low-bandwidth network to a higher, and more expensive, level of performance? One option is to leave the existing network in place and graft on a parallel system dedicated to predictive maintenance and digital-twin applications.

Adding or upgrading communications, and especially adding new IP connectivity, increases the risk of security breaches. Therefore, designers must also incorporate appropriate security technologies such as encryption, secure hardware designs, and application keys.

Conclusion

Industry 4.0 adds new technologies to factory operations, notably sophisticated multi-protocol communications and cloud computing, that improve operational efficiency and allow for previously unattainable functions. Predictive maintenance is one example, but adding a predictive-maintenance capability requires careful attention to system-level issues such as network bandwidth and system security.

Texas Instruments can help in many aspects of an Industry 4.0 design with a broad portfolio of application-specific products and reference designs.

About the Author

Paul Pickering

Paul Pickering has over 35 years of engineering and marketing experience, including stints in automotive electronics, precision analog, power semiconductors, flight simulation, and robotics. Originally from the North-East of England, he has lived and worked in Europe, the US, and Japan. He has a B.Sc. (Hons) in Physics & Electronics from Royal Holloway College, University of London, and has done graduate work at Tulsa University. In his spare time, he plays and teaches the guitar in the Phoenix, Ariz., area.