Life at the Sensor Edge: The Increasing Demands of Autonomy

This article is part of the Autonomous Vehicles: Technology and Trends series and is in the Automotive topic of our Library: Article Series.

As the automotive industry rapidly evolves its autonomous-driving capabilities, some interesting trends are emerging that are driving the electronics and sensor technologies needed for full self-driving functionality.

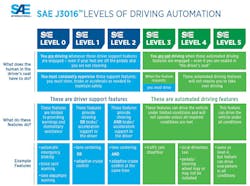

The autonomous-vehicle industry generally refers to the SAE International J3016 diagram (Fig. 1) when assessing a vehicle’s autonomous capabilities and assigning it a level of autonomy.

One trend we’re seeing is that production vehicles now include more sophisticated advanced driver-assistance system (ADAS) functionality (SAE level 1/2) as standard equipment. In addition, some level 3 features (such as automated driving functions) are starting to become available, blurring the boundaries between levels and giving rise to new descriptors such as level 2+.

The other trend in autonomy centers on mobility-as-a-service companies (such as ride-hailing, last-mile delivery, and shuttle operators) that are creating technology to enable fully driverless vehicles (SAE level 5). However, because most of these vehicles will operate in a specific Operational Design Domain (ODD) with restrictions such as set speeds, routes, and weather conditions, they aren’t level 5 under the letter of the SAE definition. Instead, they’re classified as level 4, even though they’re fully self-driving within that domain.
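
The level assignment described above can be sketched as a small decision function. This is a deliberately simplified reading of the J3016 taxonomy, assuming each feature can be described by five yes/no properties; the `Feature` fields and the `sae_level` name are hypothetical, and the standard itself defines the levels in far more detail through the roles of driver and system.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """Simplified, hypothetical description of a driving-automation feature."""
    sustained_steering: bool           # system holds lateral control
    sustained_speed_control: bool      # system holds longitudinal control
    system_drives: bool                # system performs the whole driving task
    system_is_fallback: bool           # system, not a human, handles failures
    unlimited_odd: bool                # works anywhere a human could drive


def sae_level(f: Feature) -> int:
    """Return a simplified SAE J3016 level (0-5) for a feature."""
    if f.system_drives:
        if not f.system_is_fallback:
            return 3  # system drives, but a human must take over on request
        # Driverless: level 5 only if no ODD restriction applies
        return 5 if f.unlimited_odd else 4
    if f.sustained_steering and f.sustained_speed_control:
        return 2
    if f.sustained_steering or f.sustained_speed_control:
        return 1
    return 0
```

For example, a geofenced robotaxi that is fully driverless but restricted to its ODD comes out as level 4, matching the classification described above.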

Both trends have a profound effect on the sensor and compute technology needed to enable them. They require a very detailed view of the environment around the vehicle, and the system must predict what’s going to happen in that environment as it plans routes and maneuvers. The greater awareness required for automated-driving functions typically calls for more, better, and different sensors than traditional driver-assistance functions use. It also demands much more computing power to interpret all of the information coming from the sensors and make the correct driving decisions.

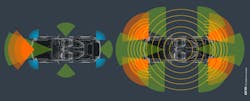

Before looking at the challenges with sensor arrays, consider a quick example of how adding one automated driving function can suddenly require a much broader set of sensors. Level 2 “lane-level” capabilities, such as adaptive cruise control, lane keeping, and automatic emergency braking, rely heavily on front-facing sensors to tell the system what’s happening in front of the car (e.g., lane markings and the speed and distance of the car ahead).

However, when a function such as automated lane change is added, even one initiated by the driver, the automation system now needs to understand what’s happening around the car, especially in the lane it’s moving into. It therefore needs sensors with a 360-degree view rather than just a forward view, and it must track any vehicles in the new lane, including their speed and trajectory, so that the maneuver can be completed safely.
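
As a rough illustration of why lane change needs tracks of the vehicles in the target lane, the sketch below checks each tracked vehicle’s distance, time gap, and time to collision. The `Track` type, the function name, and the thresholds (5 m, 1 s, 4 s) are hypothetical values chosen for illustration, not figures from any production system, and the check ignores vehicle length and lateral motion.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """A vehicle tracked in the target lane (hypothetical perception output)."""
    rel_position_m: float  # longitudinal offset from ego; positive means ahead
    speed_mps: float       # speed along the lane


def lane_change_is_safe(ego_speed_mps: float, tracks: list[Track],
                        min_gap_m: float = 5.0, min_gap_s: float = 1.0,
                        min_ttc_s: float = 4.0) -> bool:
    """Return True if every tracked vehicle leaves enough room, headway, and time to collision."""
    for t in tracks:
        gap_m = abs(t.rel_position_m)
        if gap_m < min_gap_m:
            return False  # vehicle roughly alongside: no room to merge
        if t.rel_position_m >= 0:
            # Vehicle ahead: ego becomes the follower
            follower_speed = ego_speed_mps
            closing_speed = ego_speed_mps - t.speed_mps
        else:
            # Vehicle behind: it becomes the follower
            follower_speed = t.speed_mps
            closing_speed = t.speed_mps - ego_speed_mps
        # Time gap (headway) the follower would have after the maneuver
        if follower_speed > 0 and gap_m / follower_speed < min_gap_s:
            return False
        # Time to collision applies only when the gap is shrinking
        if closing_speed > 0 and gap_m / closing_speed < min_ttc_s:
            return False
    return True
```

A front-facing sensor alone could evaluate only the vehicle ahead; the vehicle approaching from behind in the target lane is exactly what the 360-degree view adds.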

More Autonomy = More Sensors = More Raw Data to Process

The key technology questions as capabilities advance from driver assistance to automated driving center on what data is needed, what technology collects it, and how that data is processed. This article focuses on the latter two.

For a vehicle to achieve level 4 autonomy, it must perceive its immediate environment far better than a typical level 2 (or even level 3) vehicle. That requires substantially more higher-precision sensors, in both number and variety. Figure 2 shows not just an increase in the number of sensors typically used for level 2 driver assistance (left), but also additional sensor modalities that provide a more accurate picture of the surrounding environment (right).

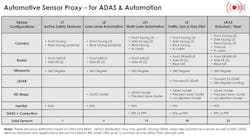

Several sensor modalities are currently available to autonomous-vehicle (AV) manufacturers, with radar, LiDAR, camera, audio, and thermal being the common options. As you’d expect, each modality has its own strengths and weaknesses, and each suits certain autonomous functions better than others.

Cameras, for example, are one of the key sensors for autonomy because they can identify objects, lane markings, and traffic signs much as our eyes do. But, like our eyes, cameras perform poorly in low light and bad weather. Successfully understanding the environment around the vehicle, and predicting what will happen in it, typically relies on combining different sensor modalities, whether they work in tandem to produce a comprehensive view of the world around the car or provide redundancy if a sensor fails (even for a split second).

Figure 3, courtesy of VSI Labs, shows examples of different types and numbers of sensors required as vehicles climb the ladder from driver assistance to automated driving. VSI Labs maintains its own automated demonstration vehicles used to evaluate common components, including sensors, processors and algorithms, that it shares with industry partners. As such, the company has collated a wealth of information on sensor usage.

Before examining some of the challenges around sensor technology and the processing of information that they produce, it’s valuable to review the different sensor modalities and how they’re used in autonomous vehicles:

Ultrasonic sensors

These sensors use ultrasound waves for ranging and simple object detection, and they have been fitted to conventional vehicles for many years for functions like parking assistance. They’re typically located all around an autonomous vehicle for close-range object detection, such as spotting other vehicles entering your lane, and for autonomous parking functions.
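
Ultrasonic ranging works by timing an echo. A minimal sketch, assuming dry air and the common linear approximation for the speed of sound (about 331.3 m/s at 0 °C, rising roughly 0.606 m/s per °C); the function names are illustrative:

```python
def speed_of_sound_mps(temp_c: float) -> float:
    """Approximate speed of sound in dry air at the given temperature."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Distance to an obstacle from an ultrasonic echo's round-trip time."""
    # The pulse travels out and back, so halve the total path length
    return speed_of_sound_mps(temp_c) * round_trip_s / 2.0
```

The temperature term matters at parking distances: the same 10 ms echo reads about 1.72 m at 20 °C but about 1.66 m at 0 °C.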

Radar (Radio Detection and Ranging)

Based on radio waves, which have a far lower frequency and longer wavelength than the light LiDAR uses, radar sensors detect objects with higher precision and at greater distances than ultrasonic sensors. They have been used in modern ADAS to complement camera systems for functions such as adaptive cruise control (ACC) and automatic emergency braking (AEB).

Autonomous vehicles typically implement two types of radar: short/medium-range radar for near-field object detection in functions such as side- and rear-collision avoidance, and long-range or imaging radar, which faces forward to detect distant objects, including those beyond the car in front. Long-range radar is also effective in poor weather such as rain and fog, where other sensor modalities struggle.
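
Radar measures range from an echo’s travel time at the speed of light and closing speed from the echo’s Doppler shift. The sketch below shows both relationships, assuming a 77 GHz carrier (a common automotive radar band); it ignores the frequency-modulated continuous-wave (FMCW) processing that most automotive radars actually use to extract these quantities, and the function names are illustrative.

```python
C_MPS = 299_792_458.0  # speed of light in vacuum


def radar_range_m(round_trip_s: float) -> float:
    """Target range from the round-trip time of a radar echo."""
    return C_MPS * round_trip_s / 2.0


def radial_velocity_mps(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Closing speed from the echo's Doppler shift (positive means approaching).

    The factor of 2 reflects that the shift accrues on both the outbound
    and the return path.
    """
    return doppler_shift_hz * C_MPS / (2.0 * carrier_hz)
```

At 77 GHz, a target closing at 30 m/s shifts the echo by roughly 15.4 kHz, which is why radar can report relative speed directly rather than differencing positions over time.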

LiDAR (Light Detection and Ranging)

LiDAR sensors use light projected by a pulsed laser. By sending out tens of thousands of pulses per second, these sensors build up a 3D point cloud and can cover a wide area at long range. LiDAR detects objects with greater precision than radar and can also detect the movement of objects, a key capability for autonomous vehicles to understand their environment. LiDAR hasn’t traditionally been used in ADAS. It really comes into play in level 3 and above systems, where its 3D environment mapping is vital for the self-driving system to understand its surroundings and therefore plan its maneuvers.
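
The point cloud is built by converting each return’s range and beam angles into coordinates, and movement can be estimated by comparing the same object across sweeps. A minimal sketch, assuming a sensor frame with x forward, y left, and z up, and assuming the points have already been segmented and associated into per-object clusters (the hard part, omitted here); all names are illustrative.

```python
import math

Point = tuple[float, float, float]


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one LiDAR return to (x, y, z) in the sensor frame."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def centroid(points: list[Point]) -> Point:
    """Mean position of a cluster of points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def estimate_velocity(cluster_t0: list[Point], cluster_t1: list[Point], dt_s: float) -> Point:
    """Object velocity from the shift of its cluster centroid between two sweeps."""
    c0, c1 = centroid(cluster_t0), centroid(cluster_t1)
    return tuple((b - a) / dt_s for a, b in zip(c0, c1))
```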

Camera

Camera sensors are the most versatile of all sensor modalities because, like human eyes, they can “see” objects and the environment surrounding the vehicle. Cameras can be paired with machine-learning algorithms to not only detect but also identify objects, both static (e.g., lane markings or traffic signs) and dynamic (e.g., pedestrians, cyclists, and other vehicles). They can also detect color, which is useful for identifying warning signs and traffic signals. Cameras are used at all levels of autonomy, with more cameras (for a 360-degree view) and higher resolutions becoming common as level 2+ and beyond are implemented.

A camera sensor in an AV benefits greatly from working with other sensor modalities, including radar for short- and long-range detection, and LiDAR for its 360-degree 3D environment mapping and object tracking. In fact, it’s become increasingly apparent that a diverse mix of modalities, with one’s strengths offsetting another’s weaknesses and others stepping in if one fails, is the leading approach. No single sensor modality will ever be a viable solution for all road conditions, nor will there ever be a perfect mix for all vehicles. For example, an autonomous long-haul truck will require different sensors from an autonomous valet service.
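
One simple way to combine modalities while letting a failed sensor drop out is inverse-variance weighting of per-sensor estimates. This is a textbook technique swapped in for illustration, not a description of any particular AV stack, which would typically use tracking filters such as Kalman filters; the sensor names and variances are hypothetical.

```python
from typing import Optional


def fuse_range(estimates: dict[str, tuple[Optional[float], float]]) -> Optional[float]:
    """Fuse per-sensor range estimates by inverse-variance weighting.

    `estimates` maps a sensor name to (range in m, or None if the sensor
    has failed or dropped out; measurement variance in m^2).
    """
    weighted_sum = total_weight = 0.0
    for value, variance in estimates.values():
        if value is None:
            continue  # redundancy: a failed sensor simply leaves the fusion
        weight = 1.0 / variance  # more precise sensors count for more
        weighted_sum += weight * value
        total_weight += weight
    return weighted_sum / total_weight if total_weight > 0 else None
```

Raising a sensor’s variance when conditions degrade it (for example, the camera at night) shifts the result toward the modalities that still perform well, which is the complementary behavior the text describes.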

Both the quality of sensor technology and the quality of the data it produces are increasing dramatically. For example, higher-resolution cameras produce a greater volume of higher-quality imagery. As you can imagine, cost becomes a factor here, especially across a vehicle’s complete mix of sensor modalities.

Fitting every vehicle with many different types of sensors isn’t cheap, and neither is the computational power required to process all of the data they gather. After all, more sensors across more sensor modalities means a significant increase in raw data to process. On top of that, computational processing must keep pace with this growth without compromising power consumption, thermal properties, size, cost, safety, or security.
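
To put rough numbers on the raw-data growth, the sketch below computes uncompressed camera data rates as width × height × bit depth × frame rate. The two sensor suites are hypothetical examples (one 2 MP front camera versus eight 8 MP surround cameras, all 12-bit raw at 30 fps) and count cameras only; radar, LiDAR, and other sensors would add to the total.

```python
# (count, (width_px, height_px, bits_per_px, fps)); illustrative values only
L2_SUITE = [(1, (1920, 1080, 12, 30))]  # hypothetical single front camera
L4_SUITE = [(8, (3840, 2160, 12, 30))]  # hypothetical surround-camera array


def camera_raw_gbps(width_px: int, height_px: int, bits_per_px: int, fps: int) -> float:
    """Uncompressed data rate of one camera in Gbit/s."""
    return width_px * height_px * bits_per_px * fps / 1e9


def suite_gbps(suite) -> float:
    """Total uncompressed data rate of a camera suite in Gbit/s."""
    return sum(count * camera_raw_gbps(*spec) for count, spec in suite)
```

Under these assumptions the level 2 suite produces about 0.75 Gbit/s and the level 4 suite about 23.9 Gbit/s, a 32× increase before any other modality is counted.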

This is driving a consolidation of much more powerful clusters of application processors and accelerators into fit-for-purpose heterogeneous multicore SoCs, rather than discrete CPUs. Arm technology’s processing capabilities are being readily adapted to handle this higher-precision mix of sensor modalities.

Sensor Edge Processing

One trend we see is extra processing closer to the sensor, at what we call the “sensor edge,” performed by the sensor SoC. The increase in data volume has created demand for more processing to be done at the sensor before the data is sent on to the rest of the system. This could be done with Cortex-M processors for lower-complexity sensors, such as ultrasonic and short-range radar, moving up to Cortex-R and Cortex-A processors for more sophisticated sensors like imaging radar, LiDAR, and cameras.

For example, an image signal processor (ISP), such as Arm’s Mali-C71AE, can be built into a camera sensor to enhance the camera’s data at the sensor edge and ultimately increase the value it provides to the system. Going one step further, machine learning (ML) could be added at the sensor edge using an ML processor such as the Arm Ethos-N77. The image is then not only enhanced but also scanned to detect important things like oncoming vehicles or objects in the road. If the processor is trained to pass on only the data the system needs and filter out the rest, it enables large performance and power-efficiency gains further down the processing chain.
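
The article doesn’t specify how this edge filtering is implemented. A minimal sketch, assuming the edge ML model emits labeled detections with a confidence score and a range estimate, might keep only relevant, confident, nearby detections; the `Detection` type, the label set, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class predicted by the (hypothetical) edge ML model
    confidence: float  # model score in [0, 1]
    range_m: float     # estimated distance from the vehicle


# Hypothetical set of classes the central computer cares about
RELEVANT_LABELS = {"vehicle", "pedestrian", "cyclist", "debris"}


def filter_at_edge(detections: list[Detection], min_confidence: float = 0.5,
                   max_range_m: float = 150.0) -> list[Detection]:
    """Keep only detections the central computer needs; drop the rest at the sensor."""
    return [d for d in detections
            if d.label in RELEVANT_LABELS
            and d.confidence >= min_confidence
            and d.range_m <= max_range_m]
```

Forwarding a short list like this instead of full raw frames is where the downstream bandwidth and power savings would come from.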

Higher-resolution sensors are integral to achieving the degree of perception required for level 4+ autonomy. But the sensors handle only part of the processing that autonomy requires; computing power does the rest.

As the industry moves toward the true self-driving of level 4 autonomy and its greater demands, car manufacturers and their suppliers are increasingly coming to terms with the processing power required. Arm is investing in bringing its range of processing options to market, including new high-performance Automotive Enhanced (AE) processors with integrated functional safety.

The company is also working closely with automotive OEMs, Tier-1s, autonomous technology providers, and its extended automotive ecosystem, including key silicon partners capable of meeting those computing requirements. This kind of industry collaboration, recently demonstrated by the formation of the Autonomous Vehicle Computing Consortium, is what’s needed to take autonomous vehicles from prototype to mass deployment.

Robert Day is the Director of Autonomous Vehicles at Arm.


About the Author

Robert Day

Director of Autonomous Vehicles, Arm

Based in San Jose, Calif., Robert Day is the director of autonomous vehicles at Arm, responsible for the definition of Arm-based solutions for the next generation of autonomous vehicle applications.

In this role, Robert is dedicated to understanding the requirements for future autonomous innovation and helping put together solutions and platforms to meet those requirements. By immersing himself in the autonomous-vehicle world, he has accumulated a wealth of knowledge around the technology, issues and potential solutions that will make self-driving vehicles a reality.

His passion for the safe deployment of autonomous vehicles has made him a popular speaker at automotive conferences worldwide, and he has hosted a panel of autonomous-vehicle experts at Arm’s own TechCon conference, where the reality of truly autonomous vehicles was hotly debated.

Prior to Arm, Robert was VP of Marketing at Lynx Software Technologies, where he was responsible for the Lynx portfolio of safety and security solutions, focusing on avionics and automotive applications. Robert started his career as a SCADA engineer and later wrote processor simulators for the popular XRAY debugger at Mentor Graphics. Robert has a BSc in Computer Science from the University of Brighton, UK.