What’s the Difference Between Frame- and Event-Based LiDAR?

What you’ll learn:

- Shortcomings of frame-based sensors like LiDAR.

- How event-based sensors outperform their frame-based cousins thanks to their ultra-low latency.

- What is VoxelFlow?

Leading autonomous-vehicle (AV) companies have mostly been relying on frame-based sensors, such as LiDAR. However, the tech has historically struggled to detect, track, and classify objects quickly and reliably enough to prevent many corner-case collisions, like a pedestrian suddenly appearing from behind a parked vehicle.

Frame-based systems certainly play a part in the advancement of AVs. But consider the harrowing statistics: 80% of all crashes and 65% of all near-crashes involve driver inattention within the three seconds before the event. The industry needs to work toward a faster, more reliable system that addresses safety at short distances, where a collision is most likely.

Frame-based sensors have a role, but they shouldn’t be a one-man show when it comes to AV technology. Enter event-based sensors, a technology being developed for autonomous-driving systems to provide enhanced safety where LiDAR falls short.

How Does LiDAR Fall Short?

LiDAR, or light detection and ranging, is the most prominent frame-based technology in the AV space. It uses invisible laser beams to scan objects. Compared with the human eye, LiDAR scans and detects objects extremely fast. However, in the grand scheme of AVs, when it’s a matter of life or death, even LiDAR systems with state-of-the-art perception-processing algorithms are adequate only at distances beyond 30 to 40 meters. Within that range, where a driver is most likely to crash, they don’t act nearly fast enough.

Generally, automotive cameras operate at 30 frames per second (fps), which introduces a 33-ms process delay per frame. Accurately detecting a pedestrian and predicting his or her path takes multiple passes per frame, so the resulting systems can take hundreds of milliseconds to act. Meanwhile, a vehicle driving at 60 km/h covers about 3.3 meters in only 200 ms. In an especially dense urban setting, that delay is even more dangerous.
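The latency figures above come down to simple arithmetic. The Python sketch below (the function names are illustrative, not from any library) computes the frame period at a given frame rate and the distance a vehicle covers at constant speed during a given processing delay. A 1-ms delay is included alongside the 33-ms and 200-ms cases for comparison.

```python
# Latency arithmetic behind the figures above (illustrative helpers only).


def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def distance_traveled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in meters covered at constant speed while waiting latency_ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    print(f"30-fps frame period: {frame_period_ms(30):.1f} ms")
    for latency_ms in (1, 33, 200):
        d = distance_traveled_m(60, latency_ms)
        print(f"{latency_ms:>3} ms at 60 km/h -> {d:.2f} m")
```

At 60 km/h the 200-ms case works out to about 3.33 m, while a 1-ms delay corresponds to less than 2 cm of travel.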

LiDAR, along with today’s camera-based computer-vision and artificial-intelligence navigation systems, is subject to fundamental speed limits on perception because these systems use this frame-based approach. To put it simply, a frame-based approach is too slow!

Many existing systems actually use cameras and sensors that aren’t much more capable than those that come standard in iPhones, which produce a mere 33,000 light points per frame. A faster sensor modality is needed if we want to significantly reduce process delay and more robustly and reliably support the “downstream” processing algorithms for path prediction, object classification, and threat assessment. What’s needed is a new, complementary sensor system that’s 10X better, generating an accurate 3D depth map within 1 ms rather than tens of milliseconds.

Aside from their limited ability to react fast enough, LiDAR systems are often very costly. Prices range from a couple thousand to hundreds of thousands of dollars, and the cost often falls on the end user. In addition, because the technology is constantly evolving, new sensors are continually hitting the market at premium prices.

LiDAR systems also aren’t the most aesthetically pleasing pieces of equipment, a major concern given that they’re often installed on luxury vehicles. The system needs to be packaged somewhere with a free line of sight to the front of the vehicle, which means it’s typically placed in a box on top of the vehicle's roof. Do you really want to be driving around in your sleek, new sports car, only to have a bulky box attached to the top of it?

Cost and aesthetics are certainly drawbacks of LiDAR systems. Ultimately, though, the underlying issue is that these systems have limited resolution and slow scanning rates that make it impossible to distinguish between a fixed lamp post and a running child. What’s needed is a system with a point count far denser than the tens of thousands provided by LiDAR. In fact, the point density should be in the tens of millions.

Event-Based Sensors Are Driving the Future of AVs

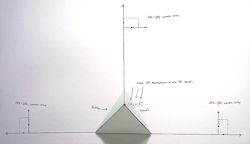

LiDAR struggles within 30 to 40 meters, but event-based sensors thrive in that range. Event-based sensors, such as the VoxelFlow technology currently being developed by Terranet, will be able to classify moving objects at extremely low latency using very little computational power. VoxelFlow produces 10 million 3D points per second, compared with the 33,000 points per frame of the iPhone-class sensors mentioned earlier, and the result is rapid edge detection without motion blur (see figure). This ultra-low latency lets a vehicle handle corner cases by braking, accelerating, or steering around objects that suddenly appear in front of it.
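The two point counts are quoted in different units: points per second for VoxelFlow, points per frame for the iPhone-class sensor. The sketch below puts them on the same footing. It assumes, for illustration only, that the 33,000-point sensor runs at the 30-fps rate cited earlier for automotive cameras; the article doesn't state that sensor's frame rate.

```python
# Normalize the quoted point counts to common units (points per second).

FRAME_SENSOR_POINTS_PER_FRAME = 33_000        # figure quoted in the article
ASSUMED_FRAME_RATE_FPS = 30                   # assumption: not stated for this sensor
EVENT_SENSOR_POINTS_PER_SECOND = 10_000_000   # figure quoted for VoxelFlow


def points_per_second(points_per_frame: float, fps: float) -> float:
    """Convert a per-frame point count to a per-second rate."""
    return points_per_frame * fps


def points_in_window(rate_pts_per_s: float, window_ms: float) -> float:
    """Number of points delivered within a time window at a given rate."""
    return rate_pts_per_s * window_ms / 1000.0


if __name__ == "__main__":
    frame_rate = points_per_second(FRAME_SENSOR_POINTS_PER_FRAME, ASSUMED_FRAME_RATE_FPS)
    ratio = EVENT_SENSOR_POINTS_PER_SECOND / frame_rate
    print(f"frame-based: {frame_rate:,.0f} pts/s")
    print(f"event-based: {EVENT_SENSOR_POINTS_PER_SECOND:,} pts/s ({ratio:.1f}x)")
    for name, rate in (("frame-based", frame_rate),
                       ("event-based", EVENT_SENSOR_POINTS_PER_SECOND)):
        print(f"{name}: {points_in_window(rate, 1):,.0f} points per 1-ms window")
```

Under that assumption, the frame-based sensor delivers about 990,000 points per second, roughly a tenth of the 10 million quoted for VoxelFlow. Within a 1-ms window, that's about 990 points versus 10,000.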

Event-based sensors will be a critical technology for next-generation Level 1-3 advanced driver-assistance systems (ADAS) safety performance, while also helping realize the promise of truly autonomous L4-L5 AV systems. VoxelFlow, for example, consists of three event image sensors distributed around the vehicle and a centrally located continuous laser scanner. Together, they provide a dense 3D map with significantly reduced process delay to meet the real-time constraints of autonomous systems.
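The article doesn’t describe VoxelFlow’s internals. As a generic illustration of what “event-based” means, the sketch below uses the widely described dynamic-vision-sensor pixel model: each pixel reports an event (position, timestamp, polarity) only when its log intensity moves by at least a contrast threshold from the level recorded at its last event, so nothing waits for a frame boundary. The `Event` type, `generate_events` function, and 0.2 threshold are hypothetical, not Terranet’s design. As a simplification, at most one event is emitted per input sample, even when the change spans several thresholds.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Event:
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = brighter, -1 = darker


def generate_events(samples: Iterable[Tuple[int, int, int, float]],
                    threshold: float = 0.2) -> List[Event]:
    """Turn per-pixel intensity samples (x, y, t_us, intensity) into events.

    Hypothetical generic model: a pixel fires when its log intensity differs
    from its stored reference by at least `threshold`; the reference is then
    reset to the current level. Unchanged pixels produce no output at all.
    """
    reference: Dict[Tuple[int, int], float] = {}
    events: List[Event] = []
    for x, y, t_us, intensity in samples:
        log_i = math.log(intensity)
        key = (x, y)
        if key not in reference:
            reference[key] = log_i  # first sample only sets the reference
            continue
        delta = log_i - reference[key]
        if abs(delta) >= threshold:
            events.append(Event(x, y, t_us, 1 if delta > 0 else -1))
            reference[key] = log_i
    return events


if __name__ == "__main__":
    samples = [(0, 0, 0, 100.0), (0, 0, 100, 100.0), (0, 0, 200, 150.0),
               (1, 0, 0, 50.0), (1, 0, 250, 50.0)]
    for ev in generate_events(samples):
        print(ev)
```

In this toy input, only the pixel whose brightness changes (from 100 to 150) produces an event, timestamped when the change occurs. The static pixel stays silent, which is why event output is sparse and can be acted on without waiting for the next frame.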

Event-based sensor systems are automatically and continuously calibrated to resist shock, vibration, and blur, while also providing the angular and range resolution required for ADAS and AV systems. They have also been shown to perform well in heavy rain, snow, and fog, whereas LiDAR systems degrade in these conditions due to excessive backscatter.

Because LiDAR is the industry standard and has been proven to work well at ranges beyond 40 meters, even though it costs a fortune, event-based sensors will complement the technology, as well as other radar and camera systems. Raw data from those sensors can then be efficiently overlaid on the event-based sensor’s 3D mesh map. This will significantly improve detection and, most importantly, enhance safety and reduce collisions.

Event-based sensors are at different stages of development, and we expect to see a prototype on vehicles by next year. From there, we’ll need to industrialize the system and make it smaller so that it meets the aesthetic expectations of today’s drivers. However, there’s no doubt that event-based sensors will soon be a necessary component of AV systems.

About the Author

Pär-Olof Johannesson

CEO, Terranet

Pär-Olof Johannesson led the IPO on Nasdaq First North in 2017 and has been a seasoned entrepreneur and tech executive for 20+ years. Pär-Olof’s previous positions include Operating Venture Partner at Mankato Investments, BA Director at Flextronics, Area Manager at ABB, Project Manager at Ericsson, and Attaché at the Swedish Ministry for Foreign Affairs. He’s been an instructor at Stanford, MIT, and Lund School of Economics and Management and is an avid skier and tennis player (amateur level).