NTSB Report Sheds Light on Fatal Crash Involving Tesla Driver Assistance System

On Saturday afternoon, May 7, 2016, a 2015 Tesla Model S 70D passenger car, traveling eastbound on US Highway 27A near Williston, Fla., struck the right side of a 53-foot refrigerated semitrailer. Before the collision, the truck was making a left turn from westbound US 27A across the two eastbound travel lanes onto a local paved road. After the passenger car struck and crossed underneath the semitrailer, it traveled toward the right roadside, departing the roadway about 326 feet from the area of impact. The vehicle continued through a drainage culvert and two wire fences, then struck and broke a utility pole, rotated counterclockwise and came to rest perpendicular to the highway in the front yard of a private residence. Impact with the right side of the semitrailer sheared off the roof of the passenger car. The driver and sole occupant of the passenger car died in the crash.

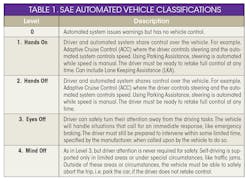

The cause of the accident hinges on the level of autonomous operation provided by the Tesla Model S. Table 1 lists the various levels of autonomous operation as defined by the Society of Automotive Engineers (SAE).

The NTSB does not speculate on why the car failed to detect the tractor-trailer crossing the highway. But it appears that drivers have mistaken Tesla's driver assistance system for a Level 5 (fully autonomous) system, when it is actually closer to Level 2 or even Level 1. This view draws on the findings of the NTSB's crash investigation.
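The SAE levels can be captured as a small enumeration. The Python sketch below is illustrative only. The `classify` helper and its yes/no questions are a simplification assumed for this example, not the SAE's formal decision procedure. It shows why a feature set that both steers and controls speed, but still needs a supervising driver, lands at Level 2.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def classify(*, steers: bool, controls_speed: bool, driver_supervises: bool,
             driver_is_fallback: bool = True, limited_domain: bool = True) -> SAELevel:
    """Hypothetical, simplified mapping of a feature set onto an SAE level."""
    if not (steers or controls_speed):
        return SAELevel.NO_AUTOMATION
    if driver_supervises:
        # Steering *or* speed alone is Level 1; both together is Level 2.
        if steers and controls_speed:
            return SAELevel.PARTIAL_AUTOMATION
        return SAELevel.DRIVER_ASSISTANCE
    if driver_is_fallback:
        # System drives, but a human must be ready to take over on request.
        return SAELevel.CONDITIONAL_AUTOMATION
    # No human fallback: Level 4 within a limited domain, Level 5 everywhere.
    return SAELevel.HIGH_AUTOMATION if limited_domain else SAELevel.FULL_AUTOMATION


# A lane-keeping plus adaptive-cruise system that requires driver supervision:
print(classify(steers=True, controls_speed=True, driver_supervises=True).name)
```

With the NTSB's description of Autopilot as input (steering plus speed control with the driver still responsible), the sketch prints `PARTIAL_AUTOMATION`.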

The NTSB report explained that the Tesla Model S is equipped with a suite of driver assistance system (DAS) features that includes adaptive cruise control (ACC), lane keeping assistance, and automatic emergency braking (AEB). The Tesla Autopilot feature combines data obtained from optical, radar, and ultrasonic sensors on the vehicle to build an internal model of the nearby surroundings including both stationary and non-stationary objects.

Using this information, together with parametric data concerning the current position and motion of the Tesla vehicle, Autopilot's Autosteer and Traffic-Aware Cruise Control features work together to compute the wheel torque, wheel braking, and steering inputs required to keep the vehicle in its driving lane when cruising at a set speed on highways that have a center divider and clear lane markings.

The Tesla Model S driver assistance system contains three main subsystems:

- Sensor suite to assess the nearby environment

- Servo suite to provide thrust, braking, and steering inputs

- Data processing suite to collect input data from the sensors and compute instructions to deliver to the servos.

Information travels between these three subsystems using multiple CAN buses. These subsystems provide the functions that implement Tesla’s Autopilot DAS, including:

- Performance monitoring including vehicle speed, acceleration, and direction of travel.

- Control including steering angle and motor torque.

- Processing to build a model of the external environment and, together with servo commands specified by the driver, to derive the servo instructions used to control the vehicle.
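The division of labor among the sensor, processing, and servo suites can be pictured as a sense, process, actuate loop. The following Python sketch is a toy model under stated assumptions. The `SensorReading` and `ServoCommand` types, the simple proportional gains, and the 2-second following gap are all hypothetical and do not reflect Tesla's actual control law.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorReading:
    """Output of the (hypothetical) sensor suite for one control cycle."""
    lane_offset_m: float              # lateral offset from lane center, + = right
    speed_mps: float                  # current vehicle speed
    lead_distance_m: Optional[float]  # None when no lead vehicle is detected


@dataclass
class ServoCommand:
    """Instructions for the (hypothetical) servo suite."""
    steering_deg: float
    accel_mps2: float                 # positive = thrust, negative = braking


# Hypothetical tuning constants, chosen only for illustration.
K_STEER = 2.0      # degrees of steering per meter of lane offset
K_SPEED = 0.5      # m/s^2 per m/s of speed error
K_GAP = 0.3        # m/s^2 per meter of following-gap error
TIME_GAP_S = 2.0   # desired following time gap


def process(reading: SensorReading, set_speed_mps: float) -> ServoCommand:
    """Processing suite: combine sensor data with the driver's set speed."""
    # Lane keeping: steer back toward the lane center.
    steering = -K_STEER * reading.lane_offset_m
    # Cruise: track the driver's set speed.
    accel = K_SPEED * (set_speed_mps - reading.speed_mps)
    # Following: a lead vehicle closer than the desired gap lowers the demand.
    if reading.lead_distance_m is not None:
        desired_gap_m = TIME_GAP_S * reading.speed_mps
        accel = min(accel, K_GAP * (reading.lead_distance_m - desired_gap_m))
    return ServoCommand(steering_deg=steering, accel_mps2=accel)


cmd = process(SensorReading(lane_offset_m=0.5, speed_mps=30.0, lead_distance_m=None), 30.0)
print(cmd)
```

Note that in this sketch the car can only slow for an object the sensor suite actually reports; if `lead_distance_m` is `None`, the loop simply holds the set speed.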

The Tesla Model S uses three types of sensors for measuring the relative position of objects in the nearby environment: two electromagnetic-based (radar and visible light) and one sound-based (ultrasonic). CAN buses move data between the various ECUs that service these and other vehicle subsystems, such as motor control and the airbags. Each ECU forms a node on a CAN bus. Any node can transmit a message asynchronously, and every other node on that bus receives it and uses the message identifier to decide whether to act on it. A central node, the Gateway ECU, is a communications hub that distributes messages between the various CAN buses.
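The broadcast-and-filter behavior of a CAN bus, and a gateway's role in relaying traffic between buses, can be modeled in a few lines. This Python sketch is a simplified model, not an implementation of the CAN protocol. It ignores arbitration timing, framing, and error handling, and the bus names, message IDs, and routing table are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class CanFrame:
    can_id: int   # 11-bit identifier; on a real bus, lower IDs win arbitration
    data: bytes   # classic CAN carries at most 8 data bytes


@dataclass
class Node:
    """An ECU attached to a bus, with a simple acceptance filter."""
    name: str
    accepted_ids: Set[int]
    inbox: List[CanFrame] = field(default_factory=list)

    def receive(self, frame: CanFrame) -> None:
        # Every node sees every frame; it keeps only the IDs it cares about.
        if frame.can_id in self.accepted_ids:
            self.inbox.append(frame)


class Bus:
    def __init__(self, name: str) -> None:
        self.name = name
        self.nodes: List[Node] = []
        self.gateway: Optional["Gateway"] = None

    def attach(self, node: Node) -> None:
        self.nodes.append(node)

    def broadcast(self, frame: CanFrame, via_gateway: bool = False) -> None:
        for node in self.nodes:
            node.receive(frame)
        # Frames originating on this bus are offered to the gateway for relaying.
        if self.gateway is not None and not via_gateway:
            self.gateway.forward(self.name, frame)


class Gateway:
    """Relays selected frames between buses according to a routing table."""

    def __init__(self, buses: List[Bus], routes: Dict[Tuple[str, int], List[str]]) -> None:
        self.buses = {bus.name: bus for bus in buses}
        self.routes = routes
        for bus in buses:
            bus.gateway = self

    def forward(self, source: str, frame: CanFrame) -> None:
        for dest in self.routes.get((source, frame.can_id), []):
            self.buses[dest].broadcast(frame, via_gateway=True)


# Hypothetical topology: a sender on a "chassis" bus, a display ECU on a "body" bus.
chassis, body = Bus("chassis"), Bus("body")
display = Node("display", accepted_ids={0x2A0})
body.attach(display)
gateway = Gateway([chassis, body], routes={("chassis", 0x2A0): ["body"]})
chassis.broadcast(CanFrame(0x2A0, b"\x01"))   # routed: relayed to the body bus
chassis.broadcast(CanFrame(0x123, b"\x02"))   # no route: stays on the chassis bus
print(len(display.inbox))
```

Only the routed frame reaches the display ECU, so the sketch prints `1`.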

Radar System

The vehicle employs a Bosch active radar system using a multi-channel radar transceiver mounted behind the vehicle’s front grille. Time of flight between the broadcast of a series of electromagnetic impulses and the reception of the reflected energy from nearby objects provides a range vector. Radar sensing is limited to a fan-shaped region forward of the vehicle with a specified range of 525 ft. under ideal conditions.
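Because the emitted energy travels out to the object and back, the range follows from the round-trip time of flight as r = c·t/2. A minimal Python sketch, assuming free-space propagation at the speed of light. The function names are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0   # exact, by definition of the meter
METERS_PER_FOOT = 0.3048             # exact, international foot


def radar_range_m(round_trip_s: float) -> float:
    """Range to a reflector from the round-trip time of flight."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_time_s(range_m: float) -> float:
    """Inverse: round-trip time of flight for a reflector at range_m."""
    return 2.0 * range_m / SPEED_OF_LIGHT_MPS


# The specified 525-ft maximum range corresponds to about 1.07 microseconds.
max_range_m = 525 * METERS_PER_FOOT
print(f"{round_trip_time_s(max_range_m) * 1e6:.2f} us")
```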

The Bosch radar system also performs fault analysis and reports certain faults to the Autopilot DAS, including:

- Sensor adjustment/alignment

- ECU failure

- CAN bus failure

- Message handshaking problems

- Invalid external system/sensor data

- Parameter values out-of-range
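One common way for a sensor ECU to report several concurrent fault categories in a compact message is a bit field. The Python sketch below encodes the six categories listed above as flags. The bit assignments and the `decode_faults` helper are hypothetical; the report does not describe the actual Bosch message format.

```python
from enum import IntFlag
from typing import List


class RadarFault(IntFlag):
    # Hypothetical bit assignments for the fault categories listed above.
    ALIGNMENT = 1 << 0
    ECU_FAILURE = 1 << 1
    CAN_BUS_FAILURE = 1 << 2
    HANDSHAKE_ERROR = 1 << 3
    INVALID_EXTERNAL_DATA = 1 << 4
    PARAMETER_OUT_OF_RANGE = 1 << 5


def decode_faults(status_byte: int) -> List[str]:
    """Return the names of all fault flags set in a raw status byte."""
    flags = RadarFault(status_byte & 0x3F)   # ignore undefined high bits
    return [fault.name for fault in RadarFault if fault in flags]


print(decode_faults(0b000101))   # ['ALIGNMENT', 'CAN_BUS_FAILURE']
```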

The Tesla Model S optical system is a passive system that employs a single 1-megapixel monochromatic camera mounted in the rear-view-mirror area of the front windshield, aimed to capture light from a region ahead of the vehicle. This data is routed through a dedicated camera ECU that can store images in volatile memory and pass them on to a visual processing unit produced by Mobileye. That unit preprocesses the incoming optical data and fuses it with data received from the Bosch radar system. The results of this data fusion, together with image processing that extracts features corresponding to objects in the local environment, are communicated to other components of the Tesla Model S Autopilot system, including the Gateway ECU.
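The report does not say how the camera and radar data are combined. As one textbook illustration of the general idea, two independent range estimates of the same object can be fused by inverse-variance weighting, which favors the more precise sensor. This Python sketch uses that technique purely as an example. The variances are made up, and nothing here describes the Mobileye algorithm.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g., range to an object in meters
    variance: float  # uncertainty of that estimate, in m^2


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Inverse-variance weighted fusion of two independent estimates."""
    wa, wb = 1.0 / a.variance, 1.0 / b.variance
    value = (wa * a.value + wb * b.value) / (wa + wb)
    return Estimate(value=value, variance=1.0 / (wa + wb))


radar = Estimate(value=50.0, variance=0.25)   # hypothetical: radar range is precise
camera = Estimate(value=54.0, variance=4.0)   # hypothetical: monocular range is noisier
fused = fuse(radar, camera)
print(round(fused.value, 3), round(fused.variance, 3))   # 50.235 0.235
```

The fused variance is smaller than either input's, which is the usual motivation for combining sensors.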

The Mobileye system performs fault analysis and reports certain faults to the Autopilot system. These include messages related to radar low visibility or misalignment, image quality issues (focus, contrast, etc.), image obstruction issues (blockages, sun blindness, etc.), and image calibration issues.

The Tesla Model S ultrasonic system is an active system that broadcasts and senses high-frequency sound waves using 12 ultrasonic transducers distributed around the front and rear bumpers of the vehicle. The time of flight between the broadcast of a sound impulse and the reception of its reflection from a nearby object is used to compute short-range distances. Data from the ultrasonic sensors are used primarily to track objects traveling at low relative speeds in close proximity to the vehicle.
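Ultrasonic ranging uses the same round-trip relation as radar but with the speed of sound instead of the speed of light. The far slower propagation is why it suits short distances. A Python sketch, assuming dry air and the common linear approximation of about 331.3 + 0.606·T m/s for air temperature T in °C. The function names are illustrative.

```python
def speed_of_sound_mps(temp_c: float) -> float:
    """Linear approximation for the speed of sound in dry air."""
    return 331.3 + 0.606 * temp_c


def ultrasonic_distance_m(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance to a reflector from the round-trip echo time."""
    return speed_of_sound_mps(temp_c) * echo_time_s / 2.0


# A 6-ms echo at 20 °C corresponds to roughly one meter.
print(f"{ultrasonic_distance_m(0.006):.3f} m")   # 1.030 m
```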

Event Data Recorder

Federal regulation 49 CFR 563 specifies the data collection, storage, and retrievability requirements for vehicles equipped with event data recorders. The regulation does not require vehicles to be equipped with event data recorders; installing one is voluntary. It also requires manufacturers of vehicles so equipped to make tools and/or methods for retrieving data from an event data recorder commercially available. The Tesla Model S involved in this crash did not contain an event data recorder, nor was it required to by regulation. As a result, the data recorded by the vehicle was not recorded in accordance with this regulation. Further, there is no commercially available tool for retrieving and reviewing the ECU data. NTSB investigators had to rely on Tesla to provide the data in engineering units using proprietary manufacturer software.

The car stores non-geo-located data in non-volatile memory on a removable SD (Secure Digital) card in the Gateway ECU. This SD card maintains a complete record of all stored data for the lifetime of the vehicle, including general vehicle state information. This data is continually written to the Gateway ECU as long as the car is on. Typical parameters include:

- Steering angle

- Accelerator pedal position

- Driver brake pedal application

- Vehicle speed

- Autopilot feature states

- Lead vehicle distance

Some of these parameters are recorded at 1 Hz; others are recorded only when a change of state occurs. All parameters are timestamped with their time of arrival at the Gateway ECU, using a GPS-derived clock.
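The two recording policies, fixed-rate sampling for some parameters and change-of-state logging for others, can be sketched as follows. This is a hypothetical Python model. The `ParameterLogger` class and its interface are illustrative, and a plain float stands in for the GPS-derived arrival timestamp.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set, Tuple


@dataclass
class ParameterLogger:
    sample_period_s: float = 1.0                       # 1 Hz for periodic parameters
    periodic: Set[str] = field(default_factory=set)    # names sampled at a fixed rate
    records: List[Tuple[float, str, Any]] = field(default_factory=list)
    _last_value: Dict[str, Any] = field(default_factory=dict)
    _last_sample: Dict[str, float] = field(default_factory=dict)

    def on_arrival(self, t: float, name: str, value: Any) -> None:
        """Called when a value reaches the gateway; t is its arrival timestamp."""
        if name in self.periodic:
            last = self._last_sample.get(name)
            if last is None or t - last >= self.sample_period_s:
                self.records.append((t, name, value))
                self._last_sample[name] = t
        elif self._last_value.get(name, object()) != value:
            # Change-of-state parameter: record only when the value differs.
            self.records.append((t, name, value))
        self._last_value[name] = value


log = ParameterLogger(periodic={"VEHICLE SPEED"})
for i in range(10):                        # speed arrives every 0.5 s
    log.on_arrival(i * 0.5, "VEHICLE SPEED", 70 + i)
log.on_arrival(0.2, "CRUISE STATE", "ENABLED")
log.on_arrival(0.7, "CRUISE STATE", "ENABLED")   # unchanged: not recorded
log.on_arrival(3.0, "CRUISE STATE", "STANDBY")
print(len(log.records))
```

Here five of the ten speed values survive the 1-Hz sampling and two of the three cruise-state values are new states, so the sketch prints `7`.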

The vehicle also supports the acquisition and storage of data related to forward collision warning (FCW) and automatic emergency braking (AEB) events. Typical parameters document the vehicle, radar, camera, and internal DAS state associated with a triggering FCW/AEB event. This data is recorded as a snapshot triggered by a specific event and is GPS-timestamped with its time of arrival at the Gateway ECU.

As part of FCW and AEB event data, the vehicle supports the acquisition and storage of image data captured by the forward-facing camera. This system can buffer eight frames of image data sampled at one-second intervals centered on a triggering FCW/AEB event. These frames are initially captured in volatile memory in the Camera ECU and then stored in non-volatile memory at the end of the drive. From there they are transferred via CAN bus to the Gateway ECU, where they are stored on the internal SD card. This image data is not timestamped.
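Capturing frames centered on a trigger means some frames must already be in memory when the event occurs, which a ring buffer handles naturally. The Python sketch below assumes, for illustration only, that the eight frames split evenly into four up to the trigger and four after it. The report does not give the actual split, and the `EventFrameCapture` class is hypothetical.

```python
from collections import deque
from typing import Any, List, Optional


class EventFrameCapture:
    def __init__(self, before: int = 4, after: int = 4) -> None:
        self.pre: deque = deque(maxlen=before)   # rolling buffer of recent frames
        self.after = after
        self.post: List[Any] = []
        self.snapshot: Optional[List[Any]] = None
        self.triggered = False

    def add_frame(self, frame: Any) -> None:
        """Called once per second with the newest camera frame."""
        if not self.triggered:
            self.pre.append(frame)               # oldest frame drops out automatically
        elif len(self.post) < self.after:
            self.post.append(frame)
            if len(self.post) == self.after:
                # Snapshot complete; the vehicle would later persist it.
                self.snapshot = list(self.pre) + self.post

    def trigger(self) -> None:
        """Called when an FCW/AEB event fires."""
        self.triggered = True


cap = EventFrameCapture()
for second in range(12):
    cap.add_frame(second)
    if second == 6:
        cap.trigger()
print(cap.snapshot)   # [3, 4, 5, 6, 7, 8, 9, 10]
```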

Data from the SD card is intermittently uploaded to Tesla servers over a data link. Camera data becomes available only after it has been transferred to the Gateway ECU via CAN bus, a process that can take over 40 minutes. In general, data stored on board the vehicle will include information not present on Tesla servers. Specifically, any data stored since the last automatic upload exists only on the vehicle itself and must be recovered by forcing an over-the-air upload.

Besides the aforementioned data, the vehicle supports the upload of anonymized geo-location data to Tesla for mapping and Autopilot feature development efforts. This data is not stored on-board the Tesla vehicle.

Data Recovery

Approximately 510 MB of data was recovered from the vehicle by removing the GTW internal SD card and duplicating its contents. The data comprised 87 files organized in eight folders and stored in an MS Windows-readable format, including eight image files from the forward-facing camera. A small subset of this data was stored in ASCII format. But the vast majority, including the vehicle log files containing all of the parametric data discussed in this report, was stored in a proprietary binary format that required the manufacturer's in-house software tools for conversion into engineering units.

The Tesla Model S involved in this crash was capable of recording hundreds of parameters. Of these, Tesla converted into engineering units the subset that the NTSB determined to be relevant to the investigation. This was accomplished by performing a database query on vehicle data on Tesla servers that mirrored the information recovered from the SD card. The query yielded parametric data for 53 distinct variables, and text-based information related to 42 distinct error messages, covering a 42-hour period on May 7, 2016.

In the following discussion, parameter names are given in capital letters, as are the states of discrete parameters, those that take on only a finite list of possible states.

Certain parameters, such as VEHICLE SPEED, are intended to represent a physical measurement; these are referred to as continuous parameters. Other parameters, such as CRUISE STATE, are intended to represent one out of a finite number of possible states; these are referred to as discrete parameters.

For the vast majority of the trip, AUTOPILOT HANDS ON STATE remained at HANDS REQUIRED NOT DETECTED. Seven times during the trip, it transitioned to VISUAL WARNING. On six of these occasions, it transitioned further to CHIME 1 before briefly transitioning to HANDS REQUIRED DETECTED for 1 to 3 seconds. In total, the Autopilot system actively controlled the car, with both lane assist and adaptive cruise control engaged, for approximately 37 minutes of the trip.
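Summaries such as "seven visual warnings, six of which escalated to a chime" come from scanning the discrete hands-on state log for transitions. A hypothetical Python sketch of that scan, using the state names quoted in the report and an invented example sequence that is not the actual crash data:

```python
from typing import Iterable, Optional, Tuple


def count_escalations(states: Iterable[str]) -> Tuple[int, int]:
    """Count entries into VISUAL WARNING and how many escalated to CHIME 1.

    `states` is the sequence of logged state values in time order.
    """
    warnings = chimes = 0
    in_episode = escalated = False
    previous: Optional[str] = None
    for state in states:
        if state == "VISUAL WARNING" and previous != "VISUAL WARNING":
            warnings += 1
            in_episode, escalated = True, False
        elif state == "CHIME 1" and in_episode and not escalated:
            chimes += 1
            escalated = True
        elif state not in ("VISUAL WARNING", "CHIME 1"):
            in_episode = False   # episode ends once the state leaves the warning chain
        previous = state
    return warnings, chimes


# Invented example log, for illustration only.
example = [
    "HANDS REQUIRED NOT DETECTED", "VISUAL WARNING", "CHIME 1",
    "HANDS REQUIRED DETECTED", "HANDS REQUIRED NOT DETECTED",
    "VISUAL WARNING", "HANDS REQUIRED DETECTED",
]
print(count_escalations(example))   # (2, 1)
```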

Twice during the trip, the data showed indications consistent with the vehicle coming to a stop or near stop. At these times VEHICLE SPEED dropped below 7 mph, CRUISE STATE transitioned from ENABLED to STANDBY, AUTOPILOT HANDS ON STATE transitioned to HANDS NOT REQUIRED, and AUTOPILOT STATE oscillated between AVAILABLE and UNAVAILABLE. In both cases, the driver pressed the accelerator pedal to manually accelerate the vehicle before AUTOPILOT STATE and AUTOPILOT HANDS ON STATE transitioned to states consistent with Autopilot operation with lane assist.

After the second stop or near stop, CRUISE SET increased in steps from 47 mph to 65 mph, then to 70 mph, and finally to 74 mph, where it remained for approximately two minutes up to and just after the crash. VEHICLE SPEED was 74 mph at the time of the crash. LEAD VEHICLE DISTANCE indicated the presence of a lead vehicle on six separate occasions during the accident trip. The last occasion came approximately two minutes before the crash, when the lead vehicle distance dropped from its constant value of 204.6 m (indicating no vehicle detected) to 18.6 m (61 feet), then increased and returned to no vehicle detected over the course of 30 seconds.
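Two details in the lead-vehicle distance readings are worth making explicit. The constant 204.6 value acts as a "no vehicle detected" sentinel rather than a real distance, and the values are in meters. A small Python sketch of interpreting such readings; treating an exact match to 204.6 as the sentinel is an assumption made for illustration.

```python
from typing import Optional

NO_LEAD_SENTINEL = 204.6      # logged when no lead vehicle is detected (assumed exact)
METERS_TO_FEET = 1 / 0.3048   # exact, international foot
MPH_TO_MPS = 0.44704          # exact: 1 mph = 1609.344 m / 3600 s


def lead_distance(raw: float) -> Optional[float]:
    """Return the lead-vehicle distance in meters, or None for the sentinel."""
    return None if raw == NO_LEAD_SENTINEL else raw


d = lead_distance(18.6)
print(f"{d} m = {d * METERS_TO_FEET:.0f} ft")   # 18.6 m = 61 ft
print(lead_distance(204.6))                     # None
print(f"74 mph = {74 * MPH_TO_MPS:.1f} m/s")    # 74 mph = 33.1 m/s
```

The last line puts the recorded 74-mph speed in the same units: the car was covering about 33 m every second.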

Converted data indicates that the headlights were not on at the time of the collision. HEADLIGHT STATUS is a discrete parameter that changes state only when an activation or deactivation event occurs, and it holds its last known state at all other times. HEADLIGHT STATUS was last reported as OFF approximately six hours before the beginning of the accident trip, so it retained that state through the crash.
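Because a change-of-state parameter holds its last known value, its state at any instant is found by looking up the most recent change at or before that instant. A Python sketch using binary search. The `state_at` function is illustrative, and the timestamps below are invented rather than taken from the crash data.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple


def state_at(changes: List[Tuple[float, str]], t: float) -> Optional[str]:
    """Return the last known state at time t from a time-sorted change log.

    Returns None if t precedes the first recorded change.
    """
    times = [ts for ts, _ in changes]
    i = bisect_right(times, t)
    return changes[i - 1][1] if i > 0 else None


# Hypothetical log: times in hours relative to an arbitrary origin.
headlight_log = [(0.0, "ON"), (2.5, "OFF")]
print(state_at(headlight_log, 9.0))   # OFF: no later change, so the last state holds
```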

At the NTSB's request, Tesla performed a supplemental database query to determine whether any status messages indicated that AEB or FCW were disabled during the accident drive; no such messages were identified. Tesla reported that there was no indication in the recorded data of an FCW event, AEB event, or any other event indicating detection of an in-path stationary object at or just prior to the time of the crash.

Glossary

ACC, Adaptive Cruise Control

AEB, Automatic Emergency Braking

CAN, Controller Area Network

DAS, Driver Assistance System

ECU, Electronic Control Unit

FCW, Forward Collision Warning

GTW, Gateway ECU

About the Author

Sam Davis

Sam Davis was the editor-in-chief of Power Electronics Technology magazine and website, which is now part of Electronic Design. He has 18 years of experience in electronic engineering design and management, six years in public relations, and 25 years as a trade press editor. He holds a BSEE from Case-Western Reserve University, and did graduate work at the same school and UCLA. Sam was the editor for PCIM, the predecessor to Power Electronics Technology, from 1984 to 2004. His engineering experience includes circuit and system design for Litton Systems, Bunker-Ramo, Rocketdyne, and Clevite Corporation. Design tasks included analog circuits, display systems, power supplies, underwater ordnance systems, and test systems. He also served as a program manager for a Litton Systems Navy program.

Sam is the author of Computer Data Displays, a book published by Prentice-Hall in the U.S. and Japan in 1969. He is also a recipient of the Jesse Neal Award for trade press editorial excellence, and has one patent for naval ship construction that simplifies electronic system integration.
