Thermal Imaging Will Make Autonomous Vehicles Safer and More Affordable

For advanced driver-assistance systems (ADAS) and autonomous-vehicle (AV) platforms to become the future of driving, those systems must be prepared for all driving conditions. The road is full of complex, unpredictable situations, and cars must be equipped with effective, intelligent sensor systems that are not only affordable for mass production, but capable of collecting and interpreting as much information as possible to ensure the artificial intelligence controlling a vehicle makes the right decision every time.

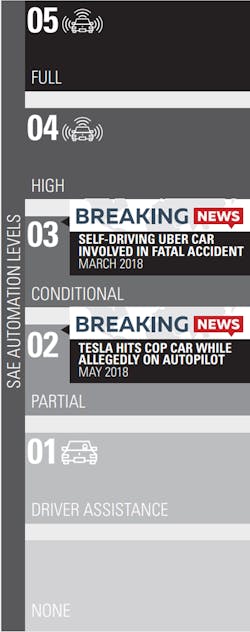

However, the sensor technology currently deployed on test roads around the world doesn’t fully address the requirements for SAE automation level 3 and above. Truly safe ADAS and AV platforms require sensors that deliver scene data adequate for detection and classification algorithms to navigate autonomously under all conditions, as SAE automation level 5 demands (Fig. 1) [1]. This is a challenging requirement for engineers and developers to address.

1. The SAE automation levels provide a classification system for self-driving cars.

Visible cameras, sonar, and radar are already in use on production vehicles at SAE automation level 2, and test platforms at SAE automation levels 3 and 4 have added light detection and ranging (LiDAR) to their sensor suites, but that’s not enough. These technologies cannot detect all important roadside data in all conditions, nor can they provide the data redundancy required to ensure total safety.

Thermal is the Missing Link

Events like last year’s tragic Uber accident in Arizona show how hard it is for AV systems to “see” and react to pedestrians in all conditions, be it on a dark country road or in a cluttered city environment, especially in thick fog or blinding sun glare. In these uncommon but very real scenarios, thermal cameras can be the most effective sensor for quickly identifying and classifying potential hazards, both near and far, so that the vehicle can react accordingly.

Classification is especially challenging for visible cameras in poor lighting, nighttime driving, sun glare, and poor weather (Fig. 2). Because thermal sensors detect longer wavelengths of the electromagnetic spectrum than visible cameras do, they are unaffected by night or daylight when detecting and reliably classifying potential road hazards such as vehicles, people, bicyclists, animals, and other objects, even from up to 200 meters away.

2. A wide-FOV FLIR ADK classified a person at 280 feet, twice the needed stopping distance, in the recreation of an Uber accident in Tempe, Arizona.

Furthermore, thermal cameras provide data that is redundant with, yet independent of, the data from visible cameras, LiDAR, and radar. For example, a radar or LiDAR return from a pedestrian can be masked by the competing return from a nearby vehicle or from other objects in a cluttered environment. If a pedestrian is crossing between two cars or is partially obscured by foliage, there will be little to no reflected signal with which to detect the pedestrian, or the reflected data could confuse the intelligent systems that guide the vehicle’s movements.

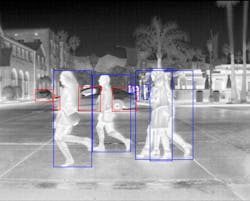

In such cases, thermal cameras can see through light foliage by detecting the heat of a person or animal against the surrounding environment. This distinct advantage, combined with machine-learning classification, makes a person or animal literally stand out from the background so that the vehicle can behave appropriately (Fig. 3).
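The idea of a warm body standing out from a cooler scene can be illustrated with a toy example. The sketch below is not FLIR's method or any production pipeline; it simply flags pixels that are warmer than the scene's median by a chosen margin. The function name `warm_pixels`, the 5 °C margin, and the 4x4 grid of temperatures are all invented for illustration, and a real system would pass the image to a trained classifier rather than rely on a fixed threshold.

```python
from statistics import median


def warm_pixels(frame, margin_c=5.0):
    """Return (row, col) positions at least margin_c degrees C above the scene median.

    `frame` is a list of rows of apparent temperatures in degrees C.
    The median stands in for the background temperature (an assumption
    that only holds when the warm object covers a minority of the scene).
    """
    background = median(t for row in frame for t in row)
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, t in enumerate(row)
        if t - background >= margin_c
    ]


# Hypothetical 4x4 scene: cool foliage around a warm 2x2 region.
SCENE = [
    [12.0, 12.5, 12.1, 11.9],
    [12.2, 30.5, 31.0, 12.0],
    [12.1, 31.2, 30.8, 12.3],
    [11.8, 12.4, 12.2, 12.1],
]

if __name__ == "__main__":
    print(warm_pixels(SCENE))
```

Using the median rather than the mean keeps the background estimate from being pulled upward by the warm region itself.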

3. ADAS and AV sensor suite.

Thermal Cameras are Much More than “Night Vision”

Thermal cameras are also distinct from low-light visible cameras, or what’s known as “near-IR” cameras, which require some visible light to operate. Often, these cameras have IR LEDs that illuminate the immediate area in front of the camera. Although this illumination is effective up to about 165 feet (50 meters), roughly the range of a typical vehicle’s headlights, that range drastically limits the reaction time available for a typical passenger car to come to a full stop and prevent an accident.

For example, a typical passenger car traveling 65 miles per hour down a rural highway on a rainy night will need more than 50 meters to stop, even if it is controlled by AI with a nearly instant reaction time. Combining thermal imaging with LiDAR and radar can significantly outperform low-light visible cameras while providing additional redundant and consistent data in all lighting conditions (Fig. 4).
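The 50-meter claim can be checked with a back-of-the-envelope calculation. The sketch below uses the standard constant-deceleration model, d = v·t + v²/(2μg), where t is the reaction time and μ is the tire-road friction coefficient. The friction values (0.7 for dry pavement, 0.4 for wet) are illustrative assumptions rather than figures from this article, and the function name is hypothetical.

```python
MPH_TO_MPS = 0.44704  # exact: 1609.344 m per mile / 3600 s per hour
G = 9.81              # gravitational acceleration, m/s^2


def stopping_distance_m(speed_mph, friction, reaction_time_s=0.0):
    """Distance in meters to stop from speed_mph under constant deceleration.

    friction is the assumed tire-road friction coefficient (dimensionless).
    reaction_time_s defaults to 0 to model a "nearly instant" AI reaction.
    """
    v = speed_mph * MPH_TO_MPS
    reaction_distance = v * reaction_time_s
    braking_distance = v ** 2 / (2 * friction * G)
    return reaction_distance + braking_distance


if __name__ == "__main__":
    # Friction coefficients below are assumptions chosen for illustration.
    for label, mu in [("dry, mu=0.7", 0.7), ("wet, mu=0.4", 0.4)]:
        print(f"{label}: {stopping_distance_m(65, mu):.1f} m")
```

Under these assumptions, braking alone takes about 61 m on dry pavement and about 108 m on wet pavement at 65 mph, so both cases exceed the 50-meter illumination range even with zero reaction time.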

4. Thermal cameras see heat, reducing the impact of occlusion on classification of pedestrians.

Bringing Thermal to the Masses with Affordable Cameras

Mass adoption of SAE automation level 3 (conditional automation) and above depends on affordable sensor technologies, the proper computing power required to process the incoming sensor data, and the artificial intelligence needed to execute driving commands that deliver safe and reliable transportation in real-world conditions.

A common misconception is that thermal sensors, with their background in military use, are too expensive for automotive integration on a mass scale. Thanks to advances in thermal-imaging technology, improved manufacturing techniques, and increased manufacturing volume, it’s becoming possible to mass-produce affordable thermal sensors for SAE automation level 2 and higher.

FLIR Systems has already solved the problem of mass-producing thermal sensors through its Lepton thermal camera, having produced nearly two million units in the last few years alone. These lessons can help the industry quickly ramp up production on a global scale. Moreover, FLIR has delivered more than 500,000 automotive-qualified thermal sensors through its tier-one auto supplier for nighttime detection systems on luxury automobiles.

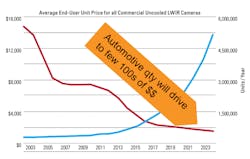

Until recently, thermal cameras with VGA resolution or higher, the baseline resolution required for ADAS and AV scenarios, cost thousands of dollars each. Now they’re an order of magnitude lower in price due to volume and technology improvements.

Continued advances in smaller pixel design and in manufacturing yield promise to lower costs still further (Fig. 5). Based on current development plans, an additional 2X reduction in cost is forecast over the next several years. This compares favorably with the 10X cost reduction that LiDAR systems require to meet OEM cost targets.

5. Estimated global average end-user price and volume of thermal cameras. (Courtesy of Maxtech International Inc.)

Adoption of significant volumes of thermal cameras for SAE automation levels 2 and 3 will likely start in 2022 or 2023, with annual growth rates of 200% to 300% through 2030. With the planned improvements and automotive manufacturing scale, thermal cameras will become an affordable component for the ADAS and AV sensor suites.

Lowering the Barriers of Entry for Thermal-Camera Adoption

To enable ADAS developers to rapidly integrate thermal cameras and understand their benefits in rounding out the sensor suite, FLIR’s team within the OEM division recently released a free machine-learning starter thermal dataset. The dataset features a compilation of more than 14,000 annotated thermal images of people, cars, other vehicles, bicycles, and dogs in day and nighttime environments, enabling developers to begin testing and evolving convolutional neural networks (CNNs). Using the dataset, they can quickly see the comprehensive and redundant system benefits of thermal detection (Fig. 6).
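A sensible first step with any annotated dataset is to tally how many labeled objects exist per class before training a CNN. The sketch below assumes the annotations follow the widely used COCO-style JSON layout (`categories` and `annotations` lists); that layout is an assumption here, not something this article specifies. The `count_labels` helper and the tiny in-memory sample, including its file names and bounding boxes, are invented for illustration.

```python
from collections import Counter


def count_labels(coco):
    """Count annotated objects per category name in a COCO-style dict."""
    names = {cat["id"]: cat["name"] for cat in coco["categories"]}
    return Counter(names[ann["category_id"]] for ann in coco["annotations"])


# Hypothetical sample standing in for a parsed annotation file.
# With a real file, this dict would come from json.load().
SAMPLE = {
    "images": [
        {"id": 1, "file_name": "frame_0001.jpeg"},
        {"id": 2, "file_name": "frame_0002.jpeg"},
    ],
    "categories": [
        {"id": 1, "name": "person"},
        {"id": 2, "name": "bicycle"},
        {"id": 3, "name": "car"},
        {"id": 4, "name": "dog"},
    ],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [40, 60, 20, 55]},
        {"id": 11, "image_id": 1, "category_id": 3, "bbox": [120, 80, 90, 50]},
        {"id": 12, "image_id": 2, "category_id": 1, "bbox": [200, 70, 18, 50]},
        {"id": 13, "image_id": 2, "category_id": 4, "bbox": [30, 150, 25, 20]},
    ],
}

if __name__ == "__main__":
    for name, total in count_labels(SAMPLE).most_common():
        print(f"{name}: {total}")
```

A heavily skewed tally (for example, far more cars than dogs) is worth knowing up front, since it affects how a classifier trained on the data will perform on the rarer classes.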

6. FLIR’s thermal starter dataset is available free of charge.

With its unique capabilities to improve safety for vehicles with automation levels from 1 to 5, plus a clear path forward for mass adoption, it’s a matter of when, not if, thermal cameras become an integral part of the ADAS and AV ecosystem.

Reference

About the Author

Paul Clayton

Vice President & General Manager, OEM and Emerging Markets Segment

Paul Clayton is responsible for FLIR’s OEM and Emerging division, which supplies multiple COTS and custom thermal camera cores for FLIR Systems, the world’s highest-volume thermal camera supplier. Some of these camera cores serve the automotive market, including FLIR's commercially available PathFindIR camera and FLIR ADK (Automotive Development Kit) thermal sensor. Mr. Clayton leads the team that develops strategies to meet the market needs of automakers and automotive engineers and to increase the integration of thermal imaging in the transportation sector.