Developing Vision Systems with Dissimilar Sensors

Drones, intelligent cars and augmented- or virtual-reality (AR/VR) headsets all use multiple image sensors, often of different types, to capture data about their operating environment. To supply the image data the system needs, each sensor requires a connection to the system’s application processor (AP), which presents design challenges for embedded engineers.

The first challenge is that APs have a finite number of I/O ports available for connecting with sensors, so I/O ports must be carefully allocated to ensure that every discrete component needing a connection to the AP gets one. Second, drones and AR/VR headsets have small form factors and run on batteries. Therefore, components used in these applications must be as small and power efficient as possible.

One solution to the AP’s shortage of I/O ports is the use of Virtual Channels, as defined in the MIPI Camera Serial Interface-2 (CSI-2) specification. They can consolidate up to 16 different sensor streams into a single stream that can then be sent to the AP over just one I/O port.

The hardware platform of choice for a Virtual Channel implementation is the field-programmable gate array (FPGA). Alternative hardware platforms take a long time to design and may not have the low-power performance needed for applications like drones or AR/VR headsets. Some would argue that FPGAs have too large a footprint and consume too much power to be a feasible platform for Virtual Channel support. But advances in semiconductor design and manufacturing are enabling a new generation of smaller, more power-efficient FPGAs.

Situational Overview

Growing consumer demand for drones, intelligent cars, and AR/VR headsets is driving tremendous growth in the sensor market. Semico Research sees automotive (27% CAGR), drone (27% CAGR), and AR/VR headset (166% CAGR) applications as the primary demand drivers for sensors, and forecasts that semiconductor OEMs will be shipping over 1.5 billion image sensors a year by 2022.

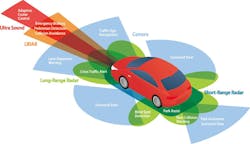

The applications mentioned above require multiple sensors to capture data about the application’s operating environment. For example, an intelligent car could use several high-definition image sensors for the rearview and surround cameras, a LiDAR sensor for object detection, and a radar sensor for blind-spot monitoring (Fig. 1).

1. In today’s intelligent cars, sensors (radar/LiDAR, image, time-of-flight, etc.) enable applications like emergency braking, rearview cameras, and collision avoidance.

This proliferation of sensors presents a problem because every sensor needs to send data to the car's AP, and the AP has a finite number of I/O ports. More sensors also increase the density of wired connections to the AP on the circuit board, which creates footprint challenges in smaller devices such as headsets.

One solution to the AP's shortage of I/O ports is the use of Virtual Channels. Virtual Channels consolidate video streams from different sensors into a single stream that can be sent to the AP over a single I/O port. A widely used standard for connecting camera sensors to an AP is the MIPI Camera Serial Interface-2 (CSI-2) specification developed by the MIPI Alliance. CSI-2 can combine up to 16 different data streams into one by using its Virtual Channel function. However, combining streams from different image sensors into one video stream presents several challenges.

Challenges of Enabling Virtual Channels

Combining data from sensors of the same type into one channel isn't a complicated proposition. In one approach, the sensors are synchronized and their data streams concatenated line by line, so that two sensors can be sent to the AP as a single image twice as wide. The challenge arises when the data streams of different types of sensors must be combined.
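As a rough model of the same-sensor case, the sketch below stitches two synchronized frames line by line, so each output line is the left sensor's line followed by the right sensor's line. Frames are modeled as hypothetical lists of rows of pixel values; a real bridge would do this on streaming line buffers in hardware rather than on whole frames in memory.

```python
from typing import List

Frame = List[List[int]]  # a frame is a list of rows; each row is a list of pixel values


def concat_side_by_side(left: Frame, right: Frame) -> Frame:
    """Stitch two synchronized frames of equal size into one frame twice as wide.

    Assumes both sensors share resolution and timing, so row i of each frame
    is available at the same time and can simply be appended.
    """
    if len(left) != len(right):
        raise ValueError("frames must have the same number of rows")
    out: Frame = []
    for row_l, row_r in zip(left, right):
        if len(row_l) != len(row_r):
            raise ValueError("rows must have the same width")
        out.append(row_l + row_r)  # left sensor's pixels first, then right sensor's
    return out


if __name__ == "__main__":
    # Two tiny 2x3 "frames" from identical, synchronized sensors.
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8, 9], [10, 11, 12]]
    print(concat_side_by_side(a, b))  # [[1, 2, 3, 7, 8, 9], [4, 5, 6, 10, 11, 12]]
```

This only works because both inputs have identical geometry and timing; the checks raise an error as soon as that assumption is violated.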

For example, a drone could use a high-resolution image sensor for object detection during daylight operation and a lower-resolution IR sensor that captures heat patterns for object detection at night. These sensors have different frame rates, resolutions, and bandwidths, so their outputs can't simply be synchronized and stitched into one image. Instead, every CSI-2 data packet needs to be tagged with a Virtual Channel identifier so that the AP can separate the streams and process each packet as needed (Fig. 2).
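The tagging step can be sketched as follows. In a CSI-2 packet header, the Data Identifier (DI) byte holds a 2-bit Virtual Channel field in bits [7:6] and a 6-bit Data Type in bits [5:0] (RAW8 is 0x2A, RAW10 is 0x2B). Later revisions of the specification extend the channel number with extra VCX bits, which is how 16 channels are reached; this sketch assumes a simple split of a 0 to 15 channel number into low and high bits and does not model where VCX sits in the header or compute the header ECC. The `Packet` class, `demux` helper, and sensor-to-channel assignment are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

# Two CSI-2 data type codes (6-bit DT field) used in this example.
DT_RAW8 = 0x2A
DT_RAW10 = 0x2B


def data_identifier(vc: int, data_type: int) -> Tuple[int, int]:
    """Split a logical virtual channel (0..15) into the DI byte and VCX extension bits.

    DI byte layout: bits [7:6] = low two VC bits, bits [5:0] = data type.
    The upper two VC bits are returned separately as VCX; their placement in
    the header and the header ECC are not modeled.
    """
    if not 0 <= vc <= 15:
        raise ValueError("virtual channel must be 0..15")
    if not 0 <= data_type <= 0x3F:
        raise ValueError("data type must fit in 6 bits")
    di = ((vc & 0x3) << 6) | data_type
    vcx = vc >> 2
    return di, vcx


@dataclass(frozen=True)
class Packet:
    vc: int          # logical virtual channel the packet belongs to
    data_type: int   # CSI-2 data type code
    payload: bytes   # one line of pixel data (contents are illustrative)


def demux(packets: Iterable[Packet]) -> Dict[int, List[Packet]]:
    """Group an interleaved packet stream by virtual channel, as a receiver would."""
    streams: Dict[int, List[Packet]] = defaultdict(list)
    for p in packets:
        streams[p.vc].append(p)
    return dict(streams)


if __name__ == "__main__":
    # Hypothetical assignment: VC0 = daylight RAW10 sensor, VC1 = IR RAW8 sensor.
    merged = [
        Packet(0, DT_RAW10, b"\x10" * 4),
        Packet(1, DT_RAW8, b"\x20" * 2),
        Packet(0, DT_RAW10, b"\x11" * 4),
    ]
    print(hex(data_identifier(1, DT_RAW8)[0]))                 # 0x6a
    print({vc: len(ps) for vc, ps in demux(merged).items()})  # {0: 2, 1: 1}
```

Because every packet carries its channel number, the packets from both sensors can be freely interleaved on one link and still be separated on arrival.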

2. Virtual Channels combine data streams from multiple sensors to conserve I/O ports. Data streams from different sensors require processing to synchronize clock rates and output frequencies.

In addition to packet tagging, combining data streams from different types of sensors also requires that the sensor data payloads be synchronized. If the sensors operate at different clock speeds, a separate clock domain needs to be maintained for each sensor, and these domains are then synchronized before the data is output to the AP.
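The paragraph above does not say how the domains are synchronized. One common FPGA technique, shown here as an assumption rather than as the method used in any particular bridge, is a dual-clock FIFO per sensor whose read and write pointers cross between clock domains in Gray code. Each pointer increment then changes only one bit, so a synchronizer in the other domain never samples a half-updated multi-bit value. The sketch below models only that pointer encoding; the 4-bit pointer width is hypothetical.

```python
def bin_to_gray(n: int) -> int:
    """Convert a binary pointer value to its reflected Gray code."""
    return n ^ (n >> 1)


def gray_to_bin(g: int) -> int:
    """Convert a reflected Gray code value back to binary (XOR of all right shifts)."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def bits_changed(a: int, b: int) -> int:
    """Number of bit positions that differ between two values."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    PTR_BITS = 4  # hypothetical: depth-8 FIFO plus one wrap bit for full/empty detection
    size = 1 << PTR_BITS
    for i in range(size):
        cur, nxt = bin_to_gray(i), bin_to_gray((i + 1) % size)
        assert bits_changed(cur, nxt) == 1  # also holds across the wrap from 15 to 0
        assert gray_to_bin(cur) == i        # the receiving domain can recover the count
    print("every pointer step changes exactly one bit")
```

The one-bit-per-step property is what makes it safe to pass a Gray-coded pointer through a simple flip-flop synchronizer; a plain binary counter can change several bits at once (for example 7 to 8 changes four bits).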

A Dedicated Hardware Bridge is Needed for Processing

Implementing a bridge to support Virtual Channels in hardware can address the issues described above. With a dedicated Virtual Channel bridge, all image sensors connect to the bridge's I/O ports, and the bridge connects to the AP over a single port, freeing up valuable AP ports to support other peripherals (Fig. 3). This also addresses the footprint challenge created by routing multiple traces between the sensors and the AP on the device's circuit board, because the bridge consolidates those traces into a single connection to the AP.
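Consolidating streams onto one port only works if the output link can carry their combined bandwidth. A back-of-the-envelope check multiplies width, height, bits per pixel, and frame rate for each sensor, sums the results, and compares the total with the link's capacity. The sensor formats and the 4-lane, 1.5 Gb/s-per-lane link below are illustrative assumptions, and the estimate ignores blanking intervals and packet overhead, so real margins are smaller.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class SensorFormat:
    name: str
    width: int           # active pixels per line
    height: int          # active lines per frame
    bits_per_pixel: int  # e.g. 10 for RAW10
    fps: float           # frames per second


def payload_bps(s: SensorFormat) -> float:
    """Active-pixel payload rate in bits per second (no blanking or packet overhead)."""
    return s.width * s.height * s.bits_per_pixel * s.fps


def fits_on_link(sensors: Iterable[SensorFormat], lanes: int, gbps_per_lane: float) -> bool:
    """True if the summed payload fits within the link's raw lane capacity."""
    total = sum(payload_bps(s) for s in sensors)
    return total <= lanes * gbps_per_lane * 1e9


if __name__ == "__main__":
    day = SensorFormat("daylight", 1920, 1080, 10, 30)  # hypothetical RAW10 1080p30 sensor
    ir = SensorFormat("thermal", 640, 512, 8, 60)       # hypothetical RAW8 IR sensor
    total = payload_bps(day) + payload_bps(ir)
    print(f"{total / 1e9:.3f} Gb/s")                             # 0.779 Gb/s
    print(fits_on_link([day, ir], lanes=4, gbps_per_lane=1.5))  # True
```

With these numbers the two streams use well under a sixth of the assumed link, leaving headroom for blanking, protocol overhead, or additional sensors.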

3. To minimize the I/O ports used to connect sensors and the AP, a hardware bridge with Virtual Channel support consolidates multiple sensor streams for delivery over a single I/O port.

FPGAs allow for the implementation of parallel data paths for each sensor input, with each path in its own clock domain. These domains are synchronized in the Virtual Channel Merge stage as seen in Figure 3, removing the processing burden from the AP.
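The merge stage can be modeled in software as a time-ordered interleave: each path stamps its lines with a completion time expressed in a shared time base, and the merge emits whichever line finished first, tagged with that path's virtual channel. This is a simplified behavioral sketch with hypothetical line periods; it ignores FIFO depths, back-pressure, and frame start/end packets, and it is not a description of the hardware in Figure 3.

```python
import heapq
from typing import Iterator, List, Tuple

# (completion time in ns, virtual channel, line index within the frame)
Line = Tuple[int, int, int]


def sensor_lines(vc: int, line_period_ns: int, n_lines: int, offset_ns: int = 0) -> Iterator[Line]:
    """Yield line-completion events for one free-running sensor path.

    Times are already expressed in a shared nanosecond time base, standing in
    for the result of crossing each sensor's own clock domain.
    """
    for i in range(n_lines):
        yield (offset_ns + (i + 1) * line_period_ns, vc, i)


def vc_merge(*paths: Iterator[Line]) -> List[Line]:
    """Interleave lines from all paths in completion-time order (ties broken by VC)."""
    return list(heapq.merge(*paths))


if __name__ == "__main__":
    fast = sensor_lines(vc=0, line_period_ns=15_000, n_lines=4)  # hypothetical daylight sensor
    slow = sensor_lines(vc=1, line_period_ns=32_000, n_lines=2)  # hypothetical IR sensor
    for t, vc, line in vc_merge(fast, slow):
        print(f"t={t:>6} ns  VC{vc}  line {line}")
```

`heapq.merge` assumes each input is already sorted, which holds here because each sensor path produces lines in increasing time order.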

Benefits of PLD-Based Virtual Channel Hardware

When it comes to implementing Virtual Channel support in hardware, the most compelling integrated-circuit (IC) platform choice is the FPGA. FPGAs are ICs with flexible I/O ports that can support a wide variety of interfaces. They also have large logic arrays that can be programmed with hardware description languages such as Verilog.

Unlike application-specific ICs (ASICs), which require lengthy design and quality-assurance (QA) processes, FPGAs are already qualified for manufacturing, so a design can be completed in days or weeks. However, traditional FPGAs are typically viewed as physically large, power-hungry devices that aren't well-suited to power-constrained embedded applications.

The CrossLink family of FPGAs from Lattice Semiconductor flips that script. The family provides the combination of performance, size, and power consumption needed for video-bridge applications that use Virtual Channels. The FPGAs offer two 4-lane MIPI D-PHY transceivers operating at up to 6 Gb/s per PHY and a form factor as small as 6 mm². They support up to 15 programmable source-synchronous differential I/O pairs for interfaces such as MIPI D-PHY, LVDS, and sub-LVDS, as well as single-ended parallel CMOS, yet consume less than 100 mW in many applications. A sleep mode reduces power usage in standby. Lattice also offers an extensive IP library to help speed up the implementation of different bridging solutions.

Summary

Virtual Channels enabled by the MIPI Camera Serial Interface-2 (CSI-2) specification help embedded engineers consolidate multiple sensor data streams over a single I/O port, reducing overall design footprint and power consumption for applications that use large numbers of image sensors. By virtue of their reprogrammability, performance, and size, low-power FPGAs allow customers to add support for Virtual Channels to their device designs quickly and easily.

Tom Watzka is Technical Mobile Solutions Architect at Lattice Semiconductor.

About the Author

Tom Watzka, Technical Mobile Solutions Architect at Lattice Semiconductor, has over 20 years of experience in developing embedded products, including seven years developing consumer mobile solutions. He received his BS from the Rochester Institute of Technology and his MS from Pennsylvania State University, and wrote his master's thesis on FFT algorithms.