A Standard for Haptics-Enabled Media Files

>> Electronic Design Resources

.. >> Library: Article Series

.. .. >> Topic: System Design

.. .. .. >> Series: Touching on Haptics

In a previous article on the need for standardization in haptics, we reviewed the standards organizations that would be ideal forums for haptic standards. In this article, we take a deeper dive into MPEG and Immersion’s proposal to that standards development organization (SDO) for the inclusion of haptics in media files.

In April 2020, the MPEG Systems File Format sub-group accepted a proposal from Immersion for the addition of haptic tracks to audio/video files in the ISO Base Media File Format (ISOBMFF). The proposal will proceed to the next stage in the standardization process.

The ISOBMFF is a foundational MPEG standard (a.k.a. ISO/IEC 14496 Part 12) that forms the basis of most media files being created and consumed in the world today. All MP4 files (with suffix .mp4) are based on the ISOBMFF. MP4 files are the predominant way of transporting media content across almost all digital platforms. The 3GPP (.3gp) and 3GPP2 (.3g2) file formats—popular transports for audio/video files on mobile phones—are also extensions of the ISOBMFF.
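To make the container structure concrete, here is a minimal Python sketch of how an ISOBMFF file is organized as a sequence of boxes, each starting with a 32-bit big-endian size and a four-character type. The helper names `iter_boxes` and `make_box` and the toy byte string are illustrative assumptions, not part of any standard or of the proposal. A real parser would handle many more cases.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, offset: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (box_type, payload_start, payload_end) for each box in data[offset:end]."""
    if end is None:
        end = len(data)
    while offset + 8 <= end:
        # Every box starts with a 32-bit big-endian size and a four-character type.
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # size == 1 means a 64-bit "largesize" follows the type.
            if offset + 16 > end:
                raise ValueError(f"truncated largesize at offset {offset}")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # size == 0 means the box runs to the end of the data.
            size = end - offset
        if size < header or offset + size > end:
            raise ValueError(f"malformed box at offset {offset}")
        yield box_type.decode("latin-1"), offset + header, offset + size
        offset += size


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a box with a 32-bit size field (illustrative helper, not a muxer)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Toy data: an 'ftyp' box followed by a 'moov' box holding one empty 'trak'.
sample = (make_box(b"ftyp", b"isom" + b"\x00\x00\x00\x00" + b"isom")
          + make_box(b"moov", make_box(b"trak")))

top_level = list(iter_boxes(sample))
print([t for t, _, _ in top_level])  # ['ftyp', 'moov']

# Container boxes such as 'moov' are walked by recursing into their payload.
_, moov_start, moov_end = top_level[1]
print([t for t, _, _ in iter_boxes(sample, moov_start, moov_end)])  # ['trak']
```

Because every track lives in its own box inside this hierarchy, a new media type can be added as a new kind of track without disturbing the existing audio and video tracks.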

The rationale for adding haptics functionality to such a ubiquitous standard is clear. Haptic signals provide an additional layer of entertainment and sensory immersion for the user and have emerged as a standalone experience associated with touch-based interfaces. Providing a standardized mechanism to add haptic tracks to the ISOBMFF opens the door to several positive developments:

- Content creators will be incentivized to add haptic tracks to their media files (since there will be a standardized way to do so).

- Media-player developers will issue updated versions of their players that can play such haptics-enabled files on mobile devices.

- Finally, consumers will benefit from a richer overall experience.

We’d like to share with you some details from our proposal to MPEG. If the standardization process, which has just begun, progresses to its logical conclusion, this proposal will be issued as an amendment to the ISOBMFF standard. The exact timeline is still to be determined and depends on several factors in the MPEG standardization process that are outside our control. In the descriptions below, we assume that the reader is familiar with the terminology and hierarchy of different box types in the ISOBMFF standard.

The proposal to embed haptic tracks in the ISOBMFF has the following key features:

- Haptics is treated as a first-order media type, just like audio and video. This reflects the fact that haptics isn’t metadata (defined as “data about other data”), and a haptics track can exist by itself within an ISOBMFF container, without accompanying audio or video data.

- A new handler type “hapt” is proposed, along the lines of the “soun” handler type for audio and the “vide” handler type for video tracks.

- The haptic track uses the NullMediaHeader box—there’s really no haptics-specific information that needs to be defined at this level in the box hierarchy within the ISOBMFF.

- Haptic tracks use a type-specific sample entry called HapticSampleEntry that extends the SampleEntry class defined in the ISOBMFF. The HapticSampleEntry contains configuration information on the haptic track, such as the types of actuators the content in the file is designed to be played on. Both vibrotactile and kinesthetic actuators are supported, reflecting the fact that haptic tracks could be played on devices with either or both types of actuators. Further, the proposal accounts for the fact that a device may have more than one actuator of each type by specifying arrays of VibeGeneralConfig and KinestheticGeneralConfig boxes in the HapticSampleEntry. These boxes contain actuator-specific configuration information such as frequency, motor type, waveform data format (all for vibrotactile actuators), and control frequency (for kinesthetic actuators). For example, if the content is intended for single vibrotactile actuator devices (e.g., basic phones, laptops, tablets), then the sample entry will have a single VibeGeneralConfig box (an array of one). If the track is intended for multiple vibrotactile actuators, the sample entry will have an array of VibeGeneralConfig boxes, each with its own frequency and motor type. A similar structure can be used for kinesthetic actuators. Finally, it’s possible to create a haptic track that can be played on both vibrotactile and kinesthetic actuators. The sample entry for such a track will have non-empty arrays of both VibeGeneralConfig and KinestheticGeneralConfig boxes.

- All of this is independent of the actual signal encoding, which is handled by specific haptic codecs. Each haptic codec will extend the HapticSampleEntry class and contain codec-specific configuration information.
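The features listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the byte layout of the handler box follows the existing ISOBMFF definition, but the field names in the configuration classes, the numeric codes, and the frequency values are hypothetical placeholders, since the proposal's actual syntax is not reproduced in this article.

```python
import struct
from dataclasses import dataclass, field
from typing import List, Set


def build_hdlr(handler_type: str, name: str = "") -> bytes:
    """Build an ISOBMFF handler ('hdlr') box for the given four-character type."""
    payload = (
        b"\x00\x00\x00\x00"               # FullBox version (1 byte) + flags (3 bytes)
        + b"\x00\x00\x00\x00"             # pre_defined
        + handler_type.encode("ascii")    # handler_type, e.g. 'soun', 'vide', 'hapt'
        + b"\x00" * 12                    # reserved
        + name.encode("utf-8") + b"\x00"  # null-terminated name
    )
    return struct.pack(">I4s", 8 + len(payload), b"hdlr") + payload


def read_handler_type(hdlr_box: bytes) -> str:
    """handler_type sits after the 8-byte header, version/flags and pre_defined."""
    return hdlr_box[16:20].decode("ascii")


@dataclass
class VibeGeneralConfig:           # field names are assumptions for illustration
    frequency_hz: int
    motor_type: int                # placeholder code; real values come from Table 1
    waveform_format: int           # placeholder code; real values come from Table 2


@dataclass
class KinestheticGeneralConfig:    # field name is an assumption for illustration
    control_frequency_hz: int


@dataclass
class HapticSampleEntry:
    codec_fourcc: str
    vibe_configs: List[VibeGeneralConfig] = field(default_factory=list)
    kinesthetic_configs: List[KinestheticGeneralConfig] = field(default_factory=list)

    def targets(self) -> Set[str]:
        """Actuator families this track is configured for (non-empty arrays)."""
        kinds = set()
        if self.vibe_configs:
            kinds.add("vibrotactile")
        if self.kinesthetic_configs:
            kinds.add("kinesthetic")
        return kinds


hdlr = build_hdlr("hapt")
print(read_handler_type(hdlr))  # hapt

# A track for a single vibrotactile actuator: an array of one VibeGeneralConfig.
phone_entry = HapticSampleEntry("hstr", [VibeGeneralConfig(175, 0, 0)])
# A track that targets both actuator families: both arrays are non-empty.
mixed_entry = HapticSampleEntry(
    "hstr",
    [VibeGeneralConfig(175, 0, 0), VibeGeneralConfig(60, 1, 0)],
    [KinestheticGeneralConfig(1000)],
)
print(sorted(mixed_entry.targets()))  # ['kinesthetic', 'vibrotactile']
```

A codec-specific sample entry would then subclass `HapticSampleEntry` and add its own configuration fields, mirroring the separation between general and codec configuration described above.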

General and Codec Configurations

In this article, we will not go into the details of the C++ language structures defined in the proposal. However, it’s worth highlighting the general (non-codec) and codec-specific configurations proposed. These configurations will ensure that media files with haptic tracks in them can be played on a wide variety of devices and account for different haptic codecs used (or likely to be used) in the haptics industry.

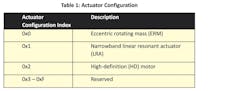

Table 1 shows the different actuator configurations (motor types) supported in the proposal. Note that “HD Motor” includes Voice Coil Motors and Wideband LRAs.

Table 2 shows the waveform data format (or endpoint configuration) to be used by the codec to interpret the haptic media in the file. It’s worth noting that the same codec can interpret the haptic media in different ways depending on the actuator type. This configuration helps the player on the device configure the codec appropriately.

In both tables, the “Reserved” value ranges provide room for expansion, for new actuator motors, and new endpoint configurations, respectively.

The proposal also introduces four haptic codecs and their associated FourCC codes in an informational annex, since codecs are outside the purview of this specific MPEG subgroup. Even so, the codec information shows how haptic tracks will interact with them. Table 3 gives an overview of these codecs.

The key to the values in the VibeGeneralConfig and KinestheticGeneralConfig columns is as follows:

- Mandatory: Haptic encoding REQUIRES this actuator type.

- Optional: Haptic encoding COULD use this actuator type.

- N/A: Haptic encoding WILL NOT play on this actuator type.
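One way a player might apply this key is sketched below in Python. The `Requirement` enum, the two helper functions, and especially the `example_codec` row are hypothetical: the row is a made-up placeholder for illustration and is not taken from Table 3.

```python
from enum import Enum
from typing import Dict, Set


class Requirement(Enum):
    MANDATORY = "Mandatory"      # encoding REQUIRES this actuator type
    OPTIONAL = "Optional"        # encoding COULD use this actuator type
    NOT_APPLICABLE = "N/A"       # encoding WILL NOT play on this actuator type


def can_play(requirements: Dict[str, Requirement], device_actuators: Set[str]) -> bool:
    """True if the device has every actuator type the encoding requires."""
    return all(
        kind in device_actuators
        for kind, level in requirements.items()
        if level is Requirement.MANDATORY
    )


def usable_actuators(requirements: Dict[str, Requirement],
                     device_actuators: Set[str]) -> Set[str]:
    """Actuator types on the device that the encoding can actually drive."""
    return {
        kind
        for kind, level in requirements.items()
        if level is not Requirement.NOT_APPLICABLE and kind in device_actuators
    }


# Placeholder row, NOT a real Table 3 entry.
example_codec = {
    "vibrotactile": Requirement.MANDATORY,
    "kinesthetic": Requirement.NOT_APPLICABLE,
}

print(can_play(example_codec, {"vibrotactile"}))   # True
print(can_play(example_codec, {"kinesthetic"}))    # False
print(usable_actuators(example_codec, {"vibrotactile", "kinesthetic"}))  # {'vibrotactile'}
```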

Note: The “hstr” coding format is Immersion’s proprietary format. Discussions are underway to determine the feasibility of standardizing it within MPEG. More information on the ongoing IEEE haptic codec standard is available from the IEEE. The Enumerated Effects codec is MIDI-based and is best used for media streams that are augmented with haptic effects derived from a table of pre-stored effects. The Audio-to-Vibe codec refers to an automatic audio-to-vibrotactile haptics conversion algorithm that examines the audio track and adds haptic effects based on changes in the audio characteristics.

Informative Use Cases

The standardization proposal also includes the following use cases to illustrate how adding haptic tracks to the ISOBMFF would work in the real world:

Haptic Encoded Stream Media File Playback Use Case

- File with two haptic tracks (both vibrotactile; one for SD actuator, one for HD actuator)

- Designed for single-actuator devices

- Haptic media interleaved with audio/video data

Haptic Encoded Stream Game Controller Use Case

- File with three haptic tracks (one vibrotactile, two kinesthetic tracks)

- Designed for four-actuator devices (two vibrotactile actuators—SD and HD—and two kinesthetic actuators)

- Kinesthetic actuators would be the left and right triggers on a game controller

- No audio/video data

Enumerated Effects Use Case

- Media stream augmented with haptic effects derived from a table of pre-stored haptic effects

- Haptic data is formatted like MIDI: an MThd chunk followed by one or more MTrk chunks

- Individual effects can be stored as PCM data in MIDI DLS patches
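For readers unfamiliar with the MIDI layout mentioned above, the following Python sketch splits a byte string into its MThd and MTrk chunks using the Standard MIDI File chunk structure (a four-character type followed by a 32-bit big-endian length). The function names and the tiny sample data are illustrative assumptions. How the proposal maps haptic effects onto MIDI events is not covered here.

```python
import struct
from typing import Dict, List, Tuple


def parse_midi_chunks(data: bytes) -> List[Tuple[str, bytes]]:
    """Split MIDI-style data into (chunk_type, body) pairs."""
    chunks = []
    offset = 0
    while offset + 8 <= len(data):
        # Each chunk: 4-character type, then a 32-bit big-endian body length.
        chunk_type, length = struct.unpack_from(">4sI", data, offset)
        body = data[offset + 8: offset + 8 + length]
        if len(body) != length:
            raise ValueError(f"truncated chunk at offset {offset}")
        chunks.append((chunk_type.decode("ascii"), body))
        offset += 8 + length
    return chunks


def parse_mthd(body: bytes) -> Dict[str, int]:
    """Decode the three 16-bit fields of an MThd header chunk."""
    fmt, ntrks, division = struct.unpack(">HHH", body[:6])
    return {"format": fmt, "ntrks": ntrks, "division": division}


# Sample data: a header (format 0, one track, 480 ticks per quarter note)
# and one track containing only an end-of-track meta event.
midi_bytes = (
    b"MThd" + struct.pack(">IHHH", 6, 0, 1, 480)
    + b"MTrk" + struct.pack(">I", 4) + b"\x00\xff\x2f\x00"
)

midi_chunks = parse_midi_chunks(midi_bytes)
print([t for t, _ in midi_chunks])      # ['MThd', 'MTrk']
print(parse_mthd(midi_chunks[0][1]))    # {'format': 0, 'ntrks': 1, 'division': 480}
```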

Tracking Implementation

The proof of the pudding is in the eating, so we’re currently in the midst of implementing this proposal to ensure that it works. The objective is to release an app to selected users on both iOS and Android platforms, in time for the next MPEG meeting in early July. The app would show how MP4 files with haptic tracks embedded in them can be played back on mobile devices to enhance the overall user experience. The app will have the ability to turn off the haptic effects to further accentuate the difference between playing back media files with and without haptics. Stay tuned for an article on that subject when it happens.

Summary and Next Steps

The ISOBMFF is a foundational MPEG standard that’s the basis of an overwhelming majority of media files consumed in the world today. We have taken the first step toward adding haptic tracks to ISOBMFF-based files in a standardized way. Once the proposal is adopted into the ISOBMFF standard, we expect it will open the door to a whole slew of positive developments for the haptics industry.

For instance, content creators will be incentivized to add haptic tracks to their media files (since there’s a standardized way to do so), and media player developers will issue updated versions of their players that can play such haptics-enabled files on mobile devices. Users will be able to enjoy an additional level of entertainment and sensory immersion provided by haptics.

Yeshwant Muthusamy is Senior Director, Standards, at Immersion Corp.

About the Author

Yeshwant Muthusamy

Senior Director, Standards, Immersion Corp.

Yeshwant Muthusamy is a technologist by trade and geek by nature. He has spent the last 26+ years in the fields of speech recognition, natural language processing, and automatic language identification, among other areas of AI, with IoT and AR/VR being more recent areas of interest. Close to 20 years of that has been spent in various international SDOs (like MPEG, ATSC, Khronos, 3GPP, ITU-T, JCP, ETSI) on media and speech standardization efforts. The transition from speech and audio to haptics has been a relatively seamless and natural one (just another one of the human senses), although at times it feels like he is drinking from multiple firehoses as he gets up to speed on the intricacies of the haptic technology stack. Yeshwant is excited to be leading Immersion’s standardization and ecosystem strategy and he looks forward to working with the haptics community.