Arm Clashes With Intel and AMD With N2 Server CPU Core

Arm is turning up the pressure on Intel and AMD in the data center market with the launch of a new Neoverse CPU core that will serve as a blueprint for a new class of server processors.

Arm said the Neoverse N2 core, previously code-named Perseus, pumps out up to 40% more performance in terms of instructions per clock due to microarchitecture upgrades and the move to the 5-nanometer process. Arm said it offers more performance within the same area and power budget as its previous generation N1 core, tuned for the 7-nanometer node.

The company claims that it delivers industry-leading performance per watt at a lower cost than rival chips from Intel and AMD, both of which use the x86 instruction set architecture. "No more choosing between power efficiency or performance; we want you to have both," Chris Bergey, vice president and general manager of Arm's infrastructure business, said.

The company also rolled out the V1 core that promises the best performance per thread of any of its CPU cores. Arm said the V1 core, based on its Zeus microarchitecture and part of its V-series family of CPU cores, runs 50% faster than the N1 at the same process node and clock speed, giving it an upper hand in supercomputers, machine learning, and other areas.

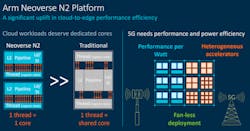

But with the N2 core, the company is striking at the heart of the data center server market. Arm said the N2 core is focused on server processors with large clusters of CPU cores, giving it more general-purpose performance from cloud data centers out to the edge of the network. “The power efficiency profile of the N2 core allows it to be competitive on threads per socket while at the same time offering dedicated cores instead of threads,” Bergey said (Fig. 1).

Arm updated its server CPU roadmap with the V1 and N2 cores last year.

The N2 core is also the first in its family of server processors to use Arm's v9 architecture, which brings major improvements in performance, power efficiency, and security. The CPU also supports Arm's SVE2 technology, giving it more vector processing performance for AI, DSP, and 5G workloads (Fig. 2). The 64-bit CPU core includes dual 128-bit SVE processing pipelines. Upgrading to the 5-nm node opens the door for a 10% boost in clock frequency.
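As a rough illustration of what dual 128-bit SVE pipelines mean in practice, the sketch below counts the peak number of vector elements one core could process per cycle for a few common data types. It assumes both pipelines issue a full-width vector operation every cycle, which is an idealized upper bound and not a figure from Arm; the function name is hypothetical.

```python
# Back-of-the-envelope peak vector throughput for one core.
# The pipeline count and width come from the article; the assumption that
# both pipelines are fully busy every cycle is ours (idealized upper bound).
PIPELINES = 2       # dual SVE pipelines per core
VECTOR_BITS = 128   # width of each pipeline in bits


def elements_per_cycle(element_bits: int) -> int:
    """Peak elements processed per cycle for a given element width."""
    return PIPELINES * (VECTOR_BITS // element_bits)


for name, bits in [("int8", 8), ("fp16", 16), ("fp32", 32), ("fp64", 64)]:
    print(f"{name}: up to {elements_per_cycle(bits)} elements per cycle")
```

Narrower data types pack more elements into each vector, which is one reason vector extensions matter for AI and signal-processing workloads that can run at reduced precision.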

The N2 CPU core can also be equipped with DDR5 and HBM memory interfaces and other industry-standard protocols, including CCIX, CXL, and PCIe Gen 5, to attach accelerators.

Arm licenses the blueprints to its chips to Qualcomm, Apple, and other companies that use them to develop chips better suited to their demands. The power efficiency of its cores has transformed it into the gold standard in smartphones, and it is gaining ground in personal computers with Apple's M1. Arm is also trying to apply the principles of performance per watt and flexibility to data centers with its Neoverse CPU family.

While Arm does not compete directly with Intel or AMD, it is trying to convince technology giants (the Amazons, Microsofts, and Googles of the world) to license its blueprints and use them to create server-grade processors that can replace Intel's Xeon Scalable CPUs in the cloud. Arm is also rolling out its semiconductor templates to startups, such as Ampere Computing, aiming to take the performance crown from AMD's EPYC chips.

Arm said the N2 also stands out for being scalable (Fig. 3). Its customers can use the N2 core in server-grade chips in networking gear in data centers and 5G base stations, with up to 36 cores consuming up to 80 W. The N2 is also suited for server processors in cloud data centers that contain up to 192 cores clocked at faster speeds and devouring up to 350 W. "We are showing superior per-socket throughput, and this is very important for both cloud and hyperscale operators from a system and a total cost of ownership [TCO] standpoint," Bergey said. "We are also superior in per-thread performance, and that is important if you are a customer running VMs [virtual machines] from a cloud services provider."
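The two envelopes quoted above can be put on a common footing by dividing chip power by core count. The sketch below does that arithmetic using only the "up to" figures from the article. Treat the result as a loose ceiling: chip-level power also covers the interconnect, memory controllers, and I/O, so it is not a measurement of what a single core draws. The dictionary labels and function name are illustrative.

```python
# Chip power divided by core count for the two configurations Arm describes.
# Both inputs are "up to" limits from the article, so the ratio is only a
# rough ceiling on the per-core share of the chip's power budget.
CONFIGS = {
    "networking / 5G": (36, 80.0),    # (max cores, max watts)
    "cloud data center": (192, 350.0),
}


def watts_per_core(cores: int, watts: float) -> float:
    """Share of the chip-level power budget per core, in watts."""
    return watts / cores


for label, (cores, watts) in CONFIGS.items():
    share = watts_per_core(cores, watts)
    print(f"{label}: {cores} cores, {watts:.0f} W -> {share:.2f} W per core")
```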

With the N2 core, Arm is trying to expand on its wins with the N1 introduced in 2019, which serves as the template for most Arm server chips on the market, most famously Amazon's.

Amazon Web Services, the world's largest cloud service provider, has started renting out access to servers on its cloud running on its internally designed Graviton2 CPU, a 64-core monolithic processor design based on the N1 core. The company said that it delivers up to 40% more performance than Intel CPUs, depending on the exact workload, at a 20% lower cost.
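Taken together, the two Graviton2 figures imply a gain in performance per dollar that is larger than either number alone. The short calculation below shows the arithmetic, assuming the 40% and 20% figures apply to the same workload and the same comparison instance (the article does not say so explicitly). The function name is hypothetical.

```python
# Relative performance per dollar implied by the quoted Graviton2 claims.
# Assumption: the performance gain and the cost saving apply to the same
# workload and the same baseline instance.
def relative_perf_per_dollar(perf_gain: float, cost_saving: float) -> float:
    """Performance-per-dollar ratio versus a baseline normalized to 1.0."""
    return (1.0 + perf_gain) / (1.0 - cost_saving)


ratio = relative_perf_per_dollar(perf_gain=0.40, cost_saving=0.20)
print(f"Performance per dollar vs. baseline: {ratio:.2f}x")
```

In other words, 1.4 times the performance at 0.8 times the price works out to roughly 1.75 times the performance per dollar under these assumptions.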

One of Arm's other silicon partners is Ampere, which also takes advantage of the N1 CPU core in its Altra series of server processors that scale up to 128 single-threaded cores per die, the most of any merchant server processor on the market. Last year, the Silicon Valley startup unveiled its 80-core Altra CPU, which TSMC fabs on the 7-nanometer process node.

Arm said that software giant Oracle would deploy Ampere’s Altra CPUs in its growing cloud computing business. Alibaba, one of the leading cloud computing vendors in China, is also prepping to roll out Arm-based cloud services. Arm said the N1 CPU architecture is used by four of the world's seven largest so-called hyperscale data center operators, counting AWS.

Arm said the N2 could also serve as a building block for server-class networking chips in data centers and 5G base stations. Marvell Technology revealed its new Octeon family of DPUs would use the N2 and said it plans to supply the first samples by the end of the year. Marvell said the chips would offer three times the performance of its previous generation.

"This is just the tip of the iceberg," Bergey said. Arm said its partners are on pace to start rolling out N2-based server chips by the end of 2021, with production ramping up in 2022.

Arm said the new N2 core comes with microarchitecture improvements across the board. Instead of pushing performance limits at any cost, which was its philosophy for the V1, the company said it only added improvements to the N2 that could pay for themselves in terms of power efficiency and area. Every feature aims to preserve “the balanced nature” of the N2 (Fig. 4).

While Arm enlarged the processing pipeline in the V1 core to accommodate many more instructions in flight, Arm said that it tapered the pipeline in the N2 to prevent penalties to power efficiency and die area. The company said that it also reduced its dependence on speculation, where the CPU executes instructions before it knows whether the results will be needed, to speed up performance.

But the N2 cores preserve other microarchitecture improvements from the V1, including improved branch prediction, data-prefetching, and cache management at the front end of the CPU core. Arm said that it loaded all of these improvements in a footprint 25% smaller than the V1 core so that Arm's silicon partners can bundle more of them on a single die.

The CPU core also incorporates the same micro-operation cache as the V1 core to keep it pre-loaded with instructions to run. The cache results in major performance gains on small kernels frequently used in infrastructure workloads.

Another area of focus was power management. Dispatch throttling (DT) throttles features as necessary to keep the CPU from overstepping the operator's desired power budget. The maximum power mitigation mechanism (MPMM) smooths out the power consumed by the N2 to sustain clock frequencies. Power efficiency is a major priority in cloud data centers, which now account for around 1% of global electricity use.

A unique power-saving feature is performance-defined power management or PDPM. This is used to scale the microarchitecture to bolster the power efficiency of the CPU, depending on its workload. The feature works by adapting the width, depth, or speculation of the N2 to match the microarchitecture to its current workload. In turn, that improves power efficiency.

For scalability, it uses memory partitioning and monitoring (MPAM) to prevent any single process running in the CPU core from hogging system cache—or other shared resources.

Based on internal performance tests, Arm said a 64-core N1 processor already has more performance per thread than Intel's Cascade Lake Xeon Scalable CPUs and AMD's Rome EPYC server processors that contain up to 28 cores and 64 cores, respectively. But with a 128-core N2 server processor, Arm said these performance gains would increase sharply.

But the company is going to have a tough time gaining ground on rivals. Intel rolled out its new generation of Xeon Scalable server chips last month, codenamed Ice Lake, loading up to 40 x86 cores based on its Sunny Cove architecture, bolstered by its 10-nanometer node. Intel is struggling to defend its 90% market share in data center chips from AMD, which is pressuring it with its new EPYC Milan server CPUs that lash together up to 64 Zen 3 cores.

“Our competitors are not standing still,” Bergey said. "[But] we are confident our per-core performance will stack up against anything you can expect from traditional architectures."

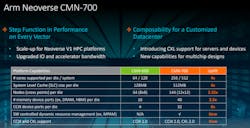

Along with its CPU improvements, Arm also announced a more advanced mesh interconnect to arrange the cores on a processor die, serving as connective tissue inside future server chips based on Arm's blueprints. Arm said the coherent mesh network interconnect, the CMN-700, brings "a step function increase" in performance over the existing CMN-600.

Arm is trying to give its partners more ways to customize the CPU, surround it with on-die accelerators and other intellectual property (IP) in a system-on-a-chip (SoC), or supplement it with memory, accelerators, or other server appendages to wring out more performance at the system level. “We will help you deliver solutions, heterogeneously or homogeneously, on a single die or within a multi-chip package, whatever is best for the use case," Bergey said.

The interconnect also supports PCIe Gen 5, which doubles the data rate of PCIe Gen 4 ports, to connect accelerators to the server and offload artificial intelligence and other workloads that tax the CPU. Arm also upgraded from DDR4 to the DDR5 DRAM interface. The IP also brings HBM3 for high-bandwidth memory into the fold.

Also new is support for the second generation of Compute Express Link (CXL), which uses the PCIe Gen 5 protocol to anchor larger pools of memory or accelerators to the server with cache coherency. CXL gives the CPU and accelerators "coherent" access to each other’s memory, giving a performance boost to AI and other workloads.

The interconnect also includes ports based on the Cache Coherent Interconnect for Accelerators (CCIX) standard. Arm said that while it would use CXL to link accelerators and memory, it would use CCIX as the primary CPU-to-CPU interconnect within a socket. The CMN-700 also caters to system-in-package (SiP) designs and other chips assembled out of smaller disaggregated dies (chiplets). Bergey said CCIX doubles as a die-to-die interconnect.

"The demands of data center workloads and internet traffic are growing exponentially," he said, adding that "the traditional one-size-fits-all approach to computing is not the answer." He said that its customer base wants the "flexibility [and] freedom to achieve the right level of compute for the right application."

"As Moore’s Law comes to an end, solutions providers are seeking specialized processing," he said.

About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.