AI and the Virtuous Cycle of Unprecedented Data Management

This article is part of our Library Series: System Design: Memory for AI

What you’ll learn:

- How domain-specific AI silicon has changed the game in computer architecture.

- Why there’s such a demand for neural networks.

General-purpose processors have long dominated market share, but computing cycles have steadily shifted toward newer domain-specific processors in applications such as scientific computing and artificial intelligence (AI). These processors can't run general applications well. They more than make up for it, however, by improving the performance and power efficiency of the applications they target by multiple orders of magnitude. The use of domain-specific silicon for AI has ushered in a new Golden Age of computer architecture, reinvigorating the semiconductor industry along the way.

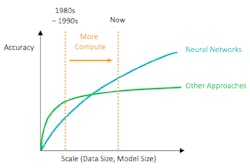

While many of the techniques used in modern AI chips and applications have been around for decades, the use of neural networks didn’t really take off until recently (see figure).

The chart provides some interesting insights into why AI technology failed to take off during the last period of intense interest back in the 1980s and 1990s. Transistor densities weren’t high enough to accommodate massive levels of parallelism, and memory technology didn’t provide the speed and capacity necessary to adequately train neural-network models. Consequently, more conventional approaches outperformed neural networks.

Need for Neural Networks

Fast forward to today. Processing power has improved by about five orders of magnitude, and memory capabilities have improved by three to four orders of magnitude. These gains, combined with large amounts of readily available labeled digital data for training machine-learning systems, have allowed neural networks to displace the conventional approaches of the past.

The world’s digital data continues to grow exponentially, with conservative estimates showing it doubling every two to three years. This rapid growth has created a virtuous cycle for AI. More digital data enables more accurate neural networks, and the need for neural networks keeps growing because they’re one of the few viable methods for making sense of all this data. New algorithms are also born out of the sea of information available to us, adding to the pace of innovation in the field.

While nearly 40 years have passed since the last wave of interest in neural networks, it’s clear that we’ve begun a new chapter in the history of AI and machine learning, fueled by advances in computer architecture, semiconductor technology, and readily available data.

Looking ahead, more performance is needed to address new problems and ever-growing data sets. For decades, the semiconductor industry has relied on Moore’s Law and manufacturing improvements to deliver more transistors and faster clock speeds, and on Dennard Scaling to improve power efficiency. The irony is that just when we need them most, Moore’s Law is slowing and Dennard Scaling has all but stopped. Domain-specific silicon and the use of many compute engines in parallel have provided a much-needed boost in performance. The challenge will be to keep innovating so that we maintain the rate of improvement we’ve enjoyed in recent years.

In this series on memory systems for AI, we’ll explore how renewed interest in new AI architectures is acting as a catalyst for domain-specific silicon design, which in turn is driving a renaissance in computer architecture. Memory bandwidth is a critical resource for AI applications, so memory systems have once again become a focal point. That makes this an extremely exciting time to be part of the semiconductor industry.

About the Author

Steven Woo

Steven Woo is a Fellow and Distinguished Inventor at Rambus Inc., working on technology and business development efforts across the company. He is currently leading research work within Rambus Labs on advanced memory systems for data centers and AI/ML accelerators, and manages a team of senior technologists.

Since joining Rambus, Steve has worked in various roles leading architecture, technology, and performance analysis efforts, and in marketing and product planning roles leading strategy and customer programs. Steve received his PhD and MS degrees in Electrical Engineering from Stanford University, and Master of Engineering and BS Engineering degrees from Harvey Mudd College.