CXL Ushers in a New Era of Data-Center Architecture

What you’ll learn:

- The vital relationship between compute and memory.

- The improvements of CXL 2.0 over CXL 1.1.

- The utility of memory pooling.

Exponential growth in the world’s digital data is driving change in how that data is processed. Server and data-center architectures are on the threshold of major shifts that will let these systems achieve higher performance and lower operating costs. Server architecture has remained largely unchanged for the past two decades, but it’s now being revolutionized to keep up with the growing demands of artificial intelligence (AI), machine learning, cloud computing, and data analytics.

The computing industry has long sought ways to expand memory capacity and bandwidth and to break the tight coupling between processors and the resources they use. Today, data centers are moving from a traditional model of tightly bound, dedicated resources toward a disaggregated model. Disaggregation will let data centers build pools of processing and memory resources that can be provisioned efficiently to meet a wide range of needs, delivering higher performance and throughput through better resource utilization.

The recent introduction of the Compute Express Link (CXL) standard, an advanced interconnect for processors, memory, and accelerators, is a critical enabler for memory expansion and resource pooling, and therefore for this transformation. CXL builds on the widely adopted PCIe infrastructure, reusing the PCIe 5.0 physical layer, to offer features that can increase the performance of many workloads compared with existing architectures while remaining compatible with current PCIe hardware.

CXL 2.0

The CXL standard, now at version 2.0, supports a broad range of use cases through three protocols: CXL.io, CXL.cache, and CXL.mem. CXL.io is largely equivalent to the PCIe 5.0 protocol, leveraging the broad industry adoption and familiarity of PCIe. As the foundational protocol, it is required for every CXL device and handles device discovery, configuration, interrupts, and register access, so it applies to all use cases. CXL.cache targets more specific applications, letting accelerators efficiently access and cache host memory for improved performance. And with CXL.mem, a host such as a processor can access device-attached memory using load/store commands.
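CXL 2.0 groups devices into three types according to which of these protocols they implement, with CXL.io mandatory for all of them. The Python snippet below is a minimal illustrative sketch of that mapping; `Protocol`, `DEVICE_TYPES`, and the two helper functions are hypothetical names for this example, not part of any CXL software interface.

```python
from enum import Enum, auto


class Protocol(Enum):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration, interrupts
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host issues loads/stores to device-attached memory


# Protocol mix for the three CXL device types; CXL.io is present in every type.
DEVICE_TYPES = {
    # e.g., an accelerator or SmartNIC that caches host memory but exposes none
    "Type 1": frozenset({Protocol.IO, Protocol.CACHE}),
    # e.g., an accelerator with its own attached memory
    "Type 2": frozenset({Protocol.IO, Protocol.CACHE, Protocol.MEM}),
    # e.g., a memory expander
    "Type 3": frozenset({Protocol.IO, Protocol.MEM}),
}


def host_can_load_store(device_type: str) -> bool:
    """True if the host can access the device's memory via CXL.mem."""
    return Protocol.MEM in DEVICE_TYPES[device_type]


def device_can_cache_host_memory(device_type: str) -> bool:
    """True if the device can coherently cache host memory via CXL.cache."""
    return Protocol.CACHE in DEVICE_TYPES[device_type]


for name, protocols in DEVICE_TYPES.items():
    print(name, sorted(p.name for p in protocols))
```

A Type 3 memory expander is the device class behind the memory expansion and pooling use cases discussed in the rest of this article.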

Together, these three protocols enable coherent sharing of memory resources between computing devices, such as a CPU host and an AI accelerator. Because both sides see a coherent view of memory, they can communicate through shared memory rather than through explicit data copies, which simplifies programming.

Low-latency connectivity and memory coherency translate directly into better computing performance, higher efficiency, and lower operating costs. CXL memory expansion adds significant capacity and bandwidth beyond the tightly bound DIMM slots in today’s servers: a CXL-attached device makes it possible to add more memory to a CPU host processor. And when paired with persistent memory, the low-latency CXL link lets the CPU host use this additional memory alongside DRAM.

Many emerging workloads, such as AI, depend on large memory capacities for their performance. Since these are the workloads most businesses and data-center operators are investing in, the advantages of CXL are clear.

Into the Pool

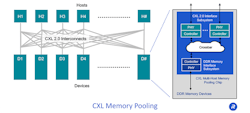

Without the capacity expansion benefits of CXL, processors must access additional memory remotely, incurring significant latency and bandwidth penalties. CXL avoids these bottlenecks while also raising the host processor’s overall memory bandwidth: the additional CXL connections provide more lanes over which data can travel between processor and memory, adding to the bandwidth of the DIMM slots connected directly to the host. CXL 2.0 also introduced switching capabilities (similar to those in PCIe), enabling memory disaggregation and making CXL memory pooling possible.
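The bandwidth argument can be checked with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical server with eight DDR5-4800 channels and four x16 CXL links running at the PCIe 5.0 rate of 32 GT/s; the function names and the configuration are illustrative only. It deducts only 128b/130b line encoding, so real CXL throughput after flit and protocol overhead will be lower.

```python
def pcie5_link_gbs(lanes: int, gt_per_s: float = 32.0) -> float:
    """Raw per-direction bandwidth (GB/s) of a link running at PCIe 5.0 rates.

    Only 128b/130b line encoding is deducted; CXL flit, CRC, and protocol
    overheads are ignored, so delivered throughput will be lower.
    """
    return gt_per_s * 1e9 * lanes * (128 / 130) / 8 / 1e9


def ddr_channel_gbs(mt_per_s: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth (GB/s) of one DDR channel with a 64-bit (8-byte) data bus."""
    return mt_per_s * 1e6 * bus_bytes / 1e9


# Hypothetical server: 8 DDR5-4800 channels plus 4 x16 CXL memory-expansion links.
dimm_bw = 8 * ddr_channel_gbs(4800)
cxl_bw = 4 * pcie5_link_gbs(16)

print(f"DIMM channels: {dimm_bw:.1f} GB/s")
print(f"CXL links (per direction): {cxl_bw:.1f} GB/s")
```

With these assumptions the DIMMs contribute roughly 307 GB/s and the CXL links roughly 252 GB/s. The two figures are not strictly comparable: a DDR channel’s bandwidth is shared between reads and writes, while the CXL number is per direction on a full-duplex link.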

This capability for memory pooling shouldn’t be underestimated. With pooling, a host can access far more memory than fits in a server chassis today, while that capacity also remains available to other servers (see figure). When a server no longer needs pooled memory, it can release that memory back to the pool for use by other servers.

Many server architectures are likely to move first to CXL 2.0 direct-connect memory expansion because of its performance and cost benefits, which opens the door to pooled memory in the future. Letting multiple hosts draw on a common memory pool through a switch gives end users tremendous flexibility: memory can be provisioned to different servers as needed, while latency stays low.
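One reason provisioning flexibility matters is that servers rarely hit their peak memory demand at the same time. The sketch below uses made-up demand figures for three hypothetical servers to compare sizing each server for its own peak against sizing a shared pool for the peak of combined demand. It ignores the local DRAM each server keeps, switch latency, and allocation granularity.

```python
# Hypothetical memory demand (GiB) sampled over four intervals for three
# servers whose peaks occur at different times. Invented for illustration.
demand = {
    "server_a": [96, 384, 128, 96],
    "server_b": [320, 96, 96, 128],
    "server_c": [64, 128, 352, 96],
}


def dedicated_capacity(demand: dict[str, list[int]]) -> int:
    """Each server gets enough dedicated memory for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand: dict[str, list[int]]) -> int:
    """A shared pool only has to cover the peak of the combined demand."""
    return max(sum(interval) for interval in zip(*demand.values()))


print("dedicated:", dedicated_capacity(demand), "GiB")
print("pooled:", pooled_capacity(demand), "GiB")
```

For these invented figures, dedicated provisioning needs 1,056 GiB while the pool needs 608 GiB; the difference is memory that would otherwise sit stranded in individual servers.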

Low-latency connections let CXL memory devices use DDR memory to provide expanded capacity and bandwidth that augment the host’s main memory. A host can access all or part of the capacity of as many devices as its workload requires. When the workload completes, the provisioned memory is returned to the pool so that it can be provisioned to other servers as future workloads arrive.
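In CXL 2.0, a Fabric Manager assigns memory devices, or logical devices carved from a multi-logical device (MLD), to hosts. The Python sketch below models only the capacity bookkeeping of that idea: a host receives portions of as many devices as it needs, and its capacity returns to the pool on release. `PooledDevice` and `MemoryPool` are hypothetical classes, not a Fabric Manager API, and the first-fit policy is an arbitrary choice for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PooledDevice:
    """A hypothetical CXL memory device whose capacity can be split among hosts."""
    name: str
    capacity_gib: int
    assigned: dict[str, int] = field(default_factory=dict)  # host -> GiB

    @property
    def free_gib(self) -> int:
        return self.capacity_gib - sum(self.assigned.values())


class MemoryPool:
    """Toy capacity bookkeeping for pooled memory; not a CXL Fabric Manager API."""

    def __init__(self, devices: list[PooledDevice]):
        self.devices = devices

    def free_gib(self) -> int:
        return sum(d.free_gib for d in self.devices)

    def allocate(self, host: str, gib: int) -> dict[str, int]:
        """Grant `gib` GiB to `host`, first-fit across as many devices as needed."""
        if gib <= 0:
            raise ValueError("request must be positive")
        if gib > self.free_gib():
            raise ValueError(f"pool has only {self.free_gib()} GiB free")
        grant: dict[str, int] = {}
        remaining = gib
        for dev in self.devices:
            take = min(dev.free_gib, remaining)
            if take:
                dev.assigned[host] = dev.assigned.get(host, 0) + take
                grant[dev.name] = take
                remaining -= take
            if remaining == 0:
                break
        return grant

    def release(self, host: str) -> int:
        """Return everything assigned to `host` to the pool; report GiB freed."""
        return sum(dev.assigned.pop(host, 0) for dev in self.devices)


pool = MemoryPool([PooledDevice("mem0", 256), PooledDevice("mem1", 256)])
print(pool.allocate("host_a", 384))  # grant spans both devices
print(pool.free_gib())
print(pool.release("host_a"))
print(pool.free_gib())
```

Here `host_a` asks for more than one 256 GiB device holds, so the grant spans both devices; after `release`, all 512 GiB is free again for the next server.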

A further benefit is that CXL was designed with security in mind: all three protocols can be secured via Integrity and Data Encryption (IDE), which provides confidentiality, integrity, and replay protection while keeping added latency to a minimum. In a world where the amount and value of personal and business data keep growing, this added layer of security is a key consideration for future data centers.
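IDE itself is built on AES-GCM. The Python standard library has no AES-GCM, so the sketch below substitutes HMAC-SHA256 to illustrate two of the three properties, integrity and replay protection, by binding a per-link counter into every tag. It provides no confidentiality and is not how IDE is implemented; `ToyIntegrityLink` is a hypothetical class, and a single object stands in for both ends of the link.

```python
import hashlib
import hmac
import os


class ToyIntegrityLink:
    """Toy integrity + replay protection; HMAC-SHA256 stands in for AES-GCM.

    No encryption is performed, so this offers no confidentiality.
    """

    def __init__(self, key: bytes):
        self._key = key
        self._tx_counter = 0   # next counter the sender will use
        self._rx_expected = 0  # next counter the receiver will accept

    def _tag(self, counter: int, payload: bytes) -> bytes:
        # Binding the counter into the MAC makes an old packet fail the
        # replay check even though its tag is still valid for its counter.
        msg = counter.to_bytes(8, "big") + payload
        return hmac.new(self._key, msg, hashlib.sha256).digest()

    def send(self, payload: bytes) -> tuple[int, bytes, bytes]:
        counter = self._tx_counter
        self._tx_counter += 1
        return counter, payload, self._tag(counter, payload)

    def receive(self, counter: int, payload: bytes, tag: bytes) -> bytes:
        if not hmac.compare_digest(tag, self._tag(counter, payload)):
            raise ValueError("integrity check failed")
        if counter != self._rx_expected:
            raise ValueError("replayed or out-of-order packet")
        self._rx_expected += 1
        return payload


link = ToyIntegrityLink(os.urandom(32))
pkt = link.send(b"cache line data")
print(link.receive(*pkt))
try:
    link.receive(*pkt)  # the same packet a second time is rejected
except ValueError as err:
    print(err)
```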

Looking Forward

More work remains, though. CXL is an emerging technology, and realizing chip solutions that support these new memory expansion and pooling capabilities will require bringing together multiple critical technologies. Fortunately, the industry is converging on CXL and investing in its development, which should make these capabilities possible within the next few years. Upcoming server architectures will include CXL interfaces, opening up memory expansion through CXL interconnects.

CXL provides an extensible interconnect that lets critical resources such as memory be less tightly bound to processors than in the past, paving the way for fully disaggregated architectures in the years to come.

About the Author

Steven Woo

Steven Woo is a Fellow and Distinguished Inventor at Rambus Inc., working on technology and business development efforts across the company. He is currently leading research work within Rambus Labs on advanced memory systems for data centers and AI/ML accelerators, and manages a team of senior technologists.

Since joining Rambus, Steve has worked in various roles leading architecture, technology, and performance analysis efforts, and in marketing and product planning roles leading strategy and customer programs. Steve received his PhD and MS degrees in Electrical Engineering from Stanford University, and Master of Engineering and BS Engineering degrees from Harvey Mudd College.