Members can download this article in PDF format.

What you'll learn:

- How to build an ML accelerator chip using PCI Express.

- What is the training process for a machine-learning model?

- The emergence of complex models that need multiple accelerators.

Machine-learning (ML), especially deep-learning (DL)-based solutions, are penetrating all aspects of our personal and business lives. ML-based solutions can be found in agriculture, media and advertising, healthcare, defense, law, finance, manufacturing, and e-commerce. On a personal basis, ML touches our lives when we read Google news, play music from our Spotify playlists, in our Amazon recommendations, and when we speak to Alexa or Siri.

Due to the wide usage of machine-learning techniques in business and consumer use cases, it’s evident that systems offering high performance with low total cost of operation for ML applications will be quite attractive to customers deploying such applications. Consequently, there’s a rapidly growing market for chips that efficiently process ML workloads.

For some markets such as the data center, these chips can be discrete ML accelerator chips. Given the addressable market, it’s no surprise that the market for discrete ML accelerators is highly competitive. In this article, we will outline how PCIe technology can be leveraged by discrete ML accelerator-chip vendors to make their product stand out in such a hyper-competitive market.

Leveraging PCIe to Make an ML Accelerator Chip that Meets Customer Demands

In addition to offering the best performance per watt per dollar for the widest possible set of machine-learning use cases, several capabilities serve as table stakes in the highly competitive ML accelerator market. First, the accelerator solution must be able to attach to as many compute chips as possible from different compute-chip vendors. Choosing a widely adopted chip-to-chip interconnect protocol such as the PCI Express (PCIe) as the accelerator/compute-chip interconnect solution will automatically ensure that the accelerator can attach to almost all available compute chips.

Next, the accelerator must be easily discoverable, programmable, and manageable using standard OSes like Linux and Windows. Making the accelerator a PCIe device automatically enables it to be discovered using the enumeration flow defined for PCIe devices, configured and managed using well-known programming models by standard OSes. Typically, a vendor would have to supply a device driver for their accelerator product. Developing drivers for PCIe devices is well-understood and there’s a vast body of open-source code and information that vendors can use for driver development. This cuts down development costs and time to market for the vendor’s product.

Application software must be able to use the accelerator with minimum software development effort and cost. By turning the accelerator into a PCIe device, well-known robust software methods for accessing and using PCIe devices can be instantly deployed.

With the proliferation of cloud computing, a significant proportion of ML-based applications are hosted on the cloud as virtual-machine instances or containers. The performance of these virtual machines or containers can be improved by having access to an ML inference or training accelerator. Therefore, a ML accelerator’s attractiveness in the market is improved if it can be virtualized so that its acceleration capability is usable by multiple VMs or containers.

The industry standard for device virtualization is PCIe technology-based: SR-IOV. In addition, direct assignment of PCIe device functions to VMs is widely supported and used due to the high performance offered. Hence, by implementing PCIe architecture for their accelerator, vendors can address market segments that need high-performance virtualized accelerators.

Training

Before machine-learning models can be deployed in production, they must be trained. The training process for ML, especially deep learning, involves feeding in a huge number of training samples to the model that’s being trained.

In most cases, these samples need to be fetched or streamed from storage systems or the network. Therefore, the time to train to an acceptable level of accuracy will be affected by the bandwidth and latency properties of the link between the ML accelerator and storage systems or networking interfaces. The lower the time to train, the better the accelerator solution will be for customers.

An accelerator can potentially lower the time to train by using PCIe technology’s peer-to-peer traffic capability to stream data directly from the storage device or the network. Using the peer-to-peer capability this way improves performance by avoiding the round trip through the host compute system’s memory for the training samples.

In addition, most high-performance storage nodes and network interface cards (NICs) use PCIe protocol-based links to connect to other components in the system. Hence, by choosing to be a PCIe-compliant device, the accelerator can enable native peer-to-peer traffic with most of the storage and NICs.

The peer-to-peer capability of PCIe architecture can be useful in the inference and generation side of machine learning as well. For example, in applications like object detection in autonomous driving, a constant stream of camera outputs needs to be fed to the inference accelerator with the lowest latency as possible. In this case, peer-to-peer capability can be used to stream camera data to the inference accelerator with minimal latency.

High-bandwidth requirements for the connections between the ML accelerator chip and compute chips, storage cards, switches, and NICs necessitate high-data-rate serial transmission. As data rates increase and the distance between the chips expands or stays the same, advanced PCB materials and/or reach extension solutions, such as retimers, are required to stay within the channel insertion loss budget.

Retimers are fully defined in the PCIe specifications and can support a wide range of complex board designs and system topologies. Therefore, making the accelerator a PCIe device enables the accelerator vendor to take advantage of the PCIe technology ecosystem to achieve the necessary high-data-rate serial transmission in a variety of customer board designs and system topologies.

Multiple Accelerators for Complex Models

The leading edge in machine-learning fields like natural language processing is moving toward extremely large and complex models like GPT-3 (which has 175 billion parameters). Due to the parameter storage requirements and computational requirements of such large models, training or even prediction or generation (e.g., in language translation) using these models can be beyond the computational and memory capacity of a single accelerator die.

Therefore, a system with multiple accelerators becomes necessary when large models like GPT-3 are the preferred choice for the use case. In such multi-accelerator systems, the interconnect between system components needs to offer high bandwidth, be scalable, and be capable of accommodating heterogenous nodes attached to the interconnect fabric.

PCIe technology is a great option for system component interconnect due to its high bandwidth, and its ability to scale by deploying switches. As mentioned previously, the ubiquity of PCIe-based devices allows the same fabric to have NICs, storage devices, and the accelerators. This allows efficient peer-to-peer communication leading to lower time to train, lower inference latency, or higher inference throughput. For multi-accelerator use cases where a low-latency and high-bandwidth inter-accelerator interconnect is desired, the accelerator vendor can take advantage of the PCIe specification’s alternate protocol support to create a custom inter-accelerator interconnect.

Another important aspect to consider while designing accelerators to train large models like GPT-3, or to conduct inference using such models, is the significant amount of power that must be delivered to the accelerator for it to process these models at maximum performance level. PCIe specifications offer standardized methods for a system to deliver significant amounts of power to an accelerator card. By using PCIe technology, an accelerator vendor can safely design an accelerator card that consumes the maximum allowed by the PCIe architecture standard for a card without worrying about system interoperability from a wide variety of system vendors.

Power Efficiency

The performance per dollar of an ML solution depends in part on its power efficiency. For example, an inference accelerator might use its link to the compute SoCs only when a new inference request is passed from the compute SoC to the accelerator. For the rest of time, the link is essentially idle. Unless the link has low-power idle states, it will be consuming power unnecessarily by remaining in a high-performance active state.

For maximum efficiency, it’s important that the power consumption of the accelerator’s links to the rest of system scales linearly with the utilization of those links. PCIe offers link power states like L1 and L0p to modulate the power consumption of the link based on idleness and bandwidth usage.

In addition, PCIe specifications have device idle power states (D-states) that standard OSes can take advantage of to reduce the system’s power by putting the accelerator to sleep when not needed. PCIe technology also offers the ability to control the active power consumption of the accelerator. Therefore, PCIe specifications enable the accelerator to contribute positively to the system’s overall power efficiency.

PCIe and RAS

For data-center deployments of AI accelerators, reliability, availability, and serviceability (RAS) features are necessary in all system components, including accelerators. In addition, to be usable in practice, such RAS features must comply to what standard OSes and platform firmware expects. The PCIe architecture offers a rich suite of OS-friendly RAS features, including advanced error reporting, the ability to do hot add and removal of PCIe devices, etc. Therefore, choosing PCIe-based links as the means to connect to other system components helps the accelerator product meet the data-center market’s RAS needs.

An important advantage that PCIe specifications offer to an AI accelerator vendor is the ability to retarget the same solution to several different market segments. This is done by taking advantage of two features of PCIe technology: the availability of different form factors and the capability of a PCIe specification interface to have different link widths. This allows the vendor to scale the interface bandwidth, power consumption, and form factor in proportion to the acceleration capability needed for the market segment.

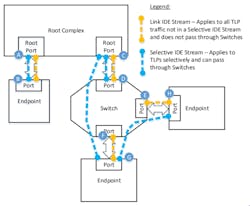

Confidentiality and integrity of the data that’s transferred to and from the accelerator is important for most customers. The PCIe specification has recently introduced integrity protection and data encryption (IDE) for data transfers over PCIe links. An accelerator vendor can leverage PCIe IDE to provide end-to-end confidentiality and integrity for data.

Both training and inference workflows can involve the CPU cores in the compute SoC and the accelerator executing the ML application in a collaborative fashion. This needs a high-bandwidth, low-latency communication channel between compute SoC and the accelerator. A PCIe-based link is an ideal fit for this application. By leveraging the PCIe specification’s alternate protocol support for custom chip-to-chip communication protocols, even lower latency and higher bandwidth efficiency can potentially be achieved.

Due to the competitive nature of the ML accelerator market, minimizing time to market is important for an accelerator vendor. Leveraging PCIe technology can help in this respect due to a rich ecosystem providing high-quality PCIe IP that can be leveraged for fast chip design and verification. Vendors can easily access compliance testing services to ensure that their chip will connect to all PCIe technology-compatible compute systems, and they have access to the large pool of PCIe architecture experts.

Conclusion

As mentioned previously, machine-learning (especially deep learning) models are growing in size and complexity. To keep up with this trend in terms of computational and memory capacity, systems with multiple interconnected accelerator chips will become increasingly necessary. Chip-to-chip interconnect performance needs to ramp up along with computation and memory capacity to realize the true performance potential of such systems.

Choosing PCIe technology for chip-to-chip interconnect helps vendors take advantage of the bandwidth increase that each new generation of PCIe technology brings to market. As an example, PCIe 6.0 is projected to offer a data rate of 64 Gtransfers/s, which is double the PCIe 5.0 data rate.

In conclusion, adopting PCIe specifications will enable ML accelerator vendors to:

- Develop market-leading accelerators with lower risk and faster time to market.

- Have a robust pathway to keep up with workload demands for scaling of chip-to-chip interconnect bandwidth.

About the Author

Dong Wei

Lead Standards Architect and Fellow, Arm Ltd. and PCI-SIG Member

Dong Wei is an Arm Fellow. He leads the Arm SystemReady program, covering industry standards such as PCI Express, Trusted Computing, CXL, UEFI, ACPI, DMTF, and OCP. Dong is a leading contributor to the PCI Firmware Specification and co-chairs the PCI-SIG Firmware Working Group since 2009.

Dong has been involved with the PCI-SIG for over 15 years and is currently a Board member. He’s also currently a Board member of the CXL Consortium, and Chief Executive and Board member of the UEFI Forum, co-chairing its ACPI Working Group.