The Big Bet Behind the Infrastructure Processing Unit

Last year, a new class of networking chip called the infrastructure processing unit (IPU) joined Intel’s portfolio of server chips. Now the company is placing a long-term bet on the IPU as it looks to win favor with cloud giants.

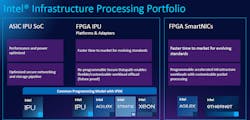

The Santa Clara, California-based company recently revealed a roadmap for IPUs that extends through 2026, building on the first-generation IPU it co-developed with Google. Intel said it would continue to build IPUs based on both FPGAs, which customers can reprogram at will, and ASICs, which lock features into hardware at the expense of flexibility. Every new IPU will have more throughput than the last, up to 800-Gb/s Ethernet by 2025 or later.

Intel’s foray into IPUs follows rival efforts by the likes of Marvell, NVIDIA, and pre-AMD Xilinx, which sell what they call the data processing unit (DPU) to offload infrastructure jobs that drag on the central processing units (CPUs) and graphics processing units (GPUs) in a modern data center.

An IPU is fundamentally the same concept as a DPU. The goal is to free up a server’s CPU by intelligently offloading lots of networking-related and other backstage workloads to purpose-built protocol accelerators.

Patty Kummrow, a VP in Intel's Data Platforms business unit and GM of Ethernet Products, is seeing strong demand for IPUs and other domain-specific processors in everything from data centers in the cloud to edge devices.

Building Blocks

“IPU is a key part of the future data center architecture,” Kummrow said earlier this month in a briefing with reporters.

Today, the general-purpose CPU at the heart of a server acts as a traffic cop for the data center, managing networking, storage virtualization, encryption, and other security work that primarily runs in software.

But relying on the CPU to coordinate the traffic in a data center is expensive and very inefficient. In servers, more than one-third of the CPU’s capacity is wasted on these infrastructure workloads, according to NVIDIA.

Intel is supplying the IPUs in the form of a networking card that cloud companies can attach to every server. IPUs have enough processing power to offload a wide range of networking (RDMA over Converged Ethernet, or RoCE), storage (Nonvolatile Memory Express, or NVMe), and security (IPSec) workloads that run on hardened logic inside. That frees up the CPU’s clock cycles for more valuable apps, or for a tenant’s workloads in the case of cloud data centers.
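To put the offload argument in rough numbers, here is a minimal back-of-the-envelope sketch in Python. It takes the "more than one-third" figure cited above as an infrastructure share of exactly one-third and assumes that all of that work moves to the IPU. The 48-core server and the function names are hypothetical, chosen only for illustration; real savings depend on the workload.

```python
from fractions import Fraction


def tenant_cores(total_cores: int, infra_share: Fraction) -> Fraction:
    """Cores left for tenant workloads while infrastructure work stays on the CPU."""
    if not 0 <= infra_share < 1:
        raise ValueError("infra_share must be in [0, 1)")
    return total_cores * (1 - infra_share)


def offload_gain(infra_share: Fraction) -> Fraction:
    """Relative increase in tenant capacity if all infrastructure work leaves the CPU."""
    if not 0 <= infra_share < 1:
        raise ValueError("infra_share must be in [0, 1)")
    return infra_share / (1 - infra_share)


# Hypothetical server size; not a figure from Intel or NVIDIA.
TOTAL_CORES = 48
# Assumption: exactly one-third of CPU capacity goes to infrastructure work.
INFRA_SHARE = Fraction(1, 3)

before = tenant_cores(TOTAL_CORES, INFRA_SHARE)
gain = offload_gain(INFRA_SHARE)

print(f"Tenant cores without offload: {before} of {TOTAL_CORES}")
print(f"Tenant cores with full offload: {TOTAL_CORES}")
print(f"Relative gain in tenant capacity: {float(gain):.0%}")
```

Under these assumptions, a one-third infrastructure share means that a full offload raises usable tenant capacity by half, which is why the per-server cost of an add-in card can be attractive to cloud operators.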

"IPUs give us some critical advantages. One of them is a separation of infrastructure and tenant workloads," said Kummrow. "When we offload these infrastructure functions, we free up the CPU cores to do tenant workloads, and we can also accelerate them. The IPU is purpose-built hardware for those functions.”

SmartNICs to IPUs

Adding three generations of IPUs to its existing portfolio underscores that the underlying technology is key to Intel’s cloud data-center strategy. With the move, Intel is also further acknowledging the fact that the CPU alone is no longer sufficient to meet the needs of modern data centers.

Under CEO Pat Gelsinger, Intel is trying to strike back against AMD, NVIDIA, and other rivals in the data-center market with a strategy that focuses on a broad portfolio of chips built for different purposes. Earlier this month, Intel rolled out its latest Habana-designed server chips for carrying out machine-learning jobs. Intel is also in the final stages of developing its first discrete GPU for the data center. The IPU is yet another piece of the puzzle.

As demand for cloud services rises, networking is becoming a bottleneck in the data centers driving them. A deluge of data is overwhelming the relatively rudimentary network interface cards (NICs) in data centers today.

That has pushed cloud giants to seek new ways to manage their data-center infrastructure more efficiently while giving them the flexibility to run workloads where desired. Intel said its first FPGA-based IPUs, sometimes referred to as SmartNICs, are already being deployed by Microsoft and other hyperscalers.

AWS is also several generations into its “Nitro” series of SmartNICs that play roughly the same role as Intel’s IPUs and NVIDIA’s DPUs: They securely manage infrastructure chores while giving thousands of its customers more control over the functions of a server’s CPU and memory. Nitro uses AWS-designed Arm-based SoCs.

The IPU Roadmap

NVIDIA introduced the first processor in its BlueField family of DPUs and the software to run them in 2020. But Intel remains in the early stages of its IPU roadmap. It launched its first ASIC-based IPU, Mount Evans, only last year.

Intel co-developed the IPU with Google's cloud computing arm. The chip integrates 16 Arm Neoverse N1 cores, the same CPU core at the heart of AWS's Graviton2 server processor, which is also one of the biggest threats to Intel’s dominance in the data center. It also integrates 200-Gb/s Ethernet and acceleration engines for NVMe, LAN, RDMA, cryptography, and other security workloads, along with 16 lanes of PCIe Gen 4 connectivity.

Intel has also started offering its second-generation 200-Gb/s IPU, code-named Oak Springs Canyon, based on its Agilex FPGAs and Xeon D processors. The company said its FPGA-based IPUs have a “reprogrammable secure data path” that gives customers more flexibility in offloading workloads than its FPGA-based SmartNICs.

The next generation of its FPGA- and ASIC-based IPUs, code-named Hot Springs Canyon and Mount Morgan, respectively, is in development and set to be released in 2023 and 2024, featuring 400 Gb/s of networking throughput.

“We have talked about our data center of the future,” said Kummrow, adding that “we see the IPU as a critical piece to enable all those optimizations and performance drivers our customers see.”

Intel will roll out another generation of 800-Gb/s FPGA- and ASIC-based IPUs in 2025 or 2026.

Competition Ahead

The latest move by Intel into the market signals that it sees infrastructure processors as an important asset to win favor with cloud providers.

But as the category starts to attract more market interest, formidable competitors are jumping into the race.

AMD has agreed to buy networking chip startup Pensando Systems for $1.9 billion. The deal would give it access to high-performance DPUs that are already being deployed in data centers by Microsoft and Oracle.

Intel is aiming to stand out by striking a balance between customer needs for performance and flexibility.

Kummrow said customers with evolving workloads may reprogram the FPGA-based IPUs at will. The FPGAs can be reprogrammed to run different tasks more efficiently than general-purpose silicon and add features over time.

Others willing to go without flexibility can use its ASIC-based IPUs to wring out more performance for less power. "With the ASIC, there is going to be a lot more performance and power optimization because all those functions are committed to the hardware," said Kummrow. She said the lessons Intel learned from designing FPGA-based IPUs and the ASICs along with Google Cloud have “informed what we are hardening in the SoC.”

Kummrow noted that Intel is also investing in a complete set of software tools, called the Infrastructure Programmer Development Kit (IPDK), for programming both ASIC- and FPGA-based IPUs.

“It will unlock the full value of the hardware underneath,” she said. “Customers can abstract the hardware, whether that means IPUs, FPGAs, or CPUs, and it will not matter what vendor the hardware comes from.”

About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.