SAS: The Interface of Choice in Modern Storage-System Architecture

What you’ll learn:

- Current and future technology advances in HDDs for designing storage infrastructure with the best TCO.

- Details to consider in technology assessments, including cost, performance, and capacity.

- Understanding of how different storage technologies can complement each other for your needs.

Mass storage is more important than ever, and today, IT architects who manage public, private, edge, and hybrid cloud infrastructures need to deploy storage solutions that deliver optimal performance for the lowest possible cost. Reducing total cost of ownership (TCO) is a primary driver when it comes to a cost-benefit analysis of storage solutions. It’s the reason cloud architects continue to use hard-disk drives (HDDs) as the primary workhorse for enterprise data centers.

The big public cloud service providers (CSPs) have dominated an era of mobile-cloud centralized architecture. HDDs currently rule the cloud exabyte market—offering the lowest cost per terabyte based on a combination of factors, including price, cost, capacity, power, performance, reliability, and data retention.

Solid-state drives (SSDs), with their performance and latency metrics, provide an appropriate value proposition for performance-sensitive, highly transactional workloads closer to compute nodes. HDDs represent the predominant storage for cloud data centers because they provide the best TCO for the vast majority of cloud workloads (i.e., cool and cold tier storage).

A well-designed storage infrastructure delivers end-to-end management and real-time access required to help businesses extract maximum value from their data. Data-center storage architectures must be optimized for massive capacity, fine-tuned for maximum resource utilization, and engineered for ultra-efficient data management.

Serial-attached SCSI (SAS) is the interface of choice for these architectures, since it’s designed to connect up to 65,536 devices per link and, from its conception, was designed for backplane applications. The SAS tunneling protocol for SATA provides shorter-reach solutions to enable the use of SATA storage devices, but SAS-to-SAS provides the maximum reach and performance when compared to a SAS-to-SATA configuration. SAS-to-SAS connectivity also maximizes utilization of emerging performance-enhancing HDD technologies.

Looking ahead, HDDs are expected to hold onto their market share, thanks in part to advances that continue to increase performance and capacity while maintaining reliability. Balancing price against required performance is key for cloud architects who need to deliver on performance benchmarks as capacity scales. Some HDD attributes and advances are briefly discussed in this article to show what to expect in future HDDs found in data centers.

Cost Per Gigabyte of HDD vs. SSD

HDDs remain the primary low-cost, high-capacity storage solution for cloud applications that aren’t driven by absolute performance. SSDs and non-volatile memory provide the lowest-latency solutions when performance is the absolute priority. However, when it comes to online shopping, social media, archiving images, or watching videos, the increased latency of retrieving data stored on HDDs is virtually undetectable by a human. This provides a perfect usage model for HDDs. Even as capacities continue to rise, the cost per gigabyte for HDDs remains at all-time lows.

The performance of SSDs doesn’t come without a cost. The price per gigabyte is many times greater than that of an HDD, and in high-usage applications, there’s also endurance to consider, as the solid-state memory has a limited number of program/erase cycles. But that doesn’t mean the SSD doesn’t have its place in the market. Proper management of these properties yields great performance benefits where needed, thus playing favorably into the system TCO.

Service Life and Data-Center Reliability

SSDs are typically specified for service life in drive writes per day or terabytes written. This is due to longevity being based on NAND program-erase cycles. HDDs, on the other hand, have the service life specified in units of time. Enterprise HDDs usually have a five-year warranty.
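The two SSD endurance ratings mentioned above are related by simple arithmetic. The following is a minimal sketch, assuming the common convention that rated terabytes written equals drive writes per day times capacity times the number of days in the rating period. The function names and the example drive (3.84 TB, 1 DWPD, five-year period) are illustrative assumptions, not values from any datasheet.

```python
def tbw_from_dwpd(dwpd: float, capacity_tb: float, years: float) -> float:
    """Terabytes written (TBW) implied by a drive-writes-per-day (DWPD) rating."""
    return dwpd * capacity_tb * 365 * years


def dwpd_from_tbw(tbw: float, capacity_tb: float, years: float) -> float:
    """DWPD implied by a terabytes-written (TBW) rating."""
    return tbw / (capacity_tb * 365 * years)


if __name__ == "__main__":
    # Hypothetical SSD: 3.84 TB, rated 1 DWPD over a five-year period.
    tbw = tbw_from_dwpd(1.0, 3.84, 5)
    print(f"{tbw:,.0f} TB written over the rating period")
```

Converting both ratings to the same unit makes it easier to compare an SSD's write budget against the expected write volume of a workload.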

Both SSDs and HDDs have internal mechanisms to recognize and correct media defects, and methods are available for the system to monitor drive health. Although it can be achieved through many different mechanisms, some form of data backup is always recommended, and the low cost of HDDs makes them a great candidate for redundancy.

The SAS dual-port interface provides an option for initiator (host) redundancy in case a controller loses access to the drive. An independent second controller can be connected to the second SAS port to access the data on the drive.
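The redundancy idea can be illustrated with a toy model. This is only a sketch of the failover logic, not of the SAS protocol itself: the classes `DualPortDrive` and `Controller` and the function `read_with_failover` are hypothetical names invented for this example, and a real system would rely on its operating system's multipath support.

```python
class DualPortDrive:
    """Toy model of a drive reachable through two independent ports."""

    def __init__(self) -> None:
        self._blocks: dict[int, bytes] = {}

    def write(self, lba: int, data: bytes) -> None:
        self._blocks[lba] = data

    def read(self, lba: int) -> bytes:
        return self._blocks[lba]


class Controller:
    """Hypothetical initiator attached to one port of the drive."""

    def __init__(self, name: str, drive: DualPortDrive) -> None:
        self.name = name
        self.drive = drive
        self.healthy = True

    def read(self, lba: int) -> bytes:
        if not self.healthy:
            raise ConnectionError(f"{self.name} cannot reach the drive")
        return self.drive.read(lba)


def read_with_failover(controllers: list[Controller], lba: int) -> tuple[str, bytes]:
    """Try each controller in order and return (controller name, data)."""
    for controller in controllers:
        try:
            return controller.name, controller.read(lba)
        except ConnectionError:
            continue  # this path is down; try the controller on the other port
    raise ConnectionError("no controller can reach the drive")


if __name__ == "__main__":
    drive = DualPortDrive()
    drive.write(0, b"payload")
    primary = Controller("controller-A", drive)
    secondary = Controller("controller-B", drive)
    primary.healthy = False  # simulate a failed primary path
    print(read_with_failover([primary, secondary], 0))
```

Because both controllers reference the same drive object, data written through one path remains readable through the other, which is the property the second port is meant to provide.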

SAS, with its designed bit error ratio (BER) of 1 × 10⁻¹⁵, has fewer data errors at the system level than some other popular interfaces. This is especially important when retrieving data from cool or cold storage, as the propagation delay through the system may add significant time to receiving valid data if retries are needed. In the event a drive must be added or replaced, SAS has always been designed with hot-plug capability. A hot-plug event with SAS is considered routine rather than disruptive.
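To put a BER figure into perspective, the expected number of bit errors scales linearly with the amount of data moved. The sketch below is a rough estimate under stated assumptions: it counts payload bits only, ignores encoding overhead and any error detection or retry, and treats bit errors as independent. The comparison value of 1 × 10⁻¹² is a hypothetical figure chosen for contrast, not a claim about any specific interface.

```python
import math


def expected_bit_errors(bytes_transferred: float, ber: float) -> float:
    """Expected number of bit errors for a transfer at a given bit error ratio."""
    return bytes_transferred * 8 * ber


def prob_at_least_one_error(bytes_transferred: float, ber: float) -> float:
    """Probability of one or more bit errors (Poisson approximation)."""
    return -math.expm1(-expected_bit_errors(bytes_transferred, ber))


if __name__ == "__main__":
    one_petabyte = 1e15  # bytes
    for label, ber in (("BER 1e-15", 1e-15), ("hypothetical BER 1e-12", 1e-12)):
        errors = expected_bit_errors(one_petabyte, ber)
        print(f"{label}: about {errors:,.0f} expected bit errors per petabyte")
```

Each error that triggers a retry adds latency, which is why a lower BER matters most where the data path is already slow.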

HDD Performance: Multi-Actuator

The HDD is a mechanical marvel. The concept of storing data by polarizing magnetic particles on a plated disk may seem like a simple matter, but the technology involved is almost overwhelming. Rotating the media at a constant speed, positioning the heads to the proper location, as well as dealing with external forces such as shock and vibration pose some formidable challenges, yet capacities and performance keep increasing.

Rotational speed has been used to increase performance, with 2.5-in. form-factor drives spinning the disks as fast as 15,000 rpm. With the trend toward maximum capacity and 3.5-in. form factors, 7,200 rpm is the fastest practical rotational speed given the tradeoff between power and performance.
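The effect of spindle speed on access time can be estimated with a standard rule of thumb: on average, the target sector is half a revolution away from the head. The sketch below applies that rule to the two speeds mentioned above. It covers rotational latency only and leaves out seek time and transfer time; the function name is an illustrative choice.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds (half of one revolution)."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


if __name__ == "__main__":
    for rpm in (15_000, 7_200):
        latency = avg_rotational_latency_ms(rpm)
        print(f"{rpm} rpm: about {latency:.2f} ms average rotational latency")
```

At 15,000 rpm the average wait is 2 ms, while at 7,200 rpm it is a little over 4 ms.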

Today’s HDDs use a single actuator to transfer I/Os from the device to the host using a single read/write channel. This results in fixed performance irrespective of the capacity gain and number of heads/disks per drive. By using two actuators that can transfer I/Os independent of each other within a single HDD, a parallelism can be created that enables up to twice the performance (Fig. 1).

One method of implementation within a drive is to have the top half of the heads/disks addressed by one actuator while the bottom half is addressed by a second actuator. Each actuator addresses one-half of the total capacity of the drive.

The SAS interface is perfect for such an implementation. The two actuators are addressed by the host via SAS logical unit number (LUN) protocol. The LUN protocol is already built into the SAS interface architecture and can be leveraged to address both actuators independently.

LUN0 and LUN1 present the same worldwide name (WWN) to the host with a unique identifier to specify LUN0 versus LUN1. When plugged into the host system, one HDD shows up as two independent devices of equal capacity—e.g., one 14-TB drive will show up as two 7-TB drives. For many workloads, the performance is essentially doubled while the power usage is significantly less than two separate drives since there’s only one spindle motor used to rotate the media.
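The host-side view described above can be sketched as a small data model. This is an illustration only: `LogicalUnit` and `present_dual_actuator` are hypothetical names, the WWN string is a placeholder, and the even capacity split assumes each actuator serves exactly half of the drive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalUnit:
    wwn: str           # shared by every LUN on the same physical drive
    lun: int           # distinguishes LUN0 from LUN1
    capacity_tb: float


def present_dual_actuator(wwn: str, total_capacity_tb: float,
                          actuators: int = 2) -> list[LogicalUnit]:
    """Return the logical units a host would see for one multi-actuator drive."""
    per_lun = total_capacity_tb / actuators
    return [LogicalUnit(wwn, lun, per_lun) for lun in range(actuators)]


if __name__ == "__main__":
    # Placeholder WWN; a real drive reports its own worldwide name.
    for unit in present_dual_actuator("WWN-EXAMPLE", 14.0):
        print(f"{unit.wwn} LUN{unit.lun}: {unit.capacity_tb:g} TB")
```

Software that places data on such a drive can use the shared WWN to recognize that both logical units sit in the same physical device, for example to avoid storing both copies of mirrored data there.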

Per-device power consumption is higher for a multi-actuator HDD than for a single-actuator HDD. However, at the TCO level, it saves power because one multi-actuator drive consumes less than the two single-actuator drives otherwise needed to meet the same throughput requirement.
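The tradeoff can be made concrete with a back-of-the-envelope calculation. Every number in the sketch below is a placeholder chosen only to satisfy the relationships stated above (a dual-actuator drive draws more than one single-actuator drive but less than two, and delivers up to twice the throughput). None of the figures are measured or vendor-specified, and the model sizes the fleet by throughput alone, ignoring capacity requirements.

```python
import math


def fleet_for_throughput(required_mb_s: float, per_drive_mb_s: float,
                         per_drive_watts: float) -> tuple[int, float]:
    """Return (drive count, total watts) needed to meet a throughput target."""
    drives = math.ceil(required_mb_s / per_drive_mb_s)
    return drives, drives * per_drive_watts


if __name__ == "__main__":
    target_mb_s = 10_000  # hypothetical aggregate throughput requirement
    # Placeholder drive characteristics, not real product data.
    single = fleet_for_throughput(target_mb_s, per_drive_mb_s=250, per_drive_watts=8.0)
    dual = fleet_for_throughput(target_mb_s, per_drive_mb_s=500, per_drive_watts=11.0)
    print(f"single-actuator: {single[0]} drives, {single[1]:.0f} W")
    print(f"dual-actuator:   {dual[0]} drives, {dual[1]:.0f} W")
```

With these placeholder values, the dual-actuator fleet needs half as many drives and less total power, even though each drive draws more on its own.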

The multi-actuator HDD is designed for cloud-centric applications in data centers, but its performance can also provide significant gains in content delivery networks, surveillance, and scalable filesystems, to name a few.

HDD Evolution of Capacity: Areal Density

Standard form factors limit the number of disks that can be present, so where do the capacity increases come from? The answer is areal density, i.e., the number of bits that can be stored in a given area of media. A brief history of recording technology, along with a look at future advances, shows why HDDs will continue to be a go-to solution for bulk storage needs.

The first disk drives used longitudinal recording (LR). This places the magnetization of particles in the same plane as the disk surface. A simple way to increase areal density is to decrease the particle size (Fig. 2). This works up to a point, but the smaller particles need to be made more stable, and doing so requires more energy to magnetize them for the desired data pattern.

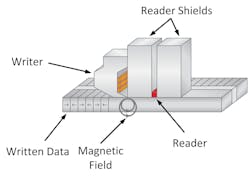

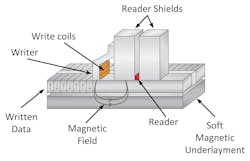

There are physical limitations involved, which led the industry to determine a new way to increase the areal density: perpendicular magnetic recording (PMR) (Fig. 3). A soft magnetic underlayer was added to the media so that the recording grains sit on top of it. In turn, the particles could stand perpendicular to the disk surface rather than lying flat on it, thus enabling even smaller particles and putting them into a stronger magnetizing field from the writer.

Grain size continued shrinking, but additional density was needed. The next development was shingled magnetic recording (SMR). SMR allows tracks to be closer together. The reader element of an HDD head is narrower than the writer. This results in the written track being wider than what’s required by the reader.

SMR overlaps tracks, effectively making the existing track narrower but still wide enough for the reader to recover the data. The overlap is similar to how shingles are mounted on a roof—thus, the name. SMR will be limited by the laws of physics to about one terabit per square inch.

As mentioned previously, longitudinal recording was replaced by perpendicular recording to increase areal density. As SMR reaches its limit, a technology change is required to provide an increase. Heat-assisted magnetic recording (HAMR) is the answer (Fig. 4).

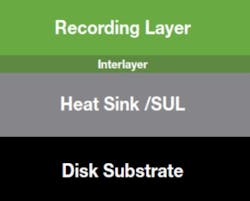

A laser is added to the HDD head to heat the media as the writing process is done so that the new media (iron platinum) can be written with the conventional writer. The heat from the laser temporarily reduces the coercivity of the media while the writing takes place, and then it returns to thermal stability after the write is completed.

The disk had to be redesigned for HAMR. A heatsink layer and an interlayer are added to control the heat flow from the storage layer. These provide the right balance of heat transfer to write and protect written data in the recording layer. The entire process of heating, writing, and cooling takes less than one nanosecond. HAMR is predicted to provide a 5X gain in areal density over SMR.

Conclusion

HDDs and SSDs complement each other in today’s storage-system architectures. HDD technology continues to advance, and HDDs will remain a long-term value proposition for storage solutions.

The SAS interface provides an excellent set of features to complement the HDD technology advances. For warm and cold storage applications, SAS provides additional advantages with its superior hot-plug capability, high performance, large addressability/connectivity, reliability, and data integrity. The current storage ecosystem is a large user of SAS for these very reasons, and it will continue to be so for the foreseeable future.

About the Author

Alvin Cox

SAS Plugfest Chair, SCSI Trade Association and Senior Staff Engineer, Seagate Technology LLC

Alvin Cox chairs the SAS PHY Working Group in the T10 committee and liaises with STA on those technical matters. He also was the STA Plugfest Chair for the most recent 24G SAS plugfest.