Startup Rolls Out Compute-in-Memory Chip for Deep Learning

This Electronic Design article is reprinted here with permission.

Mythic, a startup backed by Micron Technology and Lam Research, has rolled out its first chip, which is designed to move artificial intelligence out of the cloud and into edge devices. The startup said that it can deliver tens of trillions of operations per second while using up to 10 times less power than competing products in its category. It does so by processing data in memory, which keeps data movement to a minimum.

Mythic said the accelerator, the M1108, is custom designed for deep learning inference. The chip is targeted at compact, power-constrained devices, from autonomous drones to computer-vision cameras installed on factory floors. It could also be used in embedded servers in places such as brick-and-mortar stores to process video feeds from vast networks of cameras.

The accelerator, which Mythic calls an intelligence processing unit (IPU), has a compact footprint of 19 mm by 19 mm. It meets the performance-per-watt requirements to run artificial intelligence in Internet of Things devices instead of in the cloud. Mythic said that it checks all the boxes for on-device AI, packing more compute resources into tighter area, power, and thermal budgets.

The IPU pumps out up to 35 trillion operations per second, or TOPS, and consumes in the range of 4W of power while processing artificial intelligence workloads. That puts it ahead of Nvidia's Xavier AGX computing module, which can deliver up to 32 TOPS while burning through 10W to 30W of power. Its lower power footprint means that Mythic's IPU generally dissipates less heat.
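
To put those figures on a common footing, the arithmetic below divides peak throughput by power draw. It is a rough sketch that uses only the numbers quoted above, and it assumes the Nvidia module's 32 TOPS peak could apply anywhere in its 10W to 30W range, which is not stated here. The function name `tops_per_watt` is purely illustrative.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return peak throughput (TOPS) per watt of power draw."""
    return tops / watts


# Figures as quoted in the article; all are vendor peak claims.
mythic = tops_per_watt(35, 4)

# Assumption: the 32 TOPS peak is paired with each end of the power range.
xavier_best = tops_per_watt(32, 10)   # low end of the power range
xavier_worst = tops_per_watt(32, 30)  # high end of the power range

print(f"Mythic M1108: {mythic:.2f} TOPS/W")
print(f"Xavier AGX:   {xavier_worst:.2f} to {xavier_best:.2f} TOPS/W")
```

Peak TOPS figures are measured under different conditions by each vendor, so the ratio is a ballpark comparison rather than a benchmark result.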

The company said it has started rolling out the chip to potential early customers, and industry analysts say that it is planning to start mass production of its chip in the second half of 2021.

The IPU is based on what the company calls its proprietary analog in-memory computing architecture. That means the logic gates are co-located with memory to reduce the distance data travels in the system. The data is dumped directly in embedded memory where it can be processed in real time, promising major gains in performance. Mythic said that it burns through less power, produces less heat and cuts latency compared to more general-purpose processors.

"We are delivering technology that was previously thought to be impossible," said Mike Henry, chief executive officer and co-founder of Mythic, in a recent statement. "The analog compute engine eliminates the memory bottlenecks that plague existing solutions by efficiently performing matrix multiplication directly inside the flash memory array itself," he said.

The company said the chip can process up to 870 images per second on the industry-standard ResNet-50 benchmark, which is widely used for gauging the performance of artificial intelligence chips.
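
That throughput claim can be turned into per-image figures with simple arithmetic. The sketch below assumes that a power draw of roughly 4W, the figure Mythic quotes for AI workloads, also holds during the ResNet-50 run, which the company has not confirmed. The variable names are illustrative.

```python
IMAGES_PER_SECOND = 870  # ResNet-50 throughput claimed by Mythic
POWER_WATTS = 4.0        # assumed power draw during the benchmark

# Average time budget per image, derived from throughput.
# This is not the end-to-end latency of a single image.
ms_per_image = 1000.0 / IMAGES_PER_SECOND

# Energy per image in millijoules: watts are joules per second.
mj_per_image = 1000.0 * POWER_WATTS / IMAGES_PER_SECOND

print(f"{ms_per_image:.2f} ms per image")
print(f"{mj_per_image:.2f} mJ per image")
```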

One of the core building blocks of deep learning, the neural network, is composed of layers of computing units called nodes. These nodes are linked by connections, each carrying a weight that sets how strongly one node influences the next. The combination of these components is stored in the system's memory and used as a model to interpret the data flooding into it. The models can be used for identifying objects, faces, and other features in images or carrying out other AI chores.

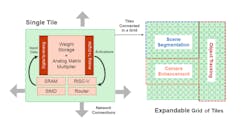

Inside the IPU are analog compute engines (ACEs), which are analogous to the cores in central processing units (CPUs). The cores are arranged in 108 compute tiles that incorporate embedded NOR flash memory with digital-to-analog converters (DACs) and analog-to-digital converters (ADCs). With its analog in-memory processing architecture, the IPU can perform the multiply-and-accumulate (MAC) operations at the heart of deep learning with higher power efficiency.
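
Mythic's circuit details are not given here, so the following is only a generic, idealized model of how a MAC can be carried out inside a memory array. It assumes the weights are stored as analog values in the memory cells, the DACs turn digital inputs into voltages, the currents from all cells in a column add up on a shared wire, and the ADC digitizes the sum. The function names, bit widths, and input ranges are all assumptions made for illustration.

```python
def quantize(value: float, bits: int, full_scale: float) -> float:
    """Ideal converter: clip to +/- full_scale, then snap to a uniform grid."""
    levels = 2 ** (bits - 1) - 1          # steps on each side of zero
    step = full_scale / levels
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / step) * step


def analog_mac(inputs, weights, dac_bits=8, adc_bits=8):
    """Model one column of an in-memory array computing a dot product."""
    # DAC stage: digital activations (assumed to lie in [-1, 1]) become voltages.
    voltages = [quantize(x, dac_bits, 1.0) for x in inputs]
    # Each cell contributes voltage * stored weight (the multiply);
    # the shared wire adds the contributions together (the accumulate).
    current = sum(v * w for v, w in zip(voltages, weights))
    # ADC stage: full scale is sized to the largest possible sum,
    # with a fallback of 1.0 when every weight is zero.
    full_scale = sum(abs(w) for w in weights) or 1.0
    return quantize(current, adc_bits, full_scale)


inputs = [0.5, -0.25, 1.0]
weights = [0.2, 0.4, -0.1]
exact = sum(x * w for x, w in zip(inputs, weights))
approx = analog_mac(inputs, weights)
print(f"exact={exact:.4f} analog model={approx:.4f}")
```

The point of the model is that the weights never leave the array: only the inputs and the final sum cross the converters. Real analog hardware also has to contend with noise and device variation, which this sketch ignores.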

While most computer chips process and store data as sequences of 1s and 0s, the startup promises to run artificial intelligence workloads faster and more efficiently by using analog computations.

Each compute tile also has a SIMD vector processor core, a RISC-V CPU, and 64 KB of SRAM that serves as the processor's cache, giving it faster access to frequently used data. The compute tiles are interconnected in a grid with network-on-chip (NoC) technology that controls the movement of data among the tiles. The chip includes four PCIe lanes to connect to the host CPU. The company said the chip can also run reduced-precision operations, including INT4 and INT8.
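
For readers unfamiliar with reduced precision, the sketch below shows one common way to map floating-point weights onto INT8 or INT4 codes: symmetric linear quantization with a single scale factor. It is a textbook technique and is not taken from Mythic's toolchain. The function names and sample weights are hypothetical.

```python
def quantize_weights(weights, bits):
    """Symmetric linear quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    # Round to the nearest integer code and clamp to the signed range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floating-point weights from integer codes."""
    return [c * scale for c in codes]


sample = [0.8, -0.31, 0.05, -1.2]  # hypothetical weights
for bits in (8, 4):
    codes, scale = quantize_weights(sample, bits)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(sample, restored))
    print(f"INT{bits}: codes={codes} worst error={worst:.4f}")
```

Fewer bits mean a coarser grid and a larger rounding error per weight, which is the trade-off behind choosing between INT8 and INT4.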

Mythic said the chip brings the same level of performance as a consumer graphics processing unit (GPU) while consuming up to 10 times less power. The chip is based on the 40-nanometer node, slashing its cost compared to chips that use more advanced production processes. By storing the complete machine learning model in the embedded NOR flash and onboard SRAM, the chip can also remove the need for DRAM in edge devices, simplifying the system design and reducing bill-of-materials (BOM) costs.

It can store more than 110 million weights, allowing it to fit larger machine learning models on the device or run several models in parallel. The company plans to bring the chip to market with a range of development tools that make it easier for customers to deploy software on the IPU. The toolset supports popular software stacks: TensorFlow, PyTorch, and Caffe, among others.
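
A back-of-the-envelope check gives a feel for what 110 million weights means in practice. The sketch below assumes each model parameter occupies exactly one weight slot and ignores any mapping overhead, which may not hold on real hardware. It also assumes capacity is spread evenly across the 108 tiles. The parameter counts are commonly cited approximations, not figures from Mythic.

```python
WEIGHT_CAPACITY = 110_000_000  # "more than 110 million weights" per Mythic
TILES = 108                    # compute tiles on the chip

# Approximate parameter counts (assumed, commonly cited figures).
MODEL_PARAMS = {
    "ResNet-50": 25_600_000,
    "MobileNetV2": 3_500_000,
}


def copies_that_fit(params: int, capacity: int = WEIGHT_CAPACITY) -> int:
    """How many whole copies of a model fit, at one slot per parameter."""
    return capacity // params


weights_per_tile = WEIGHT_CAPACITY // TILES
print(f"About {weights_per_tile:,} weights per tile")
for name, params in MODEL_PARAMS.items():
    print(f"{name}: up to {copies_that_fit(params)} copies")
```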

The company, which has headquarters in Silicon Valley and Austin, Texas, has amassed more than $85 million in total funding since it was founded in 2012. Its investors include Lockheed Martin, Lux Capital, SoftBank Ventures, Valor Partners, Micron Ventures, Threshold Ventures, Future Ventures, Lam Research, AME Cloud Ventures, Andy Bechtolsheim, and others.

The startup plans to introduce the M1108 on accelerator cards and computing modules that can be attached to various systems. The company said it would also sell the chip separately.