Exploring Overarching Properties as a COTS Software Platform Vendor

What you’ll learn:

- How the adoption of OP will affect the day-to-day work of an A&D engineering team.

- How OP can support certification activities.

- The benefits and challenges for COTS software providers that embrace OP.

For roughly a decade, the avionic assurance community has sought more effective approaches to evaluate complex digital systems over the de facto processes, e.g., DO-178C, DO-254, ARP4754A etc. After various industry surveys, studies, and international working groups, we consequently have Overarching Properties (OP).

And now, six years since the inception of the Overarching Properties Working Group (OPWG), industry leaders such as NASA, Raytheon, and AdaCore have published comprehensive articles and walkthroughs of their experience in applying Overarching Properties to some of their latest safety products. These contributions have been successful in showcasing compelling benefits, addressed critical skepticism, and have likely inspired more product developers to partake in the alternative evaluation approach.

At Lynx, we’re eager to see how the uptake in OP will affect our day to day. This is especially the case when it comes to supporting our customers’ certification activities and the product changes we must make to ensure our offerings fit with a new and better way to adjudicate platform-level safety software.

While software platform suppliers are responsible for the correct implementation of standard programming libraries (e.g., libc) and conventional operating-system capabilities (e.g., POSIX, ARINC 653), Lynx finds itself in an “interesting” position when supporting assurance-related activities for our customers.

Although our safety products are developed in accordance with DO-178C and meets DAL A objectives, we believe more work can, and should, be done to help system integrators effectively build complex predictable machines and deal with the hard-to-find hazards buried deep within operating-system kernels and modern multicore processors. This article shares our thoughts as we studied the possibility of adopting Overarching Properties, highlighting the benefits and challenges faced as a COTS software platform (RTOS + Hypervisor) provider.

Understanding OP

OP offers a refreshing approach to evaluate products as fit for use in avionic systems. At a very high level, it’s a framework that gives product authors the freedom and agility to substantiate airworthiness claims in their own terms.

In contrast to adapting internal development processes to conform to conventional checklist-style certification, which often feels dissociated from the purpose of certification, product authors are enabled to convince authorities with structured arguments that their product is designed and behaves correctly, and it’s resilient to foreseeable environmental and use conditions.

OP fundamentally relies on the assurance-case approach to evaluate system properties. Assurance cases are “A structured set of arguments and a body of evidence showing that a system satisfies specific claims with respect to a given quality attribute.”

Assurance cases are well studied and practiced throughout various industries and have been championed by distinguished safety-system experts such as SRI’s John Rushby and The University of York’s Tim Kelly. They have been employed to satisfy claims of systems possessing many types of properties, such as safety and security. In addition, they have been applied to augment system design processes, and can be used in conjunction with visualization (goal-structure-notation, or GSN) and verification tools to clarify and validate cogent arguments.

Prior to the establishment of OP, FAA studies for the next-gen software assurance evaluation approach4 showed strong aversion to assurance cases on the grounds that they were vulnerable to confirmation bias and would require costly domain expertise to determine if arguments were cogent.

While the OPWG confidently based the OP evaluation approach on assurance cases, these concerns are not to be discounted. OP isn’t “the cheaper way out,” meaning it’s not a substitute to regime standards, but rather a supplement (see “Understanding OP,” FAA). In practice, a software product will still rely on DO-178C objectives to substantiate OP arguments.

Adopting OP

OP presents significant benefits and challenges when considering its adoption. The primary benefits are rooted in the use of assurance cases to methodically rationalize that product design approaches and high-level requirements are upholding critical user assumptions and in touch with subtle hazardous complexities.

It’s important to note that assurance cases aren’t exclusively reserved for use under Overarching Properties, meaning product authors following DO-178C aren’t prohibited from using assurance cases. In fact, they have been encouraged by industry well before the establishment of OP.

Adopting OP will require investment in the development of product assurance cases and presumably demand deeper levels of technical support throughout the evaluation process. Today we suspect the commercial incentive for platform suppliers to bolster their product’s DO-178C assurance evidence with assurance cases will not come naturally. It will require making appeals up the supply chain and regulatory authorities with a convincing business case or pointing out major risks that assurance cases can mitigate.

And we indeed are facing significant technical risks inherited from rich complexities within next generation LRU requirements, e.g. multicore processors. Furthermore, modular open system approach (MOSA) standards such as FACE will impose deeper levels of hardware complexity and software abstraction.

As pointed out in the early OP FAA publications “Understanding the Overarching Properties: First Steps,” there’s a significant challenge in resolving how software can satisfy the property of Intent through complete and correct “Defined Intended behavior” with respect to desired system behavior. Obviously, software behavior depends on many variables, and many of these variables are configurable by product design.

The amount of work that goes into configuring platform variables—scheduling algorithms, process to core distribution, thread priorities etc.—to get software systems to behave within a desired threshold is non-trivial. Even when configuration variables are set, we see customers spending tremendous amounts of time studying the hardware architecture, software structure, and telemetry information of the system to reassure behavior is bound and well understood.

Too often, system integrators are caught off guard in the realization of how relative timing and performance properties are to hardware and software configurations. And they’re overwhelmed with the low-level complexities in hardware and software that compound and easily preclude system-level predictability models. We see significant opportunities to alleviate this burden in providing more technical aid in helping customers demonstrate OP Intent and Acceptability in light of this overwhelming complexity.

Appreciating COTS Software Platform Complexity

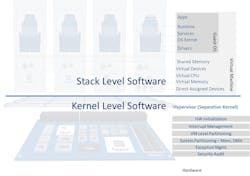

Platform software is a collection of configurable complex components—RTOS, hypervisor, libraries, drivers, compilers, debuggers etc. By design users are free to modify and extend components and fine tune behavior. These components also behave differently depending on the underlying hardware (see “Hardware/Kernel” below) and software composition (see “Stack” below).

Hardware/Kernel-Level Complexity

Kernel-level software is generally responsible for managing hardware capabilities—hardware initialization, resource allocations, event handling, security policy enforcement—and is commonly isolated from user/stack-level software. Confidently describing the intended behavior of software at the kernel level is manageable. But confidently knowing that the underlying hardware will not undermine the kernel’s desired behavior is non-trivial.

Moore’s Law hasn’t been kind to kernel engineers. When the goal of the safety engineering process is to make a predictable system, adding more transistors to processors translates into more work studying the implications of new instructions, machine registers, and architectural elements (pipeline, branch prediction, cache and memory interconnect architecture etc.) on system behavior.

At Lynx, we’re constantly studying CPU manuals and hunting down answers from chip vendors to know for sure that we have a clear and comprehensive understanding of critical configuration settings. As hardware capabilities increase—CPU virtualization, nested page tables, interrupt controller virtualization, cache partitioning etc.—the probability of “missing something” stacks up.

Stack-Level Complexity

The stack-level software is responsible for fulfilling the services extended to application runtime environments, such as standard library implementation, system management services, inter-partition messaging, data-storage services, network services, device drivers, etc. This software is highly configurable in terms of component layout, execution patterns, data rates, and so on.

Each customer’s configured stack will have different components and different configuration parameters. The sheer volume of composition layers, conditional branches, coupling techniques, concurrency techniques, and unique hardware characteristics makes deriving intended behavior more of a heuristic analysis problem versus straightforward algebra.

Consider deriving worst-case execution timing (WCET) for end-to-end transactions between FACE applications. This must account for data propagation and stack execution through transport services segment (TSS) middleware, which normally flows through the operating system services (OSS) Socket and down through the IP stack and driver layers. And if the problem couldn’t get any more difficult, now we need to account for multicore interference channels (TC 16-51).

Knowing these complexities alone warrants an assurance case to justify intended behavioral claims by both software and hardware platform suppliers to inform upstream system engineering processes to aid design considerations and hazard analysis. Additionally, as pointed out by AdaCore,1 OP has the potential to foster a jurisprudence specialized in effectively managing technical challenges, based on the curation and reuse of acceptable assurance case patterns.

Despite the overwhelming complexity inherent to general-purpose computing platforms, tremendous advances in platform architecture technologies, developments standards, system specification standards, and formal methods have successfully demonstrated the ability to divide vast complexity into manageable pieces.

Advances in hardware (CPU virtualization) and kernel-level partitioning capabilities (lynx separation kernel)5 have reached impressive levels of fidelity that can break traditional stacks into smaller segments. Segments can then be rejoined using standards (OASIS Virtio), enabling components to connect and interact predictably (virtio predictability). Ultimately, this establishes a framework of composition that can pare down large stacks into components with consistent properties and interfaces, making evaluations repeatable.

Looking one step further, formal modeling of system architectures (e.g., Architecture Analysis and Design Language, SEI), problem solvers, and model checkers are gaining significant traction. They demonstrate the ability to automate generation of correct configuration inputs according to target desired behavior, and the verification of many critical behavioral properties. As we lean more on formal modeling techniques, assurance cases are well-suited to legitimize the accuracy of system models and uphold architectural assumptions provided by the platform.

Conclusion

While computing platforms continue to grow in complexity, product assurance and regime validation methods need to scale up commensurately to maintain acceptable levels of safety. As pointed out in the comprehensive report “Assurance of Multicore Processors in Airborne Systems” (TC-16-51), there’s significant work and goodwill required between system integrators and software and hardware platform vendors to sort through the myriad hazardous conditions embedded in complex software systems.

Assurance cases can greatly improve our ability to collaboratively evaluate systems. Supplementary aids from GSN argument models to depict and contextualize claims and evidence for system- and platform-level behavior provide tremendous value in quickly orienting stakeholders and directing attention to top-priority problems.

While assurance cases aren’t exclusive to OP, we also appreciate that OP brings a sense of mission to the safety certification process. Today, airworthiness certification for COTS platform vendors comes from following a process and generating artifacts. We typically don’t collaborate with mission-oriented focus. Perhaps OP will be the conduit to change this seemingly dry transactional approach to an earnest effort.

References

1. AdaCore. (n.d.). OP_Qgen.

2. FAA. (n.d.). Understanding OP.

3. Kelly, T. (n.d.). GSN.

4. Mats Heimdahl, U. o., & Nancy Leveson, M. I. (n.d.). DOT/FAA/TC-15/57 Software Assurance Approaches.

5. Rushby. (n.d.). Formal Assurance Case.

About the Author

Will Keegan

Chief Technology Officer, Lynx Software Technologies

In his role as chief technical officer, Will Keegan leads the technology direction across all Lynx product lines. He has been instrumental in the development of key security technologies within Lynx to broaden the reach of the existing products, with a focus on cybersecurity, cryptography, and virtualization.

Will joined Lynx in 2011 as the director of security solutions, with responsibility for the LynxSecure product line. Prior to Lynx, he was a product developer at Objective Interface Systems Inc., with responsibility for product engineering of real-time middleware and high-assurance cryptographic network technologies. He holds a bachelor’s degree in Computer Science from University of Texas.