Enhancing Smart-Home Experiences with AI-Based Voice Control

What you’ll learn:

- How AI and machine learning can be used to personalize smart-home devices.

- How to design voice control for convenience and usability.

- Technical issues to evaluate when planning the development of voice-controlled smart-home environments.

The way we live, work, and play has been forever altered by smart-home technologies. Walk into a new home today and you're likely to find smart technology around every corner, from large appliances such as refrigerators and laundry machines to less expected items such as window shades. The technology in these products increasingly aims to make homeowners' lives easier through automation and personalization. The newest way to level up that convenience relies on one of the oldest tools we have: our own voice.

Today, a sizable majority of U.S. households, some 69%, own at least one smart-home device. The smart-home market is growing rapidly, and voice control is an essential part of it. The speech- and voice-recognition market itself is being driven by the popularity of voice-activated virtual assistants, including those built into “smart speakers,” as well as by voice-recognition hardware and software embedded in smartphones, smart-home devices, vehicles, and other settings.

Voice Control for All

Tech enthusiasts aren't the only ones enhancing their smart homes through voice control. Voice control makes smart-home technology accessible to individuals with disabilities, including vision or mobility impairments. Even people who aren't technologically inclined, such as older adults aging in place, can use voice control to operate their homes and accomplish specific tasks. Voice capability is no longer a novelty; it’s fast becoming an expected product feature.

Amazon, Apple, and Google are well-known names in the smart-home voice market. However, manufacturers of smart-home devices that wish to integrate voice control have many other solutions available. These alternatives can provide more customized end-user experiences while still retaining interoperability with the larger voice ecosystems if desired. The “best” voice platform for product developers depends entirely on the use case for each product or system.

Computing technology has come a long way in a short time. It’s now possible to have sophisticated voice-recognition capabilities in a wide variety of voice-control devices. Artificial intelligence and machine learning enable devices to become more accurate and more precise over time.

The latest approaches in machine learning have brought major improvements in speech recognition and, with them, in personalization. By combining training on recorded, transcribed speech (supervised learning), improvement based on human feedback (reinforcement learning), and fine-tuning of existing models (transfer learning), the resulting systems are increasingly able to understand human language across variations in tone, accent, and speech affected by disability. Voice control powered by AI and machine learning can remember information from past commands and use that data to personalize a user's experience.
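The "remember past commands" idea can be illustrated with a deliberately simple stand-in. The sketch below is not supervised, reinforcement, or transfer learning; it is a frequency-count heuristic that assumes a hypothetical `PreferenceMemory` class storing each user's past settings per device and suggesting the most common one when a command leaves the value unspecified.

```python
from collections import Counter, defaultdict


class PreferenceMemory:
    """Hypothetical per-user memory of past settings, keyed by (user, device)."""

    def __init__(self) -> None:
        # (user, device) -> how often each setting was chosen
        self._history = defaultdict(Counter)

    def record(self, user: str, device: str, setting: int) -> None:
        """Store the setting a user chose in a past command."""
        self._history[(user, device)][setting] += 1

    def suggest(self, user: str, device: str, default: int) -> int:
        """Return the user's most frequent past setting, or the default if there is no history."""
        counts = self._history.get((user, device))
        if not counts:
            return default
        return counts.most_common(1)[0][0]


memory = PreferenceMemory()
for brightness in (40, 40, 80):  # three past "turn on the lights" commands
    memory.record("alex", "living_room_lights", brightness)

print(memory.suggest("alex", "living_room_lights", default=100))  # 40
print(memory.suggest("sam", "living_room_lights", default=100))   # 100
```

A learned model would generalize across context (time of day, room, who is speaking), whereas this counter only replays the past; it simply shows where remembered command data plugs into a response.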

The Voice-Control Journey

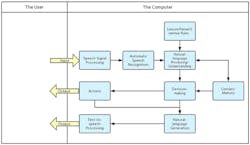

Modern voice-control technologies generally work in much the same way, regardless of the specific implementation or any added proprietary technologies. The process begins with one or more microphones capturing the acoustic energy of a voice and converting it into an electrical signal. An analog-to-digital converter then turns that signal into digital information.
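The analog-to-digital step amounts to sampling the signal at regular intervals and rounding each sample to one of a fixed set of integer levels. The sketch below assumes a 16-kHz sample rate and signed 16-bit samples (common choices for speech, but assumptions here) and uses a pure tone as a stand-in for the microphone's electrical signal.

```python
import math

SAMPLE_RATE_HZ = 16_000  # assumed sample rate, common for speech
BIT_DEPTH = 16           # assumed signed 16-bit PCM


def sample_and_quantize(freq_hz: float, duration_s: float, amplitude: float = 0.5) -> list[int]:
    """Sample a pure tone (standing in for the analog mic signal) and quantize it to integers."""
    full_scale = 2 ** (BIT_DEPTH - 1) - 1  # 32767 for 16-bit
    n_samples = int(SAMPLE_RATE_HZ * duration_s)
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE_HZ  # time of the n-th sample in seconds
        analog = amplitude * math.sin(2 * math.pi * freq_hz * t)  # value in [-amplitude, amplitude]
        samples.append(round(analog * full_scale))  # quantize to the nearest integer level
    return samples


pcm = sample_and_quantize(freq_hz=440.0, duration_s=0.01)
print(len(pcm))  # 160 samples in 10 ms at 16 kHz
```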

Specialized software analyzes the digital signal to identify "phonemes," the smallest units of sound that distinguish one word from another, produced by our mouths, lips, and tongues as we speak. Algorithms assemble the phonemes into words, which the system interprets as a request. The device then responds with an action, a voice reply, or both.
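Assembling phonemes into words can be sketched as a dictionary lookup over the phoneme stream. Production recognizers score many competing hypotheses probabilistically instead of matching exactly; the toy lexicon below (ARPAbet-style symbols with stress marks omitted) and the greedy longest-match segmentation are illustrative assumptions only.

```python
# Hypothetical mini-lexicon: word -> phoneme sequence (ARPAbet-style, stress marks omitted)
LEXICON = {
    "turn": ("T", "ER", "N"),
    "up": ("AH", "P"),
    "the": ("DH", "AH"),
    "heat": ("HH", "IY", "T"),
    "lights": ("L", "AY", "T", "S"),
    "on": ("AA", "N"),
    "off": ("AO", "F"),
}


def phonemes_to_words(phonemes: list[str]) -> list[str]:
    """Greedily segment a phoneme sequence into words using longest-match lookup."""
    # Try longer pronunciations first so the longest matching word is chosen.
    candidates = sorted(LEXICON.items(), key=lambda item: len(item[1]), reverse=True)
    words, i = [], 0
    while i < len(phonemes):
        for word, pron in candidates:
            if tuple(phonemes[i:i + len(pron)]) == pron:
                words.append(word)
                i += len(pron)
                break
        else:  # no lexicon entry matched at position i
            raise ValueError(f"no word matches phonemes starting at position {i}")
    return words


print(phonemes_to_words("T ER N AH P DH AH HH IY T".split()))  # ['turn', 'up', 'the', 'heat']
```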

While this path may seem simple, the hardware and software required for even the most basic voice capabilities are marvels of technology. In particular, systems that answer commands with synthesized speech, such as Apple's Siri and Amazon's Alexa, have made tremendous headway against the considerable challenges of accurate voice interaction.

As the figure shows, voice control has one input (the user's voice) and up to two outputs: a device action and/or a synthesized spoken response generated with the help of natural language processing (NLP).

How Machines Use Language

A voice interface's job looks pretty straightforward when shown in a flow chart: listen, process, respond. But it's essential to consider the complexities that the voice system must absorb, calculate, and output, with enough speed to make the interaction feel natural:

- The system must be able to hear voices clearly through its microphone(s), even when the person speaking isn't close by. A single smart speaker rarely offers enough coverage for an entire home.

- The system must assemble the "heard" phonemes into accurate words, phrases, and sentences, and then deduce semantic meaning from the result. "Turn up the heat" and "make the house warmer" may mean the same thing to an end user, but a voice system must be trained over time to recognize these subtleties. Many systems can't be trained this way and recognize only a single fixed phrase for each command.

- The system must determine the appropriate action in response to a spoken request. The action may be a contextual answer "spoken" via synthetic speech (which is itself assembled from phonemes). More simply, the action may be a device command, such as changing the thermostat's temperature.

- Finally, the system typically provides a confirmation that the voice command was understood and acted upon. Voice systems like Siri and Alexa often provide a vocal confirmation with synthetic speech. Other voice-command-device (VCD) systems may use an audible beep or operate through a multimodal interface—one that employs visual cues such as an LED indicator or a display screen.
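The understand, act, and confirm steps above can be sketched end to end on already-transcribed text. This assumes a hypothetical phrase table, a hypothetical `Thermostat` device, and placeholder confirmation tokens (`LED_GREEN`, `BEEP_ERROR`) for devices without a speaker. The exact-match table mirrors the fixed-phrase limitation described above; a trained language-understanding model would generalize to phrasings that aren't listed.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrase table: several phrasings map to the same intent.
INTENT_PHRASES = {
    "increase_temperature": {"turn up the heat", "make the house warmer"},
    "decrease_temperature": {"turn down the heat", "make the house cooler"},
}


@dataclass
class Thermostat:
    setpoint_c: float = 20.0  # hypothetical starting setpoint


def recognize_intent(utterance: str) -> Optional[str]:
    """Normalize the transcribed text and look it up in the phrase table."""
    text = utterance.lower().strip().rstrip(".!?")
    for intent, phrases in INTENT_PHRASES.items():
        if text in phrases:
            return intent
    return None


def handle(utterance: str, thermostat: Thermostat, has_speaker: bool = True) -> str:
    """Act on the device, then return a spoken or non-speech confirmation."""
    intent = recognize_intent(utterance)
    if intent is None:
        return "Sorry, I didn't catch that." if has_speaker else "BEEP_ERROR"
    thermostat.setpoint_c += 1.0 if intent == "increase_temperature" else -1.0
    if has_speaker:
        return f"Setting the temperature to {thermostat.setpoint_c:.0f} degrees."
    return "LED_GREEN"  # visual confirmation for a device without a speaker


t = Thermostat()
print(handle("Make the house warmer.", t))               # Setting the temperature to 21 degrees.
print(handle("Turn up the heat", t, has_speaker=False))  # LED_GREEN
print(t.setpoint_c)                                      # 22.0
```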

Design for Convenience and Usability

When voice-control devices are integrated throughout the home, in appliances, thermostats, lights, access controls, and more, the whole home becomes controllable as soon as the system recognizes a wake word. Designed with that goal in mind, voice control can make the homeowner's life easier and more productive.
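Wake-word gating means the system ignores speech until the wake word is heard and treats what follows as the command. Real devices typically detect the wake word directly in the audio with a small always-listening model; the sketch below works on transcribed text purely for illustration and assumes a hypothetical wake word, "hey house."

```python
import string
from typing import Optional

WAKE_WORD = "hey house"  # hypothetical wake word


def extract_command(transcript: str, wake_word: str = WAKE_WORD) -> Optional[str]:
    """Return the command after the wake word, or None if the wake word wasn't spoken first."""
    # Lowercase, strip punctuation, and split into words so matching is on whole words.
    words = transcript.lower().translate(str.maketrans("", "", string.punctuation)).split()
    wake = wake_word.split()
    if words[:len(wake)] != wake:
        return None  # speech not addressed to the system is ignored
    command = " ".join(words[len(wake):])
    return command or None  # wake word alone carries no command


print(extract_command("Hey house, turn on the lights."))  # turn on the lights
print(extract_command("Turn on the lights"))              # None
```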

Accurate far-field audio capture requires specialized hardware and software. As the name implies, "far-field" describes situations in which the speaker's voice isn't physically close to the microphone. The device's design determines whether it can capture sound effectively in a far-field context.
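One reason far-field capture is hard is simple geometry. In a free field, sound pressure level falls by 20·log10(r2/r1) dB as distance grows from r1 to r2, about 6 dB per doubling. Real rooms add reflections and reverberation, so this is an idealization. The distances used below (0.5 m versus 4 m) are illustrative assumptions.

```python
import math


def spl_drop_db(near_m: float, far_m: float) -> float:
    """Free-field (inverse-distance) level drop in dB when moving from near_m to far_m."""
    if near_m <= 0 or far_m <= 0:
        raise ValueError("distances must be positive")
    return 20 * math.log10(far_m / near_m)


print(f"{spl_drop_db(1.0, 2.0):.1f} dB")  # 6.0 dB per doubling of distance
print(f"{spl_drop_db(0.5, 4.0):.1f} dB")  # 18.1 dB quieter across the room
```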

Design techniques such as port orientation, microphone arrays and beamforming, and digital-signal-processing (DSP) algorithms all play a role in creating the effortless voice-capture experience users expect. The acoustic port, the opening through which sound reaches the microphone without physical obstruction, can be located on either the top or the bottom of the device.

Ideally, the port sits near the microphone but away from the speakers and from noise sources such as motors. A microphone array hears sounds from many directions simultaneously and must be able to pick out the intended spoken command. Beamforming is the technique that lets a microphone array be programmed to selectively capture sound from some directions while rejecting sound from others.
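The simplest beamformer, delay-and-sum, shifts each microphone's signal by the delay the wanted sound experiences at that microphone and then averages, so the wanted sound adds coherently while sound from other directions stays misaligned. The sketch below assumes two microphones, whole-sample delays, and synthetic tones in place of speech and noise. The interference cancels completely here only because its period is exactly twice the steering delay; practical arrays use more microphones, fractional delays, and often adaptive weights.

```python
import math


def delay_and_sum(channels: list[list[float]], delays: list[int]) -> list[float]:
    """Delay-and-sum beamformer with whole-sample delays.

    delays[m] is how many samples later the wanted sound reaches mic m than the
    reference mic. Reading each channel that many samples ahead lines the wanted
    sound up across mics so it adds coherently.
    """
    n_out = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(n_out)
    ]


def target(n: int) -> float:
    """Wanted voice stand-in: a tone with a 16-sample period."""
    return math.sin(2 * math.pi * n / 16)


def interferer(n: int) -> float:
    """Noise stand-in: a tone with a 6-sample period."""
    return math.sin(2 * math.pi * n / 6)


N = 64
mic0 = [target(n) + interferer(n) for n in range(N)]
# The voice reaches mic 1 three samples after mic 0; the noise, coming from
# a different direction, reaches both mics at the same moment.
mic1 = [target(n - 3) + interferer(n) for n in range(N)]

steered = delay_and_sum([mic0, mic1], delays=[0, 3])  # steer toward the voice
residual = max(abs(s - target(i)) for i, s in enumerate(steered))
print(f"residual interference: {residual:.1e}")  # ~0: the misaligned noise copies cancel
```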

DSP algorithms form the final stage, applying advanced techniques such as noise suppression, source separation, and speaker tracking to the incoming audio in more sophisticated devices.
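As a very rough illustration of frame-based processing in the DSP stage, the sketch below implements a noise gate: it splits audio into frames and silences any frame whose root-mean-square (RMS) energy falls below a threshold. This is a crude stand-in, not the spectral noise-suppression or source-separation algorithms used in real devices; the 160-sample frame (10 ms at an assumed 16 kHz) and the 0.02 threshold are assumptions.

```python
import math


def noise_gate(samples: list[float], frame_len: int = 160, threshold_rms: float = 0.02) -> list[float]:
    """Zero out frames whose RMS energy falls below threshold_rms (a crude noise gate)."""
    out: list[float] = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        out.extend(frame if rms >= threshold_rms else [0.0] * len(frame))
    return out


quiet = [0.001] * 160          # low-level background hiss stand-in
speech = [0.5, -0.5] * 80      # loud signal stand-in
gated = noise_gate(quiet + speech)
print(gated[:3], gated[160:163])  # [0.0, 0.0, 0.0] [0.5, -0.5, 0.5]
```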

However, simply considering the above doesn’t complete the design process. Product developers also must consider power management and conservation, interoperability with other voice-controlled devices, and latency, or how quickly the device can relay a command back to its servers and complete a request.
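Latency is easiest to reason about as a budget split across the stages of a request. The sketch below sums per-stage latencies and flags the slowest stage; the stage names, the millisecond values, and the 1,000-ms target are placeholders chosen for illustration, not measurements of any product.

```python
# Placeholder per-stage latencies in milliseconds: illustrative, not measured values.
STAGE_LATENCY_MS = {
    "wake_word_and_capture": 50,
    "upload_to_server": 80,
    "recognition_and_understanding": 300,
    "command_to_device_and_confirm": 120,
}
BUDGET_MS = 1000  # hypothetical end-to-end target


def check_latency(stages: dict[str, int], budget_ms: int) -> tuple[int, str, bool]:
    """Sum the stage latencies, find the slowest stage, and test against the budget."""
    total = sum(stages.values())
    slowest = max(stages, key=stages.get)
    return total, slowest, total <= budget_ms


total, slowest, ok = check_latency(STAGE_LATENCY_MS, BUDGET_MS)
print(total, slowest, ok)  # 550 recognition_and_understanding True
```

Identifying the dominant stage shows where design effort, such as moving recognition onto the device, would pay off most.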

It's All in a Word

Voice control makes even the most sophisticated smart-home capabilities accessible to any user, and it’s rapidly becoming a primary method of device interaction. The “speak and wait” microphone model is giving way as the industry moves on to the next generation of VCDs.

With AI and machine learning, a user’s smart home can get smarter with every voice command. However, there are technical challenges in getting the experience just right. With intelligent, thoughtful design, VCDs can offer the smart-home personalization sought by homeowners. It all comes down to design.

About the Author

Mehul Kochar

Sr. Director Business Development, Audio Solutions, Knowles

Mehul Kochar is the Sr. Director Business Development, Audio Solutions at Knowles, a market leader and global provider of advanced micro-acoustic microphones and speakers, audio solutions, and high-performance capacitors and RF products. Mehul has nearly two decades of experience in establishing and leading customer strategy and execution. He excels at driving new technology into diverse user bases.