What you’ll learn:

- Benefits of using artificial intelligence in design verification.

- How SHAP (Shapley) values can help engineers optimize debugging in design verification.

- Achieving low-latency SoC configurations using optimization techniques.

- Using a clustering-based recommendation engine for automated testing.

Design verification is a time-consuming and labor-intensive process, and the ever-increasing complexity of designs causes further delays. In the hardware industry, the ratio of design engineers to verification engineers is typically 1:4, which reflects the scale of the challenge.

Applying artificial-intelligence (AI) and machine-learning (ML) models at scale can revitalize the hardware design and verification industry. This article explores applications of AI in VLSI that can yield significant cost and time savings and help scale design and verification processes.

SHAP for Optimizing Debugging

SHAP (SHapley Additive exPlanations) is a method, based on Shapley values from cooperative game theory, that explains the contribution of each feature to a machine-learning model's output. It provides two types of explanations: local and global. Local explanations explain each individual prediction, while global explanations describe the model's behavior as a whole.
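To make the game-theoretic definition concrete, here is a minimal brute-force sketch that computes exact Shapley values by averaging each feature's marginal contribution over every coalition of the other features. The error-score model, its feature names, and the baseline design are hypothetical. A feature "absent" from a coalition is set to a single baseline value; the SHAP library instead averages over background data and uses faster estimators such as KernelSHAP or TreeSHAP.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to a single baseline input.

    A feature outside the coalition is replaced by its baseline value.
    Cost grows as 2^n model calls, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition take their values from x, the rest from baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical error-score model with an interaction between two features.
def error_score(z):
    clock_ghz, transistor_size, number_of_power_domain = z
    return (0.5 * clock_ghz + 0.2 * transistor_size - 0.3 * number_of_power_domain
            + 0.1 * clock_ghz * number_of_power_domain)


x = [3.0, 7.0, 4.0]         # hypothetical design being explained
baseline = [2.0, 5.0, 2.0]  # hypothetical reference design
phi = shapley_values(error_score, x, baseline)
print([round(v, 6) for v in phi])  # [0.8, 0.4, -0.1]
```

The three values sum to error_score(x) minus error_score(baseline), here 2.9 minus 1.8 = 1.1. This additivity property is what lets a local explanation split a single prediction into per-feature pushes. The interaction term's credit is shared between clock_ghz and number_of_power_domain rather than assigned to either one alone.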

The hardware design and verification process is largely manual, and substantial parts of it are often redundant. This is where artificial intelligence finds essential use cases. In this section, we use a machine-learning model trained on historical data to predict errors, and then use SHAP explanations to optimize the debugging process. Concepts from explainable AI can show verification engineers which factors lead to failures and errors.

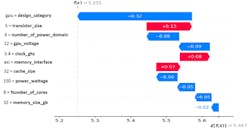

The simulated local explanation in Figure 1 shows both the magnitude and the direction of each input feature's impact on the model outcome. Features such as design_category, number_of_power_domain, and gpu_voltage have a large negative impact on the error prediction, while transistor_size has the largest positive impact. SHAP shows how combining the impacts of all features for a particular design leads to a prediction of no error.

In this application, SHAP local explanations show which individual factors, at which values, caused an error, helping engineers perform root-cause analysis in real time. SHAP global explanations are generated by aggregating the local explanations across all training data points; the output is shown in Figure 2. The simulated global explanations show that memory_interface, cache_size, and number_of_cores typically have the largest negative impact on errors, while factors such as design_category and clock_ghz have the largest positive impact.
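One common way to aggregate local explanations into a global view is to average the absolute SHAP value of each feature over the dataset, which is what SHAP's global bar plot displays. This sketch assumes a hypothetical linear error model with independent features; with the dataset mean as the baseline, the SHAP value of feature j for a design x then has the closed form w_j * (x_j - mean_j). The weights, data, and feature names are made up for illustration.

```python
def linear_shap(weights, X):
    """Local SHAP values for a linear model f(x) = b + sum_j w_j * x_j.

    Assuming independent features and the dataset mean as the baseline,
    the SHAP value of feature j for row x is w_j * (x_j - mean_j).
    """
    n_rows, n_feats = len(X), len(weights)
    means = [sum(row[j] for row in X) / n_rows for j in range(n_feats)]
    return [[weights[j] * (row[j] - means[j]) for j in range(n_feats)] for row in X]


def global_importance(local_values, feature_names):
    """Aggregate local explanations: mean |SHAP value| per feature, largest first."""
    n_rows = len(local_values)
    scores = {
        name: sum(abs(row[j]) for row in local_values) / n_rows
        for j, name in enumerate(feature_names)
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical model weights and training designs (columns match feature_names).
feature_names = ["cache_size", "clock_ghz", "number_of_cores"]
weights = [-0.02, 0.9, -0.1]
X = [[32, 2.0, 4], [64, 3.0, 8], [16, 3.5, 2], [32, 2.5, 8]]

local = linear_shap(weights, X)
ranking = global_importance(local, feature_names)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Mean absolute SHAP ranks features by how strongly they move predictions, not by direction. The direction of impact in a global view comes from how a feature's values relate to its SHAP values across designs, as in a beeswarm-style plot.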

Engineers can use these insights to optimize debugging by better understanding the factors that contribute to errors in a particular design. Once the machine-learning model and its SHAP explanations are deployed, verification engineers receive suggestions or warnings about potential errors before the debugging process even starts. This makes debugging more efficient and improves overall productivity and the utilization of the human resources planned for a project.
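A pre-debug warning could be as simple as thresholding the model's predicted error probability and attaching each flagged design's top SHAP contributors. This minimal sketch assumes a hypothetical logistic-regression error model, so that under a feature-independence assumption the SHAP values in log-odds space have the closed form w_j * (x_j - mean_j). The threshold, weights, means, and designs are illustrative only.

```python
import math


def error_warning(design, weights, bias, feature_means, threshold=0.5, top_k=2):
    """Flag a design before debugging starts if its predicted error probability
    reaches the threshold, and report the features pushing it toward "error".

    Hypothetical logistic model: p = sigmoid(bias + sum_j w_j * x_j).
    Contributions are linear SHAP values in log-odds space, w_j * (x_j - mean_j),
    assuming independent features and the training means as the baseline.
    """
    logit = bias + sum(w * design[name] for name, w in weights.items())
    prob = 1.0 / (1.0 + math.exp(-logit))
    if prob < threshold:
        return None
    contributions = {
        name: w * (design[name] - feature_means[name]) for name, w in weights.items()
    }
    # Largest positive contributions push the prediction toward "error".
    drivers = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    return {"error_probability": prob, "top_drivers": drivers}


WEIGHTS = {"clock_ghz": 1.2, "transistor_size": 0.3, "number_of_power_domain": -0.4}
BIAS = -4.0
MEANS = {"clock_ghz": 2.5, "transistor_size": 5.0, "number_of_power_domain": 3.0}

design_a = {"clock_ghz": 3.5, "transistor_size": 7.0, "number_of_power_domain": 2.0}
design_b = {"clock_ghz": 2.0, "transistor_size": 4.0, "number_of_power_domain": 4.0}
print(error_warning(design_a, WEIGHTS, BIAS, MEANS))  # warning with top drivers
print(error_warning(design_b, WEIGHTS, BIAS, MEANS))  # None
```

Here design_a is flagged with an error probability of about 0.82, driven mainly by clock_ghz and transistor_size, while design_b falls below the threshold and produces no warning.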

MIP Optimization for Low-Latency SoC Configurations

Hardware engineers strive to design systems-on-chip (SoCs) that operate at extremely low latency. Register-transfer-level (RTL) parameters (e.g., buffer size, cache size, cache placement policy, and cache replacement policy) are tweaked, and simulations are run to measure latency under different test scenarios. Finding optimal parameter configurations this way takes many iterations, and engineers may wait a long time before arriving at one.

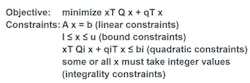

Mixed integer programming (MIP) is an optimization technique that can be applied to large, complex problems in which some or all of the decision variables must take integer values. It minimizes or maximizes an objective function subject to a set of defined constraints. This is an example of defining a MIP objective and constraints:
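As a hedged illustration (a hypothetical formulation, not a model taken from a real SoC flow), SoC parameter selection can be written as a 0-1 MIP. Assume each RTL parameter p in a set P has a finite set of candidate settings O_p, each setting contributes an additive latency ℓ under test scenario s and an area cost a, and total area must stay within a budget A_max. The binary variable x_{p,o} equals 1 when setting o is chosen for parameter p:

$$
\begin{aligned}
\min_{x}\quad & \sum_{p \in P} \sum_{o \in O_p} \ell^{(s)}_{p,o}\, x_{p,o} \\
\text{subject to}\quad & \sum_{o \in O_p} x_{p,o} = 1 && \forall p \in P \\
& \sum_{p \in P} \sum_{o \in O_p} a_{p,o}\, x_{p,o} \le A_{\max} \\
& x_{p,o} \in \{0, 1\} && \forall p \in P,\ o \in O_p
\end{aligned}
$$

The first constraint selects exactly one setting per parameter, and solving once per scenario s yields a scenario-specific configuration. Real latency rarely decomposes additively, so a practical model would likely need interaction terms (linearized with extra binary variables) or a surrogate fitted to simulation data.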

In SoC design, MIP can be used to find optimal values for parameters such as buffer size, cache size, cache placement policy, and cache replacement policy, with constraints placed on each parameter. Solving the MIP yields a parameter set that reduces latency. Different scenarios can also be defined in the MIP to identify a separate low-latency parameter set for each scenario.
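The sketch below shows per-scenario parameter selection on a toy 0-1 program: choose exactly one option per RTL parameter, respect an area budget, and minimize latency. Every option value, area cost, and latency figure is hypothetical, and additive latency is an assumption. Because the toy has only eight candidate configurations, it finds the exact optimum by exhaustive enumeration; a real flow would hand the same model to a MIP solver such as CBC, Gurobi, or SciPy's `milp`, since enumeration grows exponentially.

```python
from itertools import product

# Hypothetical candidate options per RTL parameter:
# (value, area_cost, {scenario: latency_contribution_ns}).
# Additive latency and area are simplifying assumptions for this toy model.
OPTIONS = {
    "buffer_size": [
        (16, 1.0, {"streaming": 9.0, "random_access": 6.0}),
        (64, 3.0, {"streaming": 5.0, "random_access": 5.5}),
    ],
    "cache_size_kb": [
        (256, 2.0, {"streaming": 8.0, "random_access": 9.0}),
        (1024, 5.0, {"streaming": 7.5, "random_access": 4.0}),
    ],
    "replacement_policy": [
        ("LRU", 1.5, {"streaming": 3.0, "random_access": 2.0}),
        ("random", 0.5, {"streaming": 2.5, "random_access": 3.0}),
    ],
}
AREA_BUDGET = 7.0


def solve_by_enumeration(options, scenario, area_budget):
    """Exact optimum of the toy 0-1 program: pick one option per parameter,
    respect the area budget, minimize total latency for the given scenario."""
    names = list(options)
    best = None
    # Each element of the product picks exactly one option per parameter
    # (the one-hot constraint), so only the area constraint is checked here.
    for choice in product(*(options[name] for name in names)):
        area = sum(opt[1] for opt in choice)
        if area > area_budget:  # area (resource) constraint
            continue
        latency = sum(opt[2][scenario] for opt in choice)  # objective
        if best is None or latency < best[0]:
            best = (latency, {name: opt[0] for name, opt in zip(names, choice)})
    return best  # None if no configuration fits the budget


for scenario in ("streaming", "random_access"):
    print(scenario, solve_by_enumeration(OPTIONS, scenario, AREA_BUDGET))
```

With these made-up numbers, the two scenarios pick different configurations: streaming favors buffer_size 64, a 256 KB cache, and random replacement (15.5 ns), while random_access favors buffer_size 16, a 1024 KB cache, and random replacement (13.0 ns).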

Effective parameter configurations can then be picked from the MIP's recommendations, saving much of the time that would otherwise be spent running unnecessary simulations. A test simulation at the SoC level typically takes a few days to run, whereas solving the MIP takes from minutes to a few hours.

Recommendation Engine for Automated Testing

Test development is another time-consuming process for verification engineers. Verifying a design requires running numerous tests, and engineers often write tests from scratch when a new design is developed. Identifying all of the tests needed to verify an entire design also takes considerable thought and time. Moreover, when a design change is made, verification engineers run the entire test suite to validate it, which is highly inefficient.

A recommendation system filters data to recommend the most relevant actions, based on patterns it detects in historical training data. There are many ways to build a recommendation system; here, we use clustering.

In design validation, designs and the tests run on them over many years are collected and clustered into groups of similar designs. When a new design is created, the system recommends the specific tests likely needed to validate it, based on its similarity to historical designs and their verification patterns. The system won't be effective at first, but through multiple iterations of human-in-the-loop (HIL) feedback, the recommendation engine learns over time (Fig. 3) and becomes able to recommend highly relevant tests, as shown in Figure 4.
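Here is a minimal sketch of a clustering-based test recommender with a human-in-the-loop feedback hook. It assumes each historical design is already encoded as a normalized numeric feature vector paired with the set of tests run on it; the features, test names, and the simple +1/-1 feedback rule are all hypothetical. Plain k-means groups the designs, a new design is matched to its nearest cluster, and tests are ranked by how often they were run in that cluster, adjusted by engineer feedback.

```python
import math
from collections import Counter


def kmeans(points, k, iters=20):
    """Plain k-means. Seeding with the first k points is a simplification;
    real tools typically use k-means++ seeding and several restarts."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each design to its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


class ClusterTestRecommender:
    """Hypothetical clustering-based test recommender with HIL feedback."""

    def __init__(self, designs, tests_run, k):
        self.centroids, self.labels = kmeans(designs, k)
        self.tests_run = tests_run  # tests_run[i] = set of tests run on designs[i]
        self.feedback = Counter()   # score adjustment per (cluster, test)

    def nearest_cluster(self, design):
        return min(range(len(self.centroids)),
                   key=lambda c: math.dist(design, self.centroids[c]))

    def recommend(self, design, top_n=3):
        cluster = self.nearest_cluster(design)
        # Count how often each test was run on historical designs in this cluster.
        counts = Counter()
        for label, tests in zip(self.labels, self.tests_run):
            if label == cluster:
                counts.update(tests)
        scores = {t: counts[t] + self.feedback[(cluster, t)] for t in counts}
        ranked = sorted(scores, key=lambda t: (-scores[t], t))  # ties broken by name
        return cluster, ranked[:top_n]

    def record_feedback(self, cluster, test, useful):
        """An engineer marks a recommended test as useful (+1) or not useful (-1)."""
        self.feedback[(cluster, test)] += 1 if useful else -1


# Hypothetical, already-normalized design features.
designs = [[0.1, 0.2], [0.15, 0.1], [0.9, 0.8], [0.85, 0.95], [0.2, 0.15]]
tests_run = [
    {"cache_coherency", "power_gating"},
    {"cache_coherency", "reset_sequence"},
    {"gpu_shader", "dvfs"},
    {"gpu_shader", "dvfs", "power_gating"},
    {"cache_coherency", "power_gating"},
]
rec = ClusterTestRecommender(designs, tests_run, k=2)
cluster, tests = rec.recommend([0.12, 0.18])
print(tests)  # ['cache_coherency', 'power_gating', 'reset_sequence']

# An engineer rejects power_gating twice for this cluster, so its score drops.
rec.record_feedback(cluster, "power_gating", useful=False)
rec.record_feedback(cluster, "power_gating", useful=False)
print(rec.recommend([0.12, 0.18], top_n=2)[1])  # ['cache_coherency', 'reset_sequence']
```

Feedback is stored per (cluster, test) pair, so rejecting a test for one family of designs leaves its ranking unchanged for dissimilar designs in other clusters.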

The recommendation system is a disruptive machine-learning application that significantly reduces redundant effort. Verification engineers receive recommendations for tests with the same intent as tests written in the past. Selecting only the tests that efficiently verify a design or a design change makes the verification process more targeted.

Conclusion

This article only scratches the surface of the applications and benefits of AI/ML in VLSI. AI has immense untapped potential to revolutionize this field, much of it still waiting to be discovered and developed. ML can substantially reduce major roadblocks that limit scalability, many of which are painfully manual and time-consuming. In the future, most of these tasks may be automated with ML, although more research is needed, and industry leaders must weigh the risks and costs of such a major change.


About the Author

Krishna Kanth

Hardware Engineer, Intel Corp

Krishnakanth, a hardware engineer at Intel, works on verifying RTL designs. He graduated from San Jose State University with a Master's in Electrical Engineering. His fields of interest are logic design, computer architecture, and design verification.