The Data Distribution Service (DDS) standard has been around for more than 15 years as a solution for connecting real-time distributed applications. While DDS first gained widespread acceptance in aerospace and defense, it has now emerged as a standout technology for the industrial Internet of Things (IIoT). As with any technology entering a “new” market, opinions and misconceptions tend to arise. Let’s take a look at some of the myths that have formed since DDS came into play for IIoT applications.

1. DDS is just another IoT connectivity transport.

Several IoT connectivity solutions are available today, and DDS tends to get mixed into the collection of solutions for IoT. Each of these solutions has specific capabilities that must be considered when looking for the correct infrastructure for a specific application.

One popular IoT connectivity solution used today is Message Queuing Telemetry Transport (MQTT). This solution is well-suited to connecting many remote devices to a single server, delivering status at a relatively low rate (< 10 Hz is typical). Other IoT connectivity solutions, such as Advanced Message Queuing Protocol (AMQP) and Constrained Application Protocol (CoAP), provide mechanisms for moving data between distributed applications, where some are resource-constrained and others are not.

However, none of these solutions provides a mechanism to specify the behavior of the data being delivered with extensive Quality of Service (QoS) control. Nor are any of them data-centric: they have no knowledge of the format or type of information being shared.

DDS is the only infrastructure that is data-centric and also provides extensive QoS. Data centricity enables applications to share just the data being asked for, and enables the middleware to provide efficient filtering based on data ranges or thresholds. The other IoT protocols require this filtering to be done within your application code.

The rich set of QoS behavioral settings enables DDS to be used in applications that require reliable real-time data delivery even when a reliable transport isn’t available, such as over lossy and intermittent RF and satellite-communications links. Since DDS is peer-to-peer, DDS-based systems are inherently massively parallel. The elimination of a central server/broker means no single point of failure or attack, and no choke point from a performance perspective. This makes DDS-based systems extremely well-suited for edge-autonomy applications, where low latency, high reliability, and massive scalability are paramount. Yes, DDS can provide the functionality of all the other IoT solutions, but it can also deliver so much more.

2. DDS provides only a publish/subscribe communication design pattern.

The primary mode of communication within DDS is publish/subscribe. In real-time distributed applications, publish/subscribe is the most efficient communication design pattern to use. It enables delivery of data to multiple destinations without having to add a lot of code to your applications to track all of these destinations. It decouples applications in time and location.

However, there are times when your application either wants to invoke a service or send a command for which you’re expecting a response. For this design pattern, DDS also supports a request/reply mechanism. What’s great about this mechanism is that because it uses DDS topics underneath, your requesting application can issue a request to multiple service nodes or issue a command to multiple destinations and still be able to track their responses individually. Thus, you end up with the efficiency of one-to-many delivery along with the correlation of the independent responses.

Let’s take the one-to-many command use case as an example: Using DDS request/reply, my application can issue a mode change command to multiple controlling applications with a single request. Then it can ensure that each control application has applied that mode change command by independently tracking their responses.
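The one-to-many command case above can be sketched in a few lines of plain Python. This is not a DDS API: the `Request`, `Reply`, and `Requester` classes and the controller names are hypothetical, and the sketch only illustrates the idea of tagging a single request with a correlation ID and tracking each responder's reply independently.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Request:
    correlation_id: int
    command: str


@dataclass
class Reply:
    correlation_id: int
    responder: str
    accepted: bool


class Requester:
    """Hypothetical helper: tracks which responders still owe a reply."""

    def __init__(self, expected_responders):
        self._ids = itertools.count(1)
        self._expected = set(expected_responders)
        self._pending = {}  # correlation_id -> responders not yet confirmed

    def send(self, command):
        # One request goes out to every responder (one-to-many delivery).
        request = Request(next(self._ids), command)
        self._pending[request.correlation_id] = set(self._expected)
        return request

    def on_reply(self, reply):
        waiting = self._pending.get(reply.correlation_id)
        if waiting is not None and reply.accepted:
            waiting.discard(reply.responder)

    def outstanding(self, request):
        return sorted(self._pending[request.correlation_id])


requester = Requester(["pump_ctrl", "valve_ctrl", "fan_ctrl"])
req = requester.send("MODE=STANDBY")
for name in ("pump_ctrl", "fan_ctrl"):
    requester.on_reply(Reply(req.correlation_id, name, accepted=True))
print(requester.outstanding(req))  # ['valve_ctrl']
```

Here a single mode-change request is issued, two controllers confirm it, and the requester can see that one controller has not yet applied the change.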

3. DDS is not secure.

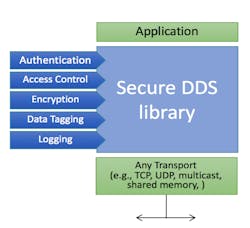

DDS uses a wire-level protocol called RTPS that runs over any transport. For years, DDS has supported running over secure transports such as Transport Layer Security (TLS) and Datagram Transport Layer Security (DTLS). These secure transports provide a minimal set of security options. In August of 2016, the Object Management Group (OMG) released the DDS Security specification. This specification provides a framework for securing systems at the data/topic level through the use of:

- Authentication

- Access control

- Encryption/decryption

- Data tagging

- Security event logging

The standard deliberately doesn’t select a single solution for things like authentication and access control. Instead, these security features are implemented through a plugin architecture. We all know that what works well for secure communications today will evolve and change over time as new security threats emerge. The plugin architecture enables DDS applications to evolve their security over time, taking advantage of the latest solutions to make sure their systems are secure from outsider/insider threats (Fig. 1).

Fig. 1. The implementation of these security features is done through the use of a plugin architecture.
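To illustrate why a plugin architecture helps security evolve, here is a minimal sketch in plain Python. It does not reproduce the interfaces defined by the DDS Security specification; `AccessControlPlugin`, `AllowListAccessControl`, `SecureBus`, and the topic names are all hypothetical. The point is only that the publishing path depends on an abstract plugin, so the concrete access-control mechanism can be swapped later without touching application code.

```python
from abc import ABC, abstractmethod


class AccessControlPlugin(ABC):
    """Hypothetical plugin interface, not the DDS Security SPI."""

    @abstractmethod
    def may_publish(self, participant, topic):
        ...


class AllowListAccessControl(AccessControlPlugin):
    """One possible implementation: a static allow list per participant."""

    def __init__(self, rules):
        self._rules = rules  # participant name -> set of permitted topics

    def may_publish(self, participant, topic):
        return topic in self._rules.get(participant, set())


class SecureBus:
    """Toy stand-in for the middleware; it only consults the plugin."""

    def __init__(self, access_control):
        self._access_control = access_control
        self.delivered = []

    def publish(self, participant, topic, data):
        if not self._access_control.may_publish(participant, topic):
            return False  # rejected at the topic level
        self.delivered.append((topic, data))
        return True


bus = SecureBus(AllowListAccessControl({"pump_ctrl": {"PumpStatus"}}))
print(bus.publish("pump_ctrl", "PumpStatus", {"rpm": 1200}))     # True
print(bus.publish("pump_ctrl", "ValveCommand", {"open": True}))  # False
```

Replacing `AllowListAccessControl` with a different subclass changes the policy mechanism while `SecureBus` and its callers stay the same.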

4. DDS is too complicated for my system.

A quick look into the DDS standard (managed by the OMG, https://www.omg.org/spec/DDS/) will show that getting started with DDS is actually quite simple. Where a developer could get overwhelmed is in first investigating the QoS policies that DDS provides. There are approximately 23 high-level QoS policies, and inside each of these policies there can be a number of individual settings.

Yes, this does sound like a lot; however, any one particular use case (sensor data, or alarm/event data, or streaming video data) would only use a few of these QoS policies. The most important thing to remember here is to break down the behavioral requirements of each of your application use cases and then apply the correct QoS policies to achieve the desired behavior. Luckily, many resources and examples are available for each of these use cases from DDS vendors. In fact, some vendors have built QoS profiles directly into their product for the most commonly applied use cases. From there, it’s really just a matter of applying a few tweaks to different parameters to get them set correctly for the desired operation based on the deployed environment.

It’s important to point out that not all of the data in your system is the same; you will require different QoS settings for different types of data flows. Periodic streaming data, like that from a sensor, is very different from asynchronous data, like an alarm or event. And sometimes you have small data, such as a command, interleaved with large data, like an image or a file. DDS is the only IoT solution that provides you with the capability to tune each use case independently within the communications framework itself.

This is important: If such a capability isn’t built into the communications solution, then the developers of your application will have to create and maintain this functionality as custom code. The rich QoS provided by DDS is what enables it to work in all different kinds of applications, such as telemetry, real-time control, large-scale SCADA systems, and many more. DDS is a single solution that can be used across all of your data-communication requirements, and thus negates the need to employ multiple IoT solutions in your application.
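To make the idea of per-flow tuning more concrete, the sketch below models two data flows with different settings in plain Python. It is an illustration only, not a vendor API: `QosProfile`, `PROFILES`, and `make_history` are hypothetical names, and the values simply mirror the reliability, history, and durability policies that DDS defines. A bounded `deque` stands in for a keep-last history, and an unbounded one for keep-all.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class QosProfile:
    reliability: str               # "BEST_EFFORT" or "RELIABLE"
    history_depth: Optional[int]   # N models keep-last N; None models keep-all
    durability: str                # "VOLATILE" or "TRANSIENT_LOCAL"


# Hypothetical profiles for two very different data flows.
PROFILES = {
    "sensor_stream": QosProfile("BEST_EFFORT", 1, "VOLATILE"),
    "alarm_event": QosProfile("RELIABLE", None, "TRANSIENT_LOCAL"),
}


def make_history(profile):
    # deque(maxlen=None) is unbounded; deque(maxlen=N) keeps the last N items.
    return deque(maxlen=profile.history_depth)


sensor_cache = make_history(PROFILES["sensor_stream"])
alarm_cache = make_history(PROFILES["alarm_event"])

for value in (20.1, 20.4, 20.9):
    sensor_cache.append(value)   # only the freshest reading matters
for alarm in ("OVERTEMP", "LOW_PRESSURE"):
    alarm_cache.append(alarm)    # every alarm must be retained

print(list(sensor_cache))  # [20.9]
print(list(alarm_cache))   # ['OVERTEMP', 'LOW_PRESSURE']
```

The sensor flow keeps only its latest sample, while the alarm flow retains every event, which is the kind of difference you would otherwise have to hand-code in the application.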

5. DDS is too heavyweight to be used in embedded systems.

The IIoT requires connectivity solutions that run on embedded systems. Otherwise, you end up building gateways everywhere just to be able to communicate with your entire array of devices. As system-on-chip (SoC) devices continue to evolve, so does the amount of RAM available on the device. Someday soon, it will be commonplace to have >1 GB of RAM on a simple embedded device. However, in the meantime, we still need to be able to address devices with <256 kB of RAM.

Several DDS implementations do support these levels of memory resources. The key here is the part of the DDS standard that supports interoperability. The Interoperability specification for DDS details an on-the-wire protocol called RTPS (Real-Time Publish-Subscribe). By adhering to this protocol, smaller implementations of DDS can easily communicate with larger, more fully featured versions of DDS. Please check with your DDS vendor(s) to see what their RAM requirements are and if they have a smaller version that can support your device. Going forward, the OMG is also working on a new standard for eXtremely Resource Constrained Environments (XRCE) that enables support for communications with very small devices that have much less than 256 kB of RAM.

6. DDS is inefficient because it publishes data everywhere.

The publish/subscribe design pattern describes the availability of data everywhere within your network. The workflow within DDS is that, as a developer or software architect, you define individual data flows that are called topics. Through the discovery mechanism built into DDS, each application learns about the existence of topics within its selected DDS domain.

Yes, data is available everywhere. However, each application selects which topics it will receive. There’s no notion of broadcasting data within DDS. Applications only discover other applications within the same domain, allowing you to isolate discovery by using multiple domains. And when a topic is subscribed to, the subscribing application can apply a content-based filter to select the specific data within that topic it receives.

Some implementations of DDS go a step further by sending data using multicast and providing writer-side filtering. Therefore, any data that a subscriber doesn’t want is filtered out at the source or by the network switch. The net result is that your applications have access to any data, but through the use of domains, topics, multicast, and filters, the amount of data is efficiently scaled across your network so that the network is only pushing the data that is needed.
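The effect of writer-side, content-based filtering can be sketched in plain Python. This is a simulation under stated assumptions rather than any DDS API: `Sample`, `Subscription`, `FilteringWriter`, and the 80-degree threshold are hypothetical. Each subscription carries its own filter, and the writer evaluates it before "sending", so unwanted samples never leave the source.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Sample:
    sensor_id: str
    temperature: float


@dataclass
class Subscription:
    name: str
    accepts: Callable[[Sample], bool]   # the subscriber's content filter
    received: List[Sample] = field(default_factory=list)


class FilteringWriter:
    """Hypothetical writer that applies each subscriber's filter at the source."""

    def __init__(self):
        self.subscriptions = []
        self.sent_count = 0   # samples that actually crossed the "network"

    def attach(self, subscription):
        self.subscriptions.append(subscription)

    def write(self, sample):
        for sub in self.subscriptions:
            if sub.accepts(sample):
                sub.received.append(sample)
                self.sent_count += 1


writer = FilteringWriter()
overheat = Subscription("overheat_monitor", lambda s: s.temperature > 80.0)
logger = Subscription("logger", lambda s: True)
writer.attach(overheat)
writer.attach(logger)

for temperature in (25.0, 85.5, 79.9):
    writer.write(Sample("boiler_1", temperature))

print(len(overheat.received), len(logger.received), writer.sent_count)  # 1 3 4
```

Without the filter, three samples to two subscribers would mean six deliveries; with it, only four are made.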

7. DDS isn’t widely used in critical infrastructure.

Released as a standard by the OMG in 2003, DDS was quickly adopted by the aerospace and defense industry. During this time, implementations of DDS became fully featured and battle-tested. With the advent of the IIoT, DDS has now taken off in the commercial industrial world, too. Here’s a sampling of the types of applications using DDS:

- Healthcare patient monitoring

- Surgical robots

- Autonomous cars

- Hyperloop

- Wind-power generation systems

- Air traffic control

- Mass-transit systems

- Medical imaging

- Space launch systems

- Power-plant energy generation

- Train transportation

- Mining vehicles

- Oil & gas drilling

- Robotics

- Smart-grid power distribution

8. DDS does not interface with web services.

In today’s world, a system must have some way of interfacing with web services. Web services have become a commonplace way for business systems to extract and present information about running industrial systems so that more timely decisions can be made about operation. To address this requirement, the OMG created and adopted a specification for web-enabled DDS. Through this interface, a web application can be built that directly interacts with a running DDS system using a gateway. The gateway provides the ability to create participants, topics, writers, and readers such that any web application can basically act just like a live DDS application. Please check with your DDS vendor to see if it has implemented the web-enabled DDS standard.

9. DDS doesn’t scale outside of the local-area network.

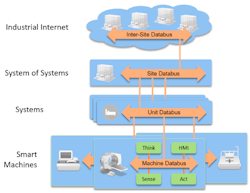

Most developers who look at DDS can quickly see that it’s really well-suited for real-time device-to-device communications within an autonomous car, a microgrid, a ship, a surgical robot, etc. However, after investigating all of the use cases for DDS, they then realize that it’s actually the layered databus approach where DDS also shines (Fig. 2).

Fig. 2. The layered databus architecture enables data to be shared across multiple devices, multiple networks, and from the edge to the fog to the cloud.

A databus instantiation within the edge control application uses DDS QoS and filtering to make local data communication as efficient as possible. Using a gateway between smart machines and higher-level systems enables scaling of the overall data delivery. These gateways, as defined by the Industrial Internet Consortium’s (IIC) Reference Architecture document, provide a configurable connectivity path that lets high-volume, real-time data stay localized, while the broader business status and command data is shared over the wide-area network (WAN).

The key to these gateways is twofold: First, and foremost, the integrity of the real-time system must not be compromised by outside influence that could delay critical data delivery. Second, the volume of data in the real-time control part of the application could easily overwhelm the WAN connection to the backend business servers. Therefore, it must be filtered and subsampled. The net result is that DDS is certainly appropriate to use for scaling data to and from the WAN. In fact, the QoS and content-awareness of DDS uniquely endow it with the intelligence to ensure that both sides work as they’re designed to do and thus achieve their intended value.
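The subsampling step a gateway performs can be sketched as a simple time-based filter in plain Python. The function name `subsample`, the 1-kHz local rate, and the 100-ms minimum separation are all assumptions chosen for illustration; a real gateway would be configured through the vendor's own tooling.

```python
def subsample(samples, min_separation_ms):
    """Forward a sample only if enough time has passed since the last one.

    `samples` is an iterable of (timestamp_ms, value) pairs in time order.
    """
    last_forwarded = None
    for timestamp_ms, value in samples:
        if last_forwarded is None or timestamp_ms - last_forwarded >= min_separation_ms:
            last_forwarded = timestamp_ms
            yield timestamp_ms, value


# Assumed local control loop: one sample per millisecond for one second.
local_stream = ((t, t * 0.5) for t in range(1000))

# Assumed WAN budget: at most one sample every 100 ms.
forwarded = list(subsample(local_stream, min_separation_ms=100))

print(len(forwarded))   # 10
print(forwarded[:3])    # [(0, 0.0), (100, 50.0), (200, 100.0)]
```

The high-rate data stays on the local databus, and only a tenth of a percent of the samples, in this example 10 out of 1,000, cross the WAN link.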

10. DDS won’t run on my platform.

DDS implementations are available for over 100 different platforms. A “platform” is defined as a combination of CPU architecture, operating system, and compiler. DDS transparently mediates between different platforms, relieving your application code of having to handle differences in CPU widths, endianness, and programming language.
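As a rough illustration of what "mediating endianness" means, the plain-Python sketch below tags each payload with the sender's byte order so that the receiver can decode it correctly regardless of its own CPU. This is not the actual RTPS wire format; the one-byte flag and the `encode`/`decode` helpers are hypothetical and exist only to show the general technique.

```python
import struct


def encode(value, little_endian):
    # Hypothetical framing: 1 flag byte, then a 32-bit signed integer.
    flag = b"\x01" if little_endian else b"\x00"
    fmt = "<i" if little_endian else ">i"
    return flag + struct.pack(fmt, value)


def decode(payload):
    # The receiver reads the flag and picks the matching byte order.
    fmt = "<i" if payload[0] == 1 else ">i"
    return struct.unpack(fmt, payload[1:])[0]


from_little_endian_cpu = encode(1025, little_endian=True)
from_big_endian_cpu = encode(1025, little_endian=False)

print(from_little_endian_cpu != from_big_endian_cpu)                     # True
print(decode(from_little_endian_cpu), decode(from_big_endian_cpu))       # 1025 1025
```

The bytes on the wire differ, but both receivers recover the same value, and the application code never has to deal with the difference.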

Formal language bindings exist for Java and C/C++ (including modern C++). And vendor-specific implementations include the ability to access DDS networks from modern scripting languages like JavaScript, Lua, and Python, plus non-traditional programming environments like LabVIEW and Simulink. DDS is really an interoperability standard that allows any software, written in any language, on any operating system, running on any CPU, over any network, to communicate seamlessly.

11. DDS systems aren’t evolvable.

The last myth that we will take a look at here relates to how a system evolves over time. As mentioned above, the standard workflow within DDS is to define your data topics that will be used to communicate between your applications. These topics are strongly typed, which provides several key attributes, such as application communication integrity, bandwidth usage efficiency, data availability discovery, and filtering efficiency. So, if you strongly define your data topics, then how can you evolve their datatype in future releases?

To address this need, the OMG added a standard called Extensible and Dynamic Topic Types (X-Types) for DDS. This standard not only enables a developer to add/change/delete individual data fields within a datatype, it also provides a mechanism to define some fields as optional fields that don’t require inclusion with each data publication. The standard enables older applications to interoperate with newer applications that use the updated data type. This forward and backward compatibility provides exactly the infrastructure needed to incrementally evolve your system while keeping it as efficient as possible. Please check with your DDS vendor to see if Type Extensibility is provided.
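The forward and backward compatibility described above can be sketched with two versions of a datatype in plain Python. This only mimics the behavior, not the X-Types encoding or any DDS API: `VehicleStatusV1`, `VehicleStatusV2`, `read_as`, and the dictionary used as a stand-in for a sample on the wire are all hypothetical.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class VehicleStatusV1:
    vehicle_id: str
    speed_kph: float


@dataclass
class VehicleStatusV2:
    vehicle_id: str
    speed_kph: float
    battery_pct: Optional[float] = None   # optional field added in a later release


def read_as(cls, wire_sample):
    """Build `cls` from a sample, ignoring fields that this version doesn't know."""
    known = {f.name for f in fields(cls)}
    return cls(**{name: value for name, value in wire_sample.items() if name in known})


sample_from_new_app = {"vehicle_id": "truck_7", "speed_kph": 42.0, "battery_pct": 88.0}
sample_from_old_app = {"vehicle_id": "truck_7", "speed_kph": 42.0}

# An older reader drops the field it doesn't understand.
print(read_as(VehicleStatusV1, sample_from_new_app))
# A newer reader tolerates the missing optional field.
print(read_as(VehicleStatusV2, sample_from_old_app).battery_pct)  # None
```

Both directions work: the old application keeps running against new publishers, and the new application accepts data from publishers that haven't been upgraded yet.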

As you can see, many “myths” about DDS have arisen over the past few years as IoT developers become acquainted with the standard. In the end, you must choose the correct connectivity solution based on the requirements of your application. DDS is a very capable infrastructure and can be leveraged to help solve some of the hardest real-time communications problems that exist today. Don’t let the “myths” about DDS prevent you from including it in your next distributed application.

Bert Farabaugh is Global Field Applications Engineering Manager at Real-Time Innovations (RTI).

About the Author

Bert Farabaugh is the Global Field Applications Engineering Manager at RTI. He works with customers to identify and develop solutions and design patterns tailored for their projects. Bert has over 16 years of experience developing networking protocols and communications design patterns from scratch for robotics and embedded systems. He has been a Field Applications Engineer for the past 10 years, with hundreds of different applications in his portfolio.